We’re excited to share a host of product updates that make fine-tuning and serving SLMs in production on Predibase easier, more reliable, and more cost-effective than ever. Read on to learn more about:

- New model support: Solar Pro Preview, Gemma 2, Qwen2, and Phi-3.5

- Turbo LoRA: 2-3x faster inference

- Synthetic data generation

- Deployment health analytics

- Resume training from checkpoint

- Prompt LLMs during training

- Support for 1 GB+ datasets

- Prompt prefix caching

- Update live deployments

- Integrate with Comet’s Opik

Solar Pro Preview is now available for fine-tuning and serving in Predibase!

We’re thrilled to announce the general availability of Solar Pro Preview Instruct, Upstage's latest and most powerful small language model (SLM) that runs on a single GPU! 🌟

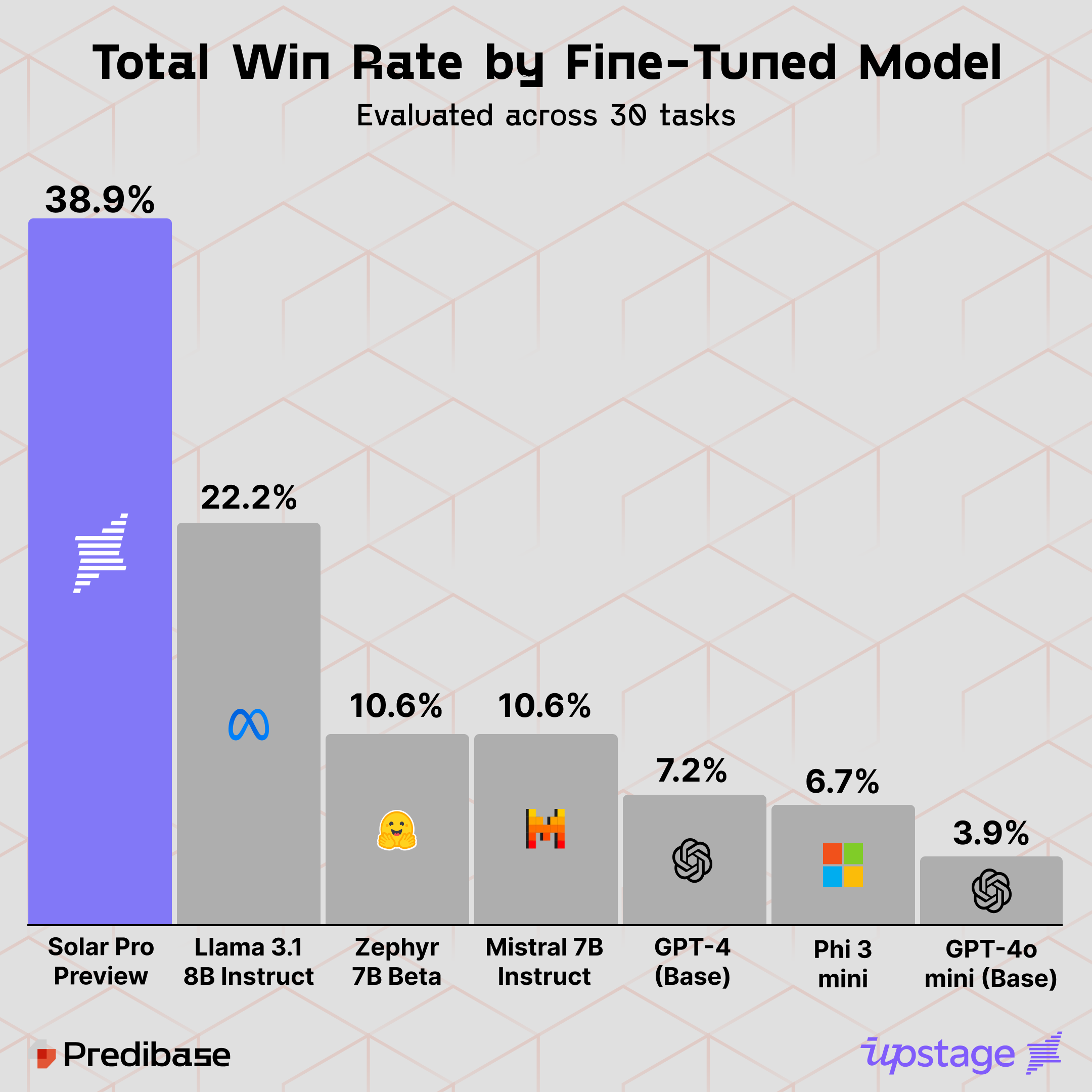

Fine-tuned Solar Pro Preview outperformed all other open-source models, including the previous leader Llama-3.1-8B, topping 38.9% of tasks across 30 benchmarks on our updated Fine-Tuning Leaderboard.

Check out the updated Fine-Tuning Leaderboard for a detailed breakdown of Solar Pro Preview’s performance against other fine-tuned and commercial models.

We’ve introduced a number of models recently

See the full list here. Recently added models include:

- Gemma 2 9B

- Gemma 2 9B Instruct

- Gemma 2 27B

- Gemma 2 27B Instruct

- Qwen2 1.5B Instruct

- Qwen2 7B

- Qwen2 7B Instruct

- Qwen2 72B

- Phi-3.5-mini-instruct

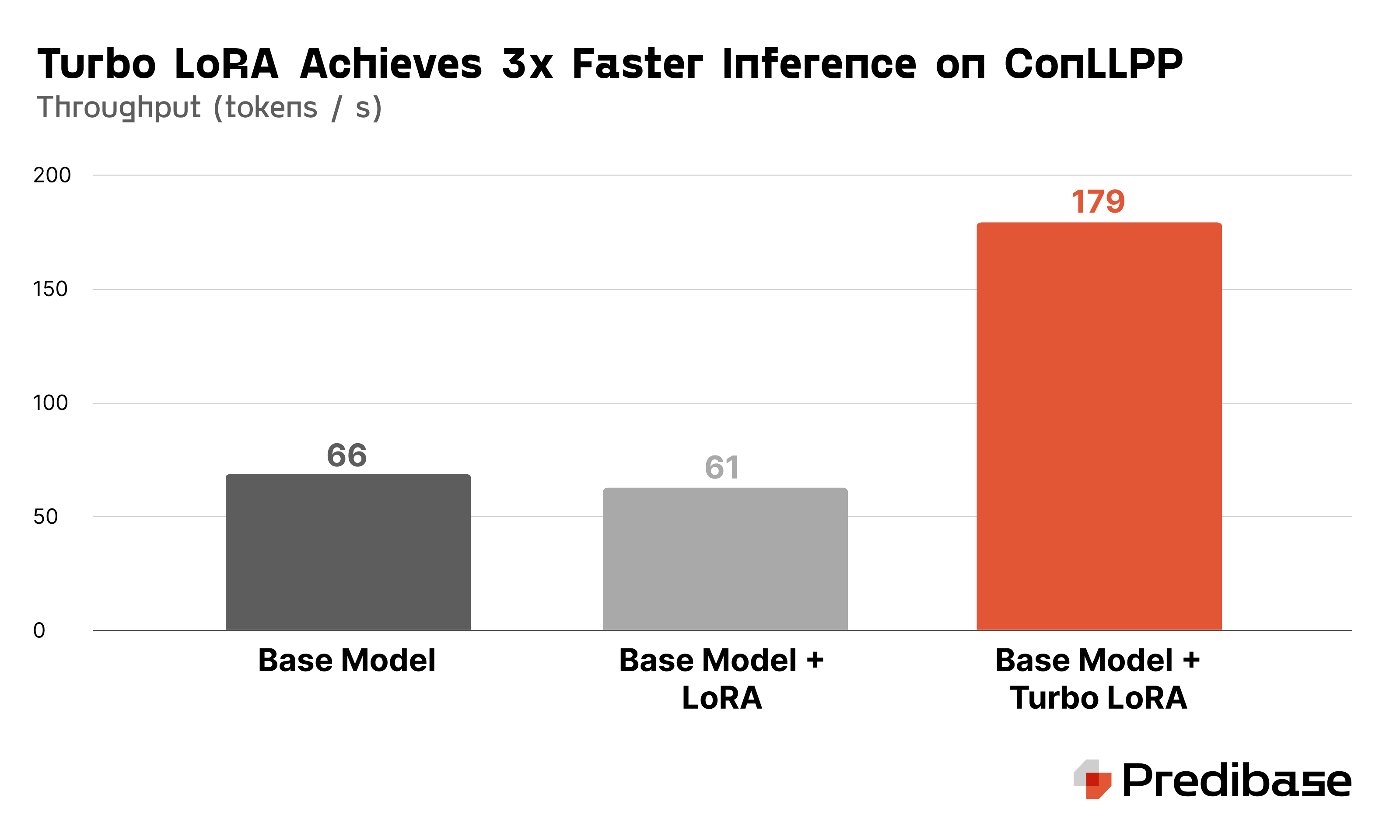

Get 2-3x faster fine-tuned LLM inference with Turbo LoRA

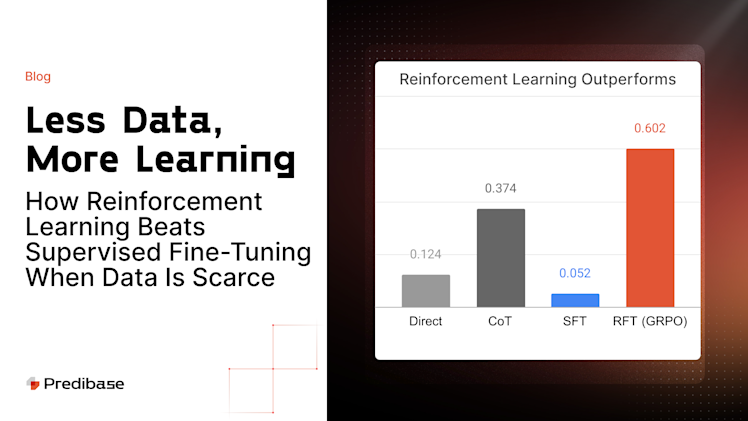

By combining LoRA with speculative decoding, we’ve developed a method that improves model accuracy and speeds up inference by 2-3x. Unlike LoRA or speculative decoding on its own, Turbo LoRA doesn't force a tradeoff between speed and quality: it reduces cost and latency while delivering top-tier task-specific performance.

Learn more in our webinar on 9/24.

Average throughput (tokens/s) for base model prompting, base model with LoRA, and base model with Turbo LoRA.
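This post doesn't describe how Turbo LoRA is implemented. For readers new to the speculative decoding half of the idea, here is a minimal, generic sketch of greedy speculative decoding in Python: a cheap draft model proposes `k` tokens, and the target model keeps the longest prefix it agrees with plus one token of its own. It is not Predibase's Turbo LoRA code. The `target_model` and `draft_model` functions are hypothetical toy stand-ins for real models, and the sketch leaves out the LoRA adapter and how the speculator is trained.

```python
from typing import Callable, List, Tuple

# A "model" here is just a greedy next-token function over a token sequence.
NextToken = Callable[[List[int]], int]


def speculative_decode(
    target: NextToken,
    draft: NextToken,
    prompt: List[int],
    max_new_tokens: int,
    k: int = 4,
) -> Tuple[List[int], int]:
    """Greedy speculative decoding; returns (tokens, number of verification steps)."""
    tokens = list(prompt)
    verify_steps = 0
    while len(tokens) - len(prompt) < max_new_tokens:
        # 1. The cheap draft model proposes k tokens autoregressively.
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(tokens + proposal))
        # 2. The target checks the proposal. A real server scores all k
        #    positions in one forward pass; this sketch loops but counts one step.
        accepted: List[int] = []
        for guess in proposal:
            expected = target(tokens + accepted)
            accepted.append(expected)  # always keep the target's own choice
            if expected != guess:
                break  # first disagreement: discard the rest of the draft
        else:
            # Every draft token matched, so the target's next token comes for free.
            accepted.append(target(tokens + accepted))
        tokens.extend(accepted)
        verify_steps += 1
    return tokens[: len(prompt) + max_new_tokens], verify_steps


def greedy_decode(target: NextToken, prompt: List[int], max_new_tokens: int) -> List[int]:
    """Baseline: one target call per generated token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(target(tokens))
    return tokens


def target_model(seq: List[int]) -> int:
    # Hypothetical deterministic "target model".
    return (seq[-1] * 3 + 1) % 50


def draft_model(seq: List[int]) -> int:
    # Hypothetical cheap drafter: agrees with the target except after multiples of 5.
    return target_model(seq) if seq[-1] % 5 else (seq[-1] + 7) % 50


out, steps = speculative_decode(target_model, draft_model, [1], max_new_tokens=20)
assert out == greedy_decode(target_model, [1], 20)
```

Because every kept token is one the target would have produced greedily anyway, the output matches plain greedy decoding. The speedup in a real system comes from verifying all `k` draft tokens in a single target forward pass, which this sketch only models by counting one `verify_steps` increment per round.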

Beat GPT-4o by generating synthetic data from as few as 10 rows of seed data

While fine-tuned SLMs can reliably outperform commercial LLMs, one of the biggest challenges for organizations is acquiring enough data to effectively train these smaller models. Our new synthetic data generation workflow solves this problem. With as few as 10 rows of seed data, you can now train an SLM that outperforms GPT-4o at a fraction of the cost.

Watch our synthetic data webinar.

Maximize deployment efficiency with detailed health analytics

Our Deployment Health Analytics are a must-have tool for optimizing the performance of your LLM deployments. Get real-time insights into key metrics such as request volume, throughput, queue duration, number of GPU replicas, and GPU utilization, so you can make smarter decisions that balance performance and cost. You can also customize GPU scaling thresholds to meet your specific needs, keeping operations smooth and efficient as workloads change.

Resume training from any checkpoint

You can now continue training on any fine-tuned adapter, which helps you save time and money. Whether you want to further improve performance on the same dataset or adapt your model to new data, you no longer have to start from scratch. Learn more in our docs.

Prompt LLMs during training

You can now prompt intermediate adapter checkpoints during training, so you can interact with your adapter before training finishes. Running inference mid-training helps you decide whether to stop early and adjust your setup sooner.

Introducing support for large datasets

We’re excited to announce that Predibase now fully supports fine-tuning large language models (LLMs) with datasets over 1 GB! Large datasets require special preparation to ensure smooth processing, and we’ve made it easier than ever.

For optimal performance, large datasets need to be tokenized and saved in partitioned Arrow format, compatible with Hugging Face’s Datasets library. Once prepped, connect your data to Predibase, and you’re ready to fine-tune your model with ease.

This update ensures you can work with massive datasets efficiently, giving you more flexibility to build high-performance models at scale. Learn more in our docs.

Speed up inference with Prompt Prefix Caching

Prompt Prefix Caching speeds up inference, especially when you're working with long documents, contexts, or multi-turn chat conversations. When it is enabled on a private serverless deployment, the deployment stores previously computed parts of prompts (called "prefixes") so they can be reused by later prompts that begin the same way. This reduces the time it takes the model to produce the first token of its response, making interactions faster and more efficient, particularly for tasks like retrieval-augmented generation (RAG) or extended chats. Learn more in our docs.
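The caching internals aren't described here. As a rough illustration of the idea only, the following stdlib Python sketch caches fixed-size blocks of prompt tokens, keyed by the full prefix that ends at each block (open-source servers commonly use a similar block scheme but hash the prefix instead), and reports how many tokens still have to be computed. The `PrefixCache` class and block size are hypothetical, and a string stands in for the attention key/value tensors a real server would keep.

```python
from typing import Dict, List, Tuple


class PrefixCache:
    """Toy prefix cache: remembers per-block "KV state" for token prefixes seen before."""

    def __init__(self, block_size: int = 16) -> None:
        self.block_size = block_size
        # Key: the full token prefix ending at a block boundary.
        # Value: placeholder for that block's attention key/value tensors.
        self._blocks: Dict[Tuple[int, ...], str] = {}

    def _block_keys(self, tokens: List[int]) -> List[Tuple[int, ...]]:
        # Keying on the whole prefix (not just the block's own tokens) means
        # identical text that follows a different prefix is never reused by mistake.
        full_blocks = len(tokens) // self.block_size
        return [tuple(tokens[: (i + 1) * self.block_size]) for i in range(full_blocks)]

    def prefill(self, tokens: List[int]) -> int:
        """Process a prompt; return how many tokens had to be computed from scratch."""
        keys = self._block_keys(tokens)
        hits = 0
        while hits < len(keys) and keys[hits] in self._blocks:
            hits += 1  # reuse the longest run of cached leading blocks
        for key in keys[hits:]:
            self._blocks[key] = f"kv[{len(key) - self.block_size}:{len(key)}]"
        return len(tokens) - hits * self.block_size


cache = PrefixCache(block_size=4)
shared = list(range(100, 112))  # e.g. a 12-token system prompt or document
print(cache.prefill(shared + [1, 2, 3]))  # cold cache: all 15 tokens computed
print(cache.prefill(shared + [7, 8, 9, 10, 11]))  # only the 5 new tokens computed
```

A follow-up request that shares a long system prompt or document only pays for its new suffix, which is where the lower time to first token comes from. Caching whole blocks keeps lookups simple, at the cost of never reusing a partial trailing block.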

Update private deployments while they’re live

If you need to change your private serverless deployment, you can now update common settings such as min/max replicas and the scale-up threshold via the SDK or UI. Updates cause no downtime: the existing deployment continues to serve requests while the new configuration is applied. Learn more in our docs.
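The post doesn't define exactly how these settings interact. Purely as an illustration, the following hypothetical Python sketch assumes the scale-up threshold means the number of queued requests one replica should absorb before another is added, and clamps the resulting replica count to the min/max bounds. The `ScalingConfig` fields and the scaling rule are assumptions, not Predibase's autoscaler or SDK.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ScalingConfig:
    # Hypothetical fields mirroring the settings named in the post.
    min_replicas: int = 0
    max_replicas: int = 4
    scale_up_threshold: int = 10  # assumed: queued requests per replica before adding another


def desired_replicas(cfg: ScalingConfig, queued_requests: int) -> int:
    """Replica count for the current queue depth, clamped to [min_replicas, max_replicas]."""
    needed = math.ceil(queued_requests / cfg.scale_up_threshold) if queued_requests > 0 else 0
    return max(cfg.min_replicas, min(cfg.max_replicas, needed))


cfg = ScalingConfig(min_replicas=1, max_replicas=4, scale_up_threshold=10)
# An "update" produces a new config; the scaling rule itself is unchanged.
bigger = replace(cfg, max_replicas=8)
```

Under these assumptions, 25 queued requests call for 3 replicas, and raising `max_replicas` only matters once the queue would need more replicas than the old cap allowed.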

Track and evaluate fine-tuning jobs with Comet’s Opik

We're excited to be a launch partner for Comet's Opik! Together we make it easy to customize best-of-breed small models (SLMs), seamlessly track and evaluate your experiments, and serve them at blazing-fast speeds in production!

Track your Predibase fine-tuning jobs and see in-depth analytics in Comet. Learn more in our docs.