Join our upcoming webinar to dive into the details and methodology of how we achieved these massive improvements in fine-tuning.

Today, we are excited to announce our latest major release, which features a brand-new fine-tuning stack that makes training up to 10x faster! Here are a few of the highlights:

- New fine-tuning stack with up to 10x faster training

- Addition of Llama-3 for Inference & Fine-Tuning

- Adapters as First-Class Citizens

- Brand-new Python SDK

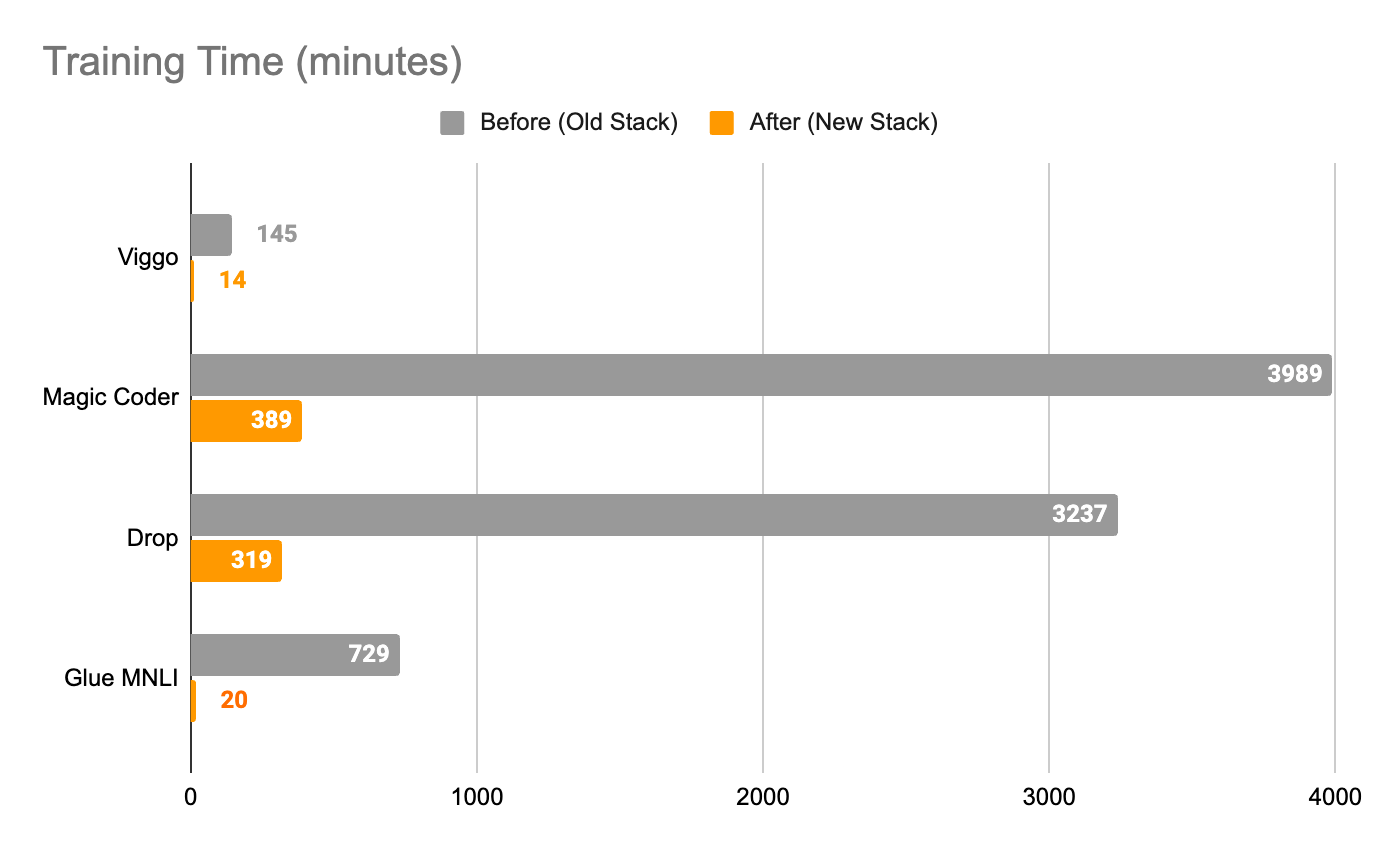

New Fine-tuning Stack: Lightning Fast and Efficient

Historically, Predibase used Ludwig under the hood as the training engine for all fine-tuning jobs. With this release, we are moving to a brand-new fine-tuning system running on a dedicated A100 cluster, which takes our training engine to the next level and gives users our fastest fine-tuning speeds yet.

This means you can get the same industry-leading pricing along with these new benefits:

- Faster experimentation: A significant reduction in training time (up to 10x) enables rapid iteration.

- Better results: Quality improvements in the fine-tuned models.

- Unlocking new capabilities: A foundation for new fine-tuning features coming soon, such as completions-style fine-tuning and Direct Preference Optimization (DPO).

Improvements in training speed with our new fine-tuning stack.

We enabled a number of industry-leading training techniques to achieve these gains in fine-tuning throughput, including:

- Automatic Batch Size Tuning to Maximize Throughput

- Removing Gradient Checkpointing

- Using Regular Optimizers over Paged Variants

- Flash Attention 2

- Optimized CUDA Kernels

- ...and more!
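To illustrate what automatic batch size tuning can look like, here is a minimal sketch of one common approach: double the batch size until a training step runs out of memory, then binary-search between the last success and the first failure. This is a generic illustration, not Predibase's implementation. `try_step`, `OutOfMemoryError`, and `simulated_step` are hypothetical names, and the sketch assumes feasibility is monotone (if a batch size fits, every smaller one does).

```python
class OutOfMemoryError(Exception):
    """Hypothetical stand-in for a framework's GPU out-of-memory error."""


def find_max_batch_size(try_step, start=1, limit=4096):
    """Return the largest batch size in [start, limit] for which try_step runs.

    try_step(batch_size) is a hypothetical callback that runs one training
    step and raises OutOfMemoryError if the batch does not fit in memory.
    Assumes feasibility is monotone: if a batch size fits, every smaller one does.
    """
    if start < 1 or start > limit:
        raise ValueError("require 1 <= start <= limit")
    try:
        try_step(start)
    except OutOfMemoryError:
        raise RuntimeError(f"batch size {start} does not fit in memory") from None

    good, bad = start, None
    # Phase 1: double the batch size until a step fails or the limit is reached.
    while good < limit:
        candidate = min(good * 2, limit)
        try:
            try_step(candidate)
        except OutOfMemoryError:
            bad = candidate
            break
        good = candidate

    if bad is None:
        return good  # the limit itself fits

    # Phase 2: binary search between the last success and the first failure.
    while bad - good > 1:
        mid = (good + bad) // 2
        try:
            try_step(mid)
        except OutOfMemoryError:
            bad = mid
        else:
            good = mid
    return good


def simulated_step(batch_size, capacity=100):
    """Toy step for illustration: 'runs out of memory' above a fixed capacity."""
    if batch_size > capacity:
        raise OutOfMemoryError(f"batch size {batch_size} exceeds capacity {capacity}")


print(find_max_batch_size(simulated_step))  # 100
```

A real tuner would also need to release cached GPU memory after each failed attempt, and may deliberately settle slightly below the maximum to leave headroom for longer sequences.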

While this new system is currently independent of Ludwig, we intend to open-source it as part of Ludwig's next-generation fine-tuning story. We will share more updates with the community as we finalize the details of the open-source plan.

New Models: Fine-tune and Serve Llama-3!

Last week, Meta released the Llama-3 models, and we're excited to announce that all four released models (Llama-3 8B and 70B, each in base and Instruct versions) are available for inference and fine-tuning on Predibase.

Llama-3 includes a number of significant improvements:

- Uses a tokenizer with a vocabulary of 128k tokens (up from 32k) that encodes language more efficiently, leading to improved model performance

- Improvements in post-training resulting in reduced false refusal rates, improved alignment, and increased diversity in model responses

- Improved capabilities such as reasoning, code generation, and instruction following

- Context window doubled from 4,096 to 8,192 tokens

Stay tuned for a walkthrough on fine-tuning and serving Llama-3 on Predibase that will be coming very soon!

Adapters as a First-class Citizen

At Predibase, we're very bullish on a future in which businesses rely on many smaller, task-specific models. To that end, we've focused on productionizing adapter-based fine-tuning (e.g., LoRA) as the primary way to fine-tune models in Predibase.
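To make adapter-based fine-tuning concrete, here is a minimal pure-Python sketch of the LoRA idea: the base weight matrix `W` stays frozen, and only two small low-rank factors `A` and `B` are trained, giving `h = W x + (alpha / r) * B (A x)`. This is a generic illustration of the technique, not Predibase's implementation; the function names and toy shapes are hypothetical.

```python
import random


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def init_lora(d_out, d_in, r, seed=0):
    """Create LoRA factors A (r x d_in) and B (d_out x r).

    A gets small random values and B starts at zero, so the adapter initially
    contributes nothing and the model behaves exactly like the base model.
    """
    rng = random.Random(seed)
    A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
    B = [[0.0] * r for _ in range(d_out)]
    return A, B


def lora_forward(W, A, B, x, alpha, r):
    """Compute h = W x + (alpha / r) * B (A x); W is frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def lora_param_counts(d_out, d_in, r):
    """Trainable parameters: full fine-tuning of W vs. a rank-r adapter."""
    return d_out * d_in, r * (d_in + d_out)


W = [[1.0, 2.0], [3.0, 4.0]]
A, B = init_lora(d_out=2, d_in=2, r=1)
print(lora_forward(W, A, B, [1.0, 1.0], alpha=2, r=1))  # [3.0, 7.0]: same as W x at init
print(lora_param_counts(4096, 4096, 8))  # (16777216, 65536)
```

For a single 4096 x 4096 projection, a rank-8 adapter trains 65,536 parameters instead of about 16.8 million (roughly 0.4%), which is why many small task-specific adapters can share one frozen base model.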

In both the Web UI and the SDK, fine-tuned models have been renamed to Adapters. We've also simplified the model builder so that it exposes only the parameters most relevant to fine-tuning.

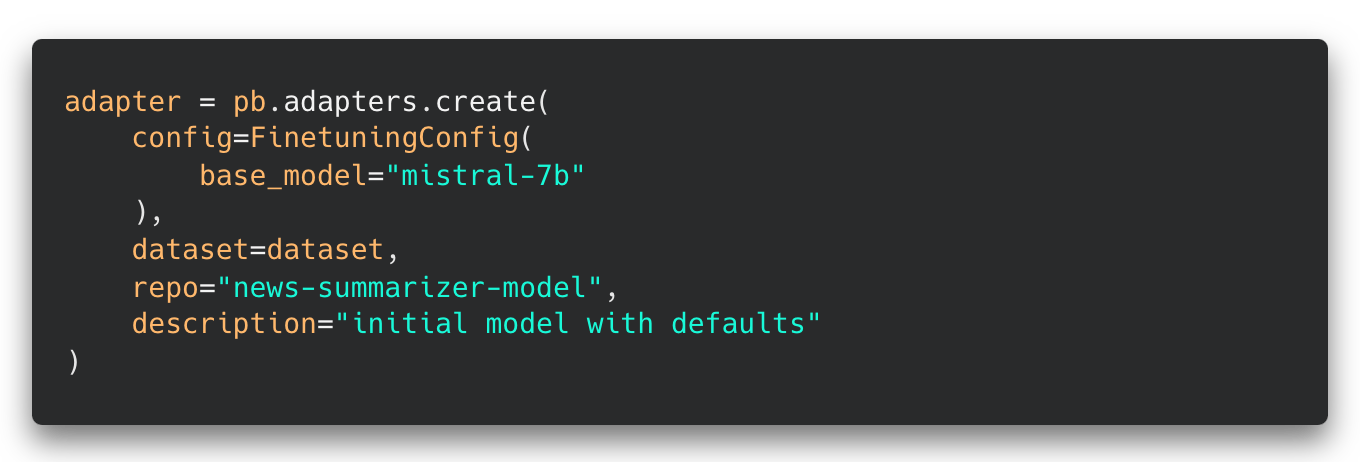

New Python SDK

Lastly, we're excited to release our brand-new Python SDK, which is more consistent and easier to use than the previous version. The prior SDK is officially deprecated but will continue to function until it is fully sunset on May 22, 2024.

Here's a preview of the new functionality: with the new SDK, you can create an adapter (i.e., fine-tune a model) efficiently in fewer than 10 lines of code.

Get Started with Predibase

We want every organization, large or small, to be able to train best-in-class LLMs, and we couldn't be more excited to make our new fine-tuning stack available to all of our customers.

- Test-drive our fine-tuning stack with $25 in free credits with the Predibase Free Trial.

- Get all the details on the release with our Changelog and Documentation.