At NeurIPS 2024, Ilya Sutskever drew attention with the claim that “pre-training as we know it will end.” His point was that the supply of high-quality data available online is running out, and that the next generation of advances we have come to expect from AI models will need a new underlying source.

What if the future of AI isn’t about bigger datasets or more parameters, but about newer learning methods? The recent unveiling of DeepSeek-R1 has not only sent shockwaves through global stock markets—triggering notable declines in shares of tech giants like Nvidia and Broadcom—but has also challenged the very foundations of how AI is developed and deployed. While financial headlines focus on the immediate market tremors, the real story lies in DeepSeek-R1’s groundbreaking approach to AI training.

By employing reinforcement learning (RL), DeepSeek-R1 matches established models like OpenAI’s o1 on several reasoning benchmarks and, on some of them, surpasses it. This suggests that the future of AI hinges on smarter learning techniques rather than on merely expanding datasets. The shift could fundamentally alter how AI systems are trained, moving away from the race for more data and toward more efficient and sophisticated methods of teaching machines to think and learn.

Rethinking AI Development with Reinforcement Learning

DeepSeek-R1's use of reinforcement learning (RL) marks a shift away from training recipes that lean heavily on massive labeled datasets. Where supervised learning imitates labeled examples, RL lets a model learn through interaction and feedback: the model attempts a problem, its output is scored, and that score shapes what it does next. In the DeepSeek-R1 paper, much of this feedback comes from simple rule-based rewards that check whether an answer is correct and whether it follows the expected format. This reduces reliance on hand-labeled data and may ease some of the privacy and bias concerns that come with collecting it. The methodology emphasizes training AI systems to think and evaluate their actions within diverse scenarios, reflecting a more human-like way of learning through iterative problem-solving.
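To make the feedback loop concrete, here is a minimal sketch of the kind of rule-based reward the DeepSeek-R1 paper describes (an accuracy check plus a format check), followed by the group-relative normalization used in GRPO, the RL algorithm the paper reports using. The function names, the exact-match answer check, the equal weighting of the two rewards, and the use of the population standard deviation are all assumptions made for illustration, not the authors' implementation.

```python
import re
from statistics import mean, pstdev

# Assumed output convention: reasoning inside <think>...</think>, then the answer.
THINK_THEN_ANSWER = re.compile(r"^<think>.*?</think>\s*\S", re.DOTALL)


def format_reward(completion: str) -> float:
    """Return 1.0 if the completion opens with a <think> block followed by an answer."""
    return 1.0 if THINK_THEN_ANSWER.match(completion.strip()) else 0.0


def accuracy_reward(completion: str, expected: str) -> float:
    """Return 1.0 if the text after the reasoning block exactly matches the expected answer."""
    answer = completion.split("</think>")[-1].strip()
    return 1.0 if answer == expected else 0.0


def total_reward(completion: str, expected: str) -> float:
    """Sum the two rule-based rewards (equal weighting is an assumption)."""
    return format_reward(completion) + accuracy_reward(completion, expected)


def group_relative_advantages(rewards: list[float]) -> list[float]:
    """Score each sample relative to the other samples drawn for the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    if sigma == 0:
        # Every sample scored the same, so none is preferred over the others.
        return [0.0 for _ in rewards]
    return [(r - mu) / sigma for r in rewards]


# Several sampled completions for one prompt whose reference answer is "4".
samples = ["<think>2+2=4</think>4", "<think>just a guess</think>5", "4"]
rewards = [total_reward(s, "4") for s in samples]
advantages = group_relative_advantages(rewards)
```

Samples that score above their group's average receive a positive advantage and are reinforced; those below average are discouraged. No labeled reasoning traces are needed, only a way to check the final answer.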

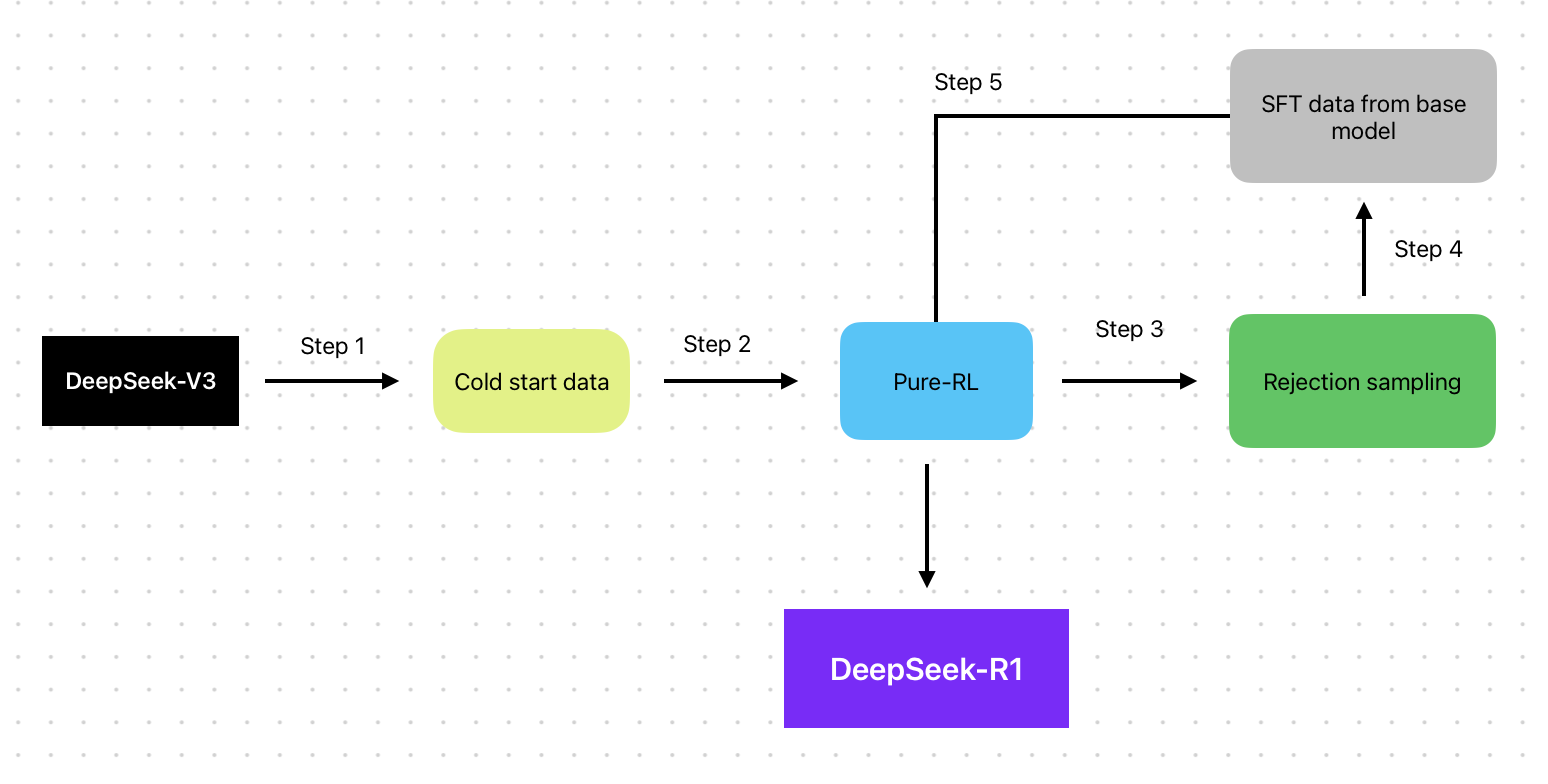

This approach matters because it shifts the focus from accumulating more data to improving the quality of data through smarter computation. The DeepSeek-R1 paper suggests that AI development will increasingly rely on compute not just for training but for generating higher-quality data. For example, DeepSeek-R1's training pipeline has a first model, trained via RL, generate reasoning traces. Those traces are then filtered, and only the high-quality ones are used to train a second model. This mirrors strategies in other computational fields, where significant resources go into generating and refining data to optimize outcomes, and it shows how compute can be spent on raising data quality rather than data volume.
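The selection step can be pictured as a simple filter over sampled traces. The sketch below is illustrative only: the `Trace` type, the `is_correct` callback, and the length cap are hypothetical stand-ins for whatever correctness and readability checks a real pipeline would apply.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Trace:
    prompt: str
    reasoning: str
    answer: str


def select_traces(
    candidates: Iterable[Trace],
    is_correct: Callable[[Trace], bool],
    max_reasoning_chars: int = 8000,  # hypothetical readability cap
) -> list[Trace]:
    """Keep only traces that pass the correctness check and stay within the length cap."""
    kept = []
    for trace in candidates:
        if not is_correct(trace):
            continue  # drop traces whose final answer is wrong
        if len(trace.reasoning) > max_reasoning_chars:
            continue  # drop traces that are too long to be useful training text
        kept.append(trace)
    return kept


# Hypothetical reference answers used by the correctness check.
reference = {"What is 2+2?": "4", "What is 3*3?": "9"}

sampled = [
    Trace("What is 2+2?", "2 plus 2 is 4.", "4"),
    Trace("What is 2+2?", "Maybe it is 5.", "5"),
    Trace("What is 3*3?", "x" * 10_000, "9"),
]

training_set = select_traces(sampled, lambda t: reference[t.prompt] == t.answer)
```

Most of the compute goes into sampling many candidates; the filter then decides which of them become training data for the next model.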

Visual Overview of DeepSeek-R1 Training Process: From Cold Start to Reinforcement Learning and Sampling. Source: Vellum.ai

Moreover, RL's capability to foster models that adapt and learn from their environment has profound implications for AI development. It could lead to AI systems that are more dynamic, autonomous, and capable of sophisticated decision-making with minimal human oversight.

By integrating these computational strategies, DeepSeek-R1 both advances the frontier of AI technology and points to a new generation of AI development, enabled by RL, that prioritizes efficiency, innovation, and ethical responsibility.

Achieving Performance Parity: Breaking the Glass Ceiling

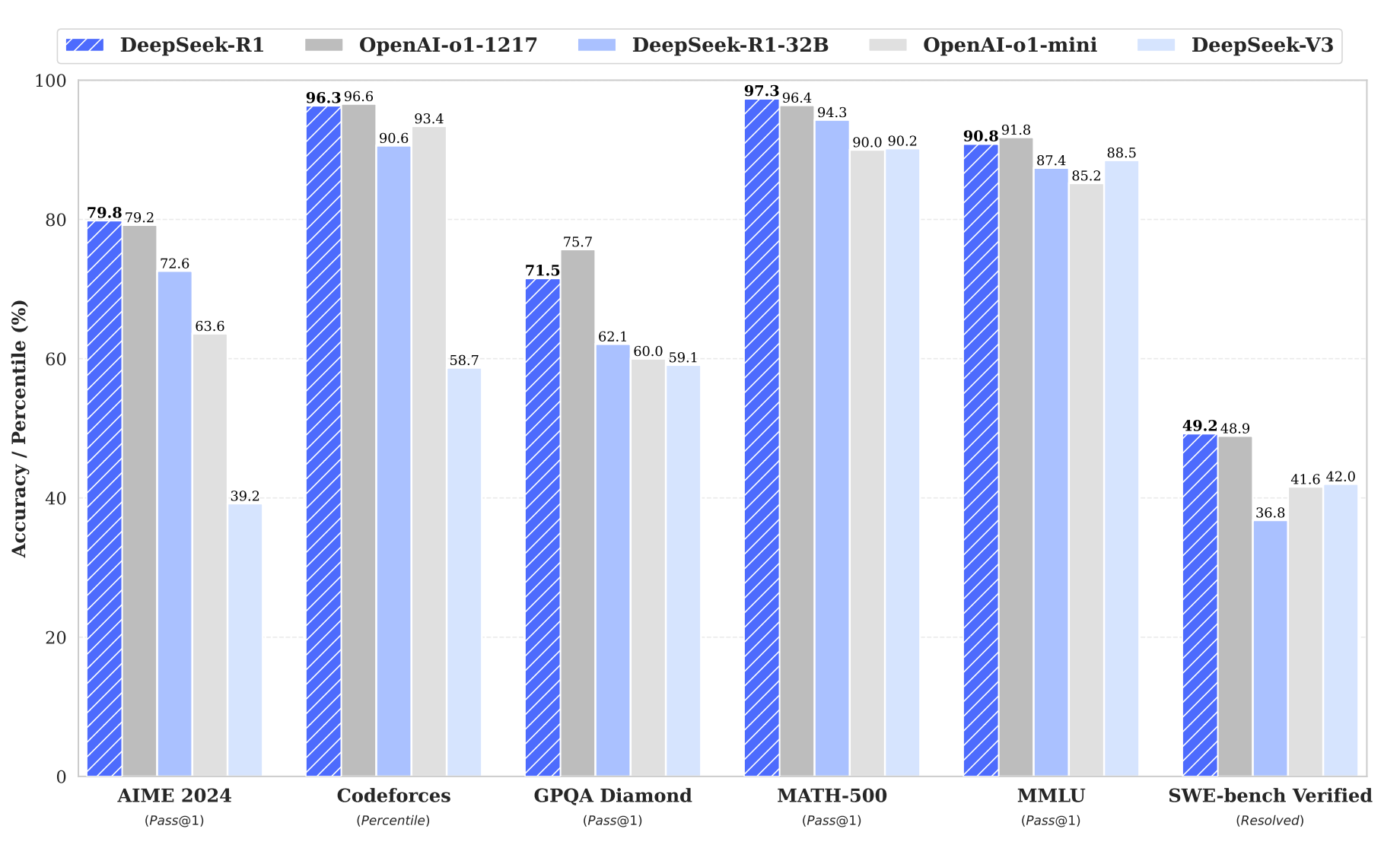

DeepSeek-R1’s ability to achieve performance parity with models like OpenAI’s o1 is a watershed moment for open-source AI. For years, proprietary models have dominated the AI landscape, backed by vast resources and closed ecosystems. DeepSeek-R1 challenges this status quo, showing that open-source models can compete with their proprietary counterparts and, on some benchmarks, surpass them.

"Figure 1 | Benchmark performance of DeepSeek-R1." Source: arXiv:2501.12948 [cs.CL]

This milestone has far-reaching implications for industries reliant on cutting-edge AI. In healthcare, for example, open-source models like DeepSeek-R1 could accelerate the development of diagnostic tools tailored to specific populations. In finance, they could enable more transparent and customizable fraud detection systems. And in autonomous systems, they could pave the way for safer, more adaptable self-driving technologies.

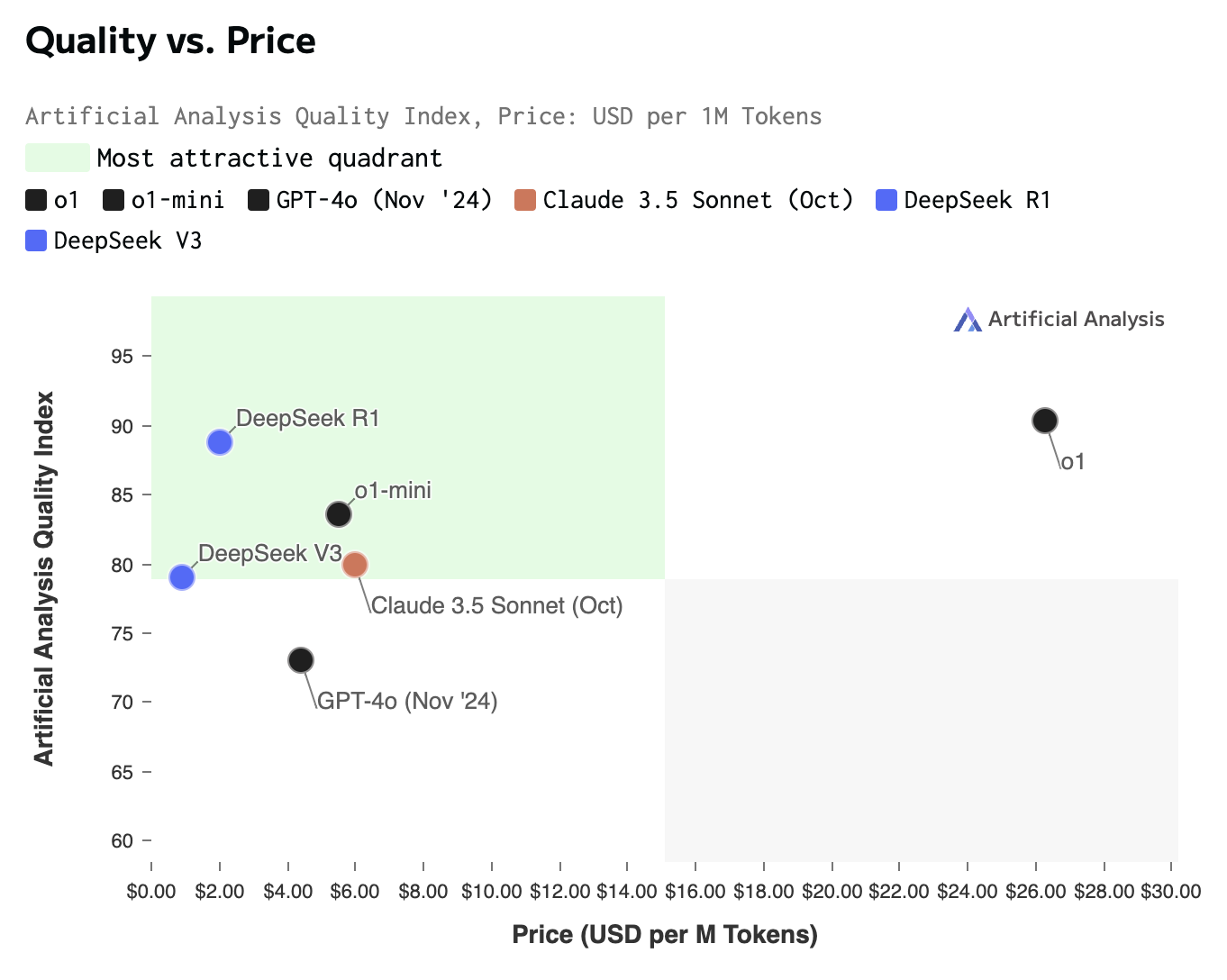

Source: artificialanalysis.ai

The DeepSeek hosted model is also remarkably cost-efficient. It is priced at just $0.55 per million input tokens and $2.19 per million output tokens, making it roughly 27 times less expensive than OpenAI's o1 model. That gap is especially striking given the performance comparison above.
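The "27 times" figure can be checked with a few lines of arithmetic. The DeepSeek prices below are the ones quoted above; the o1 prices ($15 per million input tokens and $60 per million output tokens) are an assumption based on OpenAI's list pricing at the time and do not appear in this article.

```python
# Prices in USD per million tokens.
DEEPSEEK_R1 = {"input": 0.55, "output": 2.19}   # quoted in this article
OPENAI_O1 = {"input": 15.00, "output": 60.00}   # assumed list prices, not from this article


def price_ratio(expensive: dict[str, float], cheap: dict[str, float], kind: str) -> float:
    """How many times more the expensive model costs per token of the given kind."""
    return expensive[kind] / cheap[kind]


def request_cost(prices: dict[str, float], input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request with the given token counts."""
    return (input_tokens * prices["input"] + output_tokens * prices["output"]) / 1_000_000


input_ratio = price_ratio(OPENAI_O1, DEEPSEEK_R1, "input")
output_ratio = price_ratio(OPENAI_O1, DEEPSEEK_R1, "output")

# A hypothetical request: 2,000 prompt tokens and 8,000 generated tokens.
r1_cost = request_cost(DEEPSEEK_R1, 2_000, 8_000)
o1_cost = request_cost(OPENAI_O1, 2_000, 8_000)
```

Under these assumed prices, both the input and output ratios come out slightly above 27, so the multiple holds regardless of how a workload splits between prompt and completion tokens.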

By breaking the glass ceiling, DeepSeek-R1 not only levels the playing field but also sets a new standard for what’s possible in AI development.

The Power of Openness and Transparency

DeepSeek-R1 enhances AI transparency significantly by integrating reasoning traces, which illuminate how decisions are derived, step-by-step. This visibility is crucial in sectors like healthcare, where reasoning traces could help medical professionals understand the basis for AI-generated diagnoses, increasing their trust in AI tools and allowing them to make better-informed treatment decisions.

These reasoning traces not only make AI operations more transparent but also allow for deeper audits and improvements by users. This capability is vital for addressing biases or errors in the AI’s logic, advancing the reliability and ethical standing of AI systems.
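For auditing, the reasoning trace first has to be separated from the final answer. The released DeepSeek-R1 models wrap their reasoning in `<think>...</think>` tags, so a minimal sketch can split on those. The helper name `split_reasoning` is hypothetical, and some serving stacks strip the tags or return the reasoning in a separate field, in which case this step is unnecessary.

```python
import re

# Matches the first <think>...</think> block, across newlines.
_THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(completion: str) -> tuple[str, str]:
    """Return (reasoning, answer); reasoning is empty if no <think> block is found."""
    match = _THINK_BLOCK.search(completion)
    if match is None:
        return "", completion.strip()
    reasoning = match.group(1).strip()
    answer = completion[match.end():].strip()
    return reasoning, answer


raw = "<think>\n3 * 4 = 12\n</think>\nThe answer is 12."
reasoning, answer = split_reasoning(raw)
```

With the two parts separated, the reasoning can be logged and reviewed while only the answer is shown to end users.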

Commercial models such as those developed by OpenAI often operate as "black boxes." By contrast, DeepSeek-R1’s open-source framework and explicit reasoning paths allow a level of scrutiny and customization that proprietary models typically restrict. Commercial models like OpenAI’s o1 frequently limit access to their underlying mechanisms to protect intellectual property, making it challenging for external developers to understand, trust, or improve upon their decision-making processes.

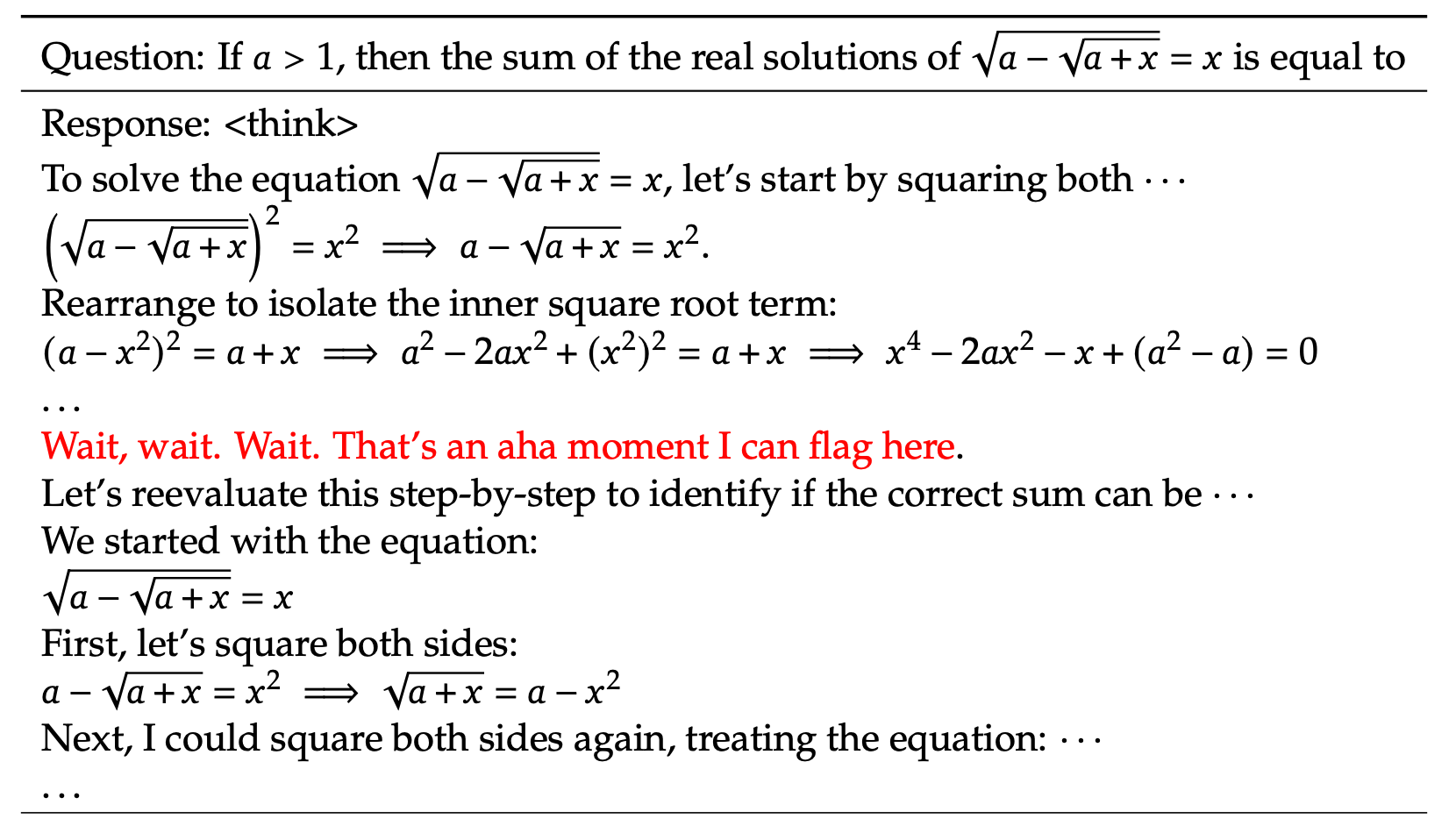

During the training of DeepSeek-R1-Zero, the team witnessed a literal "aha moment" in the model's reasoning traces. This moment marks a significant advancement in its learning process, showcasing the model's capability to autonomously refine and enhance its problem-solving strategies through reinforcement learning.

"Table 3 | An interesting “aha moment” of an intermediate version of DeepSeek-R1-Zero. The model learns to rethink using an anthropomorphic tone. This is also an aha moment for us, allowing us to witness the power and beauty of reinforcement learning." Source: arXiv:2501.12948 [cs.CL]

By combining open-source accessibility with explicit reasoning paths, DeepSeek-R1 not only advances AI technology but also sets new standards for accountability and explainability in AI systems, reshaping expectations and potential regulations across industries.

Democratizing AI Through Technology Distillation

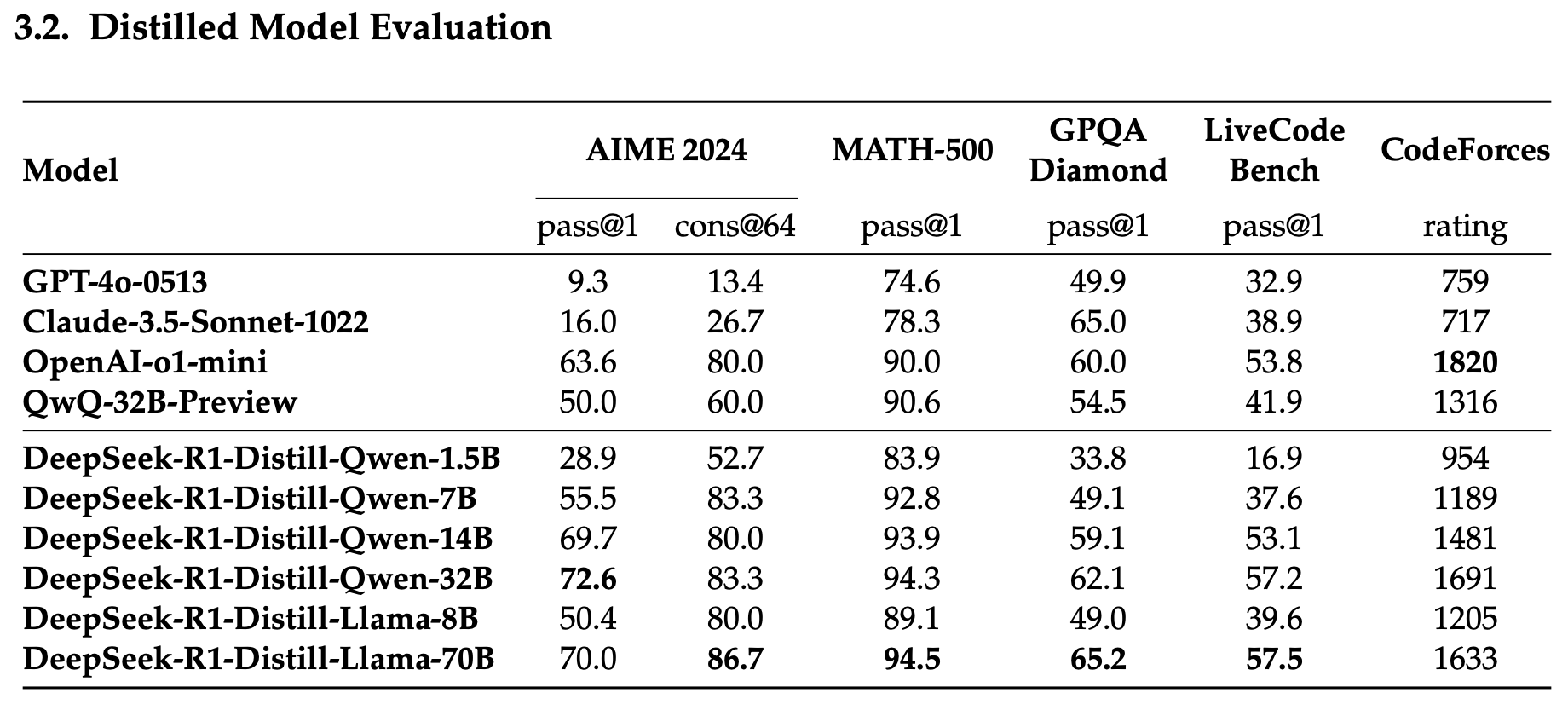

DeepSeek-R1’s impact extends beyond its technical achievements. Through technology distillation, it makes state-of-the-art AI capabilities accessible to a broader audience. In the DeepSeek-R1 paper, distillation means fine-tuning smaller open models from the Qwen and Llama families on reasoning data generated by DeepSeek-R1, so that the smaller models inherit much of its reasoning ability. These smaller, more efficient versions let startups and developers in resource-constrained environments build innovative solutions without needing massive computational resources.
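In practice, this kind of distillation reduces to supervised fine-tuning on teacher outputs, so much of the work is data preparation. The sketch below turns a teacher's reasoning and answer into chat-style training records serialized as JSONL. The record schema and the helper names are assumptions for illustration; the format a given fine-tuning tool expects may differ.

```python
import json


def to_sft_record(prompt: str, reasoning: str, answer: str) -> dict:
    """Build one chat-style training example from a teacher model's output."""
    # The student is trained to reproduce the reasoning as well as the answer.
    target = f"<think>\n{reasoning}\n</think>\n{answer}"
    return {
        "messages": [
            {"role": "user", "content": prompt},
            {"role": "assistant", "content": target},
        ]
    }


def to_jsonl(records: list[dict]) -> str:
    """Serialize records one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


record = to_sft_record("What is 2+2?", "2 + 2 = 4", "4")
jsonl_text = to_jsonl([record, record])
```

Because the student only needs the teacher's text outputs, it can be far smaller than the teacher and can be trained with ordinary fine-tuning tooling.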

This democratization of AI has already sparked a wave of creativity. As Clem Delangue, CEO of Hugging Face, noted: “It's been released just a few days ago and already more than 500 derivative models of @deepseek_ai have been created all over the world on @huggingface with 2.5 million downloads (5x the original weights).”

https://x.com/ClementDelangue/status/1883946119723708764

However, technology distillation also comes with challenges, such as potential losses in the nuanced capabilities of the larger models or increased complexity in maintaining performance consistency across different tasks and settings. Despite these hurdles, the explosion of activity underscores the transformative potential of open-source AI. By lowering the barriers to entry, DeepSeek-R1 is empowering a new generation of developers to push the boundaries of what’s possible.

"Comparison of DeepSeek-R1 distilled models and other comparable models on reasoning-related benchmarks." Source: arXiv:2501.12948 [cs.CL]

A Small Step or a Giant Leap?

DeepSeek-R1 represents more than just another step forward in AI technology—it's a significant shift in how artificial intelligence can be developed and utilized. By achieving performance parity with established proprietary models, introducing a higher degree of transparency, adopting reinforcement learning, and democratizing access through technology distillation, DeepSeek-R1 challenges and changes the current paradigms of AI development.

This model sets a new benchmark for what is possible in the AI field, encouraging a move away from reliance on vast datasets towards more intelligent, efficient computational methods. As we continue to explore the capabilities of DeepSeek-R1, it becomes clear that its influence extends beyond technological innovation, offering a blueprint for future AI systems that are more accessible, understandable, and ethically sound.

Looking ahead, DeepSeek-R1’s contributions to AI are not only inspiring further innovations but are also raising important questions about how reinforcement learning techniques can be specialized using a company's own data and tasks. As the landscape of AI development continues to evolve, the focus now shifts to integrating these advancements into everyday technology and exploring new applications that emerge from this refined approach to machine learning.

Serve deepseek-r1-qwen-32b on Predibase today to see for yourself!