When it comes to deploying LLMs in production, speed isn't just a nice-to-have; it's essential. At Predibase, we have recently launched our Inference Engine 2.0, which significantly improves efficiency and throughput on even the most demanding workloads. Our new engine enables you to squeeze more performance from the GPUs you already own, while reducing infrastructure costs. This performance is enabled by a series of optimizations, including:

- Turbo-Charged Inference - we have fully rewritten Turbo, our proprietary speculative decoding technique for generating multiple tokens per decoding step, enabling even faster performance.

- Multi-Turbo Inference - a new technique that enables multiple speculative decoders to run in parallel on a single base model for further speed boosts.

- Chunked Prefill and Speculative Decoding Integration - we integrated chunked prefill, a technique for keeping GPUs highly utilized, with speculative decoding (a combination traditionally seen as incompatible), so you get the benefits of both in a single packaged offering.

- Improved Support for Embeddings and Classification Models - these models are a cornerstone of many enterprise AI workloads, so we expanded support for them in Predibase.

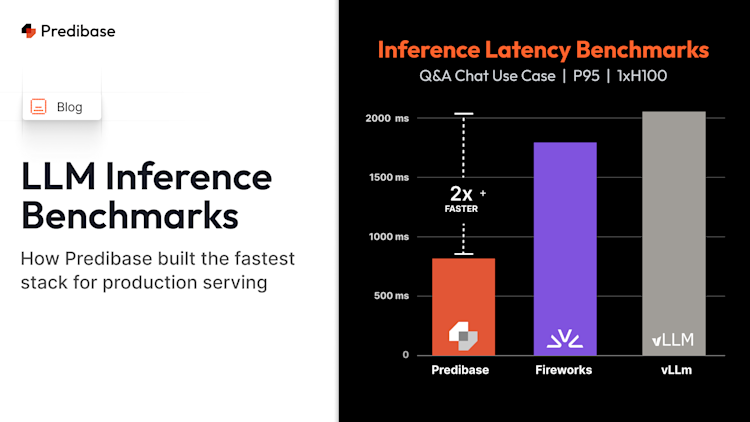

Thanks to these recent improvements, our customers are seeing substantial performance gains. To put our new inference engine to the test, we ran a series of real-world benchmarks across three leading platforms: Predibase, Fireworks, and the widely adopted open-source serving framework, vLLM.

Benchmark Overview and Conditions

To ensure fairness, we tested all platforms under the same load conditions, ramping the load from 1 to 20 requests per second (QPS). Fireworks and vLLM were run using their self-serve deployment options with default configurations and no additional tuning:

- Fireworks: Default self-serve deployment

- vLLM: Deployed on RunPod (a common serverless GPU platform) with default vLLM settings

- Predibase: Default self-serve deployment including speculation & LoRA support out of the box

- Hardware: 1 x H100 & 1 x L40S

- Note: Fireworks does not provide self-serve support for L40S, so only H100 was benchmarked.

- Latency: We measured P50 (median) and P95 latencies to capture both typical and tail response times.

- LoRA Benchmarking Consistency: The same LoRAs were used to benchmark Predibase, Fireworks, and vLLM to ensure consistency.

- Turbo: Predibase provides Turbo LoRA out of the box, a proprietary technique that trains a lightweight custom speculator for your task, resulting in higher throughput and faster inference. Turbo LoRA is applied when the GPU has spare capacity and disabled when the GPU is fully saturated, squeezing the most efficiency out of every GPU cycle.
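P50 is the median request latency, and P95 is the latency that 95% of requests stay under, so P95 exposes the slow tail that averages hide. Below is a minimal sketch of computing both. It assumes a list of per-request latencies in seconds for one QPS level (the numbers are made up for illustration and are not benchmark data) and uses the nearest-rank definition of a percentile. Tools that interpolate between samples can report slightly different values.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank into the sorted list
    return ordered[rank - 1]


# Hypothetical per-request latencies (seconds) at a single QPS level.
latencies = [0.42, 0.39, 0.45, 0.41, 0.40, 0.44, 0.43, 0.38, 0.90, 0.46,
             0.41, 0.42, 0.40, 0.47, 0.39, 0.43, 0.44, 0.41, 1.20, 0.42]

p50 = percentile(latencies, 50)
p95 = percentile(latencies, 95)
print(f"P50={p50:.2f}s P95={p95:.2f}s")  # → P50=0.42s P95=0.90s
```

One slow request (0.90 s) sets the P95 here while leaving the P50 unchanged. Reporting both percentiles therefore gives a fuller picture of response times than either number alone.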

Predibase consistently outperformed both vLLM and Fireworks across a range of request loads, delivering up to 4x lower latency. The gap was widest at higher loads. Predibase kept latency low even as we increased the requests per second, so you get sustained high performance for large-scale production jobs. In contrast, the other solutions degraded significantly at production scale.

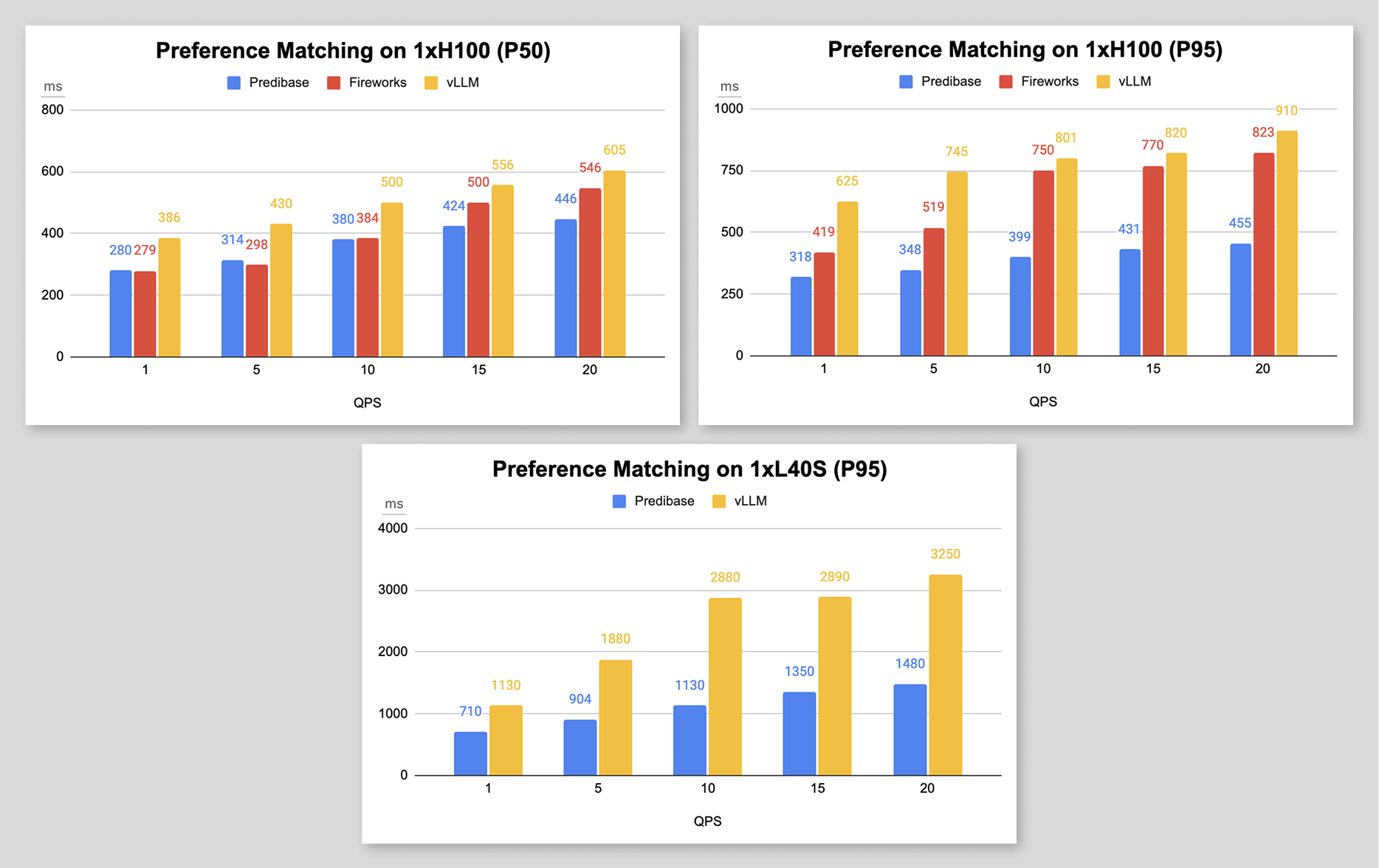

Benchmark Use Case 1: Preference Matching with Explanations

This benchmark focused on a real-world customer use case of preference matching with explanations. Examples of preference matching in AI include:

- Matching candidates to jobs

- Matching advertisements to target personas

- Matching profiles in dating apps

The “explanation” in the task refers to providing a rationale for the selected pairing.

- Input tokens: ~350 tokens describing context or profiles

- Output tokens: ~50 tokens explaining the rationale

- Base LLM: llama3.1-8b-instruct

Preference Matching Benchmark Results:

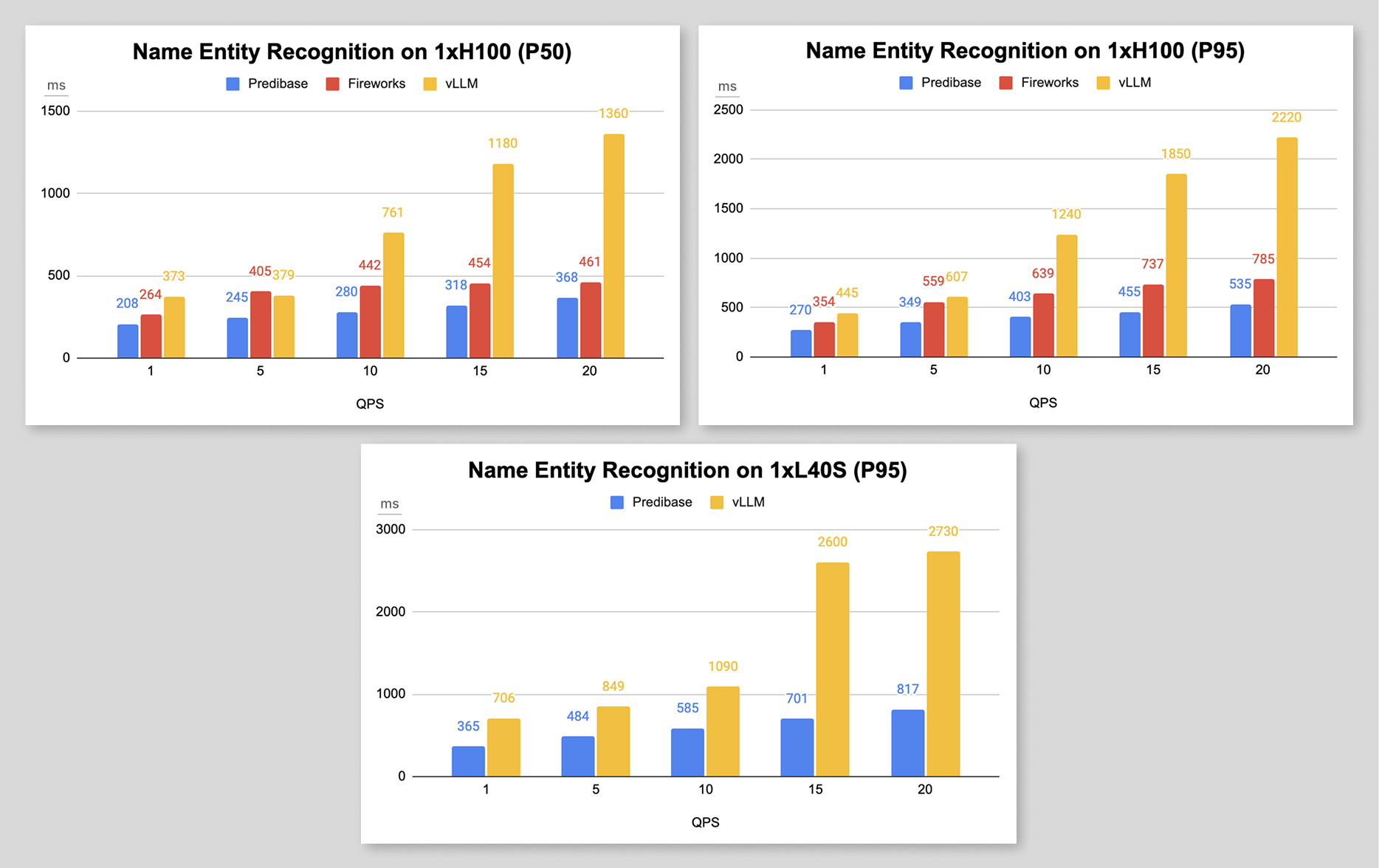

Benchmark Use Case 2: Named Entity Recognition

The CoNLLpp dataset is used for training and evaluating Named Entity Recognition (NER) models, focusing on identifying entities like people, organizations, locations, and miscellaneous names in text. It serves as a standard benchmark in NLP.

- Input tokens: ~120 tokens describing context

- Output tokens: ~25 tokens extracting the relevant entities

- Base LLM: llama3.1-8b-instruct

NER Benchmark Results:

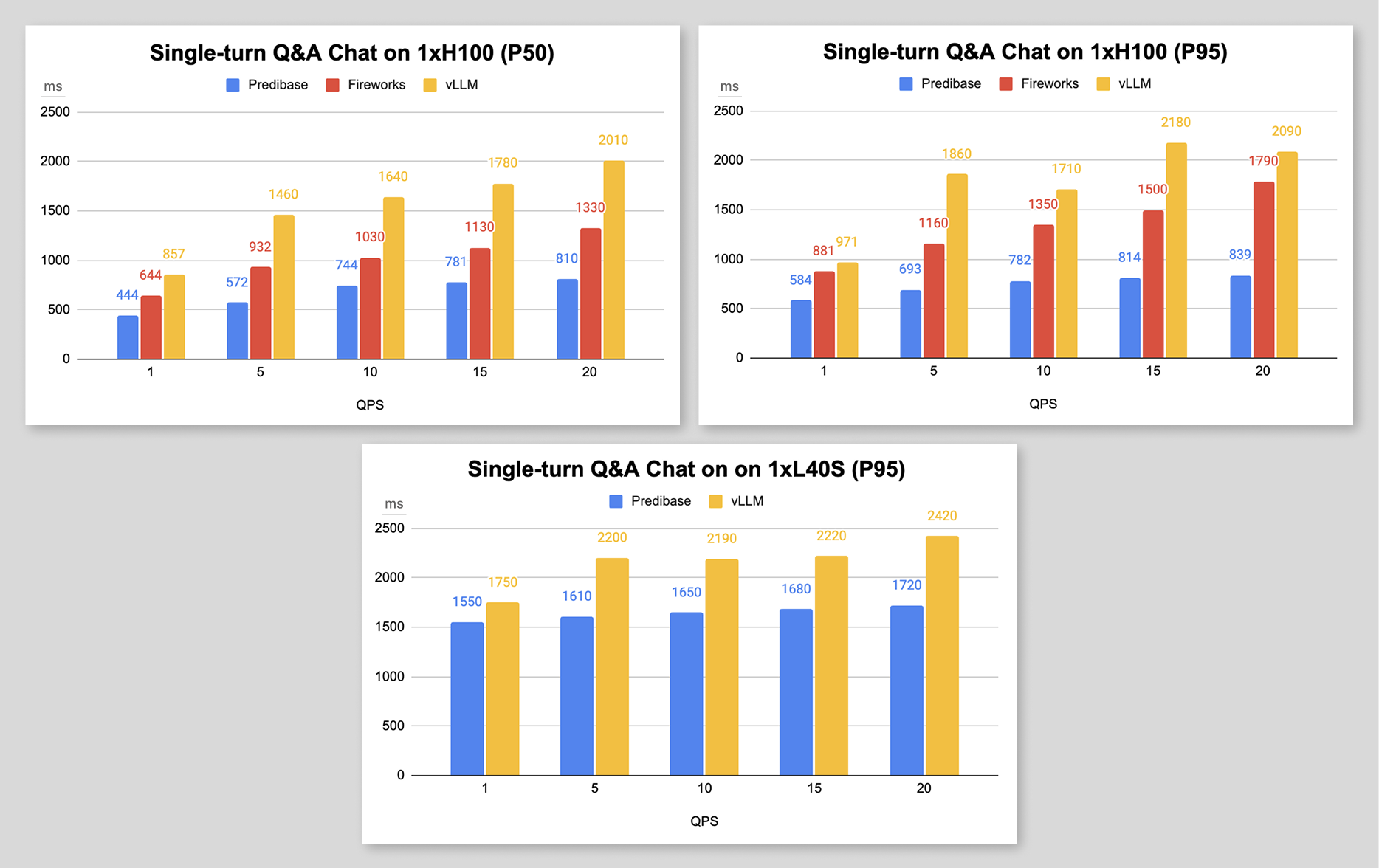

Benchmark Use Case 3: Single-turn Q&A Chat

This single-turn chat use case involves generating concise, accurate responses to standalone user queries without relying on prior context. It's ideal for tasks like quick fact retrieval, definitions, or brief recommendations in customer support and virtual assistants.

- Input tokens: ~50 tokens describing questions

- Output tokens: ~100 tokens providing answers

- Base LLM: Qwen2.5-7b-instruct

Q&A Chat Benchmark Results:

Benchmark Summary: Up to 4x Lower Latency with Predibase

- Predibase had the lowest latencies 96% of the time across all QPS levels.

- Predibase’s high performance is sustained under heavy loads, showcasing strong scalability and consistency for the most demanding production jobs.

- Fireworks performed well at low QPS, but latency increased significantly at higher traffic, creating bottlenecks for teams running high-scale production workloads.

- vLLM consistently showed the highest latencies, highlighting the operational overhead and tuning complexity of open-source setups.
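The "96% of the time" figure in the summary is a win rate: the share of measurements in which a platform had the lowest latency. The post does not say exactly how measurements were grouped. The sketch below assumes each cell is one (use case, QPS level, percentile) combination. The platform names and latencies are hypothetical placeholders, not benchmark results.

```python
from collections import Counter


def win_rates(cells: dict[tuple, dict[str, float]]) -> dict[str, float]:
    """Fraction of cells in which each platform has the strictly lowest latency."""
    wins: Counter = Counter()
    for latencies in cells.values():
        best = min(latencies.values())
        leaders = [name for name, v in latencies.items() if v == best]
        if len(leaders) == 1:  # a tie counts as a win for nobody
            wins[leaders[0]] += 1
    platforms = {name for latencies in cells.values() for name in latencies}
    return {name: wins[name] / len(cells) for name in sorted(platforms)}


# Hypothetical cells: (use case, QPS, percentile) -> latency in seconds per platform.
cells = {
    ("ner", 1, "p50"): {"platform_a": 0.30, "platform_b": 0.28, "platform_c": 0.45},
    ("ner", 10, "p50"): {"platform_a": 0.35, "platform_b": 0.60, "platform_c": 0.80},
    ("ner", 20, "p95"): {"platform_a": 0.50, "platform_b": 1.40, "platform_c": 1.90},
    ("qa", 20, "p95"): {"platform_a": 0.90, "platform_b": 1.10, "platform_c": 2.00},
}
print(win_rates(cells))
```

A win rate depends on how the grid is defined, such as which QPS levels, percentiles, and use cases are counted. This is one reason to benchmark on your own workload.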

How We Built the Fastest Inference Engine for Open-source LLMs

Our performance advantage comes from end-to-end optimizations across our inference stack:

- Our proprietary speculative decoding technique, Turbo LoRA, accelerates token generation without compromising quality, and Predibase’s ability to train a custom task-specific speculator further enhances throughput by tailoring decoding to the workload's structure.

- Optimizations are baked in by default, so you benefit immediately from the extensive work we've invested in identifying, benchmarking, and fine-tuning them. There's no need to configure optimizations manually: you get a set of smart defaults that deliver high performance out of the box.

- Chunked prefill splits long prompts into smaller chunks that are batched alongside in-flight decode steps, keeping the GPU highly utilized.
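A common way to implement chunked prefill, similar to the policy described in vLLM's documentation, is a per-step token budget. In-flight decode requests are scheduled first at one token each, and the remaining budget is filled with chunks of pending prompts. A long prompt is then spread across several steps instead of stalling every ongoing decode. Below is a minimal sketch of that scheduling loop. The `Request` class, the `schedule_step` function, and the 256-token budget are hypothetical illustrations, not Predibase's implementation.

```python
from dataclasses import dataclass


@dataclass
class Request:
    rid: str
    prompt_len: int
    prefilled: int = 0  # prompt tokens already processed

    @property
    def in_prefill(self) -> bool:
        return self.prefilled < self.prompt_len


def schedule_step(requests: list[Request], token_budget: int) -> list[tuple[str, str, int]]:
    """Plan one forward pass as (request id, phase, tokens) entries within a token budget."""
    batch: list[tuple[str, str, int]] = []
    budget = token_budget
    # 1. Decode-phase requests go first: each needs exactly one token this step.
    for r in requests:
        if not r.in_prefill and budget > 0:
            batch.append((r.rid, "decode", 1))
            budget -= 1
    # 2. Spend the leftover budget on prompt chunks; long prompts span several steps.
    for r in requests:
        if r.in_prefill and budget > 0:
            chunk = min(r.prompt_len - r.prefilled, budget)
            r.prefilled += chunk  # mark these prompt tokens as processed this step
            batch.append((r.rid, "prefill", chunk))
            budget -= chunk
    return batch


# Usage: request "a" is already decoding; request "b" arrives with a 1,000-token prompt.
reqs = [Request("a", prompt_len=10, prefilled=10), Request("b", prompt_len=1000)]
step = 0
while reqs[1].in_prefill:
    step += 1
    print(step, schedule_step(reqs, token_budget=256))
```

Request "a" keeps producing a token every step while "b"'s prompt is processed over four steps (255 + 255 + 255 + 235 tokens). Without chunking, "b"'s entire 1,000-token prefill would occupy a single step and could stall "a".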

The Predibase Inference Engine isn’t just fast—it’s also reliable at scale and easy to manage. Unlike open-source serving frameworks like vLLM, which require managing infrastructure, observability, and compatibility issues, Predibase provides a fully managed serving and post-training solution, covering everything from model deployment to inference, scaling, and beyond.

Key Takeaways from our Benchmarking

While speed remains essential in inference, our work with customers has highlighted a few deeper insights worth considering:

- Infrastructure is no longer the bottleneck: With broad access to GPUs and commoditized base inference, the focus needs to shift to the end-to-end solution. Teams should invest in platforms that streamline the entire inference pipeline, not just raw performance.

- Intelligent optimization is where the real value lies: Combining serving and post-training in a unified stack yields significant efficiency gains and a faster time-to-value. Platforms that auto-apply techniques such as chunked prefill, speculative decoding, and training on production data can deliver performance boosts of four times or more.

- Managed beats manual: Fully managed platforms, such as Predibase, provide the benefits of a highly optimized stack without the operational overhead. This lets teams skip the complexity of maintaining infrastructure and stay focused on building impactful AI applications.

- Not all benchmarks are created equal: Effective benchmarking must be tailored to your specific use case, taking into account factors such as GPU architecture, token distribution, and workload characteristics to ensure accurate performance insights and meaningful comparisons.

Next Steps: How to Accelerate Your LLM Deployments

If you're evaluating inference platforms for production LLM workloads, raw token speed alone isn’t enough. You need predictable, low-latency performance, even under load, with minimal engineering overhead.

Predibase outperforms the competition—not just in benchmarks, but where it matters most: in production.

Get Started:

- Watch the Replay of our Next Gen Inference Engine Webinar

- Get $25 in Credits with our Free Trial

- Schedule a custom demo with an expert ML engineer

LLM Inference Frequently Asked Questions

What is model inference?

AI inference is the process of using a trained model to make predictions or generate outputs from new input data. Inference takes place after the model is trained. For LLMs, it is typically the stage where the model "thinks" and produces a text response to a user's query or prompt.

How does inference power real-time AI applications?

An inference service can run in real time to generate outputs for a wide range of AI applications, such as chatbots, fraud detection, call summaries, and recommendations. Real-time inference requires high-performance infrastructure and GPUs to deliver low-latency responses.

What’s the difference between AI inference and AI training?

Model training is the stage where you “teach” an ML model or LLM by feeding it data and adjusting its internal parameters, such as weights and biases, until its prediction errors are minimized and it produces the desired responses or predictions. Using a labeled (or sometimes unlabeled) training set, the ML algorithm iteratively adjusts those parameters to capture underlying patterns, aiming to generalize well to new, unseen examples and deliver accurate predictions or classifications. Essentially, training is the process of teaching a model to do what you want.

Conversely, model serving or inference is the act of putting a trained ML model or LLM into production so that other systems can call it, typically through a REST endpoint, and receive predictions on demand. In practice, this means provisioning the runtime environment, exposing the model as an online service, and managing incoming request traffic so the model can return results in real time.
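Here is a toy sketch of the distinction using a one-variable linear model. It is vastly simpler than an LLM and uses made-up data and plain gradient descent. Training loops over the data many times to adjust the parameters, while inference is a single forward computation with those parameters fixed.

```python
def train(xs: list[float], ys: list[float], lr: float = 0.05, epochs: int = 1000) -> tuple[float, float]:
    """Training: repeatedly adjust parameters (w, b) to reduce mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = mean((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(params: tuple[float, float], x: float) -> float:
    """Inference: one cheap forward computation with the learned parameters frozen."""
    w, b = params
    return w * x + b


# Made-up training data following y = 2x + 1.
params = train([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
print(round(predict(params, 10), 3))  # → 21.0
```

The expensive loop runs once, up front. Each later prediction costs only one evaluation of the model, and that step is what a serving system runs for every incoming request.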

How does training vs. inference impact AI infrastructure?

Training is a compute-heavy, largely one-time job that often requires many GPUs and distributed infrastructure, depending on the model's size (its number of parameters). Inference is always-on and, depending on the application, must be extremely fast, which calls for specialized optimization strategies to deliver responses in a timely fashion. Hardware and GPU requirements vary significantly with model size, traffic patterns (requests per second), and the needs of your use case (e.g., chatbots typically require real-time, low-latency responses to preserve a positive user experience).
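Model size drives hardware requirements in a simple way: the memory needed just to hold a model's weights is its parameter count times the bytes per parameter. The back-of-the-envelope sketch below applies that calculation. It ignores the KV cache, activations, and runtime overhead, which also need GPU memory.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# A model with roughly 8 billion parameters, like the 8B Llama model in the benchmarks above:
print(weight_memory_gb(8e9, 2))   # 16-bit weights: 16.0 GB
print(weight_memory_gb(8e9, 1))   # 8-bit weights: 8.0 GB
print(weight_memory_gb(70e9, 2))  # a 70B model at 16-bit: 140.0 GB
```

An 8B model in 16-bit precision fits on a single 80 GB H100 or 48 GB L40S, with room left for the KV cache. A 70B model at 16-bit exceeds a single 80 GB GPU, so it must be quantized or sharded across several GPUs.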

What is speculative decoding in LLM inference?

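Speculative decoding is a technique in which a lightweight draft model (the speculator) proposes several future tokens, which the base model then verifies in a single forward pass. Because the base model checks every proposed token, generation speeds up and the GPU is used more fully without sacrificing output quality. By contrast, traditional autoregressive decoding runs a full forward pass of the base model for every single token, which is slower.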
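Below is a minimal sketch of the draft-then-verify loop. It uses the simplest greedy variant with toy integer-token "models". The `target` and `draft` functions are hypothetical stand-ins, and the loop is a generic illustration, not Turbo LoRA's implementation. The draft proposes `k` tokens; the target keeps the longest prefix that matches its own choices and then adds one token of its own. The output is therefore identical to plain greedy decoding with the target. Sampling-based variants replace the exact-match check with a rejection-sampling rule that preserves the target's output distribution.

```python
from typing import Callable

Model = Callable[[list[int]], int]  # greedy next-token function: context -> token


def greedy_decode(target: Model, prompt: list[int], n: int) -> list[int]:
    """Baseline: one target forward pass per generated token."""
    out = list(prompt)
    for _ in range(n):
        out.append(target(out))
    return out[len(prompt):]


def speculative_decode(target: Model, draft: Model, prompt: list[int], n: int, k: int = 4):
    """Greedy speculative decoding; returns (tokens, number of target verification passes)."""
    out = list(prompt)
    passes = 0
    while len(out) - len(prompt) < n:
        # 1. The cheap draft model proposes k tokens autoregressively.
        proposal: list[int] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2. The target scores all k + 1 positions. A real engine does this in ONE
        #    batched forward pass; the loop below only simulates that pass.
        passes += 1
        for i in range(k + 1):
            expected = target(out + proposal[:i])
            if i == k or proposal[i] != expected:
                # Keep the accepted prefix, then the target's own token (correction or bonus).
                out.extend(proposal[:i])
                out.append(expected)
                break
    return out[len(prompt):][:n], passes


def target(ctx: list[int]) -> int:
    """Toy 'base model': a deterministic next-token rule over a 50-token vocabulary."""
    return (sum(ctx) * 7 + len(ctx)) % 50


def draft(ctx: list[int]) -> int:
    """Toy 'speculator': agrees with the target except when len(ctx) is a multiple of 5."""
    t = target(ctx)
    return t if len(ctx) % 5 else (t + 1) % 50


prompt = [1, 2, 3]
tokens, passes = speculative_decode(target, draft, prompt, n=12, k=4)
assert tokens == greedy_decode(target, prompt, n=12)  # same output as plain decoding
print(passes)  # → 3 verification passes instead of 12 sequential target passes
```

The speedup comes from replacing many sequential base-model passes with fewer, wider ones. When the speculator guesses wrong, the loop only loses the rejected draft tokens, never correctness.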

Can speculative decoding really make LLMs faster?

Yes. Speculative decoding guesses future tokens and verifies them in parallel, speeding up generation while keeping output quality high. This optimization technique is ideal for real-time apps. Predibase offers Turbo LoRA, a proprietary speculation technique that customizes the speculator to your task, resulting in even greater performance gains and higher throughput.
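How much faster speculation gets depends mostly on how often the base model accepts the speculator's guesses. A common simplifying assumption is that each drafted token is accepted independently with probability alpha. Under that assumption, drafting k tokens yields an expected 1 + alpha + alpha^2 + ... + alpha^k = (1 - alpha^(k+1)) / (1 - alpha) tokens per base-model forward pass. The sketch below evaluates that formula. The independence assumption is an idealization, not a measured property of any particular speculator.

```python
def expected_tokens_per_pass(alpha: float, k: int) -> float:
    """Expected tokens per base-model forward pass when k tokens are drafted.

    Assumes each draft token is accepted independently with probability alpha.
    Equals 1 + alpha + ... + alpha^k: the base model's own token plus the
    expected number of accepted draft tokens.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if k < 0:
        raise ValueError("k must be non-negative")
    if alpha == 1.0:
        return float(k + 1)  # every draft accepted, plus the base model's own token
    return (1 - alpha ** (k + 1)) / (1 - alpha)


for alpha in (0.0, 0.5, 0.8):
    print(alpha, round(expected_tokens_per_pass(alpha, k=4), 2))
```

With k = 4, an acceptance rate of 0.8 gives about 3.36 tokens per pass, versus 1 for plain decoding. Wall-clock gains are smaller because drafting and verification also cost compute. This is one reason speculation pays off most when the GPU has spare capacity, and why a task-specific speculator with a higher acceptance rate helps.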

Why does inference speed matter in AI?

Inference speed affects both user experience and cost. Faster inference means real-time interaction, lower latency, and more efficient GPU usage. Teams managing production applications invest heavily in inference techniques and hardware optimizations to improve throughput, latency, and scalability.