Make sure to sign up for our monthly newsletter to receive all the latest AI and LLM news, tutorials and product updates.

Happy holidays from Predibase! It has been an undeniably exciting year for AI, and we’re happy to share the first edition of our newsletter, Fine-Tuned. In this edition, we look back at some of our best-attended webinars and most-read blog posts and share a few exciting recent product updates, including support for fine-tuning and serving Mixtral-8x7B.

Going forward, this newsletter will explore emerging best practices for building production AI, share hands-on tutorials, invite you to upcoming webinars and events, and highlight updates to the Predibase platform and our open source projects Ludwig and LoRAX.

Happy New Year!

Featured Event

Fine-Tuning Zephyr-7B to Analyze Customer Support Call Logs

Join us on February 1st at 10:00 am PT to learn how you can leverage open source LLMs to automate one of the most time-consuming tasks in customer support: classifying customer issues. You will learn how to fine-tune an open source LLM with just a few lines of code, at a fraction of the cost of using a commercial LLM, and how to easily apply efficient fine-tuning techniques such as LoRA and quantization.

Recent Events + Podcasts

LoRA Land: How We Trained 25 Fine-Tuned Mistral-7B Models that Outperform GPT-4

Fine-Tuning Zephyr-7B to Analyze Customer Support Call Logs

5 Reasons Why Adapters are the Future of Fine-Tuning LLMs

Data Driven: Powering Real-World AI with Declarative AI and Open Source

Featured Blog Post

LoRA Land: Fine-Tuned Open-Source LLMs that Outperform GPT-4

LoRA Land is a collection of 25 fine-tuned Mistral-7B models that consistently outperform their base models by 70% and GPT-4 by 4-15%, depending on the task. All 25 of LoRA Land’s task-specialized large language models (LLMs) were fine-tuned with Predibase for an average of less than $8.00 each, and all of them are served from a single A100 GPU using LoRAX. Learn more!

From the Predibase Blog

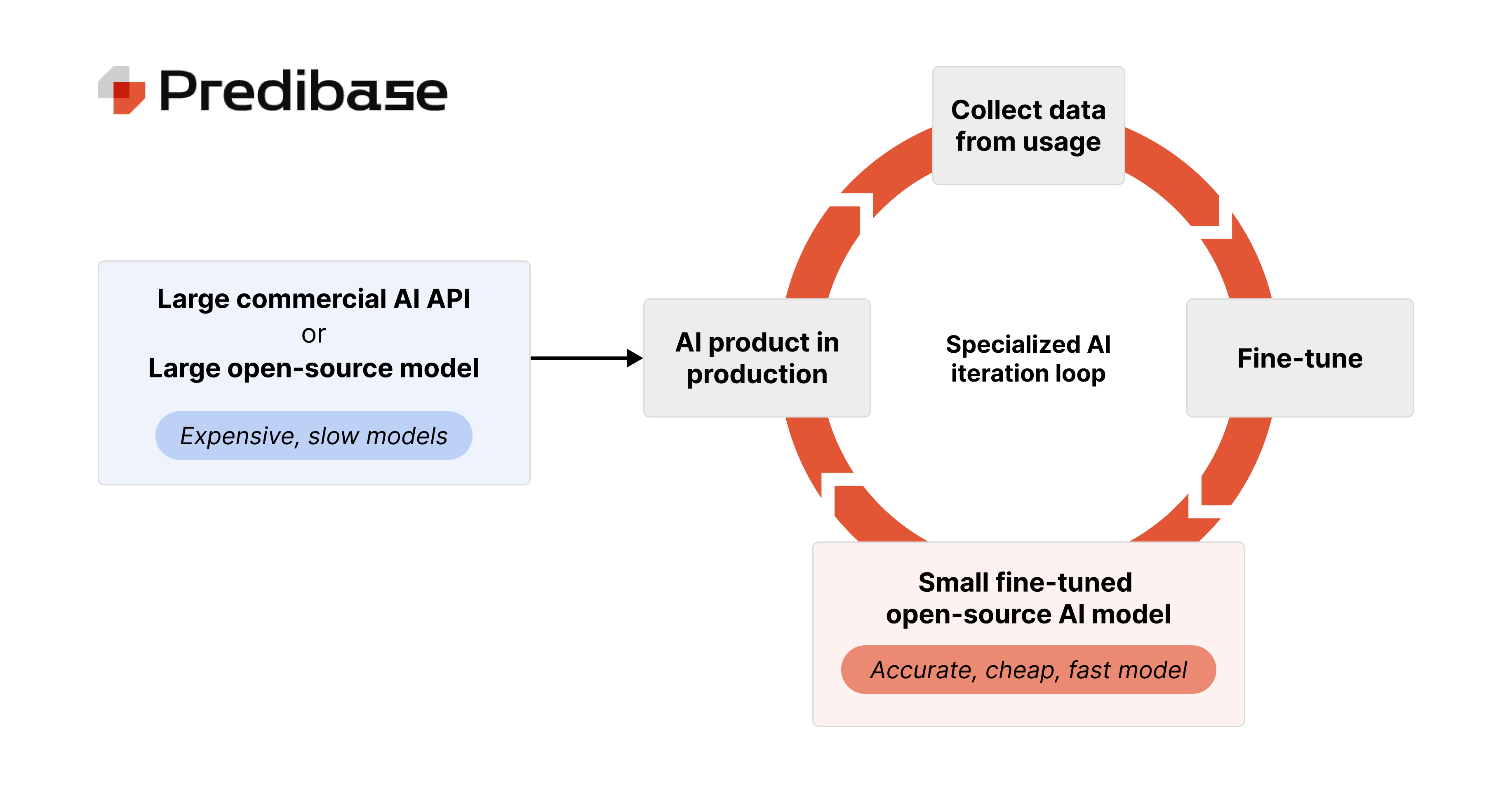

Graduate from OpenAI to Open-Source: 12 best practices for distilling smaller language models from GPT

Fine-Tuning Zephyr-7B to Analyze Customer Support Call Logs

From the Community

Large Language Model Fine-Tuning - Qlik Dork

How to Fine-Tune LLMs without coding?

TechTalks: How to run multiple fine-tuned LLMs for the price of one

Featured Product Update

We’re excited to launch our new prompting experience in the Predibase UI, which lets you prompt serverless endpoints and your fine-tuned adapters without deploying them first. Teams can test their fine-tuned models and compare model iterations entirely from the UI, which makes test and review cycles much faster.

Full Product Updates

Inference Endpoints:

As part of its Inference Endpoints, Predibase now offers instant access to serverless LLMs billed per 1,000 tokens. For a full list of the available serverless deployments, visit our docs or our pricing page. Note: We’re constantly adding support for more models, so please reach out to support@predibase.com with any requests.
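Per-1k-token billing comes down to simple proportional arithmetic. The minimal sketch below estimates the charge for a single request. The rate `HYPOTHETICAL_PRICE_PER_1K` is made up for illustration and is not a real Predibase price, so check the pricing page for actual rates. The sketch also assumes that prompt and completion tokens are billed at the same rate, which may not match how any given endpoint is priced.

```python
from decimal import Decimal

# Hypothetical rate for illustration only; NOT an actual Predibase price.
HYPOTHETICAL_PRICE_PER_1K = Decimal("0.25")


def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  price_per_1k: Decimal = HYPOTHETICAL_PRICE_PER_1K) -> Decimal:
    """Estimate the charge for one request under per-1k-token billing.

    Assumption: prompt and completion tokens share a single rate.
    """
    if prompt_tokens < 0 or completion_tokens < 0:
        raise ValueError("token counts must be non-negative")
    total_tokens = prompt_tokens + completion_tokens
    # Charge scales linearly: (total tokens / 1000) * price per 1k tokens.
    return Decimal(total_tokens) / Decimal(1000) * price_per_1k


if __name__ == "__main__":
    # 1,500 prompt tokens + 500 completion tokens = 2,000 tokens, i.e. 2x the 1k rate.
    print(estimate_cost(1500, 500))
```

Using `Decimal` instead of `float` keeps currency arithmetic exact, which matters when many small per-request charges are summed.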

Fine-tuning and Serving OSS Models:

With Predibase, you can now easily fine-tune and deploy any open-source LLM from Hugging Face with up to 70B parameters. Train state-of-the-art models with our fully featured Python SDK or our intuitive UI, and get complete observability into your deployments afterward.

Dedicated Compute:

Predibase now offers dedicated A100 capacity on demand. If you’re looking for access to state-of-the-art GPUs for training or serving, contact us.

LoRAX New Release:

Predibase released LoRA Exchange (LoRAX) just a few months ago. Since then, we’ve added support for new models, including Llama, Mistral, GPT-2, Qwen, Mixtral, and Phi, as well as new quantization techniques, including bitsandbytes, GPTQ, and AWQ. Stay tuned for even more exciting updates!

Want to try fine-tuning and serving LLMs on the most efficient, cost-effective and easy-to-use AI platform out there? Try Predibase for free with our trial!