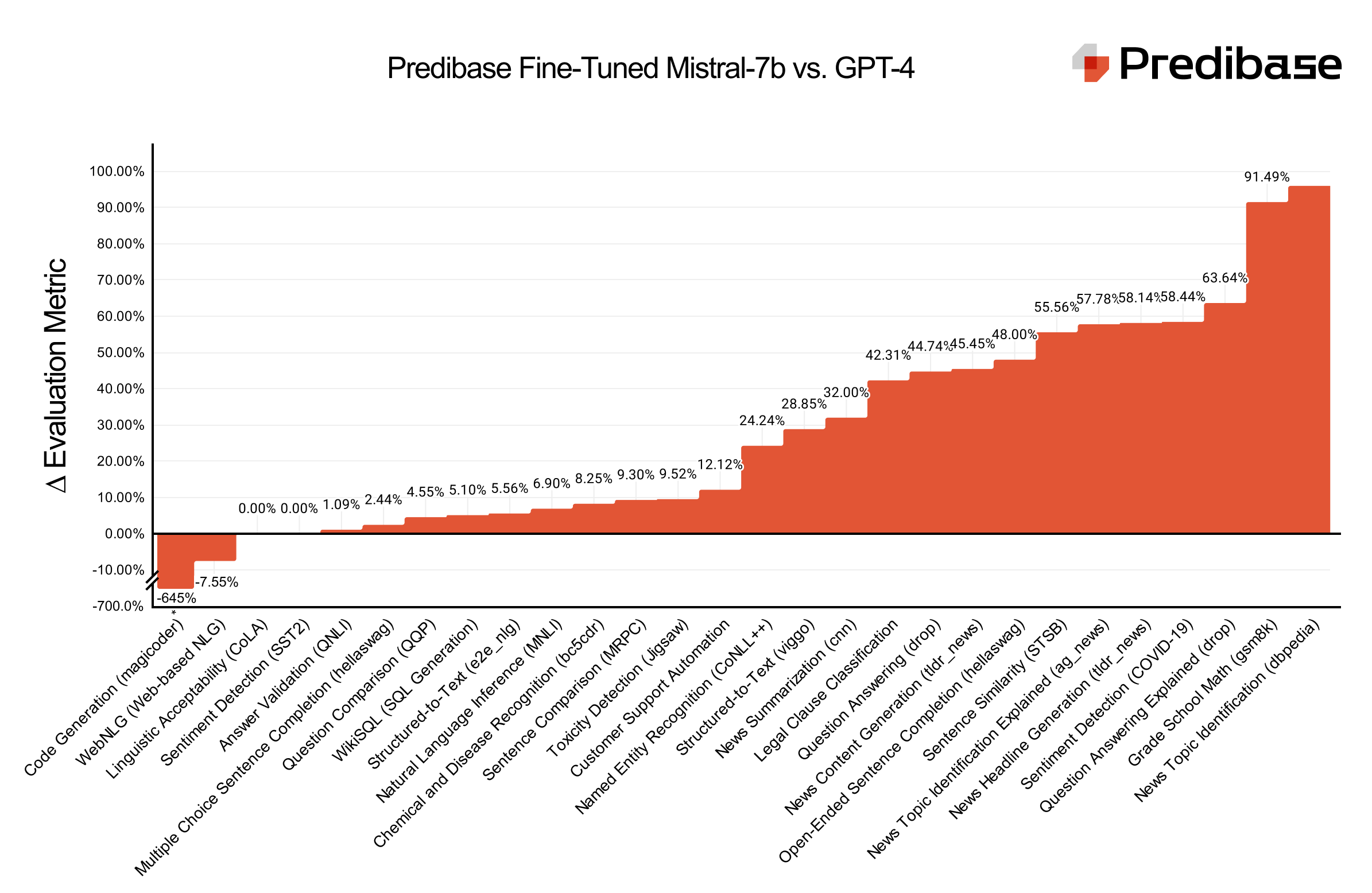

TL;DR: We’re excited to release LoRA Land, a collection of 25 fine-tuned Mistral-7b models that consistently outperform base models by 70% and GPT-4 by 4-15%, depending on the task. LoRA Land’s 25 task-specialized large language models (LLMs) were all fine-tuned with Predibase for less than $8.00 each on average and are all served from a single A100 GPU using LoRAX, our open-source framework that allows users to serve hundreds of adapter-based fine-tuned models on a single GPU. This collection of specialized fine-tuned models, all trained with the same base model, offers a blueprint for teams seeking to efficiently and cost-effectively deploy highly performant AI systems.

Join our webinar on February 29th to learn more!

LLM Benchmarks: 25 fine-tuned Mistral-7b adapters that outperform GPT-4.

The Need for Efficient Fine-Tuning and Serving

With the continuous growth in the number of parameters of transformer-based pretrained language models (PLMs) and the emergence of large language models (LLMs) with billions of parameters, it has become increasingly challenging to adapt them to specific downstream tasks, especially in environments with limited computational resources or budgets. Parameter Efficient Fine-Tuning (PEFT) and Quantized Low Rank Adaptation (QLoRA) offer an effective solution by reducing the number of fine-tuning parameters and memory usage while achieving comparable performance to full fine-tuning.
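To make the parameter savings concrete, here is a rough back-of-the-envelope sketch of how a LoRA update shrinks the trainable parameter count for a single weight matrix. The 4096 x 4096 shape and the rank of 8 are illustrative assumptions, not the settings used for LoRA Land.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the full weight matrix W (d_out x d_in) is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a LoRA update W + B @ A,
    where B is (d_out x rank) and A is (rank x d_in)."""
    return rank * (d_out + d_in)


# Illustrative values only (assumed, not taken from the LoRA Land configs).
d_out, d_in, rank = 4096, 4096, 8

full = full_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)
print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}): {lora:,} trainable parameters")
print(f"reduction: {full / lora:.0f}x")
```

Under these assumed values the adapter trains a few hundred times fewer parameters per matrix, which is also why many adapters can share one copy of the base model at serving time.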

Predibase has incorporated these best practices into its fine-tuning platform and, to demonstrate the accessibility and affordability of adapter-based fine-tuning of open-source LLMs, has fine-tuned 25 models for less than $8 each on average in terms of GPU costs.

Fine-tuned LLMs have historically also been very expensive to put into production and serve, requiring dedicated GPU resources for each fine-tuned model. For teams that plan on deploying multiple fine-tuned models to address a range of use cases, these GPU expenses can often be a bottleneck for innovation. LoRAX, the open-source platform for serving fine-tuned LLMs developed by Predibase, enables teams to deploy hundreds of fine-tuned LLMs for the cost of one from a single GPU.

Serverless Fine-tuned Endpoints: Cost-efficiently serve 100s of LLMs on a single GPU.

By building LoRAX into the Predibase platform and serving many fine-tuned models from a single GPU, Predibase is able to offer customers Serverless Fine-tuned Endpoints, meaning users don’t need dedicated GPU resources for serving. This enables:

- Significant cost savings: Only pay for what you use. No more paying for a dedicated GPU when you don’t need it.

- Scalable infrastructure: LoRAX enables Predibase’s serving infrastructure to scale with your AI initiatives. Whether you’re testing one fine-tuned model or deploying one hundred in production, our infra meets you where you are.

- Instant deployment and prompting: By not waiting for a cold GPU to spin up before prompting each fine-tuned adapter, you can test and iterate on your models much faster.

These underlying technologies and fine-tuning best practices built into Predibase significantly simplified the process of creating this collection of fine-tuned LLMs. As you’ll see, we were able to create these task-specific models that outperform GPT-4 with mostly out of the box training configurations.

Methodology

We first chose representative datasets, then we fine-tuned the models with Predibase and analyzed the results. We’ll cover each step in more detail.

Dataset Selection

We selected our datasets from ones that were widely available and either commonly used in benchmarking or as a proxy for industry tasks. Datasets that reflected common tasks in industry include content moderation (Jigsaw), SQL generation (WikiSQL), and sentiment analysis (SST2). We’ve also included evaluations on datasets that are more commonly evaluated in research, such as CoNLL++ for NER, QQP for question comparison, and many others. The tasks we fine-tuned span from classical text generation to more structured output and classification tasks.

Table of LoRA Land datasets spanning academic benchmarks, summarization, code generation, and more.

The length of the input texts varied substantially across tasks, ranging from relatively short texts to exceedingly long documents. Many datasets exhibited a long-tail distribution, where a small number of examples have significantly longer sequences than the average. To balance accommodating longer sequences against computational efficiency, we capped the maximum sequence length used for training at the 95th percentile (p95) of text length.
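As a minimal sketch of the p95 cutoff described above, the snippet below picks the 95th-percentile length from a list of per-example lengths and truncates anything longer. The nearest-rank percentile definition, the use of token counts, and the sample numbers are all assumptions made for illustration.

```python
def percentile_length(lengths: list[int], pct: int = 95) -> int:
    """Return the nearest-rank percentile of a list of sequence lengths."""
    if not lengths:
        raise ValueError("lengths must not be empty")
    ordered = sorted(lengths)
    # 1-based nearest-rank index, computed with integer ceiling division.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[max(rank, 1) - 1]


# Hypothetical token counts with one long-tail outlier.
token_counts = [12, 15, 18, 20, 22, 25, 27, 30, 31, 33,
                35, 36, 38, 40, 41, 45, 48, 52, 60, 900]

max_len = percentile_length(token_counts)
truncated = [min(n, max_len) for n in token_counts]
print("max sequence length:", max_len)
print("longest example after truncation:", max(truncated))
```

With a cutoff like this, the single 900-token outlier no longer dictates the padded sequence length for the whole dataset.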

We define fixed splits for reproducibility based on published train-validation-test splits, when available.

Training Configuration Template

Predibase is built on top of the open-source Ludwig framework, which makes it possible to define fine-tuning tasks through a simple YAML configuration file. We generally left the default configuration in Predibase untouched, mostly focusing on editing the prompt template and the outputs. While Predibase allows users to manually specify various fine-tuning parameters, the default values have been refined by fine-tuning hundreds of models to maximize performance on most tasks out of the box.

Here is an example of one of the configurations we used:

    prompt:
      template: >-
        FILL IN YOUR PROMPT TEMPLATE HERE
    output_feature: label_and_explanation
    preprocessing:
      split:
        type: fixed
        column: split
    adapter:
      type: lora
    quantization:
      # Uses bitsandbytes.
      bits: 4
    base_model: mistralai/Mistral-7b-v0.1

Simple YAML template for fine-tuning LoRA adapters for Mistral models.
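Since only the prompt template and the output change from task to task, one way to picture the workflow is a shared template with two task-specific fields. The sketch below mirrors the configuration above as a plain Python dict; it does not call Ludwig or Predibase, and the `make_task_config` helper, the example prompt, and the `label` column are hypothetical.

```python
import copy
import json

# Mirrors the template shown above; only the fields that appear there.
BASE_CONFIG = {
    "prompt": {"template": "FILL IN YOUR PROMPT TEMPLATE HERE"},
    "output_feature": "label_and_explanation",
    "preprocessing": {"split": {"type": "fixed", "column": "split"}},
    "adapter": {"type": "lora"},
    "quantization": {"bits": 4},
    "base_model": "mistralai/Mistral-7b-v0.1",
}


def make_task_config(prompt_template: str, output_feature: str) -> dict:
    """Copy the shared template and change only the task-specific fields."""
    config = copy.deepcopy(BASE_CONFIG)
    config["prompt"]["template"] = prompt_template
    config["output_feature"] = output_feature
    return config


# Hypothetical task: the prompt text and the "label" column are made up.
sentiment_config = make_task_config(
    prompt_template="Classify the sentiment of this sentence: {sentence}",
    output_feature="label",
)
print(json.dumps(sentiment_config, indent=2))
```

Deep-copying the base dict keeps the shared defaults untouched, so every task starts from the same adapter and quantization settings.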

Prompt Selection

When fine-tuning an LLM, users can define a prompt template to apply to each datapoint in the dataset to instruct the model about the specifics of the task. We deliberately chose prompt templates that would make the task fair for both our adapters and for instruction-tuned models like GPT-4. For example, instead of passing in a template that simply says “{text}”, the prompt includes detailed instructions about the task. Single-shot and few-shot examples are provided for tasks that require additional context like named entity recognition and data-to-text.
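To illustrate the difference between a bare “{text}” template and one with task instructions, here is a small sketch of how a template might be applied to each datapoint. The template wording, the `{text}` column name, and the `render_prompt` helper are hypothetical and are not the prompts used for LoRA Land.

```python
# Hypothetical template; the wording and the {text} column name are assumptions.
PROMPT_TEMPLATE = (
    "You are given a sentence from a movie review.\n"
    "Classify its sentiment as 0 (negative) or 1 (positive).\n"
    "Respond with the number only.\n\n"
    "Sentence: {text}\n"
    "Label: "
)


def render_prompt(template: str, row: dict) -> str:
    """Fill the template's {placeholders} with values from one dataset row."""
    return template.format(**row)


row = {"text": "A warm, funny and engaging film."}
print(render_prompt(PROMPT_TEMPLATE, row))
```

Because the instructions live in the template, the same rendered prompt can be sent unchanged to a fine-tuned adapter and to an instruction-tuned model.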

Evaluation Metrics

We created a parallelized evaluation harness that sends high volumes of queries to Predibase's LoRAX-powered REST APIs, allowing us to collect thousands of responses from OpenAI, Mistral, and fine-tuned models in a matter of seconds.
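The general shape of such a harness can be sketched with a thread pool that fans prompts out concurrently. This is not the harness we used: `query_model` is a hypothetical stub standing in for a real HTTP call, and the worker count is an arbitrary assumption.

```python
from concurrent.futures import ThreadPoolExecutor


def query_model(prompt: str) -> str:
    """Placeholder for one REST call to a model endpoint.

    Hypothetical stub: a real harness would send an HTTP request here.
    """
    return prompt.upper()


def run_eval(prompts: list[str], max_workers: int = 32) -> list[str]:
    """Send all prompts concurrently and return responses in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(query_model, prompts))


responses = run_eval(["first prompt", "second prompt", "third prompt"])
print(responses)
```

Threads suit this workload because each request spends most of its time waiting on the network, and `pool.map` keeps responses aligned with their prompts for scoring.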

To evaluate model quality, we assess each adapter on fixed 1,000-sample subsets of held-out test data that were excluded from training. We typically use accuracy for classification tasks and ROUGE for generation tasks. However, where the models' output types do not match (for example, when our adapter produces an index while GPT-4 generates the actual class), we design custom metrics to allow a fair comparison of scores.
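The sketch below illustrates the three ideas in this section: exact-match accuracy, a label normalizer that reconciles index outputs with class-name outputs, and a simplified ROUGE-L score. It is an illustration under stated assumptions, not our evaluation code: the source does not specify which ROUGE variant was used, and the class names and model outputs are made up.

```python
def accuracy(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions that exactly match their reference."""
    matches = sum(p == r for p, r in zip(predictions, references))
    return matches / len(references)


def normalize_label(output: str, class_names: list[str]) -> str:
    """Map an index ("1") or a class name ("Positive") to a lowercase class name."""
    text = output.strip().lower()
    if text.isdecimal() and int(text) < len(class_names):
        return class_names[int(text)]
    return text


def rouge_l_f1(prediction: str, reference: str) -> float:
    """Simplified ROUGE-L: F1 over the longest common subsequence of whitespace tokens."""
    pred, ref = prediction.split(), reference.split()
    if not pred or not ref:
        return 0.0
    # Dynamic-programming table holding LCS lengths for every prefix pair.
    table = [[0] * (len(ref) + 1) for _ in range(len(pred) + 1)]
    for i, p in enumerate(pred, 1):
        for j, r in enumerate(ref, 1):
            if p == r:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    lcs = table[-1][-1]
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(pred), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


# Hypothetical outputs: one model emits class indices, the other class names.
classes = ["negative", "positive"]
index_outputs = ["1", "0", "1"]
name_outputs = ["Positive", "negative", "negative"]
gold = ["positive", "negative", "positive"]

print(accuracy([normalize_label(o, classes) for o in index_outputs], gold))
print(accuracy([normalize_label(o, classes) for o in name_outputs], gold))
print(rouge_l_f1("the cat sat on the mat", "the cat is on the mat"))
```

Normalizing both output styles to the same label space before scoring is what keeps the accuracy comparison fair across models.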

We plan to publish a comprehensive paper in the coming weeks, providing an in-depth explanation of our methodology and findings.

Results

| Adapter name | Dataset | Metric | Fine-Tuned | GPT-4 | GPT-3.5-turbo | Mistral-7b-instruct | Mistral-7b |
|---|---|---|---|---|---|---|---|
| Question Answering Explained (drop) | drop_explained | label_and_explanation | 0.33 | 0.12 | 0.09 | 0 | 0 |
| Named Entity Recognition (CoNLL++) | conllpp | rouge | 0.99 | 0.75 | 0.81 | 0.65 | 0.12 |
| Toxicity Detection (Jigsaw) | jigsaw | accuracy | 0.84 | 0.76 | 0.74 | 0.52 | 0 |
| News Topic Identification Explained (ag_news) | agnews_explained | label_and_explanation | 0.45 | 0.19 | 0.23 | 0.25 | 0 |
| Sentence Comparison (MRPC) | glue_mrpc | accuracy | 0.86 | 0.78 | 0.68 | 0.65 | 0 |
| Sentence Similarity (STSB) | glue_stsb | stsb | 0.45 | 0.2 | 0.17 | 0 | 0 |
| WebNLG (Web-based Natural Language Generation)* | webnlg | rouge | 0.53 | 0.57 | 0.52 | 0.51 | 0.17 |
| Question Comparison (QQP) | glue_qqp | accuracy | 0.88 | 0.84 | 0.79 | 0.68 | 0 |
| News Content Generation (tldr_news) | tldr_news | rouge | 0.22 | 0.12 | 0.12 | 0.17 | 0.15 |
| News Headline Generation (tldr_news) | tldr_news | rouge | 0.43 | 0.18 | 0.17 | 0.17 | 0.1 |
| Linguistic Acceptability (CoLA) | glue_cola | accuracy | 0.87 | 0.87 | 0.84 | 0.39 | 0 |
| Customer Support Automation | customer_support | accuracy | 0.99 | 0.87 | 0.76 | 0 | 0 |
| Open-Ended Sentence Completion (hellaswag) | hellaswag_processed | rouge | 0.25 | 0.13 | 0.11 | 0.14 | 0.05 |
| WikiSQL (SQL Generation) | wikisql | rouge | 0.98 | 0.93 | 0.89 | 0.27 | 0.35 |
| Sentiment Detection (SST2) | glue_sst2 | accuracy | 0.95 | 0.95 | 0.89 | 0.65 | 0 |
| Question Answering (drop) | drop | rouge | 0.76 | 0.42 | 0.11 | 0.11 | 0.02 |
| News Summarization (cnn) | cnn | rouge | 0.25 | 0.17 | 0.18 | 0.17 | 0.14 |
| Grade School Math (gsm8k) | gsm8k | rouge† | 0.47 | 0.04 | 0.39 | 0.29 | 0.05 |
| Structured-to-Text (viggo) | viggo | rouge | 0.52 | 0.37 | 0.37 | 0.36 | 0.15 |
| Structured-to-Text (e2e_nlg) | e2e_nlg | rouge | 0.54 | 0.51 | 0.46 | 0.49 | 0.18 |
| Chemical and Disease Recognition (bc5cdr) | bc5cdr | rouge | 0.97 | 0.89 | 0.73 | 0.7 | 0.18 |
| Multiple Choice Sentence Completion (hellaswag) | hellaswag | accuracy | 0.82 | 0.8 | 0.51 | 0 | 0.03 |
| Legal Clause Classification | legal | rouge | 0.52 | 0.3 | 0.27 | 0.2 | 0.03 |
| Sentiment Detection (COVID-19) | covid | accuracy | 0.77 | 0.32 | 0.31 | 0 | 0 |
| Natural Language Inference (MNLI) | glue_mnli | accuracy | 0.87 | 0.81 | 0.51 | 0.27 | 0 |
| News Topic Identification (dbpedia) | dbpedia | dbpedia | 0.99 | 0.04 | 0.06 | 0.01 | 0 |
| Code Generation (magicoder)* | magicoder | humaneval | 0.11 | 0.82 | 0.49 | 0.05 | 0.01 |

† ROUGE is a common metric for evaluating the efficacy of LLMs but is less representative of the model’s ability in this case. GSM8K would have required a different evaluation metric.

* We observed that when fine-tuning the Mistral-7B base model with our default configuration on two datasets (Magicoder and WebNLG), performance was lower than GPT-4's. We are continuing to investigate these results, and details will be shared in our upcoming paper.

Of the 27 adapters we provided, 25 match or surpass GPT-4 in performance. In particular, we found that adapters trained on language-based tasks, rather than STEM-based ones, tended to have higher performance gaps over GPT-4.
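The count above can be reproduced directly from the Fine-Tuned and GPT-4 columns of the results table. The snippet below is a small sanity check using those published scores; the variable names and the short labels for the two tldr_news adapters are our own.

```python
# (dataset, fine-tuned score, GPT-4 score), copied from the results table.
RESULTS = [
    ("drop_explained", 0.33, 0.12),
    ("conllpp", 0.99, 0.75),
    ("jigsaw", 0.84, 0.76),
    ("agnews_explained", 0.45, 0.19),
    ("glue_mrpc", 0.86, 0.78),
    ("glue_stsb", 0.45, 0.2),
    ("webnlg", 0.53, 0.57),
    ("glue_qqp", 0.88, 0.84),
    ("tldr_news (content)", 0.22, 0.12),
    ("tldr_news (headline)", 0.43, 0.18),
    ("glue_cola", 0.87, 0.87),
    ("customer_support", 0.99, 0.87),
    ("hellaswag_processed", 0.25, 0.13),
    ("wikisql", 0.98, 0.93),
    ("glue_sst2", 0.95, 0.95),
    ("drop", 0.76, 0.42),
    ("cnn", 0.25, 0.17),
    ("gsm8k", 0.47, 0.04),
    ("viggo", 0.52, 0.37),
    ("e2e_nlg", 0.54, 0.51),
    ("bc5cdr", 0.97, 0.89),
    ("hellaswag", 0.82, 0.8),
    ("legal", 0.52, 0.3),
    ("covid", 0.77, 0.32),
    ("glue_mnli", 0.87, 0.81),
    ("dbpedia", 0.99, 0.04),
    ("magicoder", 0.11, 0.82),
]

at_least_gpt4 = [name for name, fine_tuned, gpt4 in RESULTS if fine_tuned >= gpt4]
below_gpt4 = [name for name, fine_tuned, gpt4 in RESULTS if fine_tuned < gpt4]

print(f"{len(at_least_gpt4)} of {len(RESULTS)} adapters match or surpass GPT-4")
print("below GPT-4:", below_gpt4)
```

Note that scores are compared as reported, so the two ties (glue_cola and glue_sst2) count as matching GPT-4.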

Try the Fine-Tuned Models Yourself

You can query and try out all the fine-tuned models in the LoRA Land UI. We also uploaded all the fine-tuned models on HuggingFace so that you can easily download and play around with them. If you want to try querying one of our fine-tuned adapters from HuggingFace, you can run the following curl request:

```
curl -d '{"inputs": "What is your name?", "parameters": {"adapter_id": "my_organization/my_adapter", "adapter_source": "hub"}}' \
  -H "Content-Type: application/json" \
  -X POST https://serving.app.predibase.com/$PREDIBASE_TENANT_ID/deployments/v2/llms/llama-2-7b-chat/generate \
  -H "Authorization: Bearer ${API_TOKEN}"
```

The Rise of Small, Task-Specific Models

While organizations have been racing to productionize LLMs ever since the debut of ChatGPT in late 2022, many chose to avoid the pitfalls of commercial LLMs in favor of trying to build their own. Leading commercial LLMs can help teams develop proofs of concept and start generating quality results without any training data, but they come with trade-offs in terms of:

- Control: Giving up ownership of model weights

- Cost: Paying an unsustainable premium for inference

- Reliability: Struggling to maintain performance on a constantly changing model

To overcome these challenges, many teams turned to either building their own Generative Pre-trained Transformer (GPT) from scratch or fine-tuning an entire model with billions or trillions of parameters. These approaches, however, are prohibitively expensive and require enormous computational resources that are out of reach for most organizations.

This in turn has driven the adoption of smaller, specialized LLMs and efficient approaches like the above-mentioned PEFT and QLoRA. These techniques reduce the training requirements (compute, time, and data) by orders of magnitude and make developing fine-tuned LLMs like those hosted in LoRA Land accessible even for teams with limited resources and smaller budgets.

We built LoRA Land to provide a real world example of how smaller, task-specific fine-tuned models can cost-effectively outperform leading commercial alternatives. Predibase makes it much faster and far more resource efficient for organizations to fine-tune and serve their own LLMs, and we're happy to provide the tools and infrastructure for teams that want to start deploying specialized LLMs to power their business.

- Try LoRA Land yourself

- Find all of the fine-tuned models on HuggingFace

- Start fine-tuning on Predibase with $25 in free credits