When we started Predibase in early 2021, we were on a mission to democratize deep learning. At the time, AI was called machine learning and was mostly the domain of data scientists and researchers. But we believed that AI’s true impact would be realized once more people could build with it.

A lot has changed since March 2021. In just a few short years, the AI landscape has transformed radically, from primarily tabular models on structured data to general-purpose LLMs that are optimized for unstructured data. And our company has led and evolved with it.

Today, we’re thrilled to announce the next chapter in our journey: Predibase will be joining forces with Rubrik, a leader in cybersecurity. Together, we’ll be building towards a shared vision at the intersection of AI, security, and data – what we believe to be the most important frontier in enterprise software.

Our journey from ML to LLMs

When we launched Predibase, we were the “declarative ML” company. Built on top of Ludwig, an open source project my co-founder had written, our platform made it possible to train deep learning models through a simple configuration-driven language – no ML PhD required.

Our key insight at the time was that while modeling was often in the hands of a few machine learning experts, it was engineering teams who were ultimately responsible for deploying those models into production and on the hook for the impact they unlocked. And those engineers, despite being highly technical themselves, were frequently blocked. There simply weren’t enough AI experts to go around. So we built Predibase for the builders: engineers who wanted to bring ML into their products and just needed a good product with great docs to figure out how.

We saw early traction in both open source and our managed product with developers looking to unblock themselves. Our most popular feature was fine-tuning: teams wanted to take extremely powerful (for 2022) pre-trained deep learning models like BERT and fine-tune them on their own data. These models worked well out of the box and were game-changers for companies looking to go to production.

Then, almost overnight, the game changed.

With the release of GPT-3.5 and ChatGPT, the bar for building with AI dropped significantly. Engineers could now access state-of-the-art models via API, and suddenly deep learning was in the hands of orders of magnitude more people. What we had seen as a slow and steady march toward broader accessibility became an overnight revolution.

Betting on Generative AI

The arrival of LLMs forced us to reflect on what the shift meant for our mission and product.

Through the hype, it was initially hard to build a thesis. We ran a deep analysis of 50+ customer calls from early 2023 with people who were interested in GenAI, learning more about their use cases. The most common things we heard were:

- Unadulterated enthusiasm, but:

  - “I don’t know my use case”

The excitement was there, but it was clear we’d have to make some bets within a fog of war. We drew on our decades of prior experience working in AI and made three key assertions in that frothy environment:

- LLMs were not a fad; they were a new computing primitive and would dominate AI workloads. We would do a ground-up rebuild of our product to be LLM-native.

- An ecosystem of models, including open source, would thrive. There would not be “one model to rule them all”. In 2025, with the rise of open models like DeepSeek, Llama, Qwen, and Mistral, as well as proprietary models like Claude and Gemini, this bet seems obvious, but in 2023 it was borderline controversial.

- Organizational data was going to be a key differentiator. As companies made the jump from prototype to production, they would increasingly look to personalize and tune models with their own data to gain a longer-term competitive edge.

The last point was where we made most of our technical investment: we believed competitive value in AI would accrue not to general-purpose APIs but to how models were customized with proprietary data.

Building a leading GenAI platform

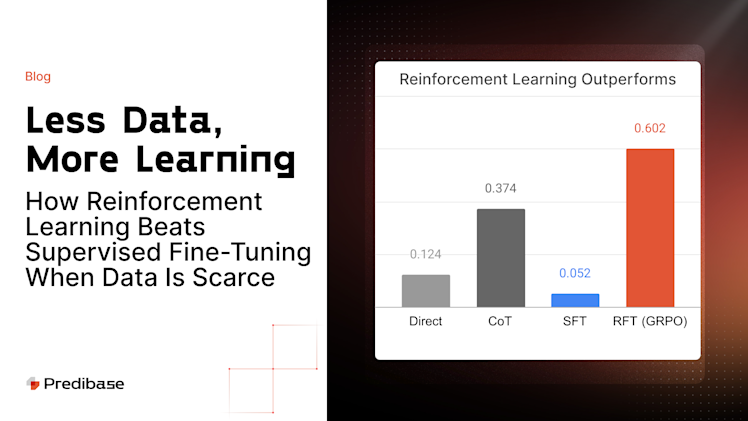

We transformed Predibase to be LLM-first. At first, we focused on post-training – offering tools for supervised fine-tuning (SFT), continued pre-training, and launching the first end-to-end platform for reinforcement fine-tuning (RFT). We saw from early adoption that the further teams got in their AI journey, the more likely they were to take a model off-the-shelf and use one of our post-training methods to make it theirs.

But we noticed LLMs had also changed the conventional user journey of AI practitioners. The order of operations was reversing: you didn’t train a model and then serve it. More often, you would serve a model and then post-train it. This made model inference a top priority for our customers.

To lean into this new reality, we built and open-sourced LoRA Exchange (LoRAX) in 2023, an inference engine designed to serve multiple open-source and fine-tuned models efficiently and reliably. LoRAX grew quickly in the open source community and became the heart of our own internal inference engine. Since then, we’ve layered on additional optimizations like Turbo LoRA that have been game-changers for organizations deploying real, high-volume LLM applications to production.

Today, we’re incredibly proud of the platform we’ve built on our core technologies of model customization (post-training) and model deployment. And as founders, our proudest moments as a team have come from seeing our platform power real-world production systems at leading organizations like Checkr, Convirza, Marsh McLennan, Nubank, and many others.

Why Rubrik, and why now

Through our journey and the changes in AI over the past few years, we’ve held one belief constant: AI’s most valuable breakthroughs come from adapting models to your data. It’s the singular truth that’s allowed us to deliver models that achieve better quality, faster response times, and lower costs. A rare example of being able to have your cake and eat it too.

When we met Rubrik’s executive team, we saw that the same guiding principle was driving their bold vision to become a generational company at the intersection of three major tailwinds: data, security, and AI. We also became convinced that now was the right time to build together. Despite how far the AI ecosystem has come, we believe we’re still in the very early innings, especially when it comes to enterprise adoption. The barrier is no longer interest or the model layer; it’s data access, security, and, most importantly, trust.

Rubrik provides one of the most important prerequisites for enterprise AI: data infrastructure, backup, and security for more than 6,000 enterprise customers worldwide. In the process of building its business, Rubrik has created a platform with both an understanding of organizational data and credentialed access that can unblock some of the most challenging aspects of building secure GenAI.

Our conversations with Rubrik quickly turned into collaboration, and we began exploring how we could build a governed model layer on top of Rubrik’s secure data lake. The more we spoke and met each other’s customers, the more conviction we built in our joint vision. We’re excited for the new kinds of AI use cases our complementary technologies can unlock, grounded in real enterprise constraints and real enterprise value.

Looking ahead to what’s next

We’re in the early innings of a fast-moving and far-reaching tech revolution. While we’re incredibly proud of the technology we’ve built so far, we know that the next inning will require as much innovation as the first, and we’ll be building more aggressively, not less, in the months to come.

Our future roadmap will be guided by our customers, both existing and upcoming, as well as by our underlying belief that data and security are going to be foundational to building high-value generative AI. Whether you’ve been following our journey so far or are new to what we’re doing but interested in the intersection of data, security, and AI, stay tuned for the latest developments. Or, better still, as Bipul has suggested, join us on this journey.

- Join us on Tuesday, July 15th to learn more about how Rubrik + Predibase are powering secure scalable GenAI: register here

- Read the full press release: Rubrik to Acquire Predibase to Accelerate Agentic AI Adoption

Any unreleased services or features referenced in this blog are not currently available and may not be made generally available on time or at all, as may be determined in our sole discretion. Any such referenced services or features do not represent promises to deliver, commitments, or obligations of Predibase or Rubrik, Inc. and may not be incorporated into any contract. Customers should make their purchase decisions based upon services and features that are currently generally available.