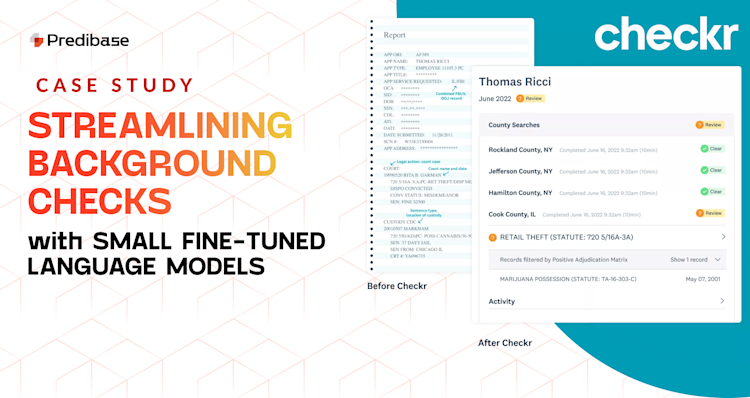

Checkr is a technology company that specializes in delivering modern and compliant background checks for over 100,000 customers of all sizes. Since its founding in 2014, Checkr has leveraged AI and machine learning (ML) to enhance the efficiency, inclusivity, and transparency of the background check process.

I recently had the pleasure of speaking at the LLMOps Summit in San Francisco, where I shared my experience at Checkr building a production-grade LLM classification system that automates the annotation of background checks so that customers can adjudicate them easily. By using Predibase to fine-tune a small open-source language model (SLM), we achieved better accuracy, faster response times, and a 5X reduction in costs compared to traditional GenAI approaches that rely on commercial LLMs. In this blog, I'll walk you through our journey at Checkr and how we made it happen.

Use case: Automating Adjudication with LLMs

Goal: Classify the most complex records with good accuracy

Adjudication is the process of reviewing background check results to determine a candidate's suitability for hiring based on a company's policies. Checkr provides an automated adjudication solution that has been shown to reduce manual reviews by 95%. We use data collected from various vendors to streamline hiring while creating a process that is unbiased, transparent, and compliant. On average, we conduct millions of background checks each month, and 98% of the data is processed efficiently using a tuned logistic regression model.

The remaining 2%, however, are complex cases that require categorizing data records into 230 distinct categories. Our original solution for this 2% of data was a Deep Neural Network (DNN) that could classify only half of these cases (1% of the total) with decent accuracy, leaving the other 1% unclassified and creating more work for the customer. We needed a system with better performance, so we decided to explore different techniques using LLMs.

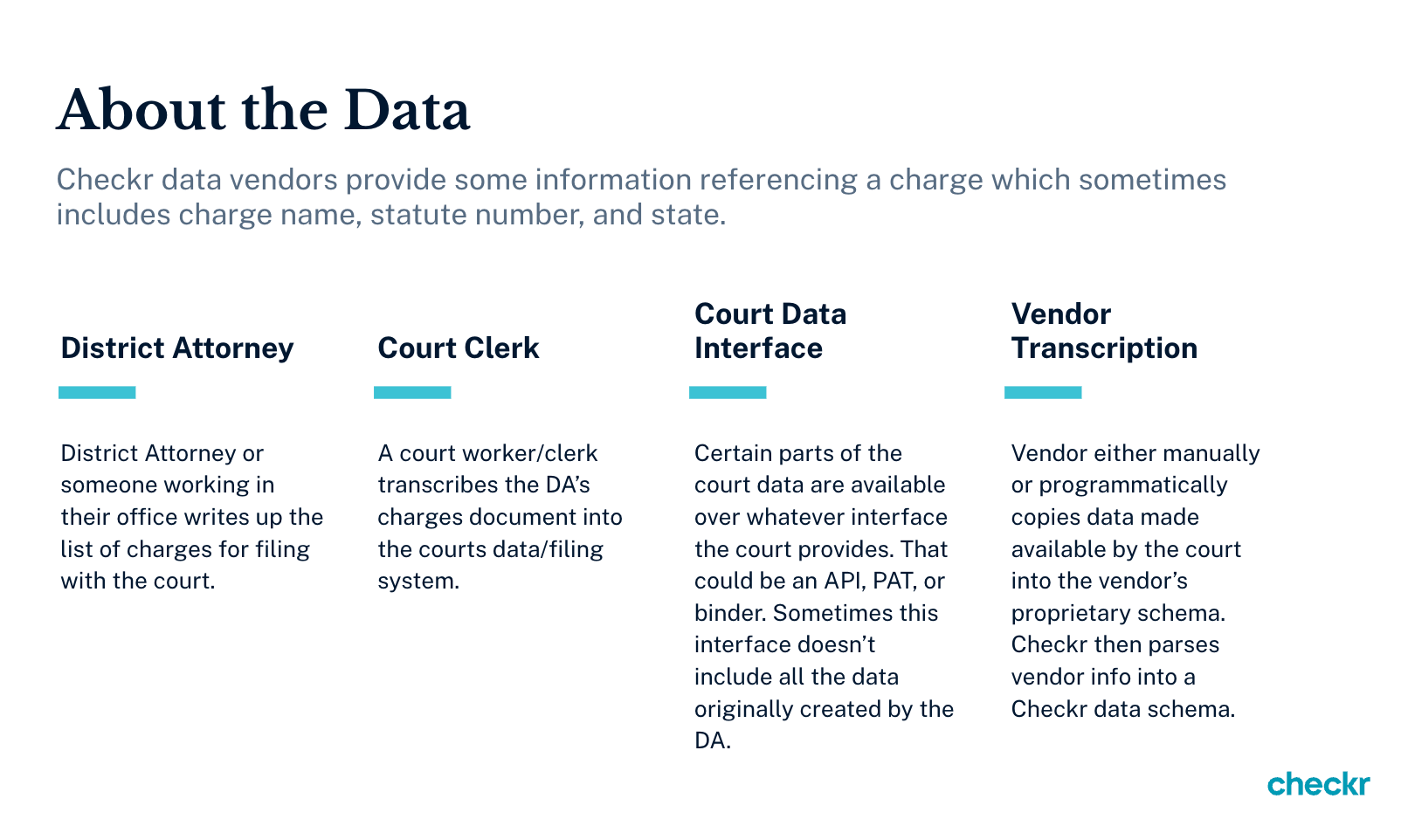

The data we utilize for adjudication is messy and complex, requiring heavy processing and classification.

Challenge: Deliver high accuracy, low latency, and cost efficiency

These complex adjudication cases require careful handling and pose the following challenges and requirements:

- Complex Data: The remaining 2% of background checks involve noisy unstructured text data that is challenging for both human reviewers and even automated models to process. Manual human reviews take hours.

- Synchronous Task: This task is synchronous and must meet low-latency SLAs in order to provide our customers with near real-time employment insights.

- High Accuracy: Our reports are used to make important decisions about a prospective employee's future. Therefore, it’s critical that our models are highly accurate.

- Reasonable Inference Costs: Achieving our goals must be balanced with maintaining reasonable inference costs.

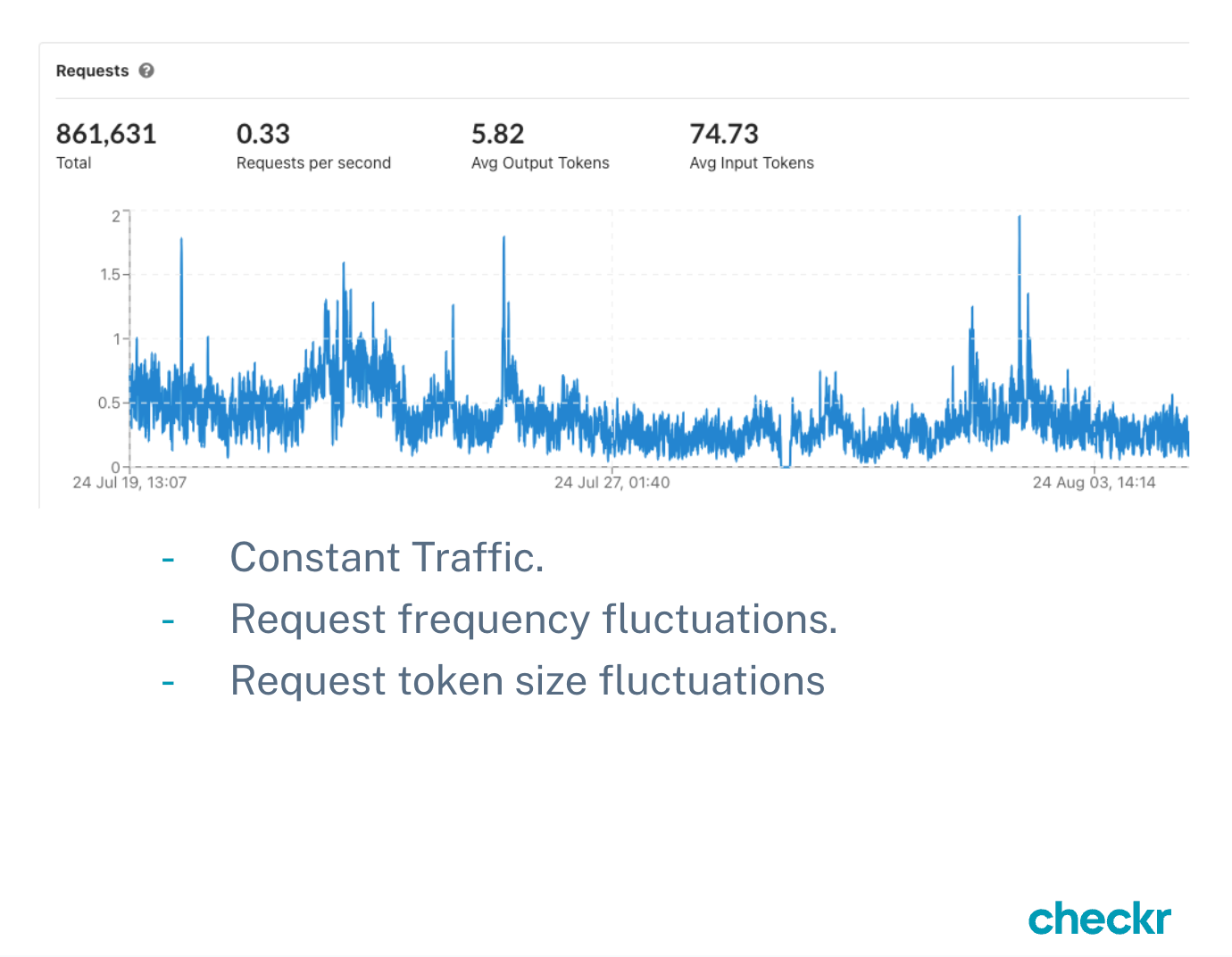

We process millions of tokens a month and need a system that can efficiently scale.

Experiments and Results: From GPT-4 to Fine-tuning Small Language Models (SLMs)

Experimenting with different LLM patterns

In our experiments, I explored several approaches to improving classification accuracy with LLMs. The first experiment used GPT-4 as a general-purpose “Expert LLM”. On the 98% of easier classification cases, the model performed well, achieving 87-88% accuracy. However, it achieved only 80-82% accuracy on the hardest 2% of cases.

When I integrated RAG with our Expert LLM, I achieved an impressive 96% accuracy on the easier dataset, which was well represented in the training set. However, accuracy decreased on the more difficult dataset because the retrieved training-set examples led the LLM away from a better logical conclusion.
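To make the Expert + RAG pattern more concrete, here is a minimal sketch of how retrieved training examples might be folded into a prompt. The Jaccard token-overlap retriever, the `retrieve_examples` and `build_prompt` helpers, the sample charges, and the category labels are all hypothetical stand-ins. The only detail taken from our experiment is that a handful of training examples (six in our case) were placed in the prompt.

```python
def tokenize(text: str) -> set[str]:
    """Lowercase whitespace tokenization; a stand-in for a real embedding model."""
    return set(text.lower().split())


def jaccard(a: set[str], b: set[str]) -> float:
    """Token-overlap similarity between two token sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def retrieve_examples(charge: str, training_set: list[tuple[str, str]], k: int = 6):
    """Return the k labeled training examples most similar to the charge."""
    query = tokenize(charge)
    ranked = sorted(
        training_set,
        key=lambda example: jaccard(query, tokenize(example[0])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(charge: str, examples: list[tuple[str, str]]) -> str:
    """Assemble a few-shot prompt: instructions, retrieved examples, then the query."""
    lines = ["Classify the charge into one category.", ""]
    for text, label in examples:
        lines += [f"Charge: {text}", f"Category: {label}", ""]
    lines += [f"Charge: {charge}", "Category:"]
    return "\n".join(lines)


# Hypothetical labeled records, for illustration only.
TRAINING_SET = [
    ("petty theft under 500", "theft"),
    ("driving under the influence", "dui"),
    ("theft of property", "theft"),
    ("speeding 20 over limit", "traffic"),
]

query = "theft of merchandise under 500"
examples = retrieve_examples(query, TRAINING_SET, k=2)
prompt = build_prompt(query, examples)
```

Because the retrieved examples carry their labels into the prompt, a query that merely looks like a training record can be pulled toward that record's category, which is one plausible reading of the accuracy drop on the difficult dataset.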

For my next experiment, I fine-tuned a much smaller open-source model, Llama-2-7b, and saw significant improvements across all metrics compared to GPT-4. The fine-tuned model achieved 97% accuracy on the easier dataset and 85% on the difficult dataset. This approach was not only fast, with response times typically under half a second, but also cost-effective.

The encouraging results motivated me to explore other methods and I experimented with combining fine-tuned and expert models. Interestingly, this didn’t net any improvements in performance.

| Pattern | Model | Prompt Contents | 98% "easier" dataset | 2% "difficult" dataset | RTT (s) | Cost |
|---|---|---|---|---|---|---|
| Expert LLM | GPT-4 | Charge, instructions, 230 categories | 87.8% | 81.8% | 15 | ~$12k |
| Expert + RAG | Extend: GPT-4 + Training Set | Charge, instructions, 6 examples | 95.8% | 79.3% | 7 | ~$7k |
| Fine-Tuned LLM | Llama-2-7b | Charge | 97.2% | 85.0% | 0.5 | <$800 |
| Fine-Tuned + Expert | Llama-2-chat + GPT-4 | | No gain | No gain | 15 | |

Productionizing our Fine-tuned SLMs with Predibase

During our experimentation we tested several LLM fine-tuning and inference platforms. Ultimately, we landed on Predibase as our platform of choice for their ability to deliver the best performance and reliable support. These are some of the highlights of our work together:

Most accurate fine-tuning results

Our best-performing model was llama-3-8b-instruct, a small open-source LLM that we fine-tuned on Predibase. We achieved an accuracy of 90% for the most challenging 2% of cases, outperforming both GPT-4 and all of our other fine-tuning experiments. Additionally, Predibase consistently provided highly accurate results, whereas some of the other tools we tested were less reliable across fine-tuning runs.

Lowest latency inference

Predibase is specifically optimized for low-latency inference on fine-tuned models and consistently delivers 0.15-second response times for our production traffic, which is 30x faster than our GPT-4 experiments. This is critical for meeting the demands of our customers. Best of all, with open-source LoRAX under the hood, we can serve additional LoRA adapters on Predibase without needing more GPUs.

Significant cost savings

We reduced our inference costs by 5x compared to GPT-4 by fine-tuning and serving SLMs on Predibase. As mentioned above, Predibase also enables us to expand to multiple use cases using multi-LoRA serving on LoRAX, which will further bring down costs as we scale for more use cases.

Easy-to-use platform

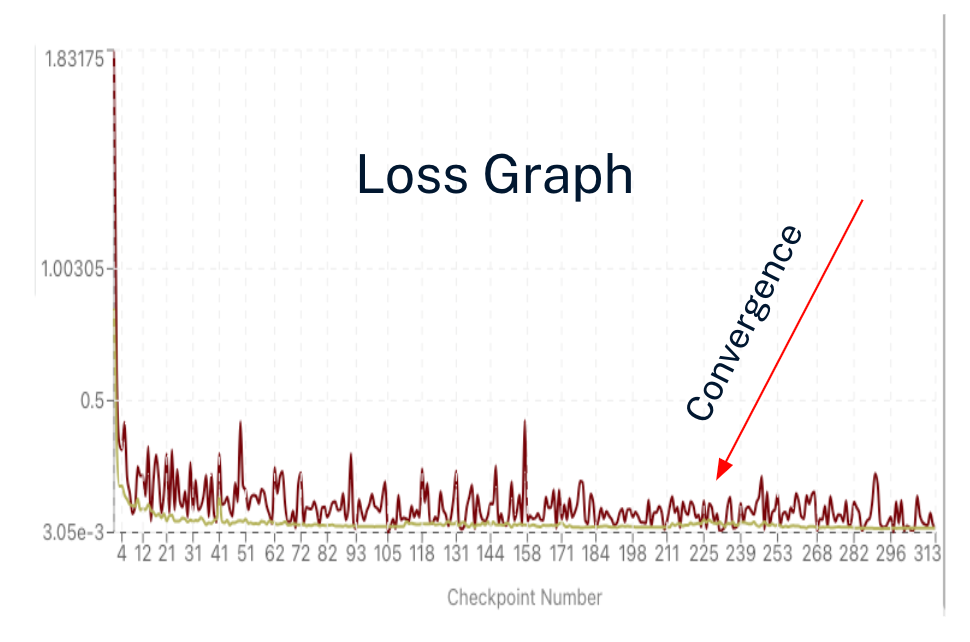

Beyond its robust and developer-friendly SDK, Predibase also offers a user-friendly web app. The web UI makes it easy to manage projects, track model versions, and explore model performance with visualizations like loss graphs. Recently, Predibase has also deployed dashboards for production metrics, which help me understand production efficiency and performance over time. Now, when I deploy another solution on the same machine, I will be able to see the performance impact immediately.

Lessons learned fine-tuning dozens of models

Given the large size of my dataset—comprising 150,000 rows of training data—I faced a number of challenges during my fine-tuning experiments and learned a lot along the way. To help others on their fine-tuning journey, I captured my key findings below:

1. Monitor model convergence

One key takeaway from my experience was the importance of monitoring model convergence. If the model isn't converging as expected, try training without the auto-stopping parameter. Letting training run longer can help the model move past a plateau or local minimum and reach a lower loss, so you don't miss out on potential improvements.
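As a rough illustration of why auto-stopping can end a run too early, the sketch below applies a simple patience-based rule to a made-up validation-loss curve that plateaus before improving again. The `early_stop_epoch` helper, the patience value, and the loss values are hypothetical and are not taken from Predibase's implementation.

```python
def early_stop_epoch(losses, patience=2, min_delta=0.0):
    """Return the epoch index at which a patience-based rule would stop.

    Training stops once the loss has failed to improve on the best value
    seen so far for `patience` consecutive epochs. If the rule never
    triggers, the last epoch index is returned.
    """
    best = float("inf")
    stale_epochs = 0
    for epoch, loss in enumerate(losses):
        if loss < best - min_delta:
            best = loss
            stale_epochs = 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:
                return epoch
    return len(losses) - 1


# Hypothetical validation losses: a plateau around 0.8, then further progress.
val_losses = [1.00, 0.80, 0.79, 0.80, 0.81, 0.60, 0.45, 0.44]

stop_at = early_stop_epoch(val_losses, patience=2)
best_seen_when_stopped = min(val_losses[: stop_at + 1])
best_overall = min(val_losses)

print(f"stopped at epoch {stop_at} with best loss {best_seen_when_stopped}")
print(f"best loss without auto-stopping: {best_overall}")
```

In this toy curve the rule halts on the plateau and never sees the later drop, which is the situation where disabling auto-stopping, or raising the patience, is worth trying.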

2. Fine-tuned models are not as sensitive to hyperparameters

Deep learning models can be sensitive to hyperparameters. However, I found that this sensitivity was less pronounced during fine-tuning, which was a positive discovery because it required fewer trials and less tuning.

3. Use short prompts to extend cost savings

With a production model in place, I experimented with prompt engineering to boost performance, but it had a minimal impact. The silver lining is that you can significantly reduce token usage by keeping prompts concise, as the model's performance is largely independent of them.

4. High temperature and low top K to identify less confident predictions

When working with classifiers, it's helpful to identify subsets of data where the model's confidence is low. This can be done by calculating a confidence score, often from the sum of the log probabilities of the generated tokens. However, I noticed that this method often resulted in a skewed distribution, with the model appearing overly confident and consistently returning high confidence scores.

To tackle this issue, I experimented with adjusting inference parameters. Raising the temperature increased next-token variance, while lowering top_k decreased the number of options the model could choose from. This combination maintained precision but resulted in a broader distribution of confidence scores and helped identify less confident predictions.
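The effect can be sketched with a few lines of arithmetic. The example below scores a prediction as the exponential of the summed token log probabilities, and it assumes those probabilities are computed after temperature scaling and top-k filtering are applied. The logits, the `token_probs` and `sequence_confidence` helpers, and the parameter values are hypothetical and are not our production settings.

```python
import math


def token_probs(logits, temperature=1.0, top_k=None):
    """Softmax over logits after temperature scaling and optional top-k filtering."""
    ranked = sorted(enumerate(logits), key=lambda pair: pair[1], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]
    scaled = [(idx, logit / temperature) for idx, logit in ranked]
    peak = max(value for _, value in scaled)  # subtract the max for stability
    exps = [(idx, math.exp(value - peak)) for idx, value in scaled]
    total = sum(value for _, value in exps)
    return {idx: value / total for idx, value in exps}


def sequence_confidence(step_logits, chosen, temperature=1.0, top_k=None):
    """exp(sum of log probabilities) of the chosen token at each step.

    Each chosen token must survive the top-k filter; here the chosen token
    is always the highest-scoring one, so that holds.
    """
    total_logprob = 0.0
    for logits, token in zip(step_logits, chosen):
        probs = token_probs(logits, temperature, top_k)
        total_logprob += math.log(probs[token])
    return math.exp(total_logprob)


# Two hypothetical two-token predictions; token 0 is chosen at every step.
confident = [[8.0, 1.0, 0.0], [7.0, 0.5, 0.0]]
borderline = [[4.0, 1.0, 0.0], [3.5, 1.0, 0.0]]
chosen = [0, 0]

default_scores = [sequence_confidence(p, chosen) for p in (confident, borderline)]
hot_scores = [
    sequence_confidence(p, chosen, temperature=3.0, top_k=2)
    for p in (confident, borderline)
]

default_gap = default_scores[0] - default_scores[1]
hot_gap = hot_scores[0] - hot_scores[1]
print(f"default: {default_scores}, gap {default_gap:.3f}")
print(f"T=3.0, top_k=2: {hot_scores}, gap {hot_gap:.3f}")
```

With default settings both predictions score high and sit close together. With a higher temperature the borderline prediction drops much further than the confident one, so a threshold can separate them, while the top-ranked token, and therefore the predicted class under greedy decoding, is unchanged.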

5. Predibase PEFT: Matching full fine-tuning efficiency at lower costs

In my exploration of fine-tuning methods, I compared Predibase's Parameter Efficient Fine-Tuning (PEFT) approach with traditional full fine-tuning. Predibase leverages techniques like Low-Rank Adaptation (LoRA), which reduces the number of parameters that need to be trained. With this approach, I was able to reduce my training cost and time while delivering performance comparable to full fine-tuning.
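To give a sense of scale, the sketch below counts trainable parameters for a single weight matrix under full fine-tuning and under LoRA, where the update is factored into two low-rank matrices. The 4096 x 4096 matrix shape and rank 8 are illustrative assumptions, not the configuration we used.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Full fine-tuning updates every entry of the d_out x d_in weight matrix."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """LoRA trains B (d_out x rank) and A (rank x d_in); the base matrix stays frozen."""
    return rank * (d_out + d_in)


# Illustrative shape and rank (assumptions, not taken from our setup).
d_out, d_in, rank = 4096, 4096, 8

full = full_finetune_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}): {lora:,} trainable parameters")
print(f"reduction: {full // lora}x")
```

Under these assumptions the adapter trains 256 times fewer parameters for that matrix, which is where the savings in training cost and time come from.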

Conclusion

Using Predibase, we've managed to train highly accurate and efficient small models, achieve lightning-fast inference, and reduce costs by 5X compared to GPT-4. But most importantly, we’ve been able to build a better product for our customers, leading to more transparent and efficient hiring practices.

If you’d like to learn more, you can:

- Watch my talk from the LLMOps Summit

- Download the slides from my talk

- Try Predibase with $25 in free credits

FAQ

What makes a low-latency LLM deployment possible?

Achieving low-latency LLM performance in production requires more than just a fast model—it depends on smart architecture. Technologies like Turbo LoRA optimize inference for reasoning-heavy tasks, cutting latency by up to 3x. When paired with efficient GPU utilization and adapter-based pipelines, you can maintain real-time responsiveness even at scale.

What infrastructure is needed to bring AI products to life?

AI infrastructure for products must go beyond basic model hosting. You need an end-to-end platform that supports model training, evaluation, deployment, and scaling. This includes fine-tuning workflows, observability, auto-scaling GPU clusters, and integration into real-world applications. Declarative ML systems like Predibase enable teams to build and manage these complex workflows with far less overhead.

What are LoRA adapters and why do they matter for LLM deployment?

LoRA (Low-Rank Adaptation) adapters let you fine-tune massive LLMs efficiently by training only a small set of parameters. This not only reduces cost but allows for adapter-based serving, where multiple customizations can be swapped in and out without retraining or merging. It’s a game-changer for teams iterating fast on multiple use cases.
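Adapter-based serving can be sketched in miniature: one frozen base weight matrix is shared by every request, and a small per-task adapter is applied on top at request time. The toy 2 x 2 weights, the adapter names, and the `forward` helper below are hypothetical, and the usual LoRA scaling factor is omitted for brevity. This is a conceptual illustration, not how LoRAX is implemented.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


# Shared, frozen base weights (toy values).
BASE_W = [[1.0, 0.0], [0.0, 1.0]]

# Each adapter is a pair of low-rank matrices: A is (rank x d_in), B is (d_out x rank).
ADAPTERS = {
    "task_a": {"A": [[1.0, 1.0]], "B": [[0.5], [0.0]]},
    "task_b": {"A": [[1.0, -1.0]], "B": [[0.0], [2.0]]},
}


def forward(x, adapter_id=None):
    """Compute W x, plus the low-rank update B (A x) when an adapter is requested."""
    y = matvec(BASE_W, x)
    if adapter_id is not None:
        adapter = ADAPTERS[adapter_id]
        delta = matvec(adapter["B"], matvec(adapter["A"], x))
        y = [base + update for base, update in zip(y, delta)]
    return y


x = [1.0, 2.0]
print(forward(x))            # base model only
print(forward(x, "task_a"))  # same base weights, task_a adapter
print(forward(x, "task_b"))  # same base weights, task_b adapter
```

Because the base weights are never modified or merged, adding another use case only means adding another small entry to the adapter table.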

What is Turbo LoRA and how does it work?

Turbo LoRA is an advanced inference technique that accelerates the performance of LoRA-adapted LLMs—delivering near-instant response times without sacrificing quality. By restructuring how adapters are loaded and served, Turbo LoRA enables ultra-fast reasoning for production-grade applications like chatbots, agentic workflows, and recommendation engines. Read more about Turbo LoRA.

How does declarative ML streamline the LLM pipeline?

Declarative machine learning abstracts the complexity of model development and deployment. Instead of writing custom training scripts, you define what you want the model to do, and the system handles how it gets done. When applied to the LLM pipeline, declarative ML simplifies fine-tuning, evaluation, and serving—helping teams move from prototype to production faster.

How do SLM benchmarks compare to traditional LLMs in real-world use cases?

Small Language Models (SLMs) often outperform larger models on cost, latency, and efficiency when fine-tuned for specific tasks. Recent SLM benchmarks show that with techniques like LoRA adapters and optimized serving (e.g., Turbo LoRA), SLMs can match or even exceed LLM performance in domain-specific applications—while using a fraction of the compute. At SmallCon, we’ll dive into benchmark results across inference speed, accuracy, and resource usage to highlight where SLMs shine.