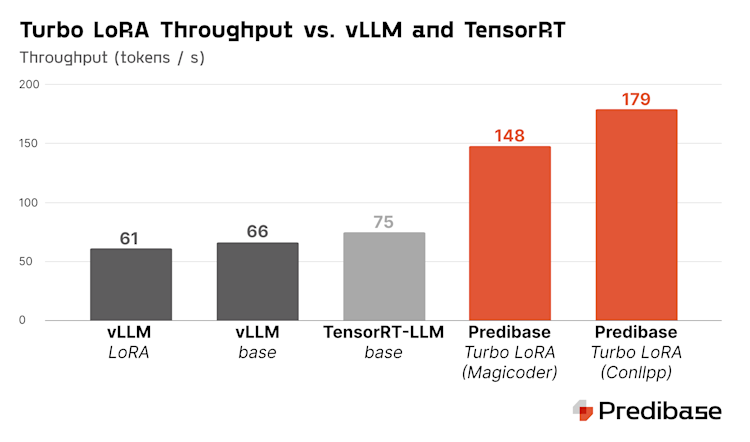

Turbo LoRA is a new parameter-efficient fine-tuning method we’ve developed at Predibase that increases text generation throughput by 2-3x while simultaneously achieving task-specific response quality in line with LoRA. While existing fine-tuning methods focus only on improving response quality – often at the cost of lower throughput – Turbo LoRA actually improves throughput over both standard LoRA and even the base model. This ultimately means lower inference costs, lower latency, and higher accuracy, all in a single adapter that can be created with just one line of code.

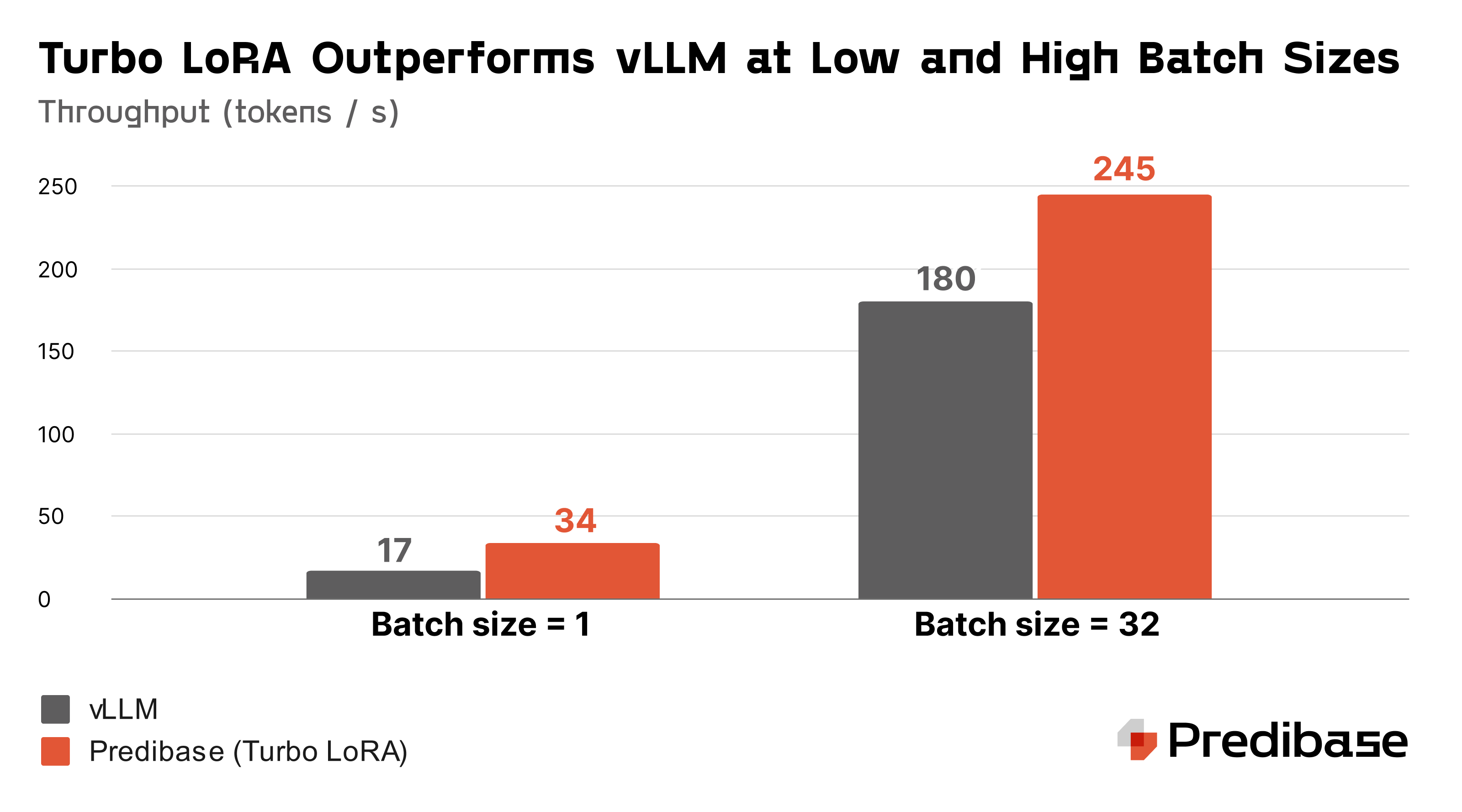

Throughput per request (batch size = 1) on 1x A100 80GB. Turbo LoRA achieves 2-3x higher throughput than OSS solutions for both LoRA and base model inference.

The key to Turbo LoRA’s performance is the marriage of two ideas: low rank adaptation (LoRA) and speculative decoding. While these techniques are most commonly used independently, Turbo LoRA proposes a joint fine-tuning strategy that leverages the more narrow, task-specific set of output sequences to learn to accurately predict several tokens ahead in a single step, without the need for complex verification strategies.

In this blog, we’ll introduce Turbo LoRA and explore the techniques that enable its key benefits, including:

- Faster inference. Speeds up token generation during inference by 2-3x over the base model by generating multiple tokens in a single step.

- More accurate responses. Achieves response quality in line with conventional LoRA.

- Lightweight multi-adapter serving. Introduces only a small number of additional weights on top of LoRA, making it possible to serve dozens of Turbo LoRA adapters on a single GPU with LoRAX.

- Scale to high QPS. Maintains significant speedups even at high batch sizes / queries per second, making it well-suited to high throughput use cases in comparison to existing speculative decoding methods.

Turbo LoRA is available to try today as part of the Predibase platform for fine-tuning and serving task-specific LLMs. You can get started with Predibase and Turbo LoRA with $25 in free credits by signing up here.

LoRA – Higher Quality, Lower Throughput

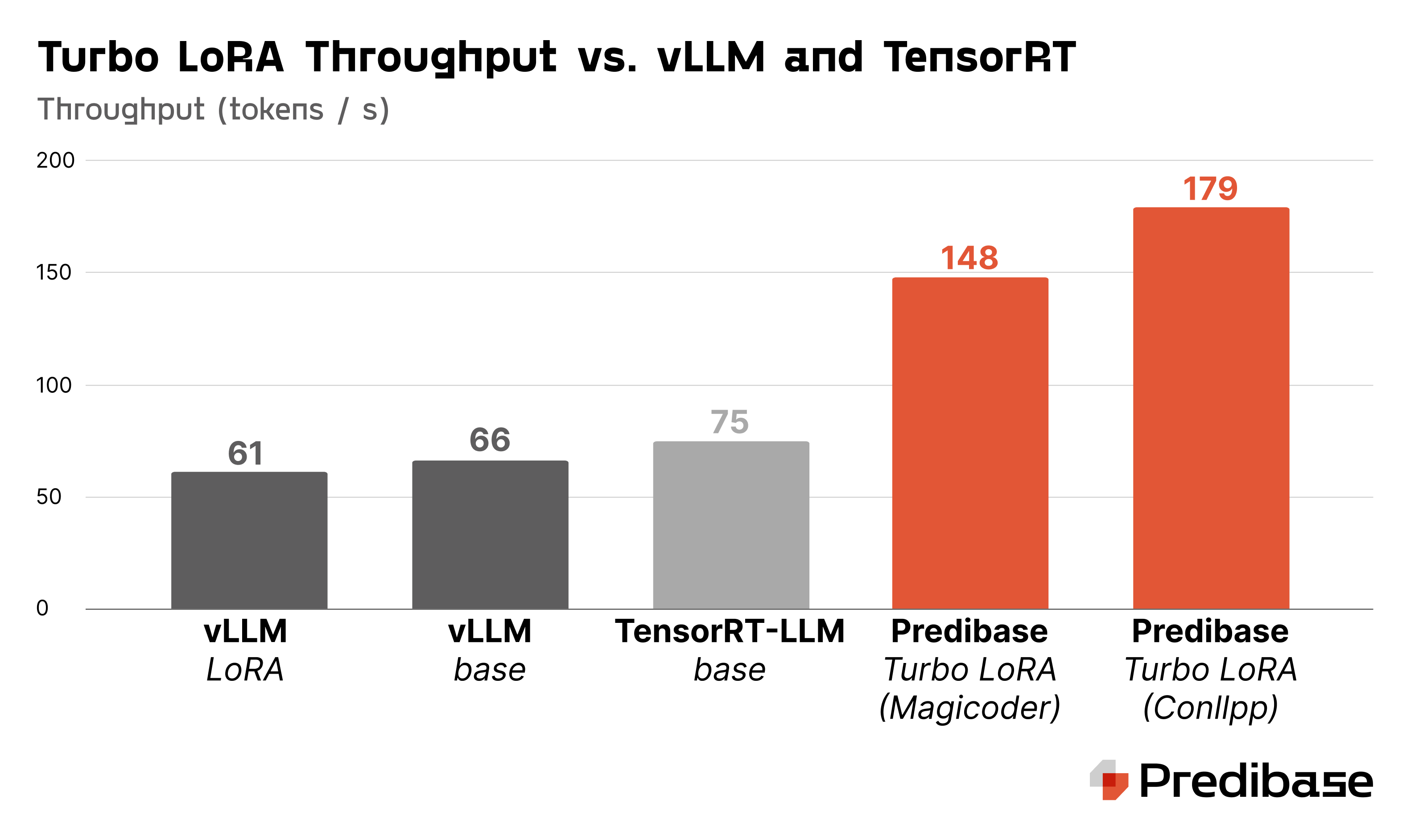

LoRA (Low Rank Adaptation) has become the de facto standard approach for fine-tuning large language models (LLMs) to perform well on specific tasks. Because LoRA has a very small memory footprint compared to the base LLM, hundreds or even thousands of task-specific LoRA adapters can be served on a single GPU using LoRAX, the multi-LoRA inference engine.

LoRA adapts the LLM to improve response quality by inserting new parameters alongside existing layers (most commonly, the Q, K, V projections).

However, one of the common concerns with using LoRA in production is its speed. Because LoRA introduces additional parameters to the model that need to be activated each step, it also increases the time to generate each token. Merging the LoRA weights back into the base model addresses the speed concern but negates all the other benefits of LoRA during inference – namely the ability to serve multiple tasks simultaneously on the same deployment.
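The trade-off between keeping an adapter separate and merging it can be seen in a toy example. The sketch below is a minimal pure-Python illustration, not Predibase or LoRAX code: the matrices, the rank-1 adapter, and the helper names (`lora_forward`, `merge`) are all assumptions made for the example. It shows that the unmerged path does extra work per step (two additional small matrix products), while the merged path produces the same output from a single weight matrix that is now tied to one task.

```python
# Toy LoRA forward pass in pure Python (no ML framework).
# Shapes and values are illustrative assumptions, not Predibase internals.

def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m)."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]

def lora_forward(W, A, B, x, scale=1.0):
    """Unmerged path: base output plus the low-rank update B(Ax)."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))  # extra work on every step
    return [b + scale * u for b, u in zip(base, update)]

def merge(W, A, B, scale=1.0):
    """Merged path: fold the update into a single matrix W + scale * BA."""
    BA = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, BA)]

# Frozen 3x3 base weight and a rank-1 adapter (A is r x d_in, B is d_out x r).
W = [[1.0, 0.0, 2.0], [0.0, 1.0, 0.0], [3.0, 0.0, 1.0]]
A = [[1.0, 2.0, 0.0]]
B = [[0.5], [0.0], [1.0]]
x = [1.0, 1.0, 1.0]

unmerged = lora_forward(W, A, B, x)
merged = matvec(merge(W, A, B), x)
print(unmerged, merged)
```

Both paths give the same vector here. The merged matrix removes the per-step overhead, but a deployment that holds only merged weights can no longer swap `A` and `B` per request.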

Apple recently demonstrated the value of multi-LoRA inference at the 2024 WWDC and explained their approach in detail in a recent blog. In short: models need to be adaptive to individual customer preferences and behaviors, and need to be specialized to specific tasks in order to get value from the smaller, more cost effective models.

Using our open source LoRAX framework released last year, we demonstrated that hundreds of task-specific LoRAs can be served simultaneously on a single device without noticeable degradation in throughput or latency (a “1 to 100” problem). While it’s important that a system can scale when you need it, most organizations today are just getting started with fine-tuning their first handful of adapters (a “0 to 1” problem), and their most critical challenges are on achieving suitable quality (which LoRA solves for) and reducing cost / latency (which LoRA can make worse when compared against the base model).

In our LoRA Land paper, we demonstrated that small language models like Llama and Mistral can surpass GPT-4 level performance when fine-tuned using LoRA for specific tasks. Having shown that the quality problem can be solved through fine-tuning, we next need to answer the question: “how can we leverage fine-tuning to improve throughput and lower costs?”

Speculative Decoding – Same Quality, Higher Throughput

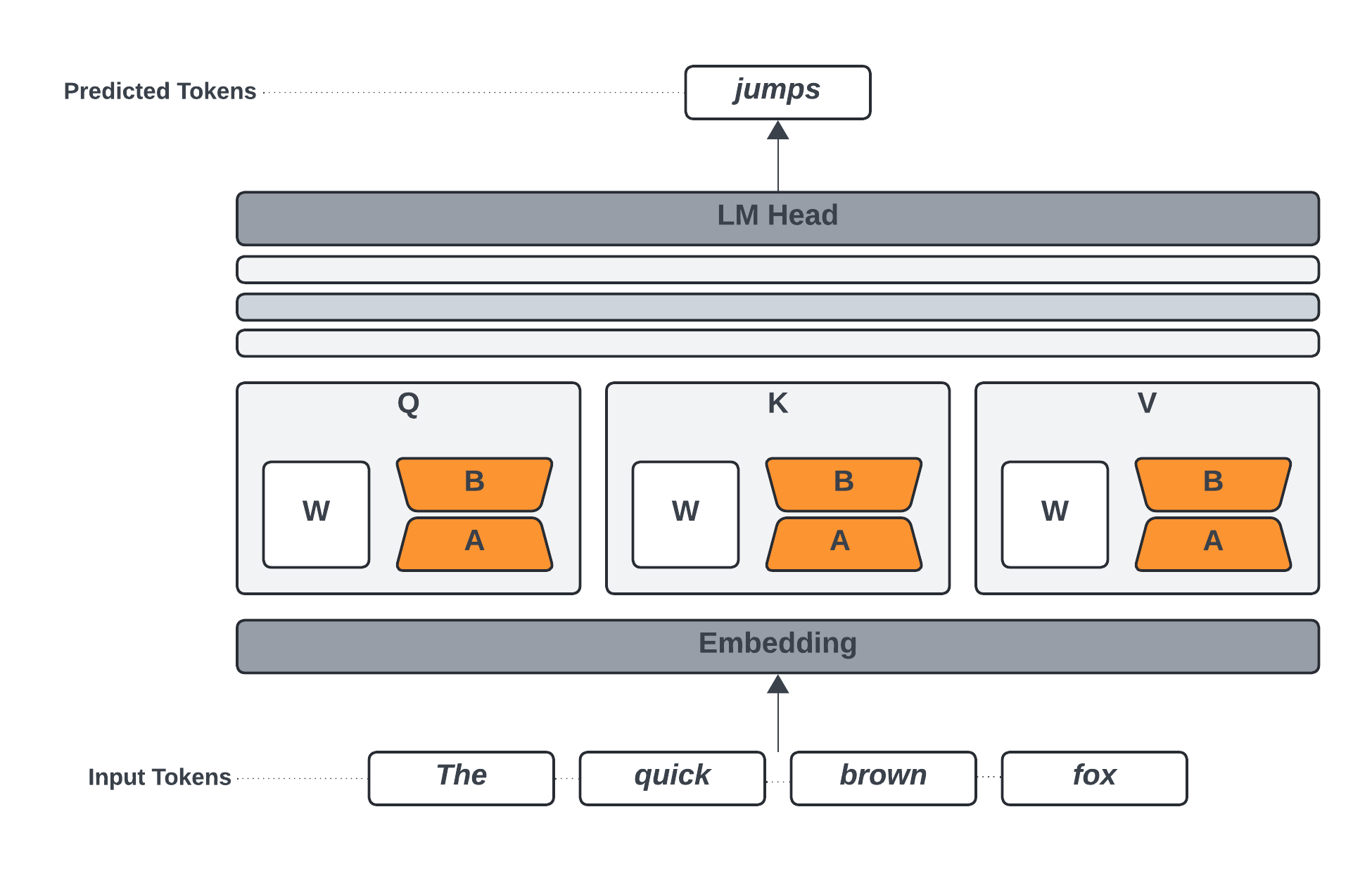

Speculative decoding is a set of techniques used to speed up inference for autoregressive language models that traditionally generate a single token per “step”. Instead of generating one token per step, speculative decoding attempts to guess some number of future tokens, then uses the logits of the LLM itself to “verify” which of the guessed subsequences are correct (meaning: consistent with what the LLM would have otherwise generated). Thanks to this verification step at the end, speculative decoding ensures that the response quality is identical to that of the original model.

Assisted generation is one popular method of speculative decoding that uses a smaller assistant or “draft model” to generate the speculative tokens. The underlying premise is that the cost of generating each token for the assistant model is many times faster than the LLM. So long as the assistant model is consistently able to correctly predict future tokens of the LLM, the overall generation process will be much faster.

Assisted generation uses a smaller assistant model to generate speculative tokens, then uses the LLM for verification.
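The draft-then-verify loop can be sketched in a few lines. This is a toy, greedy version under stated assumptions: `target_next` and `draft_next` are hypothetical stand-ins for the LLM and the assistant model, each returning a single most likely next token, and the verification loop calls the LLM once per token for readability, whereas a real implementation scores all drafted tokens in one forward pass.

```python
# Toy greedy speculative decoding. Both "models" are hypothetical stand-ins:
# each maps a token sequence to its single most likely next token.

TARGET_TEXT = "the cat sat on the mat".split()

def target_next(tokens):
    """Stand-in for the LLM: always continues TARGET_TEXT."""
    return TARGET_TEXT[len(tokens)] if len(tokens) < len(TARGET_TEXT) else "<eos>"

def draft_next(tokens):
    """Stand-in for the draft model: agrees with the LLM except at position 1."""
    return "dog" if len(tokens) == 1 else target_next(tokens)

def speculative_step(tokens, num_draft=3):
    """Draft num_draft tokens, then keep the longest prefix the LLM agrees with."""
    draft = []
    for _ in range(num_draft):
        draft.append(draft_next(tokens + draft))
    accepted = []
    for guess in draft:
        expected = target_next(tokens + accepted)
        if guess != expected:
            accepted.append(expected)  # the LLM's own token replaces the miss
            return accepted
        accepted.append(guess)
    # Every guess matched, so the LLM's next token comes for free.
    accepted.append(target_next(tokens + accepted))
    return accepted

def generate(num_tokens, num_draft=3):
    """Run speculative steps until num_tokens tokens have been produced."""
    tokens, steps = [], 0
    while len(tokens) < num_tokens:
        tokens += speculative_step(tokens, num_draft)
        steps += 1
    return tokens[:num_tokens], steps

output, steps = generate(len(TARGET_TEXT))
print(" ".join(output), "| steps:", steps)
```

The output matches what the stand-in LLM would have produced on its own, but in fewer steps than one-token-per-step decoding would need, which is the property the verification stage is meant to guarantee.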

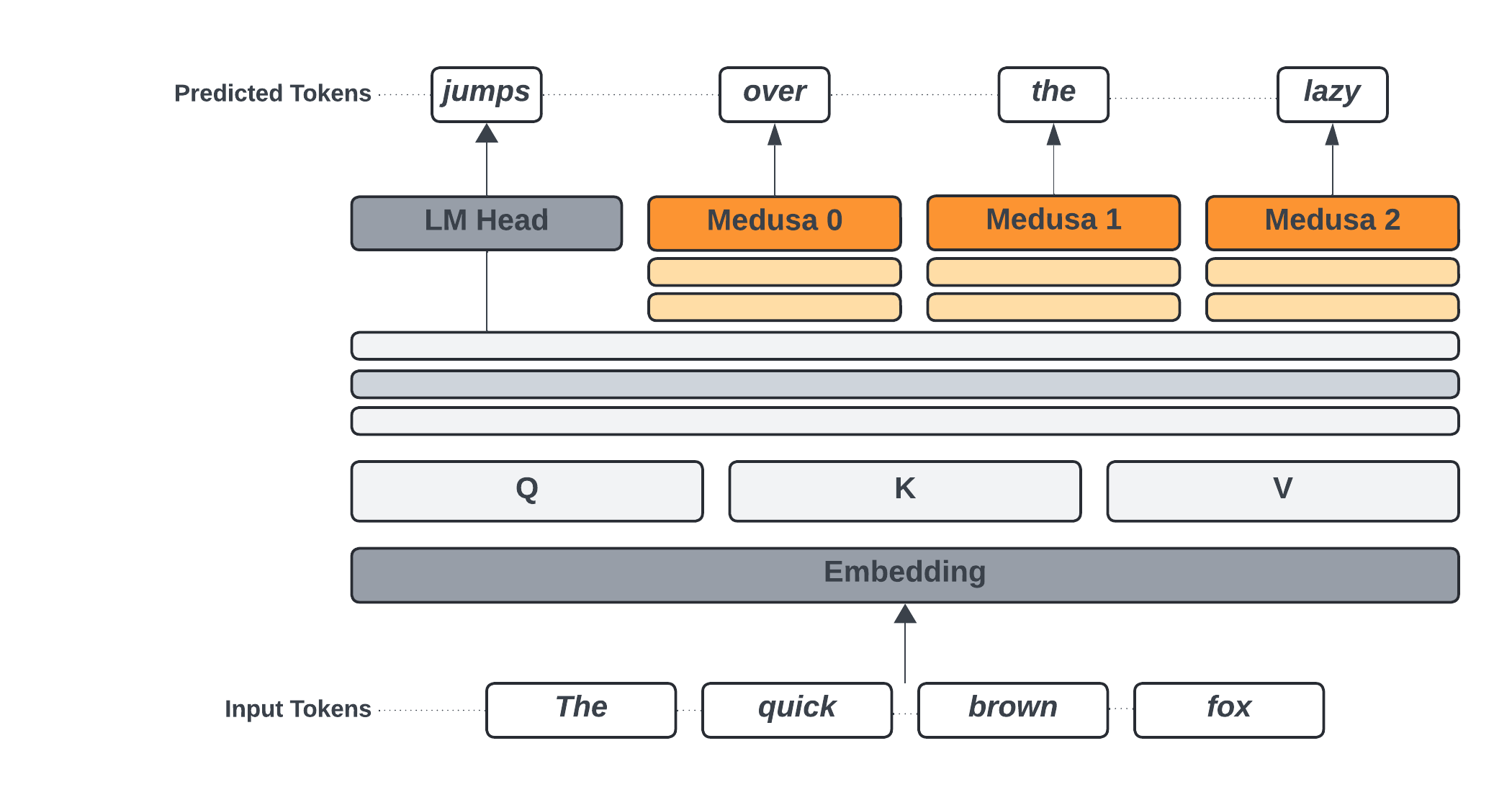

Medusa is a more recent technique that replaces the assistant model with a set of additional layers added to the original LLM called “Medusa heads”. Each head takes as input the last hidden state from the transformer layers of the model, passes it through a multi-layer perceptron block, then finally a fine-tuned replica of the LM head to produce the speculative logits.

Medusa adapts the LLM by inserting new layers at the end of the LLM that sit alongside the LM head. Every Medusa head replicates the base model LM head, introducing significant memory overhead.

Medusa has the benefit of being more operationally simple to serve, as it consists of only a single LLM plus a fine-tuned adapter. However, there are some caveats:

- High memory overhead. Medusa adapters tend to be very large (about 10% of the base model weights), making it impractical to serve more than one Medusa adapter at a time as we would with LoRAs. Most of this can be attributed to the custom LM head per speculative token.

- High computation overhead. Medusa imposes a decent amount of computational overhead due to the multi-layer perceptron, custom LM head per speculative token, and custom tree attention used to test multiple candidate speculative sequences in one step. As such, Medusa tends not to scale well to high batch sizes / many concurrent requests.
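A rough parameter count shows where the memory overhead comes from. The sketch below is back-of-the-envelope arithmetic, not a description of any specific Medusa checkpoint: the hidden size and vocabulary size are those of Mistral-7B, but the number of heads (`NUM_HEADS = 5`), the single hidden-to-hidden projection standing in for the MLP block, and the omission of biases are assumptions chosen for illustration.

```python
# Back-of-the-envelope size of Medusa-style heads on a Mistral-7B-sized model.
# HIDDEN and VOCAB match Mistral-7B; NUM_HEADS and the one-linear-layer MLP
# block are illustrative assumptions, and biases are ignored.

HIDDEN = 4096          # hidden size
VOCAB = 32_000         # vocabulary size
BASE_PARAMS = 7.24e9   # approximate base model parameter count
NUM_HEADS = 5          # assumed number of speculative heads

def medusa_head_params(hidden, vocab):
    """Parameters in one head: an MLP projection plus an LM head replica."""
    mlp_block = hidden * hidden     # one hidden-to-hidden projection
    lm_head_copy = hidden * vocab   # per-head replica of the LM head
    return mlp_block + lm_head_copy

per_head = medusa_head_params(HIDDEN, VOCAB)
total = NUM_HEADS * per_head
print(f"per head: {per_head:,} params")
print(f"total: {total:,} params ({total / BASE_PARAMS:.1%} of the base model)")
print(f"LM head share of each head: {HIDDEN * VOCAB / per_head:.0%}")
```

Under these assumptions the LM head replica dominates each head, which is consistent with the observation above that most of the overhead comes from the custom LM head per speculative token.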

The limitations outlined for Medusa mean that, in practice, it tends to be used to speed up base models at low concurrent request volume (latency-sensitive applications). However, there is a strong relationship between the task-specificity of the Medusa adapter and the throughput improvements it can unlock, such that we see the best speedups when the Medusa adapter is trained on a very specific task (e.g., code completion or JSON generation). If we could scalably train many very narrow and task-specific speculative decoding adapters that could be served simultaneously, we could significantly improve the overall performance of inference.

Turbo LoRA – Higher Quality, Higher Throughput

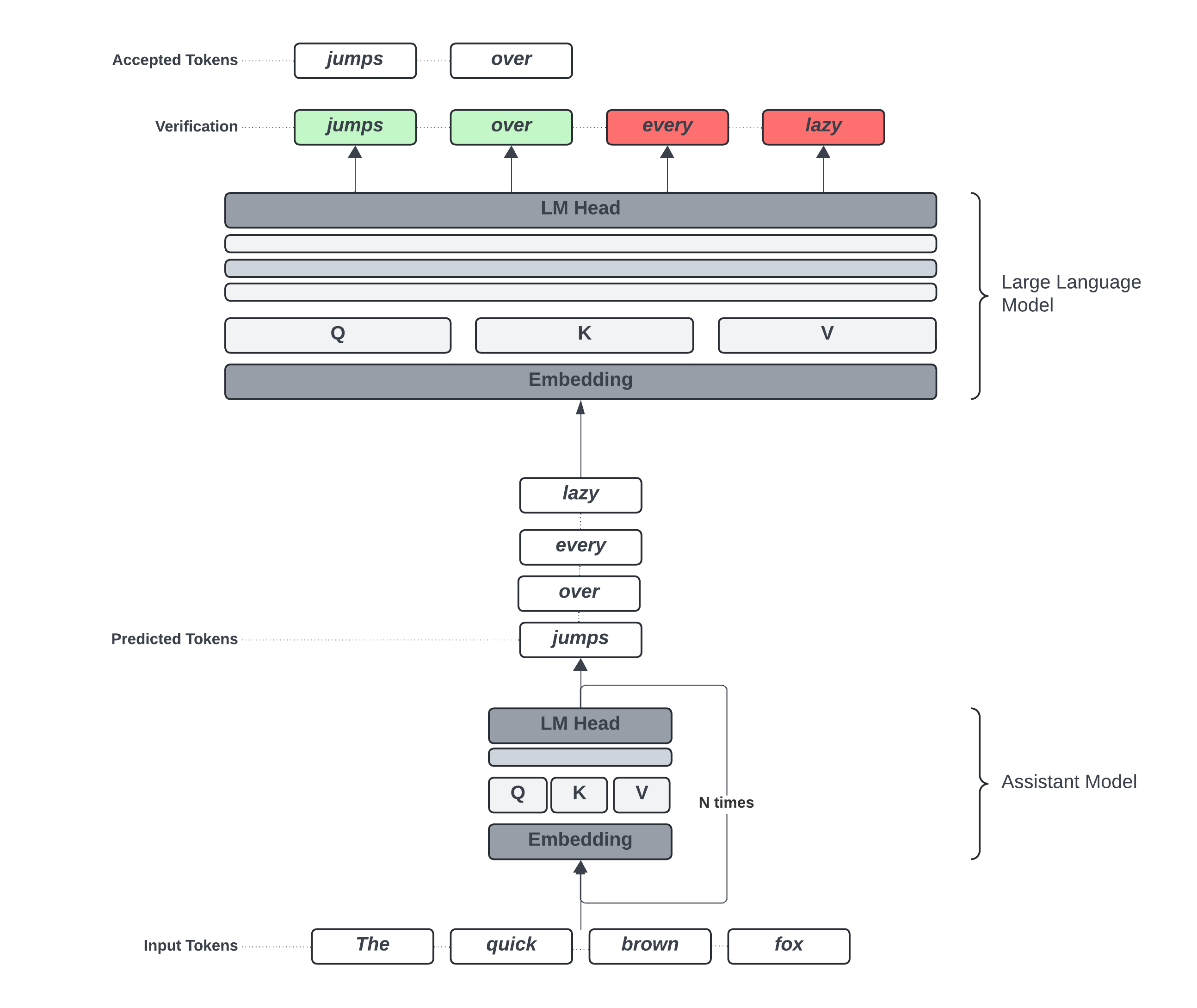

Turbo LoRA marries the model quality benefits of LoRA with the high throughput of speculative decoding. By jointly training both the LoRA adapter and the speculation adapter at once, we take advantage of the more narrow and specific output from training the LoRA to improve speculation quality. In essence: the more constrained the output, the easier it is to predict future tokens.

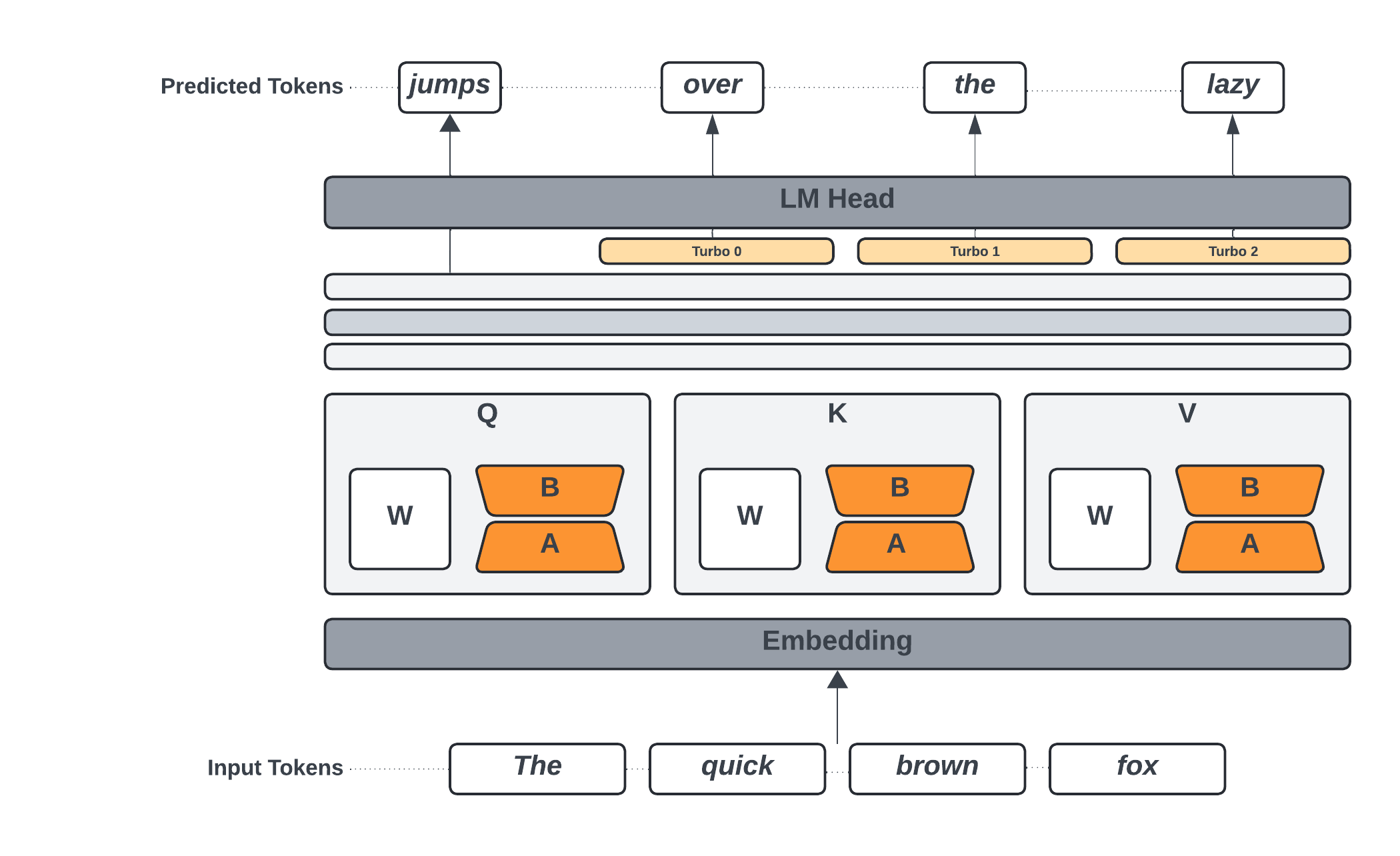

Unlike existing speculative decoding adapters like Medusa, Turbo LoRA is parameter efficient. The number of new parameters introduced by Turbo LoRA is about 1% of the number of parameters introduced by Medusa, comparable to an ordinary LoRA with a higher rank. Taking inspiration from Apple’s Speculative Streaming approach to parameter-efficient speculative decoding and HuggingFace’s Medusa v2 architecture, we introduce a series of lightweight projection “streams” between the last hidden state of the transformer blocks and the LM head. The outputs of these streams are fused together for a single pass through the LM head to generate logits up to N tokens ahead.

Turbo LoRA jointly learns a set of LoRA params to improve response quality with a small set of linear projections to generate speculative tokens. The base model LM head is reused.

Turbo LoRA is also more computationally efficient than existing speculative decoding methods like Medusa, which attempt to validate multiple speculative sequences at once using methods like tree attention. While these methods can improve the hit rate of the speculation process, they also introduce additional compute overhead. As a result, most speculative decoding methods break down under high concurrent requests. Because Turbo LoRA is trained for specialized tasks with constrained output spaces, the top-1 accuracy of the Turbo LoRA speculators is much higher than conventional speculative decoding adapters, and as such, we replace the tree attention with a more lightweight sequence selection process that scales to high concurrent requests.

Total throughput at varying batch sizes on 1x L4 24GB.

The total throughput benefits of Turbo LoRA at high concurrent requests are especially pronounced on the Ada series of Nvidia GPUs (L4, L40S), which have much higher CUDA core to memory bandwidth ratios than the previous Ampere generation (A10G, A100). Speculative decoding achieves its speedups by taking advantage of being memory-bound during the decoding phase of text generation to pack more compute into each step. But this trick breaks down when the batch size is too high, as all the additional compute can become a new bottleneck that exceeds the memory bottleneck.
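The memory-bound versus compute-bound argument can be made concrete with a simple roofline-style estimate. The sketch below is an assumption-laden model, not a benchmark: the function name, the 7B parameter count, and the bandwidth and FLOP figures are made-up round numbers rather than real GPU specs, and it ignores KV cache and activation traffic. It treats a decoding step as limited by whichever is slower, reading the weights once or doing the matrix math for every token in the batch.

```python
# Simple roofline-style model of one decoding step. All hardware numbers
# below are made-up round figures for illustration, not real GPU specs.

def decode_step_seconds(batch_size, tokens_per_seq, *, params=7e9,
                        bytes_per_param=2, mem_bandwidth=1e12, peak_flops=1e14):
    """Step time is bounded by the slower of weight loading and matrix math."""
    memory_time = params * bytes_per_param / mem_bandwidth  # read weights once
    compute_time = 2 * params * batch_size * tokens_per_seq / peak_flops
    return max(memory_time, compute_time)

for batch_size in (1, 16, 64):
    plain = decode_step_seconds(batch_size, tokens_per_seq=1)
    speculative = decode_step_seconds(batch_size, tokens_per_seq=4)
    print(f"batch={batch_size:3d}  cost of a 4-token step vs a 1-token step: "
          f"{speculative / plain:.2f}x")
```

In this model, scoring four tokens per sequence costs the same as one while the step is memory-bound, and becomes more expensive once the batch is large enough for compute to dominate. A higher compute-to-bandwidth ratio pushes that crossover to larger batch sizes.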

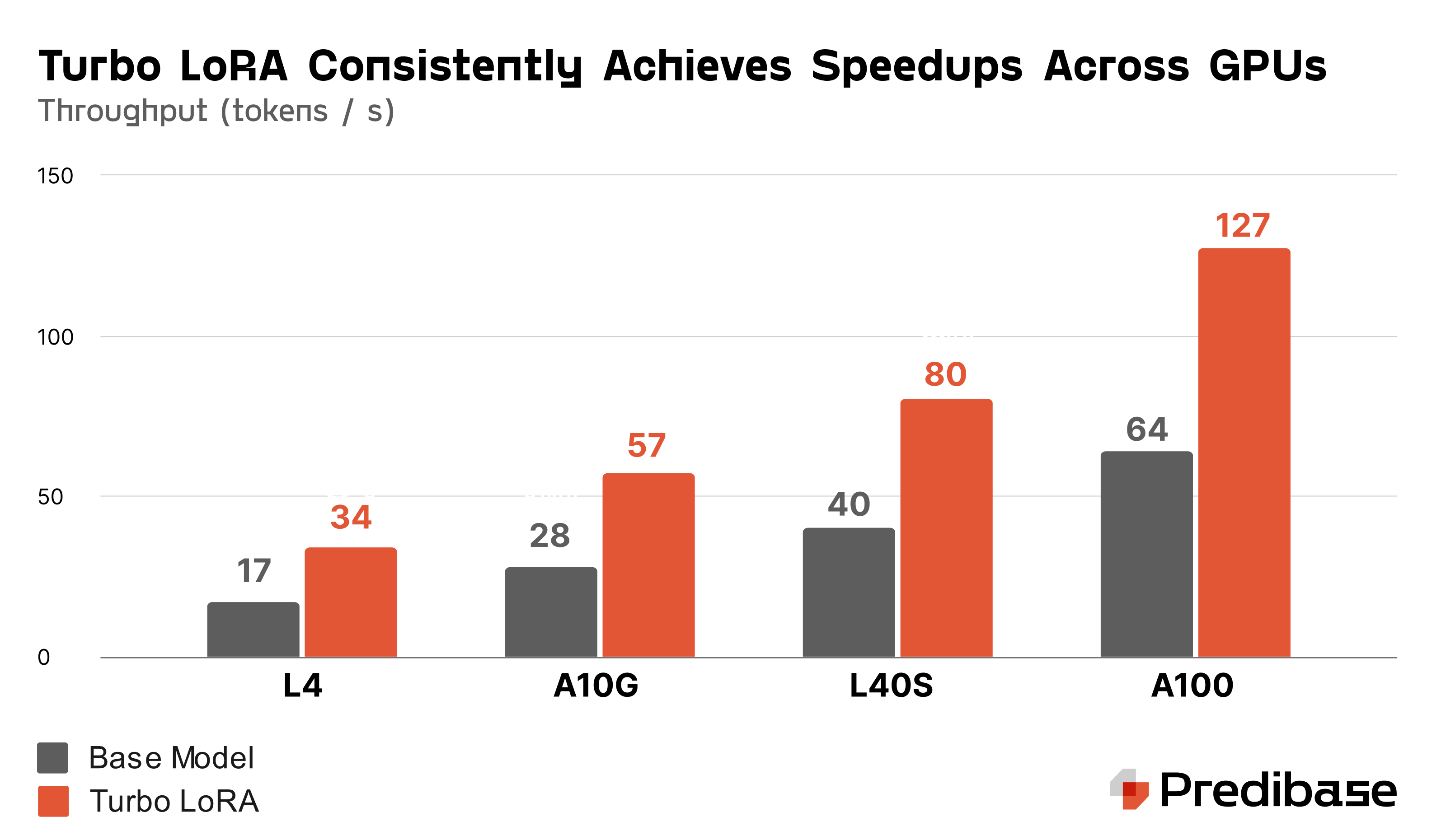

Under normal circumstances where single request end-to-end latency is the dominant metric, Turbo LoRA provides consistent speedups of upwards of 2x across different GPU types.

Because Turbo LoRA is computationally efficient, we’re able to maintain total throughput improvements up to high concurrency by avoiding becoming compute bound. Additionally, because Turbo LoRA is memory and parameter efficient, we can further take advantage of heterogeneous multi-adapter batching pioneered by LoRAX to serve dozens of Turbo LoRA adapters on the same GPU, without the need to switch or offload adapters to host memory.

Using Turbo LoRA in Predibase

Using the Turbo LoRA adapter on Predibase is as easy as enabling a simple boolean flag either through the main Predibase App or through the Predibase SDK. Turbo LoRA adapters are currently available in private beta and are supported for all Mistral-7B models with support for additional model architectures like Zephyr and Phi-3 coming soon.

In the following section, we will walk you through a demo on how to train a Turbo LoRA adapter for the CoNLLPP dataset. The CoNLLPP dataset is widely used for tasks related to named entity recognition (NER), where the primary task is to identify and classify named entities in a text, such as the names of persons, organizations, locations, and miscellaneous entities.

For example, given the following input to the model:

Your task is a Named Entity Recognition (NER) task. Predict the category of each entity, then place the entity into the list associated with the category in an output JSON payload. Below is an example:

Input: EU rejects German call to boycott British lamb .

Output: {"person": [], "organization": ["EU"], "location": [], "miscellaneous": ["German", "British"]}

Now, complete the task.

Input: Only France and Britain backed Fischler 's proposal.

Output:

We want the model to generate the following output:

    {
      "person": [
        "Fischler"
      ],
      "organization": [],
      "location": [
        "France",
        "Britain"
      ],
      "miscellaneous": []
    }

Connecting Your Dataset

The first step is to connect your dataset. You can download the dataset for fine-tuning from this link.

Once you’ve downloaded the dataset, you can either connect it to Predibase through the UI by navigating to the Data tab and clicking on File, or upload it through the Predibase SDK (once you’ve installed the SDK) using the code snippet below.

    from predibase import Predibase

    # Login to the Predibase Client
    pb = Predibase(api_token="<YOUR PREDIBASE API TOKEN>")

    # Connect your dataset using the path where the file was downloaded
    dataset = pb.datasets.from_file("/path/conllpp.csv", name="conllpp_demo")

Integrating Weights and Biases (Optional)

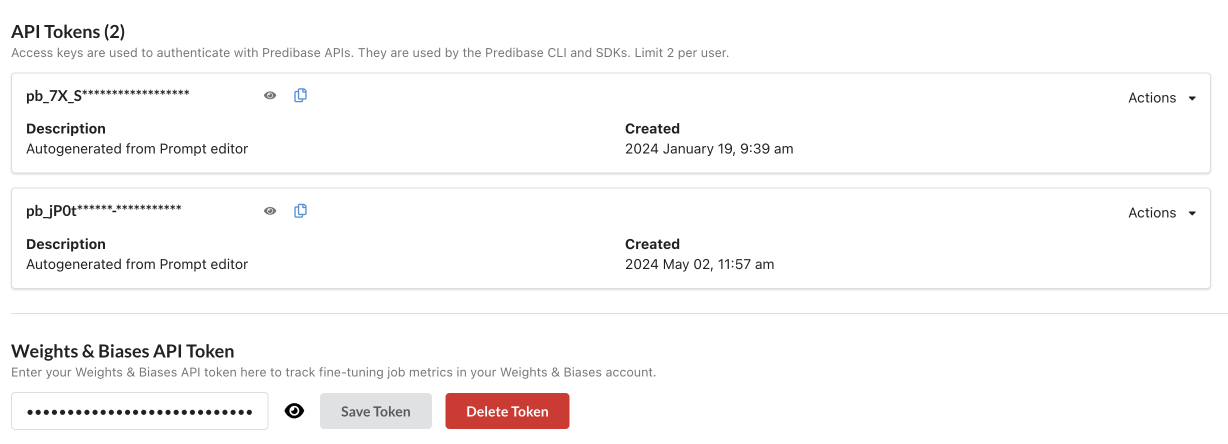

For each training job, Predibase generates learning curves based on metrics such as loss on each set (train and evaluation) so you can track your adapter’s training progress as it learns your task. In addition, Predibase also supports adding in your Weights and Biases token to Predibase to see more fine-grained information such as per step loss, learning rate schedules, etc.

To add your token to Predibase:

- Create a Weights & Biases account. Get an API key from your account settings.

- Navigate to the Predibase app and go to Settings > My Profile. Under "Weights & Biases API Token" paste your API key.

- Click "Save Token" to save your API key.

Add your Weights & Biases token under Settings > My Profile.

Training

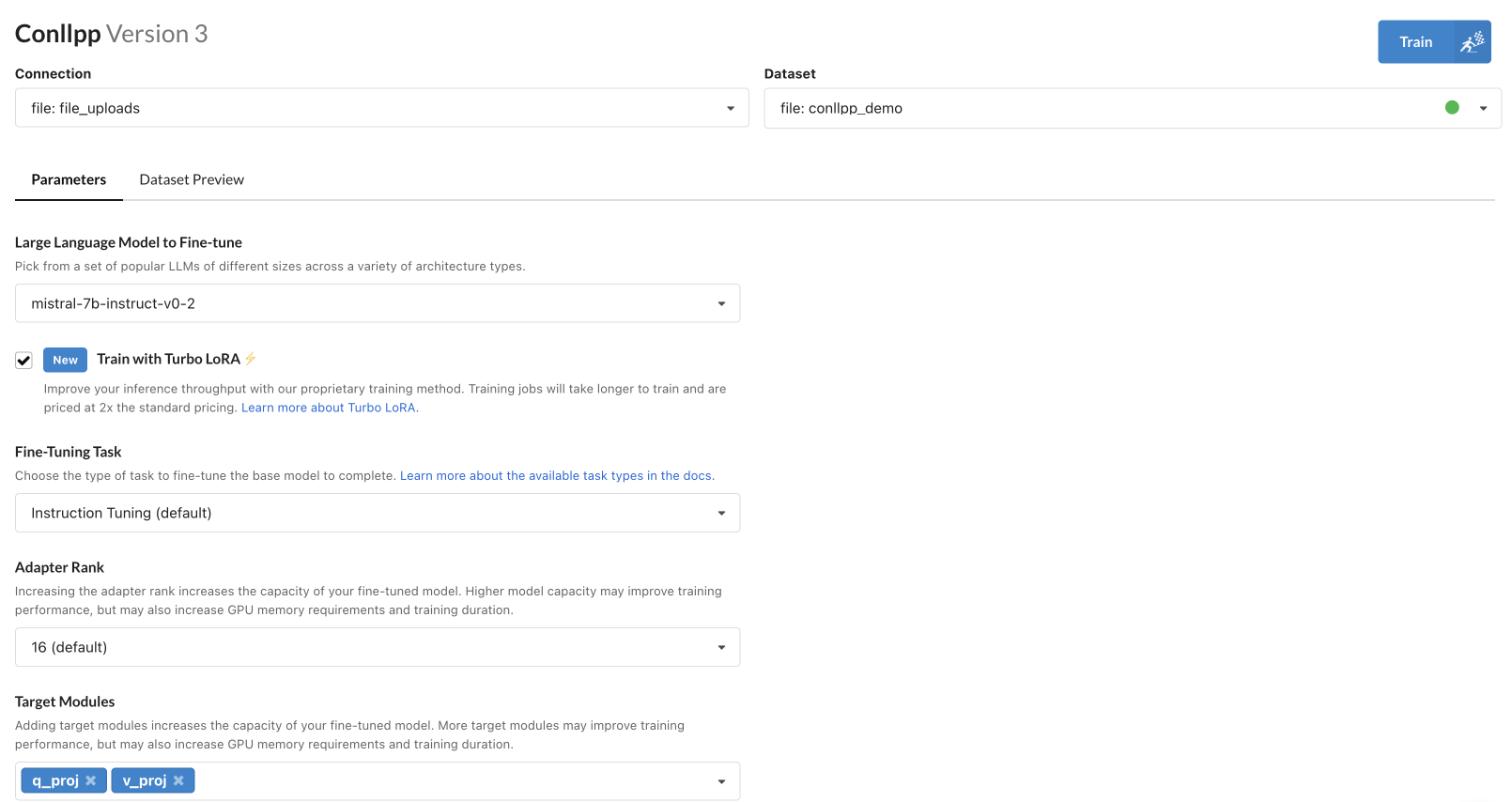

To kick off a fine-tuning job for the Turbo LoRA adapter, navigate to the `Adapters` tab, click on `New Adapter Repository`, set your repository name to `Conllpp`, and select your dataset.

Adapter Creation Form In the Predibase App.

Next, select mistral-7b-instruct-v0-2 as your choice of LLM, and select the check box to train with Turbo LoRA. Finally, hit Train to kick off your fine-tuning job. Predibase also lets you customize which LoRA target modules to use, your LoRA adapter rank, fine-tuning task type and learning rate. For now, we will just use the Predibase defaults since they provide strong performance out of the box.

To kick off a fine-tuning job using the Predibase SDK, create a new repository and start a job with the code snippet below:

    from predibase import FinetuningConfig

    # Create an adapter repository
    repo = pb.repos.create(name="Conllpp", description="Conllpp Experiments", exists_ok=True)

    # Start a fine-tuning job, blocks until training is finished
    adapter = pb.adapters.create(
        config=FinetuningConfig(
            base_model="mistral-7b-instruct-v0-2",
            adapter="turbo_lora",  # Set to "lora" to train a regular LoRA adapter
        ),
        dataset=dataset,
        repo=repo,
        description="Initial model with defaults"
    )

Once fine-tuning starts, you can monitor training progress through:

- The Predibase App

- Metrics that get streamed out when you run `pb.adapters.create`

- Weights & Biases, under the `<username>/predibase` project

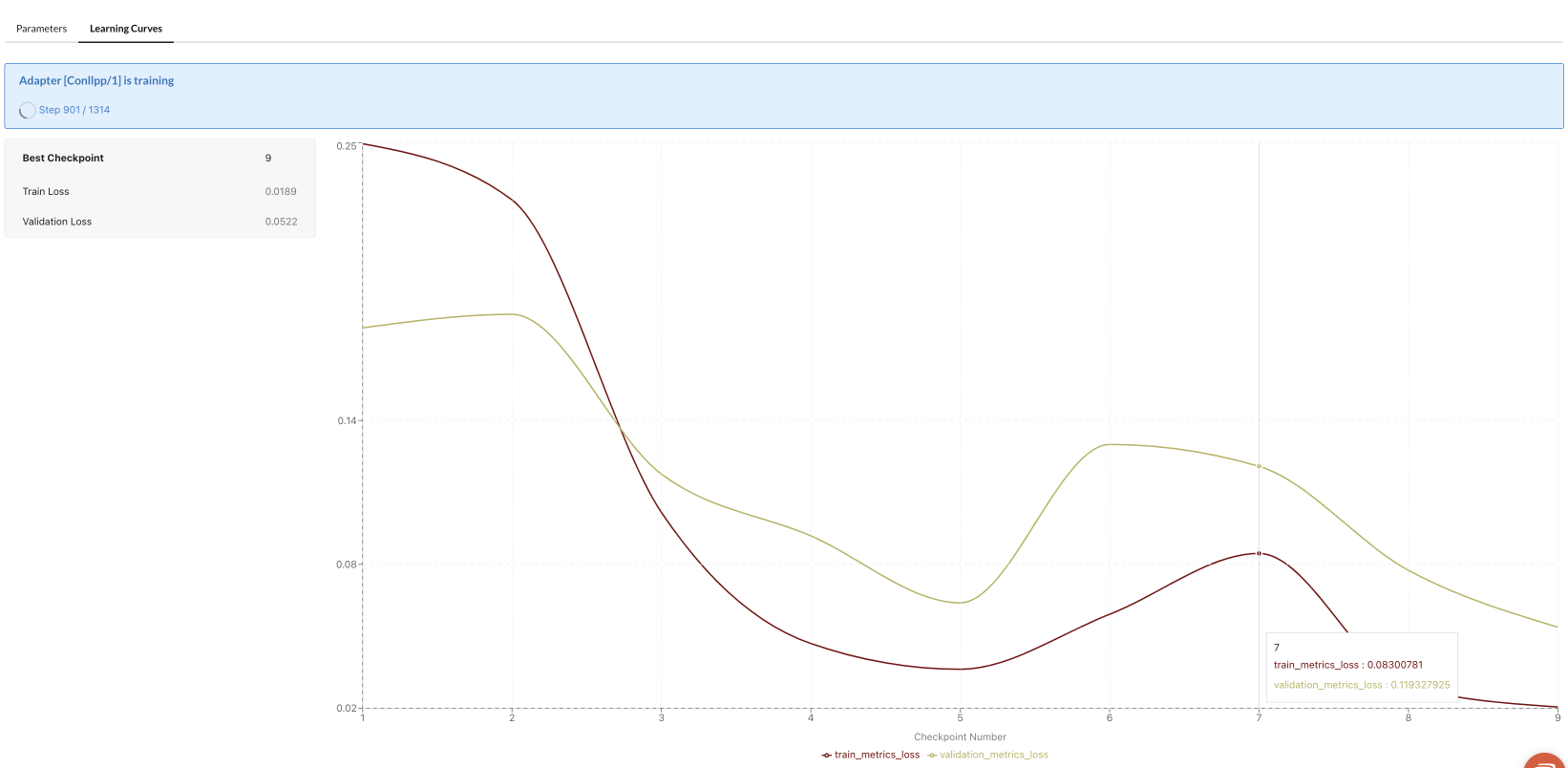

If you’re using the UI, your learning curves will look like this during training. On Predibase, we report loss values at each saved checkpoint.

Training and evaluation loss learning curves during Turbo LoRA training.

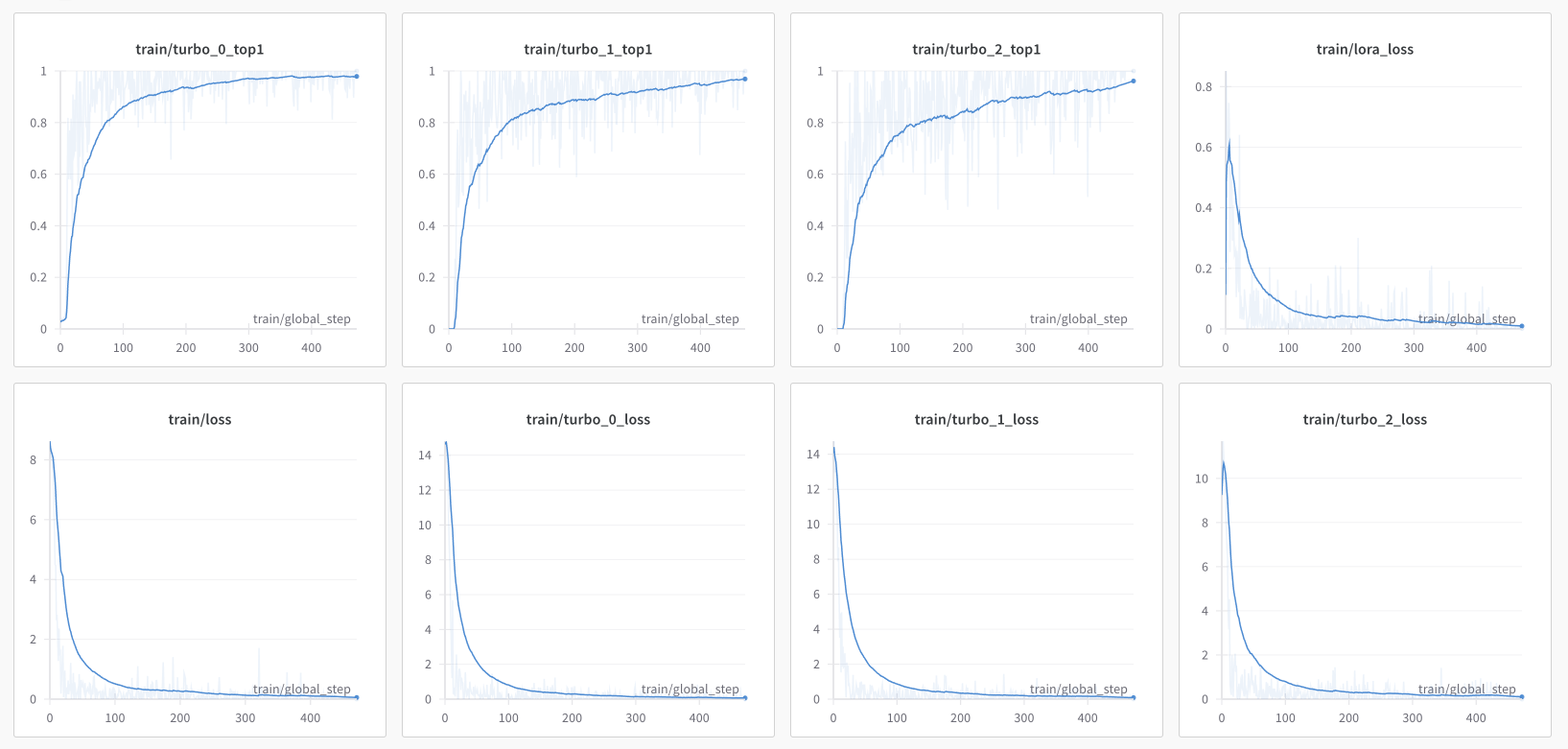

If you observe training progress using Weights and Biases, you will get more fine-grained updates for your training job, some of which are:

- train/turbo_{k}_top1 - This is the top-1 accuracy of turbo projection k, which predicts the token k+2 positions ahead (turbo_0 predicts the token after the next one). You want to see this steadily increase over time. It will be highest for turbo_0 and lower for each successive projection.

- train/turbo_{k}_loss - This represents the loss for each turbo projection in the architecture. It'll start with a pretty high value. You want to see this decrease.

- train/lora_loss - This is similar to the original loss values you will see when training regular LoRA adapters. You may notice that these values are now a lot smaller than what we typically see with regular LoRA training.

- train/loss - This is the combined loss that is also reported in the Predibase UI, and the value we want to drive as low as possible. It combines the LoRA loss and the turbo projection losses above, using a proprietary weighting that balances task-specific learning with lookahead decoding ability.

Weights and Biases Training Metrics For Turbo LoRA that show turbo projection layer accuracies, lora loss and overall training loss.

To understand how turbo speedups work and what these predicted probabilities mean, consider the following example:

- turbo_0_top1 accuracy: ~95% (represents the probability of guessing the 2nd token correctly)

- turbo_1_top1 accuracy: ~85% (represents the probability of guessing the 3rd token correctly)

- turbo_2_top1 accuracy: ~75% (represents the probability of guessing the 4th token correctly)

What this means is that, on average:

- We will guess the next token and the 2nd token correctly 95% of the time.

- We will guess the next token, the 2nd token, and the 3rd token correctly approximately 0.95 × 0.85 ≈ 81% of the time.

- We will guess the next token, the 2nd token, the 3rd token, and the 4th token correctly approximately 0.95 × 0.85 × 0.75 ≈ 61% of the time.
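The arithmetic above can be reproduced and extended to an expected number of tokens per step. This is a sketch under two assumptions that the example implies but does not state outright: the projections' hit rates are treated as independent, and acceptance stops at the first wrong guess. The function names are illustrative, and the accuracies are the example values from the list above, not measured results.

```python
# Chance of accepting each successive speculative token, assuming the turbo
# projections are independent and acceptance stops at the first wrong guess.
# The accuracies are the example values from the text, not measured results.

def cumulative_acceptance(top1_accuracies):
    """Return P(first k speculative tokens are all correct) for k = 1..n."""
    probs, running = [], 1.0
    for acc in top1_accuracies:
        running *= acc
        probs.append(running)
    return probs

def expected_tokens_per_step(top1_accuracies):
    """One token from the LM head plus the expected number of accepted guesses."""
    return 1.0 + sum(cumulative_acceptance(top1_accuracies))

accuracies = [0.95, 0.85, 0.75]  # turbo_0, turbo_1, turbo_2
for k, p in enumerate(cumulative_acceptance(accuracies)):
    print(f"all guesses through turbo_{k} correct: {p:.3f}")
print(f"expected tokens per step: {expected_tokens_per_step(accuracies):.2f}")
```

With these example numbers the expectation comes to roughly 3.4 tokens per step. That figure is best read as an upper bound on the speedup, since it ignores the cost of the extra projections and of checking the guesses.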

Once you train your Turbo LoRA adapter on the CoNLLPP dataset, you can train a regular LoRA adapter for comparison by either deselecting the checkbox in the Predibase App or by setting `adapter="lora"` in the SDK.

Understanding Fine-Tuning Performance

Inference

You can prompt the base mistral-instruct model or your fine-tuned adapters through either the Prompt UI within the Predibase App or the Predibase SDK.

Quality

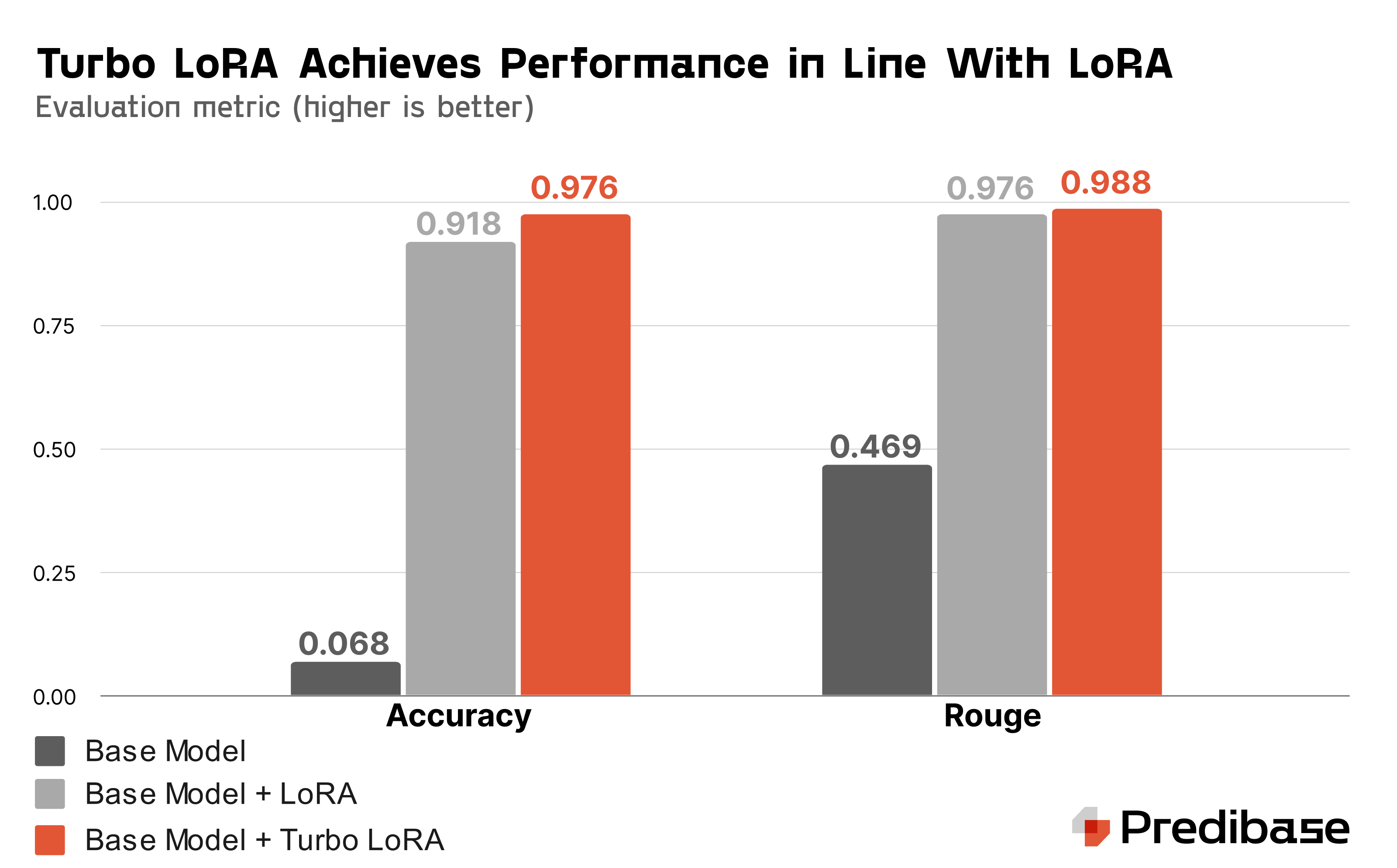

We can assess the performance of the base model, the fine-tuned LoRA adapter, and the fine-tuned Turbo LoRA adapter by comparing their accuracy and ROUGE scores. For efficiency, we use a fixed random subset of 500 samples from a larger evaluation set of 3250 samples. This approach allows for faster evaluation while still providing meaningful insights into the models' performance.

Comparing accuracy and ROUGE on a held-out evaluation set from the CoNLLPP dataset.

Compared to the base instruct model, which has a low accuracy of 6.8%, the fine-tuned LoRA adapter achieves an accuracy of 91.8%, and the fine-tuned Turbo LoRA adapter reaches 97.6% accuracy. The ROUGE score evaluates the quality of text summaries by comparing them to reference summaries, focusing on overlaps in n-grams, word sequences, and word pairs. Despite the base model frequently generating incorrect answers, it still attains a ROUGE score of 0.469 due to some overlap with the ground truth. In contrast, the LoRA adapter achieves a ROUGE score of 0.976, and the Turbo LoRA adapter reaches 0.988, reflecting their higher alignment with the ground truth.
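The post does not spell out how accuracy is computed, so the following is only one plausible reading: an exact-match score over parsed JSON, where a response counts as correct only if it parses and equals the reference payload. The helper names and the two sample rows are hypothetical and exist purely to make the sketch runnable.

```python
import json

def parse_entities(text):
    """Parse a model response into a dict, or None if it is not valid JSON."""
    try:
        parsed = json.loads(text)
    except json.JSONDecodeError:
        return None
    return parsed if isinstance(parsed, dict) else None

def exact_match_accuracy(predictions, references):
    """Fraction of responses whose parsed JSON equals the reference exactly."""
    hits = 0
    for pred, ref in zip(predictions, references):
        parsed = parse_entities(pred)
        hits += parsed is not None and parsed == json.loads(ref)
    return hits / len(references)

# Hypothetical sample rows in the same format as the prompt example.
references = [
    '{"person": ["Fischler"], "organization": [], '
    '"location": ["France", "Britain"], "miscellaneous": []}',
    '{"person": [], "organization": ["EU"], "location": [], '
    '"miscellaneous": ["German", "British"]}',
]
predictions = [
    '{"person": ["Fischler"], "organization": [], '
    '"location": ["France", "Britain"], "miscellaneous": []}',
    "The entities are EU, German and British.",  # not JSON, counted as wrong
]
score = exact_match_accuracy(predictions, references)
print(f"exact-match accuracy: {score:.1%}")
```

Comparing parsed objects rather than raw strings means whitespace and key order do not affect the score. A strict metric like this also helps explain how a base model that often names the right entities in free text can score low on accuracy while still earning partial credit on ROUGE.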

The Turbo LoRA adapter achieves a higher score than the regular LoRA adapter for two key reasons:

- Increased Model Capacity: By adding a new set of training parameters for each turbo projection, the Turbo LoRA adapter can learn more task-specific weights, enhancing its ability to fine-tune for specific tasks.

- Optimal Placement of Turbo Projections: Placed after the final hidden state and in parallel with the original language model head, the turbo projections access the most information-dense and synthesized data. This allows them to learn speculative tokens effectively and increases the model's capacity to capture task-specific attributes.

In general, the Turbo LoRA adapter should show the same or better quality as the LoRA adapter for the same task.

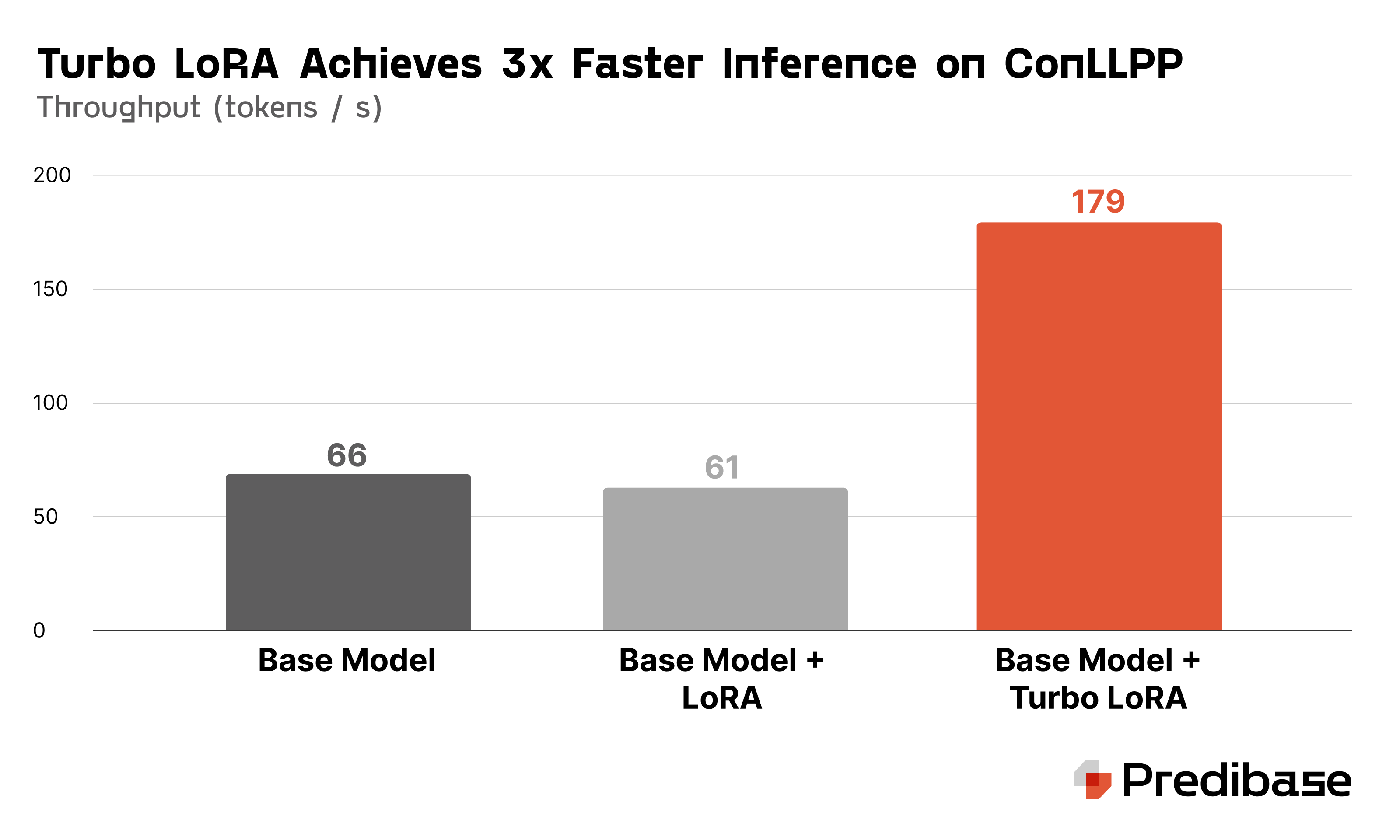

Throughput

We observed that inference using the Turbo LoRA adapter is ~2.56x faster than the base model, and ~3.44x faster than the regular LoRA adapter for the CoNLLPP dataset.

Average throughput (tokens/s) for base model prompting, base model with LoRA, and base model with Turbo LoRA.

Conclusion

Turbo LoRA in Predibase stands out as the fastest solution for serving fine-tuned large language models (LLMs). It achieves 2-3x throughput improvements over base model inference while matching or exceeding the response quality of standard LoRA. Available to try today in Predibase through our private beta, Turbo LoRA promises significant performance enhancements for your fine-tuned models.

Sign up for Predibase today and take advantage of our $25 free credit to benefit from these optimizations firsthand.

FAQ

What is LoRA in LLMs?

LoRA (Low-Rank Adaptation) is a fine-tuning method that adds lightweight trainable parameters to a frozen large language model (LLM). This enables fast, low-cost customization without retraining the entire model. LoRA has become a go-to approach for adapting foundation models to specific tasks or domains.

How does Turbo LoRA differ from standard LoRA for LLMs?

Turbo LoRA is a parameter-efficient fine-tuning method built by Predibase. Standard LoRA improves task-specific quality but can slow down token generation; Turbo LoRA jointly trains a LoRA adapter with lightweight speculative decoding projections, which increases generation throughput by up to 3× while keeping response quality in line with LoRA. It’s ideal for low-latency LLM applications that require fast response times without sacrificing accuracy or modularity.

What is the Turbo model architecture in Predibase?

The Turbo model in Predibase refers to an optimized deployment strategy for fine-tuned models using Turbo LoRA. This architecture supports multi-adapter serving, GPU sharing, and dynamic adapter swaps—enabling faster inference, minimal memory overhead, and lower costs across various LLM workflows.

What is Turbo Flux LoRA?

Turbo Flux LoRA is an internal name for a performance enhancement layer within Predibase’s inference stack. It dynamically loads, compiles, and serves LoRA adapters with minimal latency and optimal throughput. The result: highly responsive LLM deployments, even when serving multiple adapters concurrently.

How does LoRA perform in real-world production settings?

LoRA performance (DRP - Deployment Ready Performance) depends on how it’s trained and served. Turbo LoRA enhances real-time inference by minimizing latency while maintaining high-quality outputs. Benchmarking shows dramatic gains in cost-efficiency and speed when compared to merged model serving.

Can I train OPT or other models using LoRA?

Yes, training OPT (Open Pretrained Transformer) with LoRA is common and supported by many open-source frameworks and by Predibase. Using Turbo LoRA, you can fine-tune OPT or other transformer-based models with minimal overhead, and then serve them with production-grade latency.

How is LoRA creation handled in Predibase?

LoRA creation in Predibase is fully automated: you define the dataset and target task declaratively, and the system handles training, evaluation, and deployment of LoRA adapters. You can also bring your own adapters and plug them into Predibase’s Turbo LoRA inference engine.

What is Lorax and how does it relate to Predibase?

LoRAX (LoRA eXchange) is Predibase’s open-source contribution to the community. It’s a framework for efficiently serving many fine-tuned LoRA adapters on top of a shared base LLM on a single GPU, making multi-adapter inference accessible to every team. Learn about LoRAX here.