Fine-tuning open-source language models has become the de facto way to build customized, task-specific LLMs. Parameter-efficient techniques like LoRA have risen in popularity and are seeing widespread adoption, showcasing what’s possible with adapter-based fine-tuning. The challenge we hear repeatedly from customers is what to do once they’ve successfully fine-tuned a model, and it usually sounds like:

Why do I need to pay 24/7 for an expensive GPU to use my fine-tuned model when I don't have to for using any of the base models?

And it’s a fair question. Dedicated deployments are often more expensive due to per-hour GPU costs while also being overkill if you're looking for inference for small or medium workloads.

We’re excited to announce a solution to this dilemma: Serverless Fine-tuned Endpoints.

What are Serverless Fine-tuned Endpoints?

Serverless Fine-tuned Endpoints allow users to query their fine-tuned LLMs at exactly the same per-token price as base models. Now you can finally run inference on your fine-tuned LLMs with a solution that offers:

- a pay-as-you-go, cost-effective solution

- scalability to many LLMs with little impact on performance

- minimal cold start time for production use cases

As one user put it, “I’m so glad I found you all [at Predibase] as all the other LLM offerings require you to deploy dedicated instances to run fine-tuned models.”

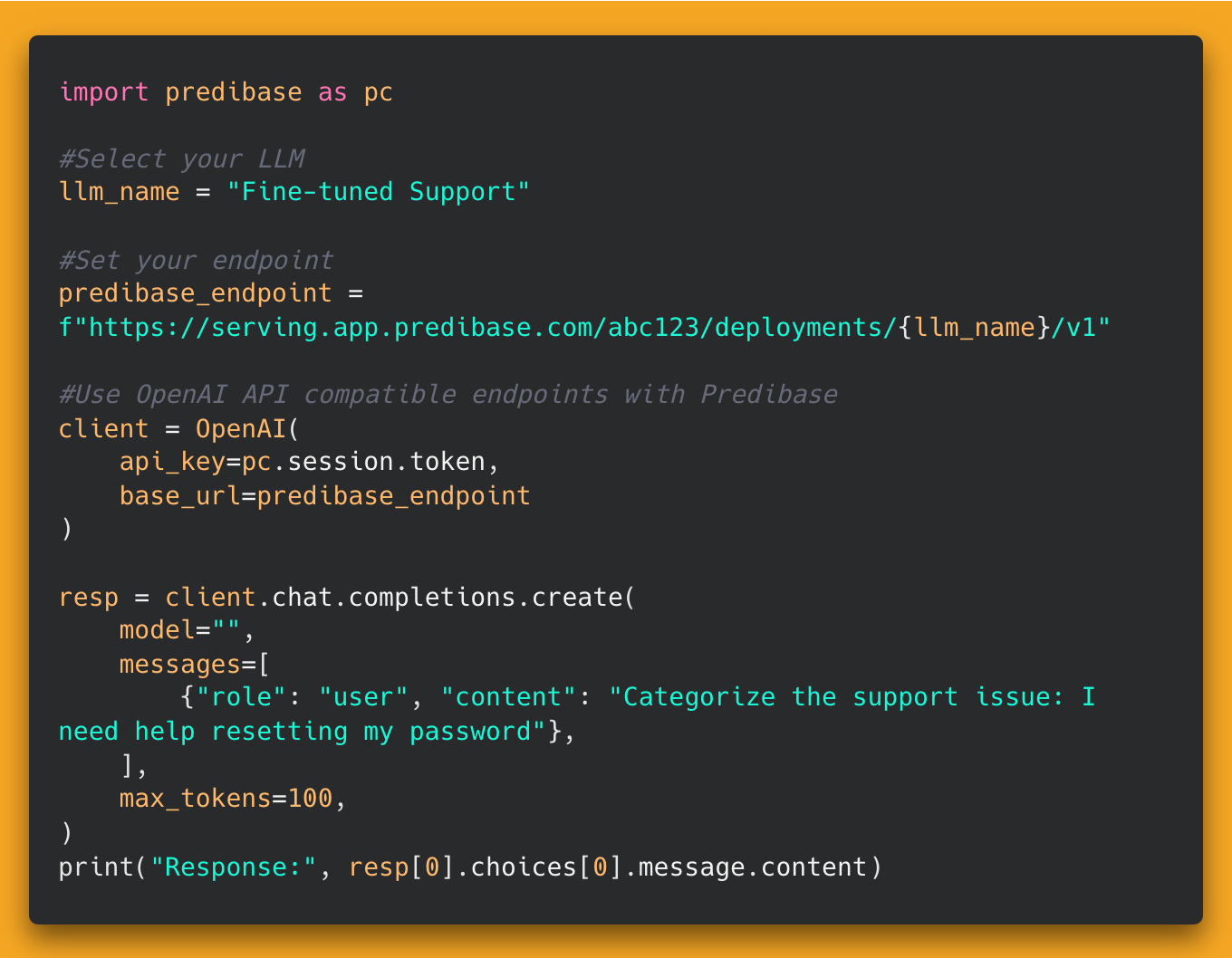

How do I get started?

Using serverless endpoints is as easy as:

How do Serverless Fine-tuned Endpoints work?

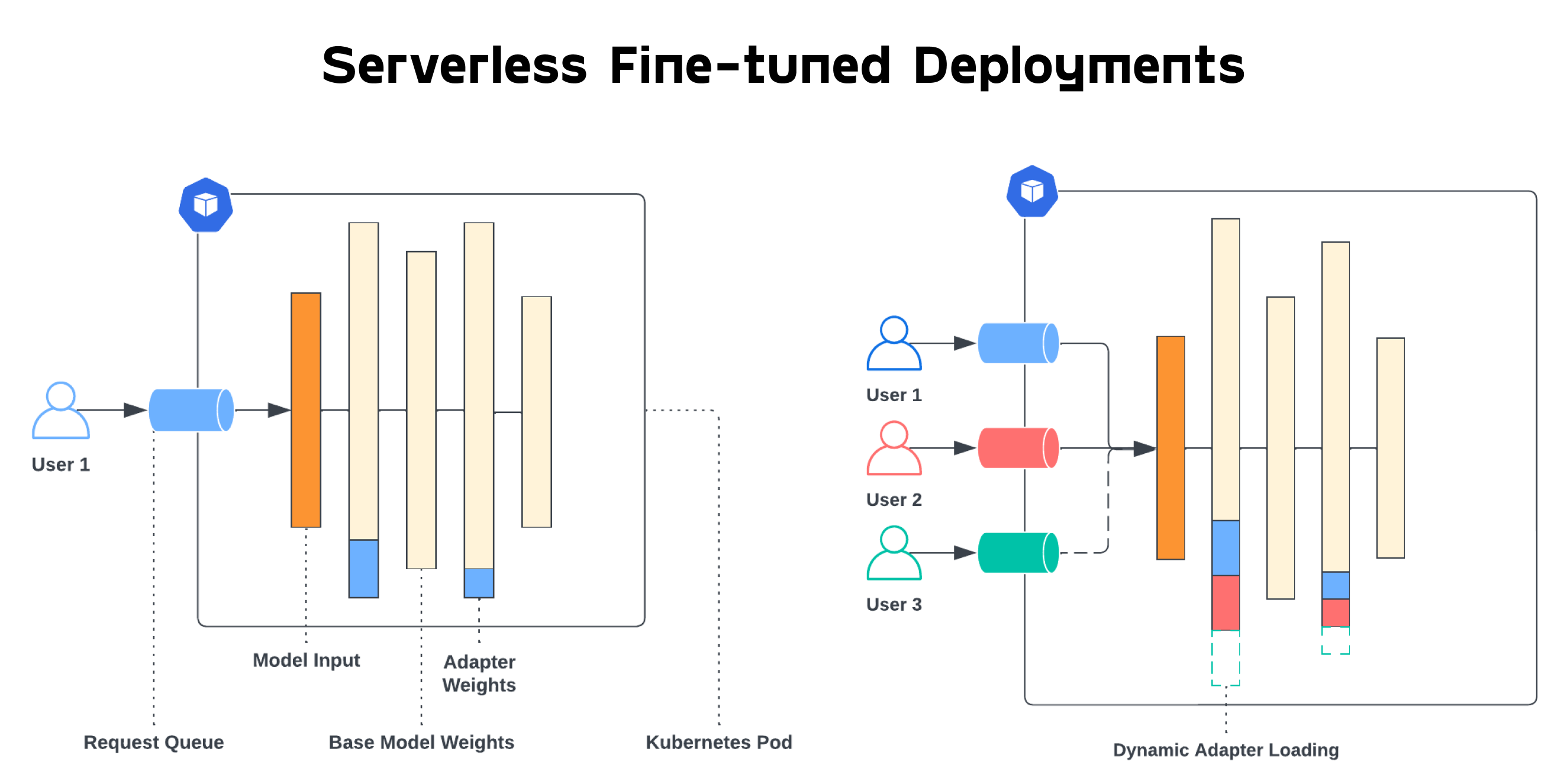

You may be wondering how we’ve made this possible. The secret sauce is LoRA eXchange (LoRAX), a project we open-sourced and our approach to LLM serving, designed specifically for serving many fine-tuned models at once on a shared set of GPUs. Compared with conventional dedicated LLM deployments, LoRAX introduces three novel components:

- Dynamic Adapter Loading, allowing each set of fine-tuned LoRA weights to be loaded from storage just-in-time as requests come in at runtime, without blocking concurrent requests.

- Tiered Weight Caching, to support fast exchanging of LoRA adapters between requests, and offloading of adapter weights to CPU and disk to avoid out-of-memory errors.

- Continuous Multi-Adapter Batching, a fair scheduling policy for optimizing aggregate throughput of the system that extends the popular continuous batching strategy to work across multiple sets of LoRA adapters in parallel.
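To make the tiered caching idea more concrete, here is a minimal toy sketch in Python. It is not LoRAX's actual implementation: the `TieredAdapterCache` class, its slot counts, and the `load_from_disk` callback are all hypothetical names chosen for illustration, and plain strings stand in for adapter weights. It only shows the general pattern of keeping recently used adapters in a small fast tier, offloading the least recently used ones to a larger tier, and falling back to storage on a miss.

```python
from collections import OrderedDict


class TieredAdapterCache:
    """Toy two-tier LRU cache: a small "GPU" tier backed by a larger "CPU" tier."""

    def __init__(self, gpu_slots, cpu_slots, load_from_disk):
        self.gpu = OrderedDict()  # adapter_id -> weights, most recently used last
        self.cpu = OrderedDict()
        self.gpu_slots = gpu_slots
        self.cpu_slots = cpu_slots
        self.load_from_disk = load_from_disk  # hypothetical loader callback

    def get(self, adapter_id):
        """Return (weights, name of the tier the weights were found in)."""
        if adapter_id in self.gpu:
            self.gpu.move_to_end(adapter_id)  # mark as most recently used
            return self.gpu[adapter_id], "gpu"
        if adapter_id in self.cpu:
            weights, source = self.cpu.pop(adapter_id), "cpu"
        else:
            weights, source = self.load_from_disk(adapter_id), "disk"
        self._put_on_gpu(adapter_id, weights)
        return weights, source

    def _put_on_gpu(self, adapter_id, weights):
        self.gpu[adapter_id] = weights
        if len(self.gpu) > self.gpu_slots:
            # Offload the least recently used adapter to CPU instead of dropping it.
            old_id, old_weights = self.gpu.popitem(last=False)
            self.cpu[old_id] = old_weights
            if len(self.cpu) > self.cpu_slots:
                # Evict from CPU; the adapter can still be reloaded from disk later.
                self.cpu.popitem(last=False)


cache = TieredAdapterCache(
    gpu_slots=2,
    cpu_slots=2,
    load_from_disk=lambda adapter_id: f"weights:{adapter_id}",
)
tiers = [cache.get(adapter_id)[1] for adapter_id in ["a", "b", "a", "c", "b"]]
print(tiers)  # ['disk', 'disk', 'gpu', 'disk', 'cpu']
```

In this toy run, adapter `b` is pushed out of the fast tier when `c` arrives, so the next request for `b` is served from the CPU tier rather than reloaded from storage.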

At Predibase, we offer always-on, instantly queryable serverless endpoints for pretrained models. Now, with LoRAX and horizontal scaling, we are able to serve customers’ fine-tuned models at scale at a fraction of the overhead compared to other vendors. Specifically, we support serverless fine-tuned models for the following base models:

- Llama-2-7b

- Llama-2-7b-chat

- Llama-2-13b

- Llama-2-13b-chat

- Llama-2-70b

- Llama-2-70b-chat

- Code-llama-13b-instruct

- Code-llama-34b-instruct

- Code-llama-70b-instruct

- Mistral-7b-instruct

- Yarn-Mistral-7b-128k

- Zephyr-7b-beta

- Mixtral-8x7B-Instruct-v0.1

You can read the original LoRAX blog here and see pricing here.

Serverless Fine-tuned Endpoints Performance

Serverless fine-tuned endpoints are designed to be a production-ready solution for running inference on the fine-tuned variants of the most popular base models. We’ll be publishing benchmarks in the next few weeks. If you’re interested in seeing more general benchmarks or use-case tailored benchmarks, reach out to support@predibase.com.

Use-Case: Customer Support Triage

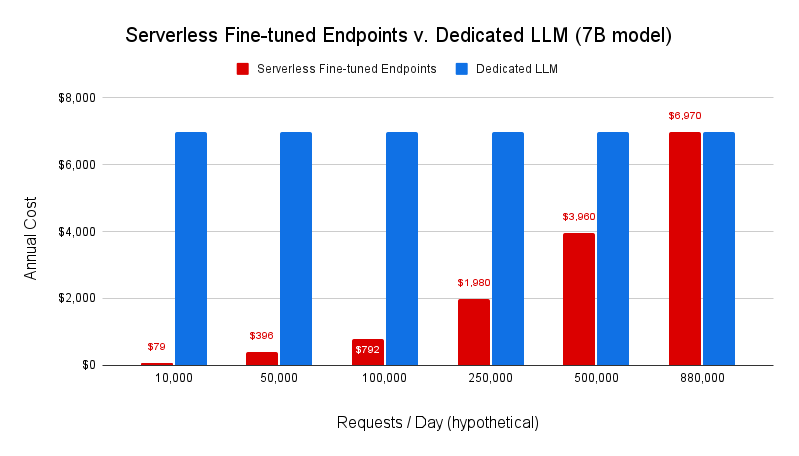

Let’s take a look at an example use-case and see how serverless fine-tuned endpoints can help us reduce the cost of running inference by over 50x.

Background: Let’s assume we’re a tech company using LLMs to automate customer support by classifying incoming support messages into a fixed set of categories. We’ve fine-tuned a mistral-7b model using Predibase on our past year’s worth of historical support tickets, analyzed the model metrics, and built a robust evaluation harness to build confidence in model quality across different scenarios. Now we’re ready to serve our model in production.

Assumptions: We’ll be comparing serverless endpoints to dedicated hardware with the following assumptions:

- Our model will receive on average 10k requests / day

- Each request has on average 100 input tokens and 10 output tokens

- Dedicated Hardware: 1x A10G

- Direct Cost of Hardware: $1.21 / hour

- Time Running: 16 hours / day

Price Comparison & Tradeoffs

Using our serverless fine-tuned endpoints, the price would be: 10k requests * 110 tokens * $0.0002 / 1k tokens = $0.22 a day, or $6.60 / month (assuming a 30-day month).

Using a dedicated A10G deployment running for 16 hours a day at $1.21 / hour, the raw compute cost would be $19.36 / day, or $580.80 / month (excluding any markup charged by the LLM platform).

That’s a whopping 88x difference between serverless endpoints and a dedicated deployment!
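The arithmetic behind this comparison can be reproduced with a short Python sketch. The function names are illustrative only, and the 30-day month is an assumption used to match the monthly figures; all prices and volumes come from the assumptions listed above.

```python
def serverless_daily_cost(requests_per_day, tokens_per_request, price_per_1k_tokens):
    """Pay-per-token cost for one day of traffic."""
    return requests_per_day * tokens_per_request * price_per_1k_tokens / 1000


def dedicated_daily_cost(hourly_rate, hours_per_day):
    """Raw compute cost for one day of a dedicated GPU, ignoring platform markup."""
    return hourly_rate * hours_per_day


DAYS_PER_MONTH = 30  # assumption used for the monthly figures

serverless = serverless_daily_cost(
    requests_per_day=10_000,
    tokens_per_request=100 + 10,  # input + output tokens
    price_per_1k_tokens=0.0002,
)
dedicated = dedicated_daily_cost(hourly_rate=1.21, hours_per_day=16)

print(f"serverless: ${serverless:.2f}/day, ${serverless * DAYS_PER_MONTH:.2f}/month")
print(f"dedicated:  ${dedicated:.2f}/day, ${dedicated * DAYS_PER_MONTH:.2f}/month")
print(f"ratio:      {dedicated / serverless:.0f}x")
```

Changing the request volume or token counts in the call shows how quickly the ratio shrinks as traffic grows.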

Keep in mind that if your use-case truly anticipates a large inference volume, then it may be more cost-effective to use a dedicated deployment. We at Predibase are believers in giving users the flexibility to make these tradeoffs based on their use-case and hope that serverless fine-tuned endpoints pave the way in providing a cost-effective option in the future of many specialized, task-specific LLMs.
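As a rough way to gauge where that tradeoff flips, the sketch below estimates the daily request volume at which serverless spend would equal the raw cost of the dedicated A10G from the example. The function name is hypothetical, and the calculation assumes the same 110 tokens per request, $0.0002 per 1k tokens, and $1.21 / hour for 16 hours a day; it does not account for platform markup or for whether a single A10G could actually sustain that volume.

```python
def break_even_requests_per_day(dedicated_cost_per_day, tokens_per_request, price_per_1k_tokens):
    """Requests per day at which per-token spend equals the dedicated compute cost."""
    cost_per_request = tokens_per_request * price_per_1k_tokens / 1000
    return dedicated_cost_per_day / cost_per_request


break_even = break_even_requests_per_day(
    dedicated_cost_per_day=1.21 * 16,  # $19.36 / day from the example
    tokens_per_request=110,
    price_per_1k_tokens=0.0002,
)
print(f"break-even volume: about {break_even:,.0f} requests/day")
```

Under these assumptions the break-even point is roughly 880,000 requests per day, 88 times the 10k requests per day in the example.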

Conclusion

Predibase is the platform for fine-tuning and serving open-source large language models. If you’re interested in trying out Serverless Fine-tuned Endpoints, sign up for our free trial and receive $25 of free credits.