Authors: Piero Molino, Travis Addair, Devvret Rishi

We’re excited to announce the availability of Ludwig version 0.4 — the open source, low-code declarative deep learning framework created and open sourced by Uber and hosted by the LF AI & Data Foundation. Ludwig enables you to apply state-of-the-art tabular, NLP, and computer vision models to your existing data and put them into production with just a few short commands.

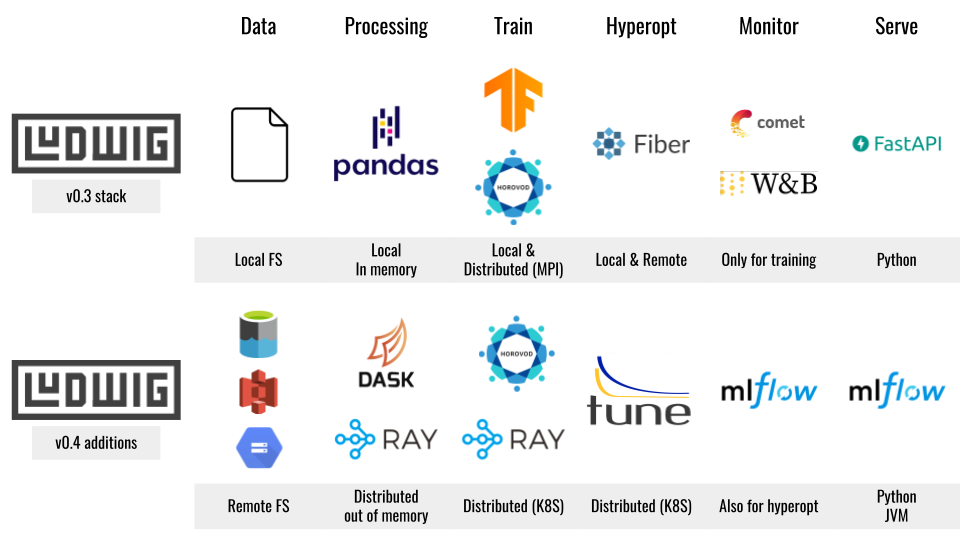

The focus of this release is to bring MLOps best practices to declarative deep learning with enhanced scalability for data processing, training, and hyperparameter search. The new features of this release include:

- Integration with Ray for large-scale distributed training that combines Dask and Horovod

- A new distributed hyperparameter search integration with Ray Tune

- The addition of TabNet as a combiner for state-of-the-art deep learning on tabular data

- MLflow integration for unified experiment tracking and model serving

- Preconfigured datasets for a wide variety of different tasks, leveraging Kaggle

Ludwig combines all of these elements into a single toolkit that guides you through machine learning end-to-end: from experimenting with different model architectures with Ray Tune, to scaling up to large out-of-memory datasets and multi-node clusters with Horovod and Ray, and finally to serving the best model in production with MLflow.


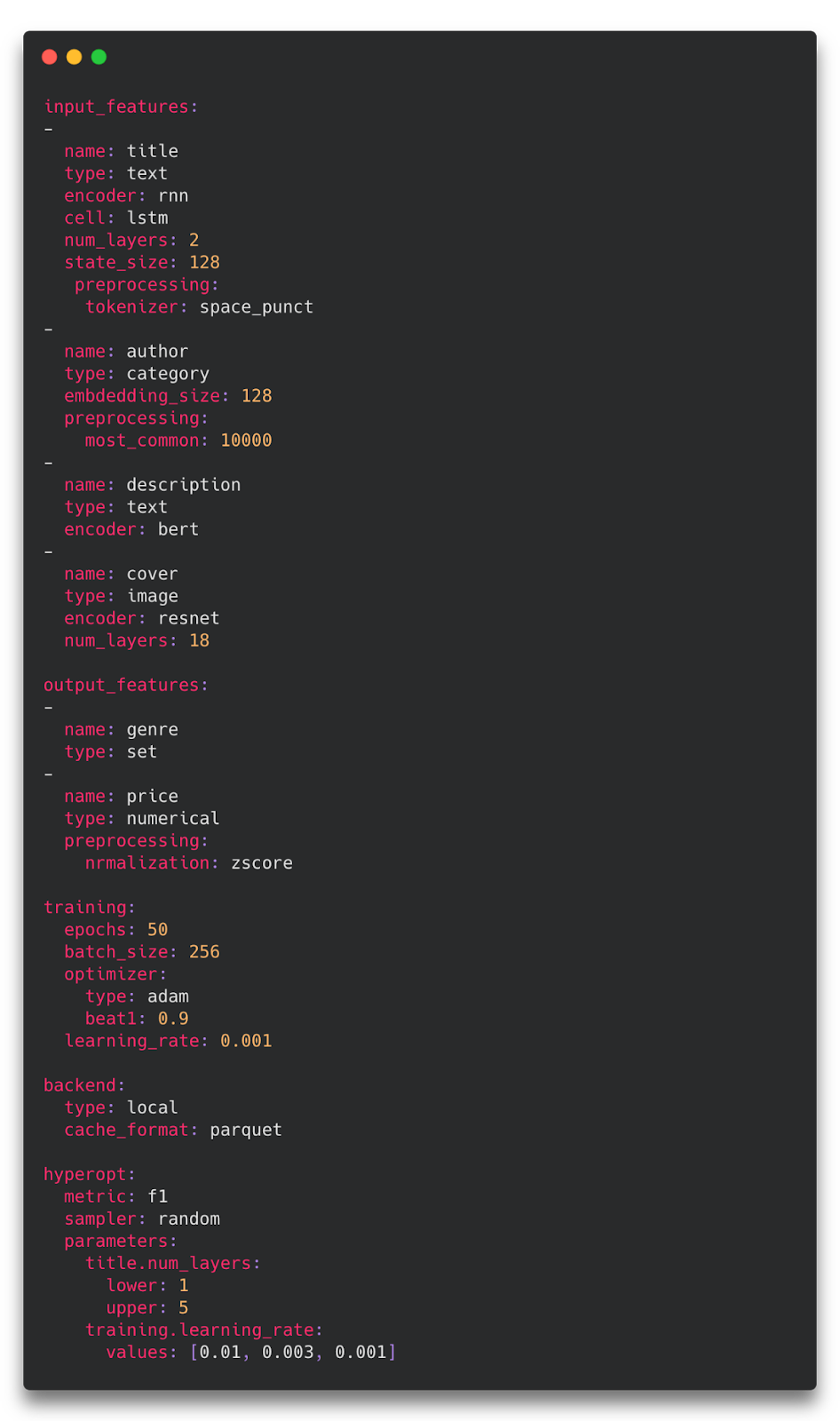

Ludwig makes this possible through its declarative approach to structuring machine learning pipelines. Instead of writing code for your model, training loop, preprocessing, postprocessing, evaluation and hyperparameter optimization, you only need to declare the schema of your data with a simple YAML configuration:
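As a minimal sketch of what such a configuration can look like, the snippet below writes one as a Python dictionary, which `LudwigModel` accepts in place of a YAML file. The feature names (`title`, `author`, `cover`, `genre`) and the `train_model` helper are hypothetical placeholders, not taken from a real dataset. Ludwig is imported lazily inside the helper so the configuration itself can be inspected without Ludwig installed.

```python
# Hypothetical schema: the column names are placeholders for your own data.
config = {
    "input_features": [
        {"name": "title", "type": "text"},
        {"name": "author", "type": "category"},
        {"name": "cover", "type": "image"},
    ],
    "output_features": [
        {"name": "genre", "type": "category"},
    ],
}


def train_model(config, dataset_path):
    """Train a model from a declarative config (requires Ludwig to be installed)."""
    from ludwig.api import LudwigModel  # imported lazily on purpose

    model = LudwigModel(config)
    # train() returns training statistics, the preprocessed data,
    # and the directory where results were written.
    train_stats, _, output_dir = model.train(dataset=dataset_path)
    return model, train_stats, output_dir
```

Nothing in the dictionary describes a model architecture or a training loop; it only names the columns and their types, and Ludwig fills in the rest with defaults.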


Starting from a simple config like the one above, any and all aspects of the model architecture, training loop, hyperparameter search, and backend infrastructure can be modified as additional fields in the declarative configuration to customize the pipeline to meet your requirements:
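To illustrate the idea that customization is purely additive, here is a small sketch that layers optional sections on top of a base configuration. The `with_overrides` helper is our own illustration, not part of Ludwig. The `combiner` and `training` section names and the parameters inside them reflect our understanding of the v0.4 configuration and should be checked against the User Guide.

```python
import copy


def with_overrides(base, overrides):
    """Return a copy of `base` with `overrides` merged in recursively."""
    merged = copy.deepcopy(base)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = with_overrides(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# Hypothetical minimal schema.
base_config = {
    "input_features": [{"name": "title", "type": "text"}],
    "output_features": [{"name": "genre", "type": "category"}],
}

# Optional sections added on top; everything not mentioned keeps its default.
custom_config = with_overrides(
    base_config,
    {
        "combiner": {"type": "concat", "num_fc_layers": 2},
        "training": {"epochs": 20, "learning_rate": 0.001},
    },
)
```

The base configuration is left untouched, so the same schema can be reused with several different sets of overrides.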

Why Declarative Machine Learning Systems?


Ludwig’s declarative approach to machine learning combines the simplicity of conventional AutoML solutions with the flexibility of full-featured frameworks like TensorFlow and PyTorch. This is achieved by creating an extensible, declarative configuration with optional parameters for every aspect of the pipeline. Ludwig’s declarative programming model allows for key features such as:

- Multi-modal, multi-task learning in zero lines of code. Mix and match tabular data, text, imagery, and even audio into complex model configurations without writing code.

- Integration with any structured data source. If it can be read into a SQL table or Pandas DataFrame, Ludwig can train a model on it.

- Easily explore different model configurations and parameters with hyperopt. Automatically track all trials and metrics with tools like Comet ML, Weights & Biases, and MLflow.

- Automatically scale training to multi-GPU, multi-node clusters. Go from training on your local machine to the cloud without code or config changes.

- Fully customize any part of the training process. Every part of the model and training process is fully configurable in YAML, and easy to extend through custom TensorFlow modules with a simple interface.

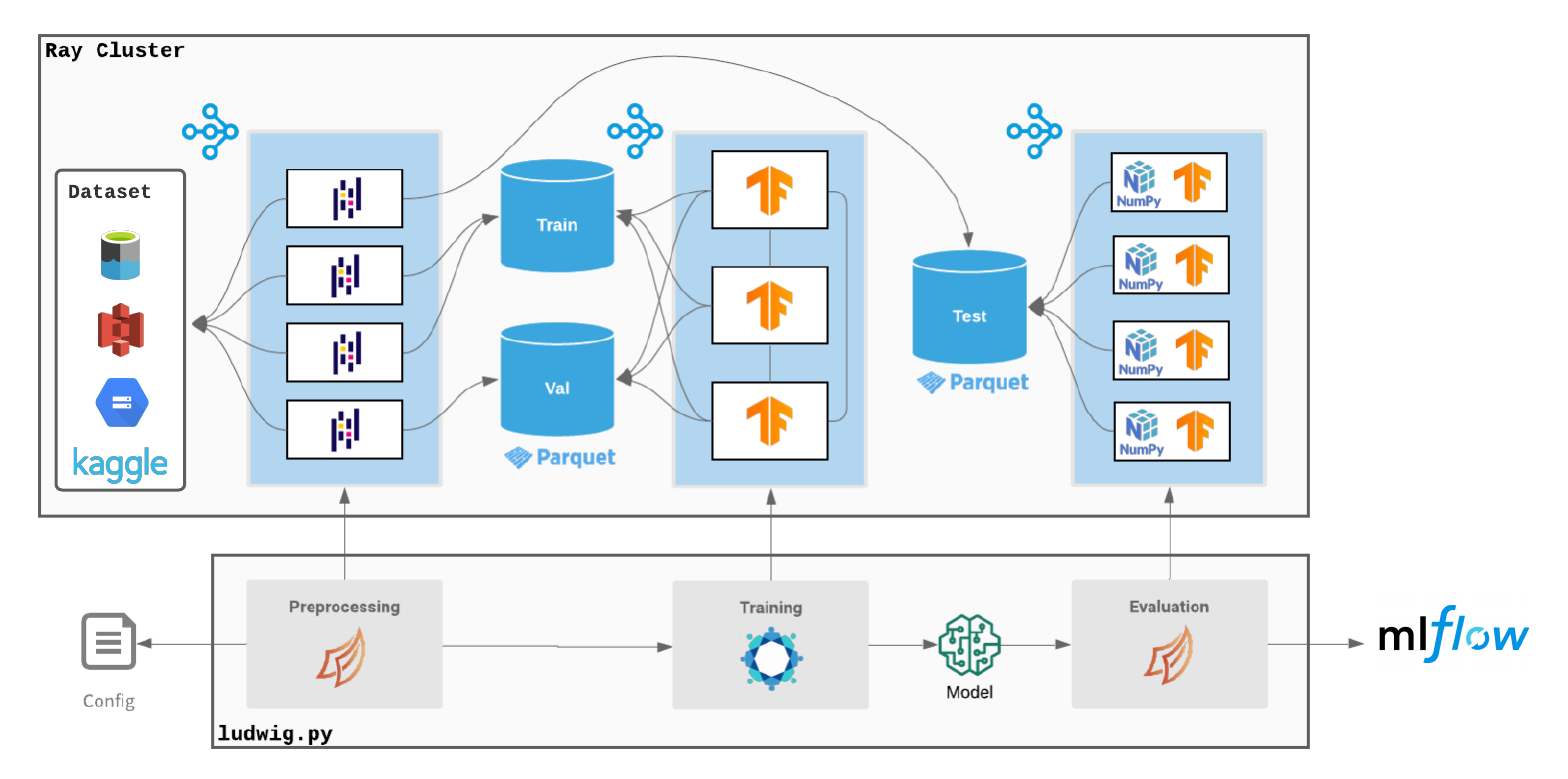

Ludwig distributed training and data processing with Ray

Ludwig on Ray is a new backend introduced in v0.4 that illustrates the power of declarative machine learning. Starting from any existing Ludwig configuration like the one above, users can scale their training process from running on their local laptop, to running in the cloud on a GPU instance, to scaling across hundreds of machines in parallel, all without changing a single line of code.

By integrating with Ray, Ludwig provides a unified way to do distributed training:

- Ray enables you to provision a cluster of machines in a single command through its cluster launcher.

- Horovod on Ray enables you to do distributed training without needing to configure MPI in your environment.

- Dask on Ray enables you to process large datasets that don’t fit in memory on a single machine.

- Ray Tune enables you to easily run distributed hyperparameter search across many machines in parallel.

All of this comes for free without changing a single line of code in Ludwig. When Ludwig detects that you’re running within a Ray cluster, the Ray backend will be enabled automatically.

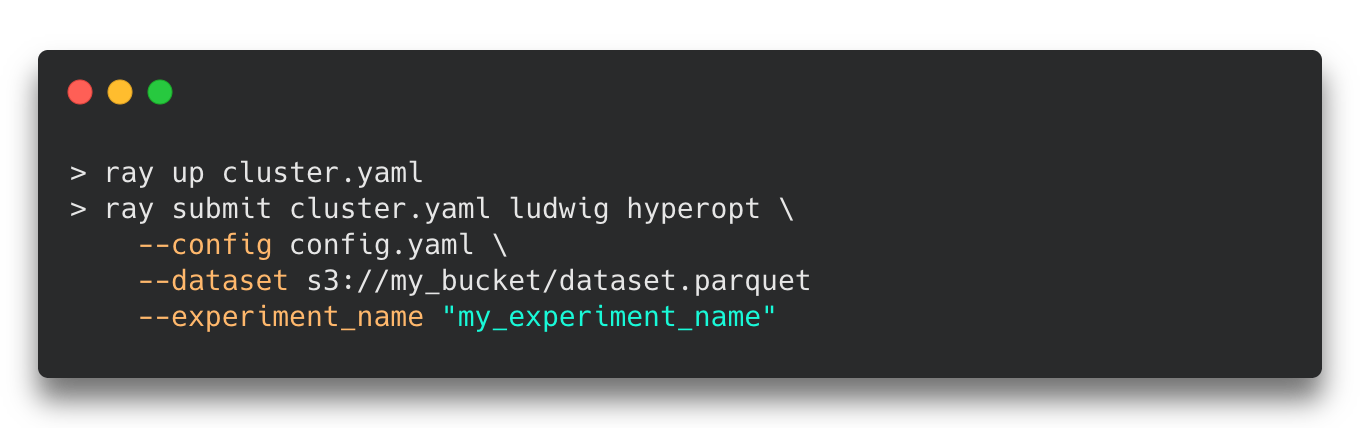

After launching a Ray cluster by running ray up on the command line, you need only ray submit your existing Ludwig training command to scale out across all the nodes in your Ray cluster.

Behind the scenes, Ludwig will do the work of determining what resources your Ray cluster has (number of nodes, GPUs, etc.) and spreading out the work to speed up the training process.

Ludwig on Ray will use Dask as a distributed DataFrame engine, allowing it to process large datasets that do not fit within the memory of a single machine. After processing the data into Parquet or TFRecord format, Ludwig on Ray will automatically spin up Horovod workers to distribute the TensorFlow training process across multiple GPUs.

To get you started, we provide Docker images for both CPU and GPU environments. These images come pre-installed with Ray, CUDA, Dask, Horovod, TensorFlow, and everything else you need to train any model with Ludwig on Ray. Just add one of these Docker images to your Ray cluster config and you can start doing large scale distributed deep learning in the cloud within minutes:

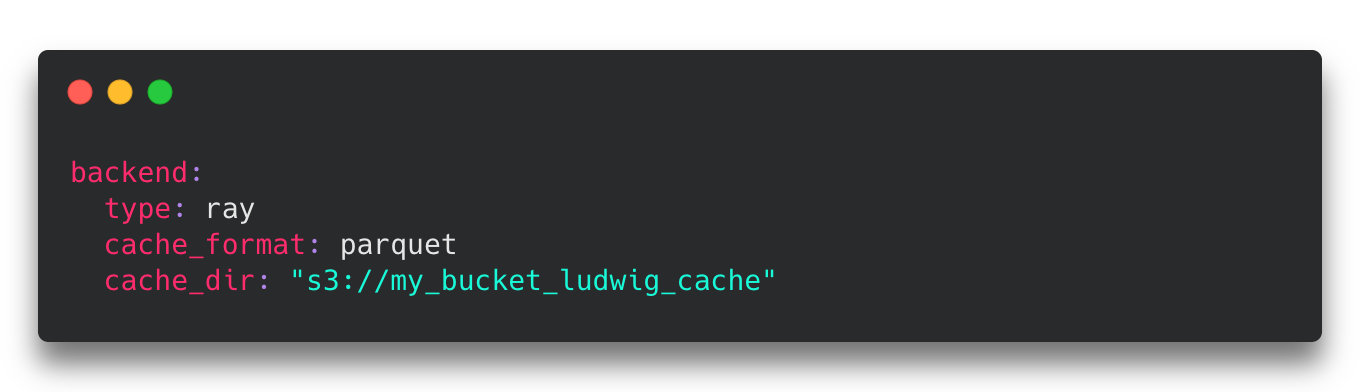

As with other aspects of Ludwig, the Ray backend can be configured through the Ludwig config YAML. For example, when running on large datasets in the cloud, it can be useful to customize the cache directory where Ludwig writes the preprocessed data to use a specific bucket in a cloud object storage system like Amazon S3:
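A rough sketch of how this might look when the configuration is built in Python follows. The `backend` section with `type` and `cache_dir` keys is an assumption based on the description above, and the bucket name and the `with_ray_backend` helper are hypothetical; consult the User Guide for the exact option names.

```python
def with_ray_backend(config, cache_dir):
    """Return a copy of `config` that requests the Ray backend and a remote cache."""
    updated = dict(config)
    # Assumed key names: `type` selects the backend, `cache_dir` is where
    # preprocessed data is written.
    updated["backend"] = {"type": "ray", "cache_dir": cache_dir}
    return updated


# Hypothetical schema and bucket.
config = {
    "input_features": [{"name": "title", "type": "text"}],
    "output_features": [{"name": "genre", "type": "category"}],
}
ray_config = with_ray_backend(config, "s3://my-bucket/ludwig-cache")
```

Because the backend is just another section of the configuration, the feature definitions are identical for local and distributed runs.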

In Ludwig v0.4, you can use cloud object storage like Amazon S3, Google Cloud Storage, Azure Data Lake Storage, and MinIO for datasets, processed data caches, config files, and training output. Just specify your filenames using the appropriate protocol and environment variables, and Ludwig will take care of the rest.

Check the Ludwig User Guide for a complete description of available configuration options.

New distributed hyperparameter search with Ray Tune

Another new feature of the 0.4 release is the ability to do distributed hyperparameter search. With this release, Ludwig users can run hyperparameter search using cutting-edge algorithms, including Population-Based Training, Bayesian Optimization, and HyperBand, among others.

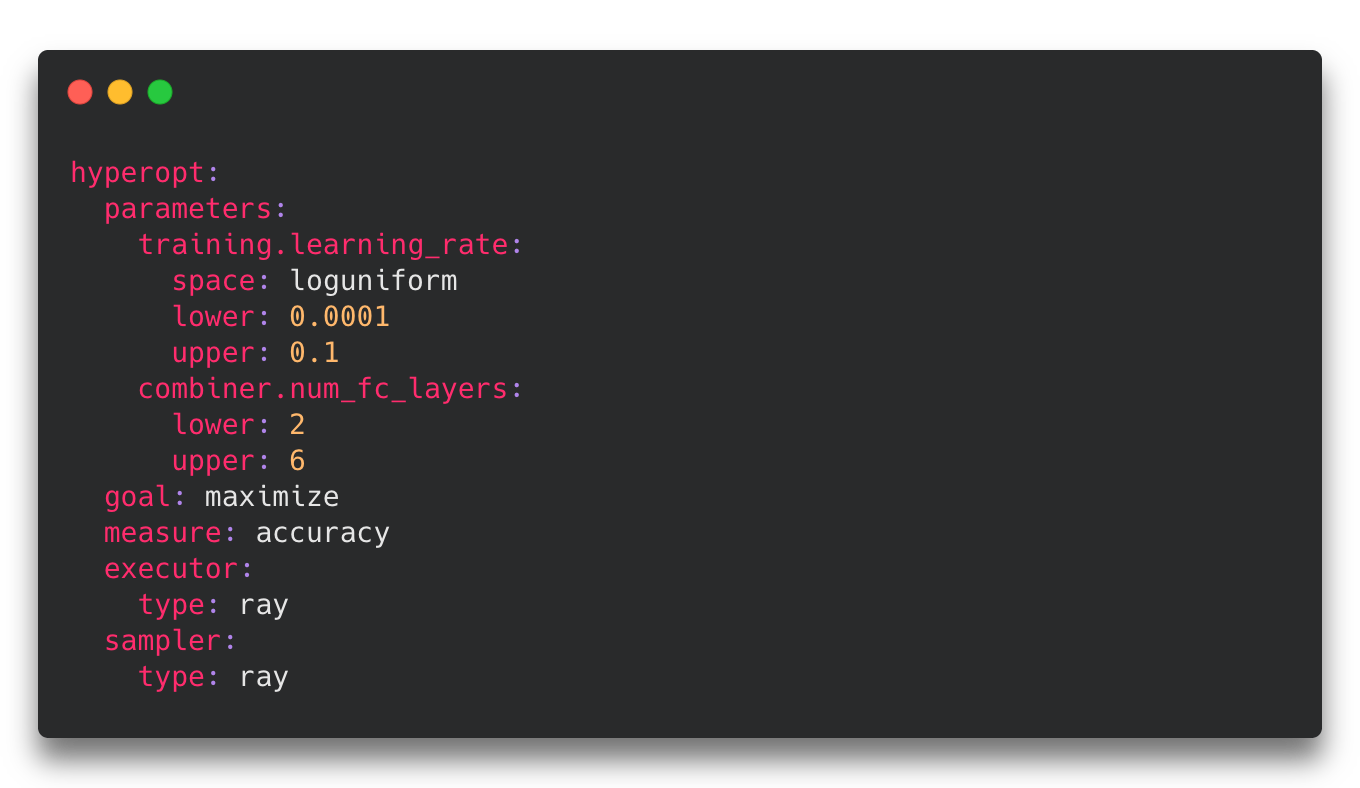

We first introduced hyperparameter search capabilities for Ludwig in v0.3, but the integration with Ray Tune — a distributed hyperparameter tuning library native to Ray — makes it possible to distribute the search process across an entire cluster of machines, and use any search algorithms provided by Ray Tune within Ludwig out-of-the-box. Through Ludwig’s declarative configuration, you can start using Ray Tune to optimize over any of Ludwig’s configurable parameters with just a few additional lines in your config file:
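The following sketch shows what those additional lines could look like when the configuration is written as a Python dictionary. The parameter paths, the `space`/`lower`/`upper` keys, and the `sampler` and `executor` sections follow our reading of the v0.4 hyperopt options and should be treated as assumptions. The feature names are hypothetical.

```python
# Search space: each key is a dotted path to a configurable parameter.
hyperopt_section = {
    "goal": "minimize",
    "metric": "loss",
    "parameters": {
        "training.learning_rate": {
            "space": "loguniform",
            "lower": 1e-4,
            "upper": 1e-1,
        },
        "combiner.num_fc_layers": {
            "space": "randint",
            "lower": 1,
            "upper": 4,
        },
    },
    # Assumed: both sampling and execution are delegated to Ray Tune.
    "sampler": {"type": "ray", "num_samples": 10},
    "executor": {"type": "ray"},
}

# Hypothetical schema with the hyperopt section attached.
config = {
    "input_features": [{"name": "title", "type": "text"}],
    "output_features": [{"name": "genre", "type": "category"}],
    "hyperopt": hyperopt_section,
}
```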

To run this on Ray across all the nodes in your cluster, you need only take the existing ludwig hyperopt command and ray submit it to the cluster:

Within the hyperopt.sampler section of the Ludwig config, you’re free to customize the hyperparameter search process with the full set of search algorithms and configuration settings provided by Ray Tune:
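As an illustration, the helper below assembles a `hyperopt.sampler` section that selects a search algorithm and a scheduler. The `ray_sampler` function is hypothetical, and the `search_alg` and `scheduler` key names are assumptions about Ludwig's schema. The values `bohb` and `hb_bohb` are the short names Ray Tune uses for its BOHB searcher and matching HyperBand scheduler.

```python
def ray_sampler(num_samples, search_alg=None, scheduler=None):
    """Build a `hyperopt.sampler` section (key names are assumptions)."""
    sampler = {"type": "ray", "num_samples": num_samples}
    if search_alg is not None:
        sampler["search_alg"] = {"type": search_alg}
    if scheduler is not None:
        sampler["scheduler"] = dict(scheduler)
    return sampler


sampler = ray_sampler(
    num_samples=20,
    search_alg="bohb",
    scheduler={
        "type": "hb_bohb",
        "time_attr": "training_iteration",
        "reduction_factor": 4,
    },
)
```

Leaving out `search_alg` and `scheduler` yields a sampler section with only the trial count, which is a reasonable starting point before tuning the search itself.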

State-of-the-Art Tabular Models with TabNet

The first version of Ludwig, released in 2019, supported tabular datasets using a concat combiner that implements the Wide and Deep learning architecture. When users specify numerical, category, and binary feature types, the concat combiner concatenates the features together and builds a stack of fully connected layers on top of them.

In this release we are extending Ludwig’s support for tabular data by adding a new TabNet combiner. TabNet is a state-of-the-art deep learning model architecture for tabular data that uses sparsity and multiple steps of feature transformations and attention to achieve high performance. The Ludwig implementation allows users to also use feature types other than the classic tabular ones as inputs.

Training a TabNet model is as easy as specifying a tabnet combiner and providing its hyperparameters in the Ludwig configuration.
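A sketch of such a configuration, written as a Python dictionary, is shown here. The column names are hypothetical, and the hyperparameter names under `combiner` (`size`, `output_size`, `num_steps`, `relaxation_factor`) are assumptions about the TabNet combiner's options; the values are illustrative, not tuned.

```python
tabnet_config = {
    # Hypothetical tabular columns using the classic tabular feature types.
    "input_features": [
        {"name": "age", "type": "numerical"},
        {"name": "occupation", "type": "category"},
        {"name": "is_member", "type": "binary"},
    ],
    "output_features": [
        {"name": "churned", "type": "binary"},
    ],
    # Switching architectures only requires changing the combiner section.
    "combiner": {
        "type": "tabnet",
        "size": 32,
        "output_size": 32,
        "num_steps": 5,
        "relaxation_factor": 1.5,
    },
}
```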

We compared the performance achieved by the Ludwig TabNet implementation with the performance reported in the original paper, where the authors trained for longer and performed hyperparameter optimization, and confirmed it can achieve very comparable results in minimal time even when trained locally, as shown in the table below.

In addition to TabNet, we also added a new Transformer based combiner and improved upon the existing concat combiner by supporting optional skip connections. These additions make Ludwig a powerful and flexible option for training deep learning models on tabular data.

Experiment Tracking and Model Serving with MLflow

MLflow is an open source experiment tracking and model registry system.

Ludwig v0.4 introduces first-class support for tracking Ludwig train, experiment, and hyperopt runs in MLflow with just a single extra command-line argument: --mlflow.

The experiment_name you provide to Ludwig will map directly to an experiment in MLflow so you can organize multiple training or hyperopt runs together.

This functionality is also exposed in the Python API through a single callback:
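A minimal sketch of the callback in use follows. The import path `ludwig.contribs.mlflow.MlflowCallback` is our understanding of where the callback lives in v0.4 and should be verified against your installed version; the `train_with_mlflow` wrapper is hypothetical. Both Ludwig and MLflow must be installed for the function to run, which is why the imports sit inside it.

```python
def train_with_mlflow(config, dataset_path, experiment_name):
    """Train a Ludwig model while logging the run to MLflow."""
    from ludwig.api import LudwigModel
    from ludwig.contribs.mlflow import MlflowCallback  # assumed import path

    # The callback is passed at construction time; training code is unchanged.
    model = LudwigModel(config, callbacks=[MlflowCallback()])
    # experiment_name is what groups runs together in MLflow.
    model.train(dataset=dataset_path, experiment_name=experiment_name)
    return model
```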

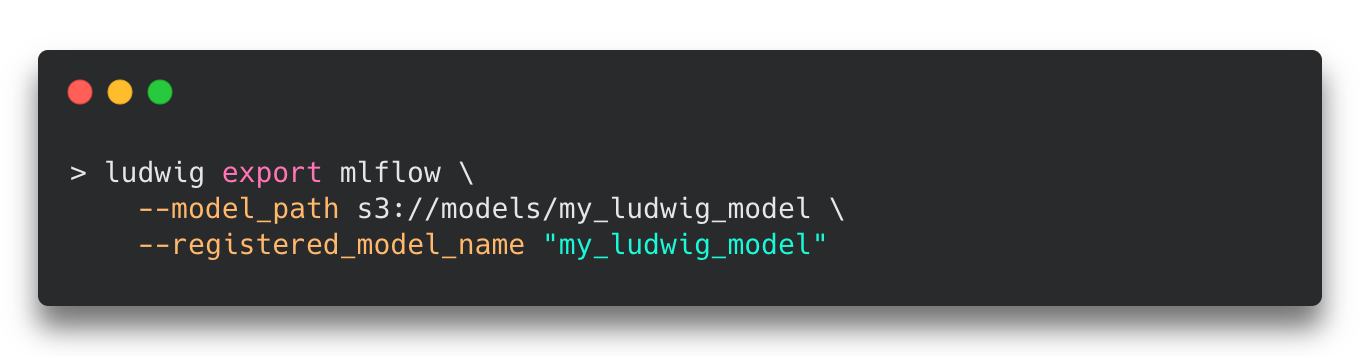

In addition to tracking experiment results, MLflow can also be used to store and serve models in production. Ludwig v0.4 makes it easy to take an existing Ludwig model (either saved as a directory or in an MLflow experiment) and register it with the MLflow model registry:

The Ludwig model will be converted automatically to MLflow’s pyfunc model format, allowing it to be executed in a framework-agnostic way through a REST endpoint, Spark UDF, Python API with Pandas, etc.
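For the Python-with-Pandas route, a registered model can be loaded through MLflow's generic `mlflow.pyfunc.load_model` function. The sketch below assumes a model has already been registered; the model name, the version, and both helper functions are hypothetical, and MLflow and Pandas are imported lazily.

```python
def registry_uri(model_name, version):
    """Build an MLflow model registry URI of the form models:/<name>/<version>."""
    return f"models:/{model_name}/{version}"


def predict_with_registered_model(model_name, version, rows):
    """Load a registered model as a generic pyfunc model and predict on `rows`."""
    import mlflow.pyfunc
    import pandas as pd

    model = mlflow.pyfunc.load_model(registry_uri(model_name, version))
    # pyfunc models take a DataFrame, so no Ludwig-specific code is needed here.
    return model.predict(pd.DataFrame(rows))
```

The calling code never imports Ludwig directly, which is the practical meaning of "framework-agnostic" here.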

Preconfigured datasets from Kaggle

Since its initial release, Ludwig has required datasets to be provided in tabular form, with a header containing names that can be referenced from the configuration file. In order to make it easy to get started with applying Ludwig to popular datasets and tasks, we’ve added a new datasets module in v0.4 that allows you to download datasets, process them into a tabular format ready for use with Ludwig, and load them into a DataFrame for training in a single line of code.

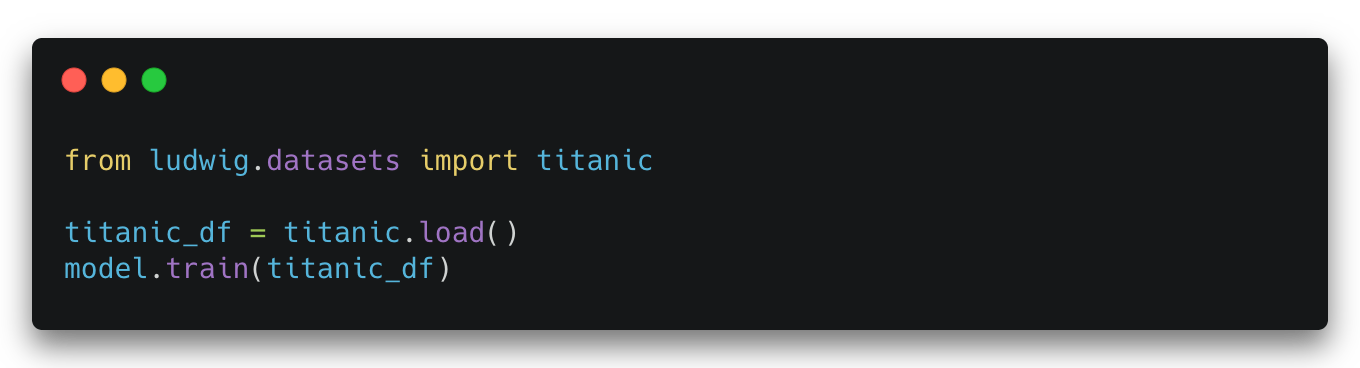

The Ludwig datasets module integrates with the Kaggle API to provide instant access to popular datasets used in Kaggle competitions. In v0.4, we provide access to popular competition datasets like Titanic, Rossmann Store Sales, Ames Housing and more. Here is an example of how to load the Titanic dataset:
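A sketch of how this might look is given below. The `ludwig.datasets.titanic` module and its `load(split=True)` call reflect our understanding of the v0.4 datasets API, and the structure of the returned splits should be checked against the User Guide. The column names are those of the Kaggle Titanic competition, and downloading requires Kaggle API credentials to be configured.

```python
def load_titanic_splits():
    """Download (if needed) and load the Titanic dataset as DataFrame splits."""
    from ludwig.datasets import titanic  # assumed module path in v0.4

    # With split=True the loader returns one DataFrame per split.
    return titanic.load(split=True)


# A small config using a subset of the Kaggle Titanic columns.
titanic_config = {
    "input_features": [
        {"name": "Pclass", "type": "category"},
        {"name": "Sex", "type": "category"},
        {"name": "Age", "type": "numerical"},
        {"name": "Fare", "type": "numerical"},
    ],
    "output_features": [
        {"name": "Survived", "type": "binary"},
    ],
}
```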

Adding a new dataset is straightforward and just requires extending the Dataset abstract class and implementing minimal data manipulation code. This has allowed us to quickly expand the set of supported datasets to include SST, MNIST, Amazon Review, Yahoo Answers and many more. For a full list of the available datasets, please check the User Guide. We encourage you to contribute your own favorite datasets!

What’s Next?

Our goal is to make machine learning easier and more accessible to a broader audience. We’re excited to continue to pursue this goal with features for Ludwig in the pipeline, including:

- End-to-end AutoML with Neural Architecture Search — Offload part or all of the work of picking the optimal search strategy, tuning parameters, and choosing encoders/combiners/decoders for your given dataset and resources during model training.

- Combined hyperopt & distributed training — Jointly run hyperopt and distributed training to find the best model within a provided time constraint.

- Pure TensorFlow low-latency serving — Leverage a flexible and high-performance serving system designed for production machine learning environments using TensorFlow Serving.

- PyTorch backend — Write custom Ludwig modules using all your favorite frameworks and take advantage of the rich ecosystem each provides.

We hope that these new capabilities will make it easier for our community to continue to build state-of-the-art models. If you are as excited about this direction as we are, join our community and get involved! We are building this open source project together. We’ll keep pushing toward a release of Ludwig v0.5, and we welcome contributions from anyone who is excited to see this happen!

We also recognize that for many organizations, success with machine learning means solving many challenges end-to-end: from connecting to and accessing data, to training and deploying model pipelines, and then making those models easily available to the rest of the organization. That’s why we’re also excited to announce that we are building a new solution called Predibase, a cohesive enterprise platform built on top of Ludwig, Horovod, and Ray to help realize the vision of making machine learning easier and more accessible. We’ll be sharing more details soon. If you’d like to get in touch in the meantime, please feel free to reach out to us at team@predibase.com (we are hiring!).

We really hope that you find the new features in Ludwig v0.4 exciting, and want to thank our amazing community for the contributions and requests. Please drop us a comment or email with any feedback, and happy training!

To get involved, join our community on Slack and follow us on Twitter!

Acknowledgements

A lot of work went into Ludwig v0.4, and we want to thank everyone who contributed and helped, in particular the main contributors and community members for this release: our co-maintainer Jim Thompson, Saikat Kanjilal, Avanika Narayan, Nimit Sohoni, Kanishk Kalra, Michael Zhu, Elias Castro-Hernandez, Debbie Yuen, and Victor Dai. Special thanks for the immense support from Stanford’s Hazy Research group led by Prof. Chris Ré, to Richard Liaw, Hao Zhang and Micheal Chau from the Ray team, and to the LF AI & Data staff.