Authors: Justin Zhao, Shreya Rajpal, Daniel Treiman, Jim Thompson, Travis Addai, Piero Molino

Ludwig is an open-source declarative deep learning framework that enables individuals from a variety of backgrounds to train and deploy state-of-the-art tabular, natural language processing, and computer vision models.

Ludwig 0.5 is a complete renovation of Ludwig from the ground up with a focus on scalability, deployment, reliability, and documentation. Ludwig v0.5 migrates our entire backend from TensorFlow to PyTorch and introduces several new features and technical improvements, including:

- Step-based training and evaluation to enable frequent sub-epoch monitoring of model health and evaluation metrics. This is particularly useful when training on large datasets, where it provides feedback more quickly.

- Data balancing: upsampling and downsampling during preprocessing to train on better proportioned datasets.

- End-to-end TorchScript export to support low-level optimized model deployment, including preprocessing and postprocessing, to go directly from raw examples to predictions.

- Ludwig on Ray with Ray Datasets, enabling significant training speed boosts when reading large datasets while training Ludwig models on a Ray cluster.

- The addition of MLPMixer and ViTEncoder as image encoders for state-of-the-art deep learning on image data.

- AutoML for tabular and text classification, integrated with distributed hyperparameter search using RayTune.

- Scalability optimizations with Dask, Modin, and Ray, enabling Ludwig to preprocess, train, and evaluate over datasets hundreds of gigabytes in size in tens of minutes.

- Config validation using marshmallow schemas, which reveals configuration typos or bad values early and increases reliability.

- More tests. We’ve quadrupled the number of unit tests and end-to-end integration tests and we’ve expanded our CI testing to run in distributed and GPU settings. This strengthens Ludwig’s stability and reliability.

- Export to NVIDIA Triton. Deploy Ludwig models directly to Triton servers.

- Consolidate hyperopt around RayTune with the ability to stop and resume hyperopt runs.

- Integration with Aim for experiment tracking. Use Aim to log Ludwig training runs, and use a beautiful UI to compare them and Aim APIs to query them programmatically.

Our team at Predibase and the entire open source Ludwig community are thoroughly invested in improving the declarative ML experience, and, as part of the v0.5 release, we’ve revamped the getting started guide, user guide, and developer documentation. We’ve also published new end-to-end tutorials with thoroughly documented notebooks on text, tabular, image, and multimodal classification that provide a deep walkthrough of Ludwig’s functionality.

Migrating to PyTorch

Ludwig’s migration to PyTorch was a substantial six-month undertaking involving 230+ commits, changes to 70k+ lines of code, and contributions from 40+ people. This culminated in an article on the official PyTorch blog describing Ludwig on PyTorch in more detail.

PyTorch’s pythonic design and emphasis on developer experience are well-aligned with Ludwig’s principles of simplicity, modularity, and extensibility. Switching to PyTorch as Ludwig’s backend was strongly motivated by the productivity gains in development, debugging, and iteration that PyTorch’s more pythonic API affords us, as well as by the great ecosystem the PyTorch community has built around it. With Ludwig on PyTorch, we’re thrilled to see what developers, researchers, and data scientists in the PyTorch and broader deep learning community can bring to Ludwig.

Feature and performance parity

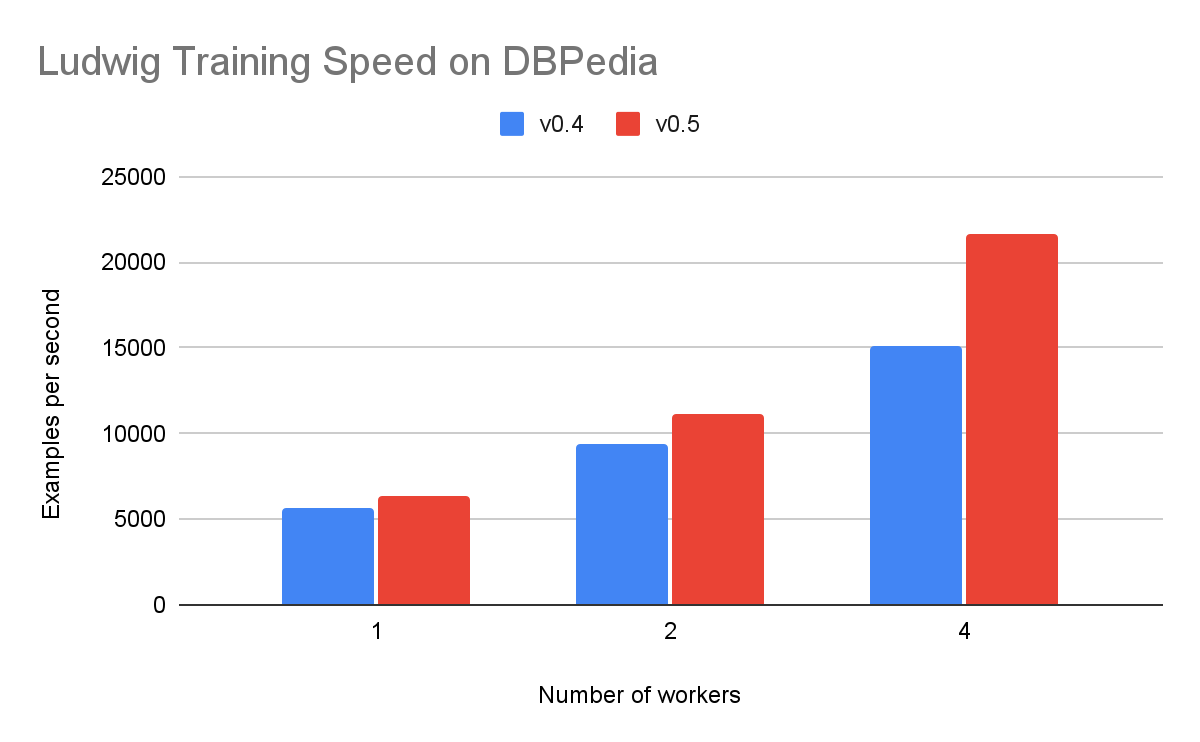

The number of examples per second training a model on the DBPedia dataset (text classification) on a single machine and using distributed training across multiple workers. While the training speed is similar between v0.4 and v0.5 on a single worker, as we add more workers, training speed increases significantly in PyTorch.

Over the last several months, we’ve moved all Ludwig encoders, combiners, decoders, and metrics for every data modality that Ludwig supports, as well as all of the backend infrastructure on Horovod and Ray, to PyTorch.

At the same time, we wanted to make sure that the experience of Ludwig users continues to be performant and delightful. We’ve run extensive comparisons between Ludwig v0.5 (PyTorch-based) and Ludwig v0.4 on text, image, and tabular datasets, evaluating training speed, inference throughput, and model performance, to verify that there’s been no degradation.

Our results, published on the official PyTorch blog, reveal roughly the same high GPU utilization (~90%) on several datasets, with significant improvements in distributed training speed and memory usage, without impacting model accuracy or time to convergence.

New features

In addition to the PyTorch migration, Ludwig v0.5 is packed with new functionality, features, and additional changes that make v0.5 the most feature-rich and robust release of Ludwig yet.

Step-based training and evaluation

Ludwig’s train loop is epoch-based by default, with one round of evaluation per epoch (one pass through the dataset).

```python
# Schematic of the default epoch-based loop; the helpers are placeholders.
for epoch in range(num_epochs):
    for batch in training_data.batches:
        train(batch)
    save_model(model_dir)
    evaluation(training_data)
    evaluation(validation_data)
    evaluation(test_data)
    print_results()
```

This is an appropriate approach for small datasets that fit in memory and train quickly. However, when training large models on big unstructured datasets, it results in infrequent assessments of model performance. With step-based training and evaluation, users can configure a more frequent, sub-epoch evaluation cadence to monitor metrics and model health more regularly.

Use steps_per_checkpoint to run evaluation every N training steps:

```yaml
trainer:
  steps_per_checkpoint: 1000
```

Or use checkpoints_per_epoch to run evaluation N times per epoch:

```yaml
trainer:
  checkpoints_per_epoch: 4
```

Note that it is invalid to specify both checkpoints_per_epoch and steps_per_checkpoint simultaneously.
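To make the two options concrete, here is a minimal Python sketch that lists the global training steps at which evaluation would run under each setting. The helper `evaluation_steps` is hypothetical and is not Ludwig's trainer code; it assumes evaluation fires every fixed number of global steps and that a value of 0 means an option is unset.

```python
from typing import List


def evaluation_steps(
    steps_per_epoch: int,
    num_epochs: int,
    steps_per_checkpoint: int = 0,
    checkpoints_per_epoch: int = 0,
) -> List[int]:
    """Return the global steps after which evaluation would run (hypothetical helper)."""
    if steps_per_checkpoint and checkpoints_per_epoch:
        # The two options are mutually exclusive, as noted above.
        raise ValueError("set steps_per_checkpoint or checkpoints_per_epoch, not both")
    if checkpoints_per_epoch:
        # N evaluations per epoch, rounded down to a whole number of steps.
        interval = max(1, steps_per_epoch // checkpoints_per_epoch)
    elif steps_per_checkpoint:
        # One evaluation every N training steps.
        interval = steps_per_checkpoint
    else:
        # Default epoch-based behavior: one evaluation at the end of each epoch.
        interval = steps_per_epoch
    total_steps = steps_per_epoch * num_epochs
    return list(range(interval, total_steps + 1, interval))


print(evaluation_steps(1000, 1, steps_per_checkpoint=250))  # [250, 500, 750, 1000]
print(evaluation_steps(1000, 1, checkpoints_per_epoch=4))   # [250, 500, 750, 1000]
print(evaluation_steps(1000, 2))                            # [1000, 2000]
```

With 1,000 steps per epoch, `steps_per_checkpoint=250` and `checkpoints_per_epoch=4` produce the same cadence in this sketch, while leaving both unset falls back to one evaluation per epoch.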

To further speed up evaluation, users can skip evaluation on the training set by setting evaluate_training_set to False.

```yaml
trainer:
  evaluate_training_set: false
```

Data balancing

Users working with imbalanced datasets for binary classification can specify an oversampling or undersampling parameter which will balance the training set during preprocessing.
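The sketch below illustrates random over- and undersampling of the training set for a binary label in plain Python. It is a hypothetical helper, not Ludwig's preprocessing code, and it reads each parameter as the share of the resulting dataset held by the resampled class, which is how the examples in this section phrase it; Ludwig's own ratio semantics may differ, so check the preprocessing docs before relying on exact counts.

```python
import math
import random
from collections import Counter
from typing import Dict, List, Tuple

Rows = List[Dict]


def _split(rows: Rows, label: str) -> Tuple[Rows, Rows]:
    """Split rows of a binary label into (majority_rows, minority_rows)."""
    (majority, _), (minority, _) = Counter(row[label] for row in rows).most_common(2)
    return ([r for r in rows if r[label] == majority],
            [r for r in rows if r[label] == minority])


def oversample_minority(rows: Rows, label: str, share: float, seed: int = 0) -> Rows:
    """Duplicate random minority rows until they make up `share` of the result."""
    rng = random.Random(seed)
    majority_rows, minority_rows = _split(rows, label)
    # Solve m / (m + M) = share for m, the desired minority count.
    wanted = math.ceil(share * len(majority_rows) / (1 - share))
    extra = [rng.choice(minority_rows) for _ in range(wanted - len(minority_rows))]
    return majority_rows + minority_rows + extra


def undersample_majority(rows: Rows, label: str, share: float, seed: int = 0) -> Rows:
    """Drop random majority rows until they make up `share` of the result."""
    rng = random.Random(seed)
    majority_rows, minority_rows = _split(rows, label)
    # Solve M / (M + m) = share for M, the desired majority count.
    wanted = math.floor(share * len(minority_rows) / (1 - share))
    kept = rng.sample(majority_rows, min(wanted, len(majority_rows)))
    return kept + minority_rows


# Only the training split would be resampled: 90 rows of class 0, 10 of class 1.
train = [{"x": i, "y": int(i >= 90)} for i in range(100)]
print(Counter(r["y"] for r in oversample_minority(train, "y", 0.5)))
print(Counter(r["y"] for r in undersample_majority(train, "y", 0.7)))
```

On this toy split, a minority share of 0.5 duplicates minority rows until both classes have 90 rows, and a majority share of 0.7 keeps 23 randomly chosen majority rows alongside the 10 minority rows.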

In this example, Ludwig will oversample the minority class to achieve a 50% representation in the overall dataset.

```yaml
preprocessing:
  oversample_minority: 0.5
```

In this example, Ludwig will undersample the majority class to achieve a 70% representation in the overall dataset.

```yaml
preprocessing:
  undersample_majority: 0.7
```

Data subsampling

When developing models, it can be useful to iterate quickly with a smaller portion of the dataset. Ludwig supports this with a new preprocessing parameter, sample_ratio, which subsamples the dataset.

```yaml
preprocessing:
  sample_ratio: 0.3
```

End-to-end TorchScript

Users can export trained Ludwig models to TorchScript with ludwig export_torchscript.

```shell
ludwig export_torchscript --model_path=/path/to/model
```

Models that use binary, number, category, and text features now support TorchScript-compatible preprocessing, enabling end-to-end TorchScript compilation.

```python
import torch

# `model` is a trained LudwigModel whose inputs are these four features.
inputs = {
    'cat_feature': ['foo', 'bar'],
    'num_feature': torch.tensor([42, 7]),
    'bin_feature1': torch.tensor([True, False]),
    'bin_feature2': ['No', 'Yes'],
}

# Script the full pipeline (preprocessing, model, postprocessing) and run it.
scripted_model = model.to_torchscript()
output = scripted_model(inputs)
```

End-to-end TorchScript compilation is also supported for text features that use TorchScript-enabled torchtext tokenizers. We are actively working on adding support for other data types.

AutoML for text classification

In v0.4, we introduced experimental AutoML functionalities into Ludwig.

Ludwig AutoML automatically creates deep learning models given a dataset, its label column, and a time budget. Ludwig AutoML infers the input and output feature types, chooses the model architecture, and specifies the parameters and ranges across which to perform hyperparameter search.

```python
import ludwig.automl

# my_dataset_df: the training data (e.g. a pandas DataFrame);
# target_column_name: the name of its label column.
auto_train_results = ludwig.automl.auto_train(
    dataset=my_dataset_df,
    target=target_column_name,
    time_limit_s=7200,  # time budget in seconds (2 hours)
    tune_for_memory=False,
)
```

Our initial AutoML work focused on tabular datasets, since good performance on such datasets is a current area of interest in the deep learning community. In v0.5, we expand on this work to develop and validate Ludwig AutoML for text classification.
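One step AutoML automates is inferring each column's feature type. The toy heuristic below is purely illustrative: `infer_feature_type` and its rules and thresholds are hypothetical assumptions, not the logic Ludwig AutoML actually uses. It guesses a Ludwig-style type (binary, number, category, or text) from a column's string values, as they might be read from a CSV file.

```python
from typing import List


def _is_number(value: str) -> bool:
    try:
        float(value)
        return True
    except ValueError:
        return False


def infer_feature_type(values: List[str], max_categories: int = 10) -> str:
    """Guess a Ludwig-style feature type for one column of string values.

    Toy, hypothetical rules for illustration; not Ludwig AutoML's logic.
    """
    distinct = {v.strip().lower() for v in values if v.strip()}
    if distinct and len(distinct) <= 2 and distinct <= {"0", "1", "true", "false", "yes", "no"}:
        return "binary"
    if distinct and all(_is_number(v) for v in distinct):
        return "number"
    # Few distinct values, or mostly single words, look categorical.
    avg_words = sum(len(v.split()) for v in values) / max(len(values), 1)
    if len(distinct) <= max_categories or avg_words < 2:
        return "category"
    return "text"


print(infer_feature_type(["yes", "no", "yes"]))             # binary
print(infer_feature_type(["3.5", "7", "42"]))               # number
print(infer_feature_type(["red", "green", "blue", "red"]))  # category
reviews = [f"review {i} was pretty good" for i in range(20)]
print(infer_feature_type(reviews))                          # text
```

A real system would also look at the target column to pick the output feature and then choose encoders and hyperparameter ranges; this sketch only covers the type guess.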

Config validation against marshmallow schemas

The combiner and trainer sections of Ludwig configurations are now validated against official Marshmallow schemas. This centralizes documentation, flags configuration typos or bad values, and helps catch regressions. Other parts of the Ludwig configurations will be covered soon.
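Ludwig's schemas are written with marshmallow. To keep the example dependency-free, the sketch below swaps marshmallow for plain Python and only mimics the idea: each key in a hypothetical mini-schema for the trainer section declares an expected type and allowed values, and unknown keys are reported with a close-match suggestion from `difflib`. The schema contents and `validate_trainer` are assumptions for illustration, not Ludwig's actual trainer schema.

```python
import difflib
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical mini-schema for three trainer keys mentioned in this post:
# key -> (expected type, predicate on allowed values).
TRAINER_SCHEMA: Dict[str, Tuple[type, Callable[[Any], bool]]] = {
    "steps_per_checkpoint": (int, lambda v: v >= 0),
    "checkpoints_per_epoch": (int, lambda v: v >= 0),
    "evaluate_training_set": (bool, lambda v: True),
}


def validate_trainer(section: Dict[str, Any]) -> List[str]:
    """Return a list of error messages; an empty list means the section passed."""
    errors = []
    for key, value in section.items():
        if key not in TRAINER_SCHEMA:
            # Unknown keys are usually typos, so suggest the closest known key.
            hint = difflib.get_close_matches(key, list(TRAINER_SCHEMA), n=1)
            suggestion = f" (did you mean {hint[0]!r}?)" if hint else ""
            errors.append(f"unknown key {key!r}{suggestion}")
            continue
        expected, is_allowed = TRAINER_SCHEMA[key]
        # bool is a subclass of int in Python, so reject True/False for int fields.
        wrong_type = not isinstance(value, expected) or (expected is int and isinstance(value, bool))
        if wrong_type:
            errors.append(f"{key!r} must be {expected.__name__}, got {value!r}")
        elif not is_allowed(value):
            errors.append(f"{key!r} has invalid value {value!r}")
    return errors


print(validate_trainer({"steps_per_checkpoint": 1000, "evaluate_training_set": False}))  # []
print(validate_trainer({"steps_per_checkpoit": 1000, "checkpoints_per_epoch": -1}))
```

The second call reports both the misspelled key (with a suggestion) and the negative value, which is the kind of early feedback schema validation is meant to give.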

Better test coverage

We’ve quadrupled the number of unit and integration tests and we’ve established new testing guidelines for well-tested contributions going forward. We’ve also expanded our CI testing to run in distributed and GPU settings. This strengthens Ludwig’s stability and reliability, and makes it easier for new contributors to submit PRs with confidence.

Export to Triton

Export a Ludwig model in TorchScript format, together with a config for Triton serving:

```shell
ludwig export_triton \
  --model_path=/path/to/model \
  --model_name="my model" \
  --model_version=1.0 \
  --output_path=.
```

Integration with Aim for experiment tracking

Thanks to the contribution from Erik Arakelyan, Ludwig now also integrates with Aim for experiment tracking. Aim enables users to log Ludwig training runs, compare them in a beautiful UI, and query them programmatically through its APIs.

Backward compatibility

Despite all of the code changes, we’ve worked hard to ensure that Ludwig’s simple interface remains consistent and compatible with earlier releases as much as possible. A few minor parameter naming changes in the Ludwig configuration to be aware of:

+------------------------+------------------------------+
| old config name        | new config name              |
+------------------------+------------------------------+
| training               | trainer                      |
| numeric                | number                       |
| fc_size                | output_size                  |
| tied_weights           | tied                         |
| bias                   | use_bias                     |
| weight_regularizer     | regularization_{lambda/type} |
| bias_regularizer       | regularization_{lambda/type} |
| activation_regularizer | regularization_{lambda/type} |
+------------------------+------------------------------+

The hyperopt section has also been refactored to align with RayTune’s naming conventions.
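For older configs, most renames in the table above can be applied mechanically. The helper below is a hypothetical sketch, not Ludwig's own upgrade logic: it renames the top-level training section and the nested keys from the table, maps the numeric feature type to number, and leaves the regularizer options and the hyperopt section untouched because they do not map one-to-one.

```python
from typing import Any, Dict

# Renames taken from the table above. The regularizer options map onto
# regularization_{lambda/type} rather than a single new key, so they are omitted.
NESTED_KEY_RENAMES = {"fc_size": "output_size", "tied_weights": "tied", "bias": "use_bias"}
TYPE_RENAMES = {"numeric": "number"}  # old feature type -> new feature type


def _rename(node: Any) -> Any:
    """Recursively copy `node`, renaming deprecated keys and feature types."""
    if isinstance(node, dict):
        renamed = {}
        for key, value in node.items():
            if key == "type" and isinstance(value, str):
                value = TYPE_RENAMES.get(value, value)
            renamed[NESTED_KEY_RENAMES.get(key, key)] = _rename(value)
        return renamed
    if isinstance(node, list):
        return [_rename(item) for item in node]
    return node


def upgrade_config(config: Dict[str, Any]) -> Dict[str, Any]:
    """Return an upgraded copy of a v0.4-style config (hypothetical helper)."""
    upgraded = _rename(config)
    if "training" in upgraded:
        # The top-level `training` section is now called `trainer`.
        upgraded["trainer"] = upgraded.pop("training")
    return upgraded


old = {
    "input_features": [{"name": "age", "type": "numeric", "fc_size": 64}],
    "output_features": [{"name": "label", "type": "binary"}],
    "training": {"epochs": 10},
}
print(upgrade_config(old))
```

Because `_rename` builds new dicts and lists, the original config is left unmodified.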

Finally, we’ve dropped support for Python 3.6. Please use Python 3.7 going forward.

Stay in the loop

Ludwig’s thriving open source community gathers on Slack; join it to get involved!

If you are interested in adopting Ludwig in the enterprise, check out Predibase, the declarative ML platform that connects with your data, manages the training, iteration, and deployment of your models, and makes them available for querying, reducing the time to value of machine learning projects.

Don’t want to miss future updates? Join this email list (you can unsubscribe anytime).

We want to thank all the collaborators who helped us, including the Ray team, the PyTorch team, the AimStack team, and all the Ludwig contributors, in particular the new contributors:

- @vreyespue made their first contribution in #1213

- @Yard1 made their first contribution in #1277

- @EnricoMi made their first contribution in #1442

- @q0w made their first contribution in #1512

- @kriziacicchetti made their first contribution in #1525

- @RebSolcia made their first contribution in #1526

- @noyoshi made their first contribution in #1540

- @louixs made their first contribution in #1552

- @dantreiman made their first contribution in #1576

- @connor-mccorm made their first contribution in #1699

- @hfurkanbozkurt made their first contribution in #1734

- @brightsparc made their first contribution in #1830

- @tirkarthi made their first contribution in #1838

- @jeffreykennethli made their first contribution in #1856

- @rk0n made their first contribution in #1864

- @geoffreyangus made their first contribution in #1882

- @jppgks made their first contribution in #1959