TL;DR: Learn best practices and how to build high-speed, cost-effective serving infrastructure for open-source LLMs. This guide covers GPU autoscaling, inference throughput enhancements with Turbo LoRA, and efficient model deployment via multi-LoRA serving. It also provides insight into Predibase's next-gen inference engine.

The race to production AI isn’t just about model quality—it’s about delivery.

Enterprises today are realizing that even the best-performing LLMs are useless without fast, scalable, and cost-efficient serving infrastructure.

The growing popularity of small open-source language models (SLMs) has reduced reliance on expensive, high-end GPUs, making it easier to achieve low latency without massive investments. And, thanks to fine-tuning, it's now possible to reach GPT-4o-level accuracy on your specialized tasks with models that are 10-100x smaller than large frontier models. However, unlocking your AI stack's full potential involves more than deploying the latest SLM.

AI teams need to address three key inference challenges when serving LLMs in production:

- Scaling efficiently without overprovisioning GPUs

- Maximizing throughput on existing infrastructure

- Increasing serving capacity without incurring massive costs

In The Definitive Guide to Serving Open Source Models, we break down these challenges in detail, discuss the techniques for overcoming them, and share how we’ve built an inference stack that delivers speed and reliability while maintaining cost efficiency. In this post, we provide an abridged version of the key challenges and how we address them with Predibase’s intelligent serving infrastructure. To get all the details and best practices, make sure to download your free copy of the LLM serving guide.

The Challenge of Provisioning GPUs in a Dynamic Environment

Managing spiky, unpredictable production traffic is one of the toughest challenges when trying to build an efficient LLM serving infrastructure. Customer-facing AI applications often experience uneven traffic patterns—with sharp spikes during peak hours and dips during off-peak times. Unlike traditional web services, scaling LLM workloads dynamically is far more complex due to:

- Limited GPU availability, especially for high-demand GPUs like the H100

- Inflexible node configurations imposed by GPU providers (e.g., 8-GPU minimum per node)

- Slow cold starts caused by large model weights and container images that need to be loaded before serving

This lack of flexibility leads organizations to adopt two main provisioning strategies for GPUs:

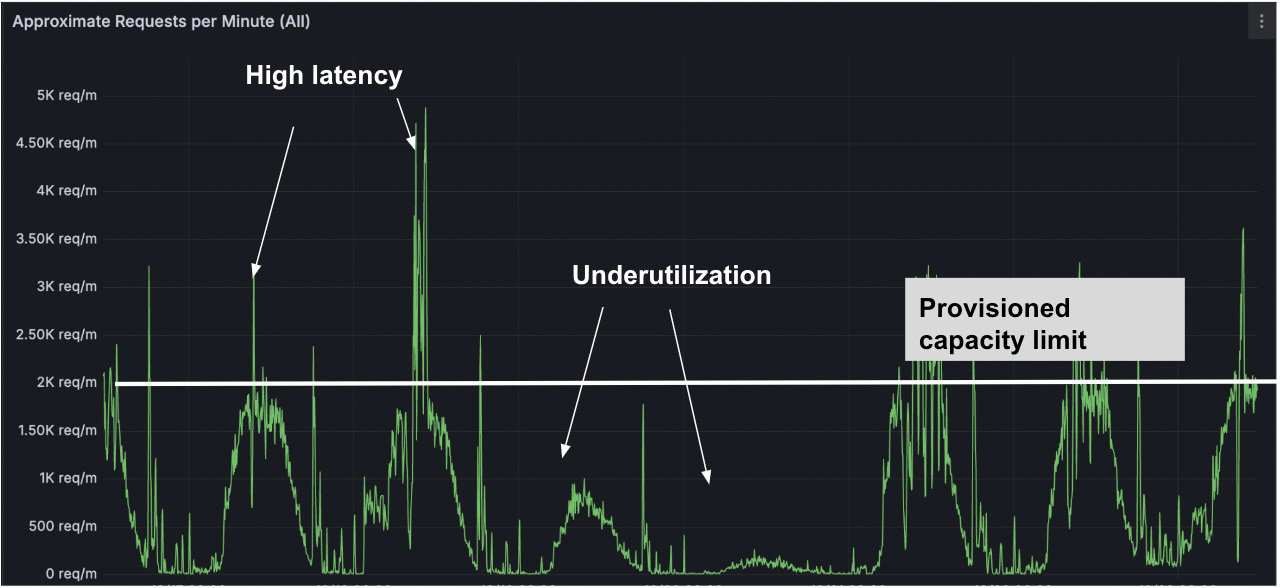

1. Provisioning for average utilization: This reduces costs but may lead to latency issues during peak times.

Provisioning GPUs for average traffic patterns can lead to poor latency during peak loads.

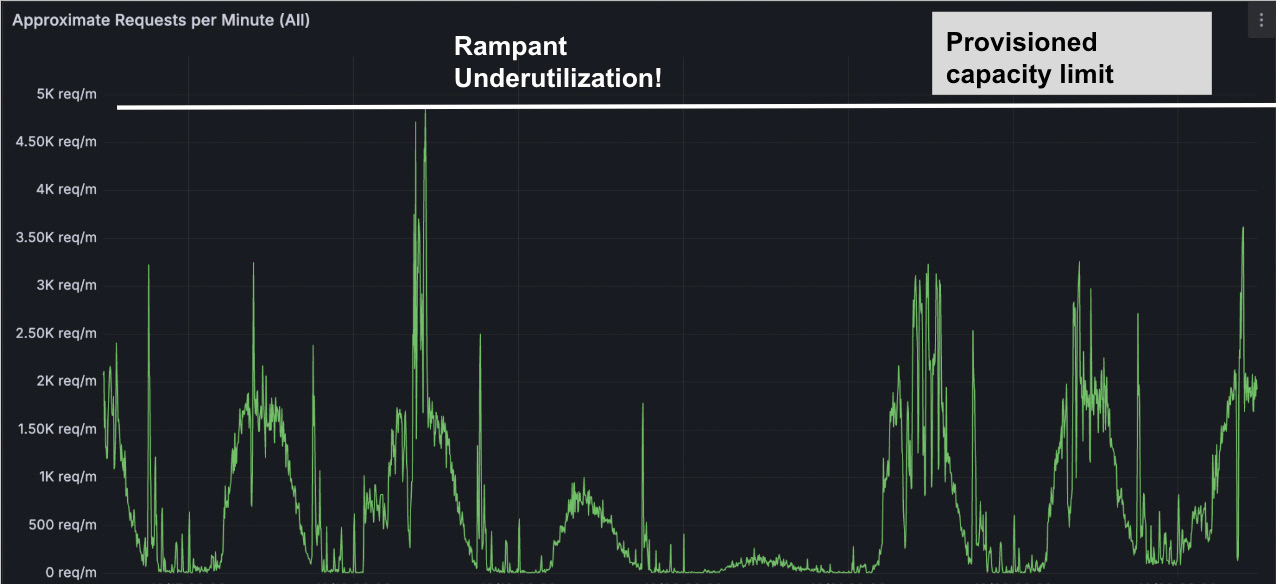

2. Provisioning for maximum capacity: This ensures performance and constant availability, but leaves GPUs idle during low-traffic periods, which adds up to significant waste. This is a hidden cost that businesses often don’t think about when they start using AI.

Provisioning GPUs for maximum traffic leads to costly unused capacity.
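To make the trade-off between these two strategies concrete, here is a small Python calculation over a hypothetical 24-hour demand curve. The numbers are invented for illustration and are not Predibase data. The calculation counts idle GPU-hours, which you pay for but don't use, and unserved GPU-hours, which are load exceeding capacity that shows up as queueing latency.

```python
import math

# Hypothetical hourly demand, in "GPUs' worth" of inference load (illustrative only).
HOURLY_DEMAND = [2, 2, 1, 1, 1, 2, 4, 6, 8, 8, 7, 6, 6, 7, 8, 8, 6, 4, 3, 3, 2, 2, 2, 2]


def evaluate(provisioned: int, demand: list[float]) -> dict:
    """Compare a fixed GPU count against a demand curve."""
    idle = sum(max(provisioned - d, 0) for d in demand)       # paid-for but unused GPU-hours
    shortfall = sum(max(d - provisioned, 0) for d in demand)  # load that queues (latency risk)
    hours_over = sum(1 for d in demand if d > provisioned)    # hours where demand exceeds capacity
    return {
        "gpus": provisioned,
        "idle_gpu_hours": idle,
        "unserved_gpu_hours": shortfall,
        "hours_over_capacity": hours_over,
    }


average = math.ceil(sum(HOURLY_DEMAND) / len(HOURLY_DEMAND))  # size for the average
peak = max(HOURLY_DEMAND)                                     # size for the peak

for label, gpus in [("average", average), ("peak", peak)]:
    print(label, evaluate(gpus, HOURLY_DEMAND))
```

With this made-up profile, sizing for the average (5 GPUs) leaves 20 GPU-hours of demand queued across 10 busy hours. Sizing for the peak (8 GPUs) removes the shortfall but pays for 91 idle GPU-hours. Autoscaling tries to track the curve instead of committing to either constant.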

These factors make it challenging for organizations to implement efficient, cost-effective inference for production AI.

Production-Grade Infra with Smart Autoscaling Optimized for Throughput

To address these challenges, we’ve implemented several key strategies in our serving stack that enable teams to cost-efficiently accelerate inference without stockpiling mountains of GPUs.

1. Smart Autoscaling with Minimal Cold Start Times

GPU autoscaling is a critical feature for managing AI workloads, ensuring that resources are dynamically adjusted based on real-time demand. Predibase implements an intelligent, unified GPU autoscaling system that manages both real-time inference and batch jobs. During peak traffic, the system can preempt lower-priority batch jobs (like training jobs) to reallocate GPUs to higher-priority inference jobs, ensuring rapid responsiveness when demand surges. We also keep GPUs on hand, so pulling additional GPU resources only takes seconds. This efficient resource management translates into major cost savings without sacrificing speed.
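The post doesn't publish Predibase's scheduler. The following is a minimal sketch of the two ideas in the paragraph above: sizing replicas from real-time load, and preempting lower-priority batch jobs when an inference deployment needs GPUs. `Job`, `Cluster`, `desired_replicas`, and `scale_inference` are hypothetical names, and the sketch assumes per-replica capacity is already known from benchmarking.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Job:
    name: str
    kind: str  # "inference" (high priority) or "batch" (preemptible, e.g. training)
    gpus: int


@dataclass
class Cluster:
    total_gpus: int
    jobs: list[Job] = field(default_factory=list)

    def free(self) -> int:
        return self.total_gpus - sum(j.gpus for j in self.jobs)


def desired_replicas(requests_per_s: float, capacity_per_replica: float,
                     min_replicas: int = 0, max_replicas: int = 16) -> int:
    """Enough replicas to absorb the current load, clamped to configured bounds."""
    needed = math.ceil(requests_per_s / capacity_per_replica) if requests_per_s > 0 else 0
    return max(min_replicas, min(max_replicas, needed))


def scale_inference(cluster: Cluster, deployment: str, target: int,
                    gpus_per_replica: int = 1) -> list[str]:
    """Grow a deployment to `target` replicas, preempting batch jobs if GPUs run out.

    Returns the names of preempted batch jobs. Scale-down is omitted from this sketch.
    """
    current = sum(1 for j in cluster.jobs
                  if j.kind == "inference" and j.name.startswith(f"{deployment}-"))
    preempted = []
    for i in range(current, target):
        # Evict batch jobs, smallest first, until the new replica fits.
        batch = sorted((j for j in cluster.jobs if j.kind == "batch"), key=lambda j: j.gpus)
        while cluster.free() < gpus_per_replica and batch:
            victim = batch.pop(0)
            cluster.jobs.remove(victim)
            preempted.append(victim.name)
        if cluster.free() < gpus_per_replica:
            break  # nothing left to preempt: would need to request more GPUs instead
        cluster.jobs.append(Job(f"{deployment}-{i}", "inference", gpus_per_replica))
    return preempted


cluster = Cluster(total_gpus=8, jobs=[
    Job("llm-0", "inference", 1),
    Job("train-a", "batch", 4),
    Job("train-b", "batch", 3),
])
target = desired_replicas(requests_per_s=350, capacity_per_replica=100)
preempted = scale_inference(cluster, "llm", target)
print(target, preempted, cluster.free())
```

Evicting the smallest batch job first is just one possible policy. A production system would also checkpoint preempted training jobs so they can resume later.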

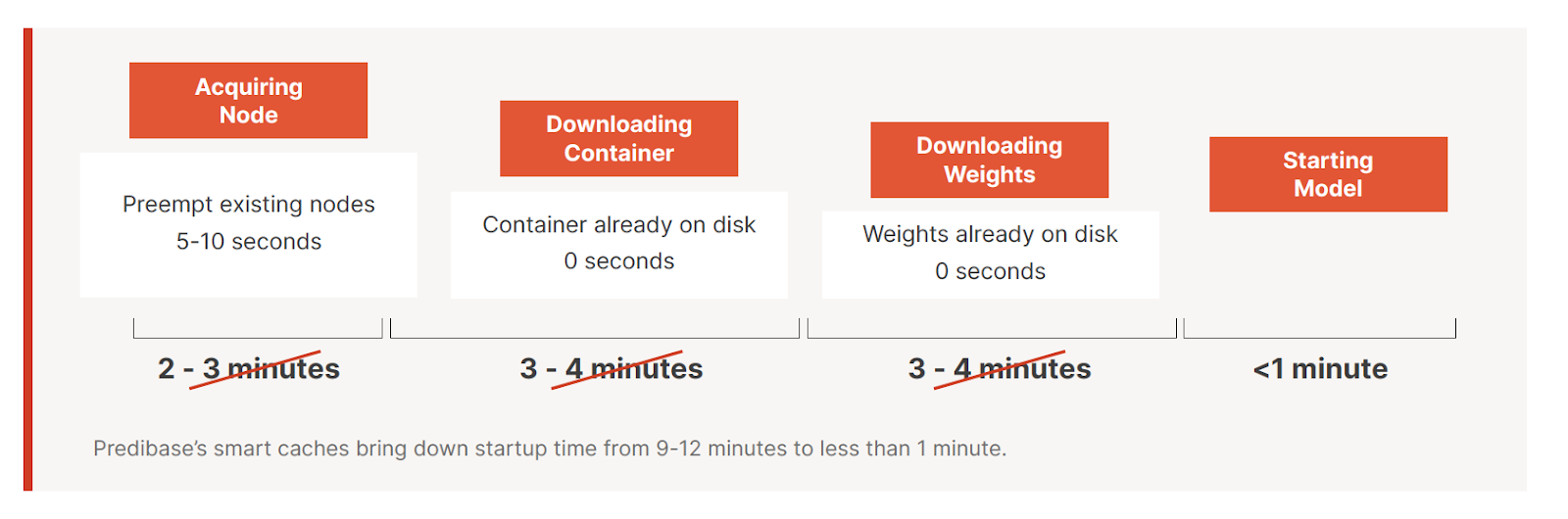

One of the most significant pain points in GPU autoscaling is the cold start delay. Launching a new LLM instance can take 10–14 minutes due to:

- Large model weights (often tens of GBs)

- Heavy container images (8–10 GB)

- Initialization and load times

Through a series of optimizations, we’ve been able to cut this time to under 1 minute for most enterprise replicas by using:

- Smart caching strategies that keep model weights and containers warm

- Dedicated caches for enterprise users on their own instances

- A cache manager that proactively ensures readiness
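The idea behind a proactive cache manager fits in a few lines: pull artifacts when a deployment is created, so a later scale-up never waits on a download. `CacheManager` and its `fetch` callback are hypothetical. In practice, `fetch` would wrap an object-storage download or a container image pull.

```python
from typing import Callable


class CacheManager:
    """Hypothetical cache manager that keeps model weights and images warm on a node."""

    def __init__(self, fetch: Callable[[str], bytes]):
        self._fetch = fetch              # assumed: pulls an artifact from remote storage
        self._cache: dict[str, bytes] = {}

    def ensure_ready(self, artifacts: list[str]) -> list[str]:
        """Fetch any artifact not already cached; return the ones that had to be fetched."""
        fetched = []
        for name in artifacts:
            if name not in self._cache:
                self._cache[name] = self._fetch(name)
                fetched.append(name)
        return fetched

    def is_warm(self, name: str) -> bool:
        return name in self._cache


downloads = []


def fake_fetch(name: str) -> bytes:
    downloads.append(name)  # record each slow, cold pull
    return b"..."


manager = CacheManager(fake_fetch)
# Run ahead of time (e.g. when a deployment is created), not when traffic arrives.
manager.ensure_ready(["base-model-weights", "serving-image"])
# A later scale-up finds everything warm, so nothing is downloaded on the critical path.
second = manager.ensure_ready(["base-model-weights", "serving-image"])
```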

The combination of smart GPU autoscaling with sub-1-minute cold start times means LLMs can be reallocated and start serving at scale within about a minute, even during traffic spikes, without the need to overprovision GPUs.

Smart GPU autoscaling and cold start time reduction for LLM inference with Predibase infrastructure provide massive inference gains.

2. Maximizing Inference Performance on Existing Infrastructure

Serving models at scale isn’t just about managing costs; it’s also about speed. Latency directly impacts the end-user experience, as slow response times lead to frustrated users and less engagement. However, improving throughput typically means sacrificing quality or throwing additional costly hardware at the problem.

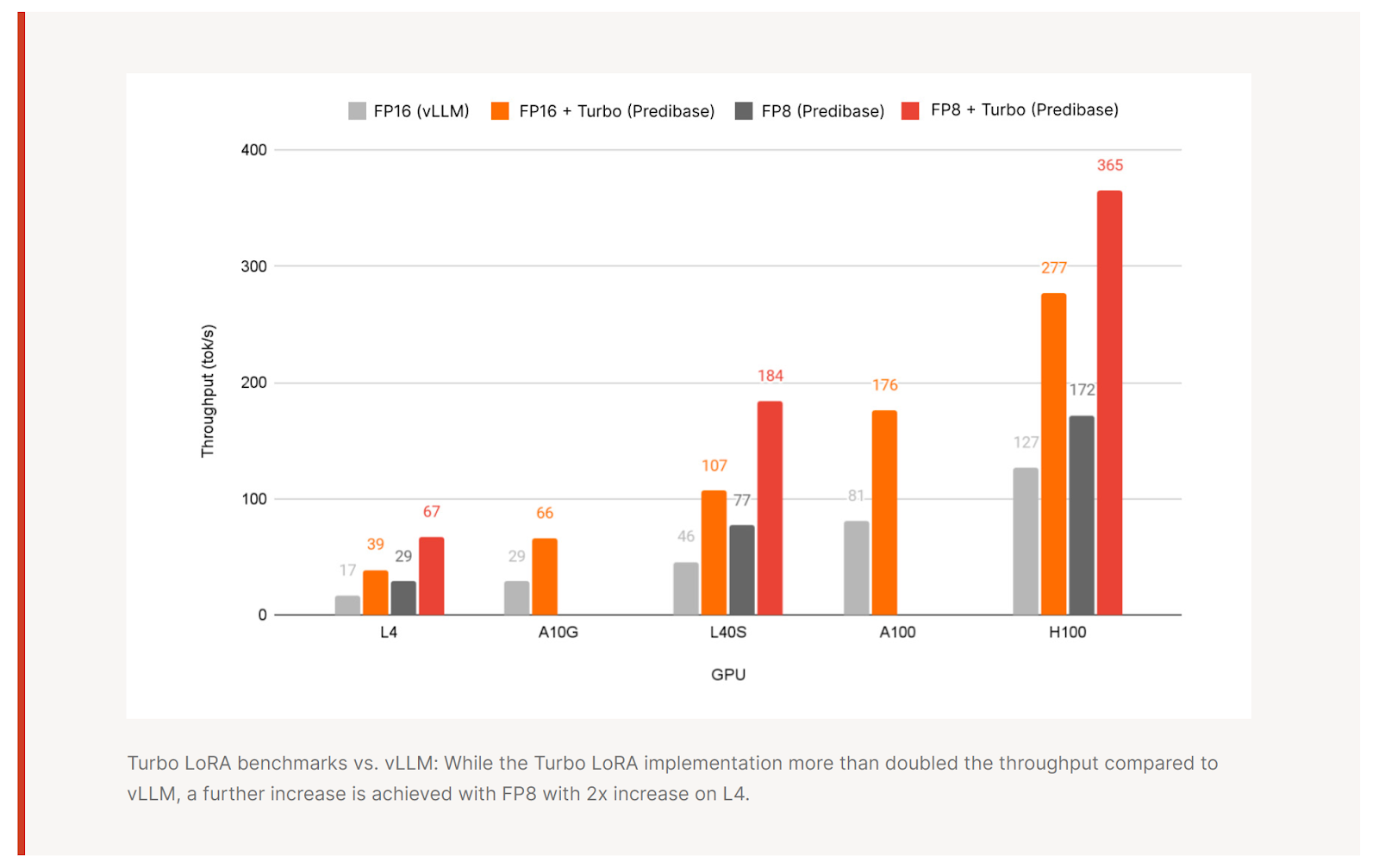

At Predibase, we’re tackling this problem head-on with Turbo LoRA, an approach that breaks the trade-off between throughput and cost. By combining speculative decoding with LoRA fine-tuning in a parameter-efficient way, Turbo LoRA generates multiple tokens per step without bloating memory or sacrificing precision. Unlike alternatives such as Medusa, Turbo LoRA’s lightweight adapters (just megabytes in size) make it well suited to both small- and large-batch serving.
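The post does not detail how Turbo LoRA produces its draft tokens. The sketch below therefore shows only the generic greedy draft-and-verify loop that speculative decoding is built on, with two toy functions standing in for the draft and target models. With greedy verification, the output is identical to what the target model would produce on its own. The speedup comes from accepting several tokens per target step.

```python
from typing import Callable, Sequence

NextToken = Callable[[Sequence[int]], int]  # greedy "model": context -> next token id


def speculative_decode(target: NextToken, draft: NextToken, prompt: list[int],
                       max_new_tokens: int, k: int = 4) -> tuple[list[int], int]:
    """Greedy speculative decoding. Returns (tokens, number of target verification steps)."""
    tokens = list(prompt)
    target_steps = 0
    while len(tokens) - len(prompt) < max_new_tokens:
        # 1. The cheap draft proposes k tokens autoregressively.
        proposal = []
        for _ in range(k):
            proposal.append(draft(tokens + proposal))
        # 2. The target verifies them. A real engine scores all k positions in ONE batched
        #    forward pass; here we call it per position but count it as a single step.
        target_steps += 1
        accepted = []
        for tok in proposal:
            expected = target(tokens + accepted)
            if tok != expected:
                accepted.append(expected)  # replace the first wrong guess with the target's token
                break
            accepted.append(tok)
        else:
            accepted.append(target(tokens + accepted))  # all k accepted: one bonus token
        tokens.extend(accepted)
    return tokens[: len(prompt) + max_new_tokens], target_steps


def target_model(ctx: Sequence[int]) -> int:
    return (ctx[-1] + 1) % 10


def draft_model(ctx: Sequence[int]) -> int:
    # Agrees with the target except right after token 5.
    return 0 if ctx[-1] == 5 else (ctx[-1] + 1) % 10


out, steps = speculative_decode(target_model, draft_model, [0], max_new_tokens=8, k=4)
```

Here the draft agrees with the target except after token 5, so 8 new tokens take 3 target steps instead of 8. Sampling-based decoding needs a rejection-sampling acceptance rule in place of the exact-match check used here.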

Paired with our FP8 quantization, the performance lift becomes dramatic:

- 50% memory savings over FP16

- Up to 4x throughput gains

- Maintained or improved output quality, even at scale
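The 50% figure follows directly from bytes per parameter: FP16 stores each weight in 2 bytes and FP8 stores it in 1. Below is a quick calculation for a hypothetical 8B-parameter model. It counts weights only; KV cache, activations, and runtime overhead come on top.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for model weights alone (ignores KV cache, activations, runtime overhead)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


params = 8e9  # an 8B-parameter model, chosen only for illustration
fp16 = weight_memory_gb(params, "fp16")
fp8 = weight_memory_gb(params, "fp8")
print(f"FP16: {fp16:.0f} GB, FP8: {fp8:.0f} GB, saving {1 - fp8 / fp16:.0%}")
```

Memory freed this way can typically go toward a larger KV cache and bigger batches, which is one route from quantization to higher throughput.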

In head-to-head benchmarks, Turbo LoRA + FP8 outperformed vLLM and other inference frameworks across GPU types and batch sizes—while using fewer resources so you get the maximum bang for your GPU buck.

Serving benchmarks comparing Predibase (with Turbo LoRA and FP8) against vLLM. These optimizations provide large inference throughput gains and lower latency across different GPU configurations.

3. Fitting More Models on a Single GPU with Multi-LoRA Serving

One of the most overlooked costs in AI inference is running a dedicated GPU for every model variant. For enterprises deploying multiple fine-tuned models across customers, languages, or domains, this quickly becomes unsustainable.

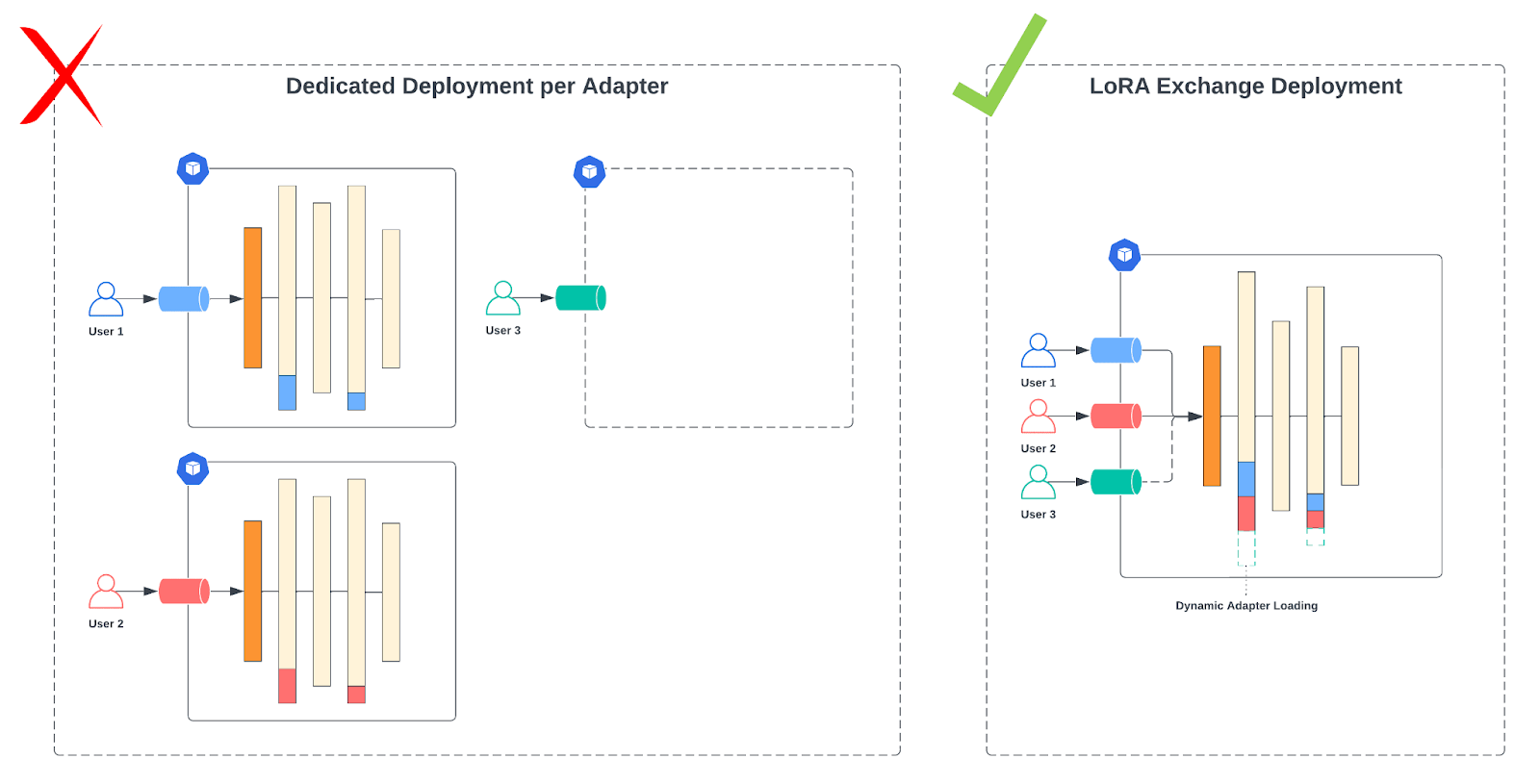

Enter LoRA Exchange (LoRAX), Predibase’s open-source multi-LoRA serving system designed to:

- Dynamically load and unload fine-tuned adapters on demand

- Batch requests across different adapters, maximizing GPU utilization

- Cache model weights at the GPU, CPU, and disk level for lightning-fast access
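The GPU/CPU/disk caching described above can be pictured as a tiered LRU cache. Hot adapters live on the GPU, recently evicted ones are demoted to CPU memory, and everything else is read from disk. The post does not specify LoRAX's eviction policy. The LRU policy, the slot-based capacities, and the `TieredAdapterCache` class are all assumptions of this sketch.

```python
from collections import OrderedDict


class TieredAdapterCache:
    """Hypothetical three-tier LRU cache for LoRA adapters: GPU -> CPU -> disk.

    Capacities are counted in adapters for simplicity; a real server would track bytes.
    """

    def __init__(self, gpu_slots: int, cpu_slots: int, disk: dict[str, bytes]):
        self.gpu = OrderedDict()  # adapter_id -> weights, least recently used first
        self.cpu = OrderedDict()
        self.gpu_slots, self.cpu_slots = gpu_slots, cpu_slots
        self.disk = disk          # stands in for local disk / remote storage

    def get(self, adapter_id: str) -> tuple[bytes, str]:
        """Return (weights, tier served from) and promote the adapter to the GPU tier."""
        if adapter_id in self.gpu:
            self.gpu.move_to_end(adapter_id)  # mark as most recently used
            return self.gpu[adapter_id], "gpu"
        if adapter_id in self.cpu:
            weights, source = self.cpu.pop(adapter_id), "cpu"
        else:
            weights, source = self.disk[adapter_id], "disk"  # slowest path
        self._put_gpu(adapter_id, weights)
        return weights, source

    def _put_gpu(self, adapter_id: str, weights: bytes) -> None:
        self.gpu[adapter_id] = weights
        if len(self.gpu) > self.gpu_slots:
            evicted_id, evicted = self.gpu.popitem(last=False)  # least recently used
            self.cpu[evicted_id] = evicted                       # demote instead of dropping
            if len(self.cpu) > self.cpu_slots:
                self.cpu.popitem(last=False)                     # now only on disk


cache = TieredAdapterCache(gpu_slots=2, cpu_slots=2,
                           disk={f"adapter-{i}": b"w" for i in range(5)})
requests = ["adapter-0", "adapter-1", "adapter-0", "adapter-2", "adapter-1"]
tiers = [cache.get(a)[1] for a in requests]
```

Demoting to CPU rather than discarding means a recently used adapter comes back from host memory instead of disk, as `adapter-1` does on its second request.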

With these innovations, LoRAX enables you to serve hundreds of fine-tuned models from a single GPU, with near-instant response times and minimal overhead.

Architecture diagram of multi-LoRA serving on a single GPU using Predibase’s open-source LoRA Exchange (LoRAX) server. Serve multiple fine-tuned LLMs on one base model with dynamic swapping.

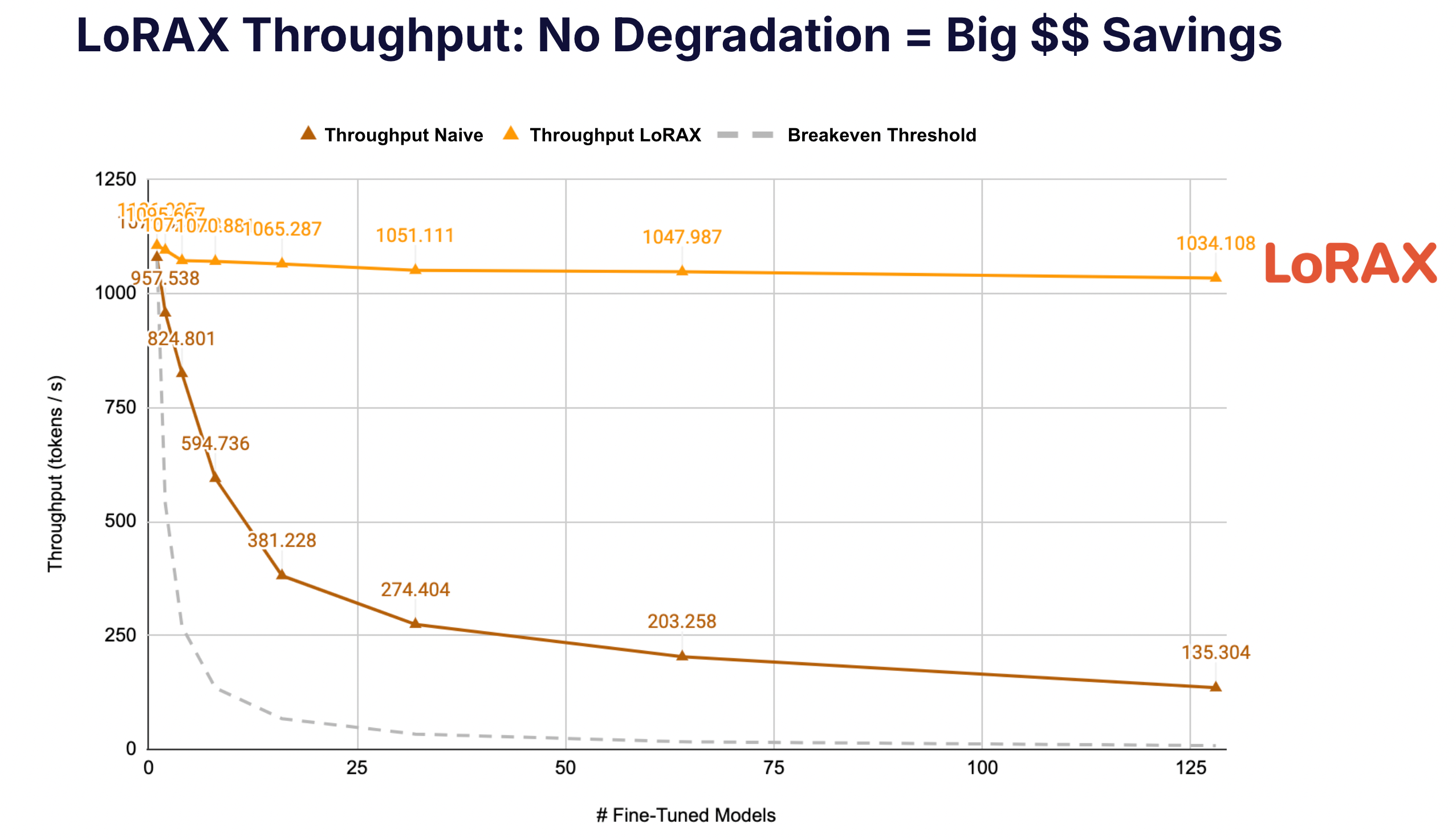

LoRAX really shines by sustaining high throughput even when dozens of adapters are hot-swapped onto a single base model. The benchmark shows that serving multiple LoRA adapters introduces almost no performance degradation.

Benchmark of LoRAX with an increasing number of adapters shows almost no degradation in serving performance, even at massive scale.

Take Convirza, a leading call center analytics and software company. Using Predibase’s Inference Engine with LoRA Exchange, they served 60+ fine-tuned models concurrently, maintained sub-2-second latency even during traffic spikes, and reduced inference costs by 10x compared to OpenAI.

Get the Full Inference Guide: Diagrams, Benchmarks, and Deployment Tactics

This post is just the highlight reel. In the full Definitive Guide to Serving Open Source Models, you’ll get:

- A deep dive into cost optimization techniques like adapter batching and autoscaling

- Detailed architecture diagrams of Predibase’s multi-cloud inference stack, including support for VPC deployments

- Additional performance and cost savings benchmarks and comparisons

- Real-world success stories from enterprise AI teams

Download the full guide here to start building the inference engine your models deserve.

Try Predibase for free with $25 in credits or schedule time with one of our LLM experts to discuss your specific use case and inference needs.

FAQ: Frequently Asked Questions about AI and LLM Inference

What is LLM inference in machine learning and how does it differ from training?

LLM inference is the process of using a trained language model to generate predictions or responses from an input. Unlike training, which adjusts model weights based on data, inference runs the model with its weights fixed to produce outputs. This makes speed and efficiency critical for real-time applications.

How can I reduce LLM inference latency?

To reduce your AI inference latency, you can implement strategies like GPU autoscaling, model quantization (e.g., FP8), smart caching, and speculative decoding. These approaches optimize compute utilization, reduce cold start times, and boost throughput with little or no loss in output quality.

What is speculative decoding in LLMs?

Speculative decoding is a technique that speeds up inference by cheaply proposing several candidate tokens and then having the main model verify them in a single forward pass. Proposed tokens that the main model agrees with are accepted, which reduces the number of sequential steps required and significantly improves token generation speed. Predibase offers an optimized, more performant version of speculative decoding called Turbo LoRA.

Should I choose open-source or proprietary LLMs for inference?

Open-source LLMs offer flexibility, cost savings, and greater control over deployment. Proprietary models like GPT-4 may offer higher out-of-the-box accuracy, but often come with usage restrictions, higher costs, and limited customization. OSS models like Meta's Llama series, Alibaba's Qwen3, and DeepSeek-R1 have shown performance comparable to commercial models with a significantly reduced footprint. Once fine-tuned, these smaller open-source models can outperform commercial models on the specific tasks they were tuned for. Check out the fine-tuning leaderboard to see open-source model benchmarks against GPT-4. The right choice depends on your performance needs and infrastructure goals.

What are the top ways to reduce AI inference costs and improve throughput?

There are numerous ways to reduce your LLM serving cost. A few key cost-reduction strategies include:

- Quantization (e.g., FP8 or INT4) to reduce memory use

- Adapter-based fine-tuning instead of full model retraining

- Batching to process multiple requests in parallel

- Pruning to shrink model size without significant accuracy loss

- Smart autoscaling to avoid overprovisioning GPUs

- Multi-LoRA serving to run many variants from a single base model

What is multi-LoRA serving and how does it help?

Multi-LoRA serving allows many LoRA adapters to be loaded onto a single base model. Rather than deploying separate models for every variation, you can dynamically load fine-tuned adapters per request, significantly reducing GPU overhead and boosting utilization. Read about faster LLM inference with Turbo LoRA.
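Mathematically, each LoRA adapter adds a low-rank update to a frozen weight matrix. Requests for different adapters can therefore share the base computation and differ only in a small extra term, y = xW + scale * (xA)B. The sketch below shows that math for a single linear layer over a mixed batch in plain Python. `multi_lora_forward` is a hypothetical name, and a real server would use batched GPU kernels rather than a per-request loop.

```python
from typing import Optional

Matrix = list[list[float]]


def matvec(x: list[float], m: Matrix) -> list[float]:
    """Row vector x (length n) times matrix m (n x p)."""
    return [sum(x[i] * m[i][j] for i in range(len(x))) for j in range(len(m[0]))]


def multi_lora_forward(batch: list[tuple[list[float], Optional[str]]], base_w: Matrix,
                       adapters: dict[str, tuple[Matrix, Matrix]],
                       scale: float = 1.0) -> list[list[float]]:
    """One linear layer for a mixed batch: all requests share base_w, and each request
    adds the low-rank update x @ A @ B of its own adapter (or none)."""
    outputs = []
    for x, adapter_id in batch:
        y = matvec(x, base_w)                  # shared base-model computation
        if adapter_id is not None:
            a, b = adapters[adapter_id]        # A: d x r, B: r x p, with small rank r
            delta = matvec(matvec(x, a), b)
            y = [yi + scale * di for yi, di in zip(y, delta)]
        outputs.append(y)
    return outputs


base = [[1.0, 0.0], [0.0, 1.0]]                    # toy 2x2 base weight
adapters = {
    "sql": ([[1.0], [0.0]], [[0.0, 2.0]]),         # rank-1 adapter (A, B)
    "support": ([[0.0], [1.0]], [[1.0, 0.0]]),
}
batch = [([1.0, 2.0], "sql"), ([1.0, 2.0], "support"), ([1.0, 2.0], None)]
out = multi_lora_forward(batch, base, adapters)
```

Only the small A and B matrices differ per adapter, which is why many adapters can share one copy of the base weights on a single GPU.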

How does multi-LoRA serving help reduce inference costs?

By enabling dozens or even hundreds of model variants to run on a single GPU, multi-LoRA serving eliminates the need to dedicate one GPU per model. This drastically lowers hardware requirements, leading to major cost savings—especially for multi-tenant or multilingual applications.

How do I deploy LLMs in a VPC or privacy-first environment?

To deploy LLMs securely, you can use platforms like Predibase that support deployment in your Virtual Private Cloud (VPC). This ensures compliance with data privacy policies, keeps sensitive data local, and allows full control over inference pipelines and model access.