GenAI is changing the game, but most companies sabotage themselves before they even get their first model into production. They pour thousands of hours and millions of dollars into homegrown infrastructure that crumbles under real-world pressure. GPU shortages derail projects. Engineering teams drown in maintenance instead of innovating. Meanwhile, the competition is shipping features faster, cheaper, and smarter.

This isn't hypothetical; it's reality. Gartner predicts that by the end of 2025, around 30% of generative AI projects won't make it beyond the proof-of-concept (POC) phase.

By the end of 2025, around 30% of generative AI projects will be abandoned after proof of concept due to issues. – Gartner

If you're debating whether to build or buy your GenAI infrastructure, you're at a critical crossroads. One path leads to innovation; the other leads to expensive regret.

The "Full Control" Myth: Why DIY GenAI is a Trap

You've probably heard this before: “Build your own AI infrastructure and get complete control over your models, GPUs, and costs.” This sounds great until reality hits.

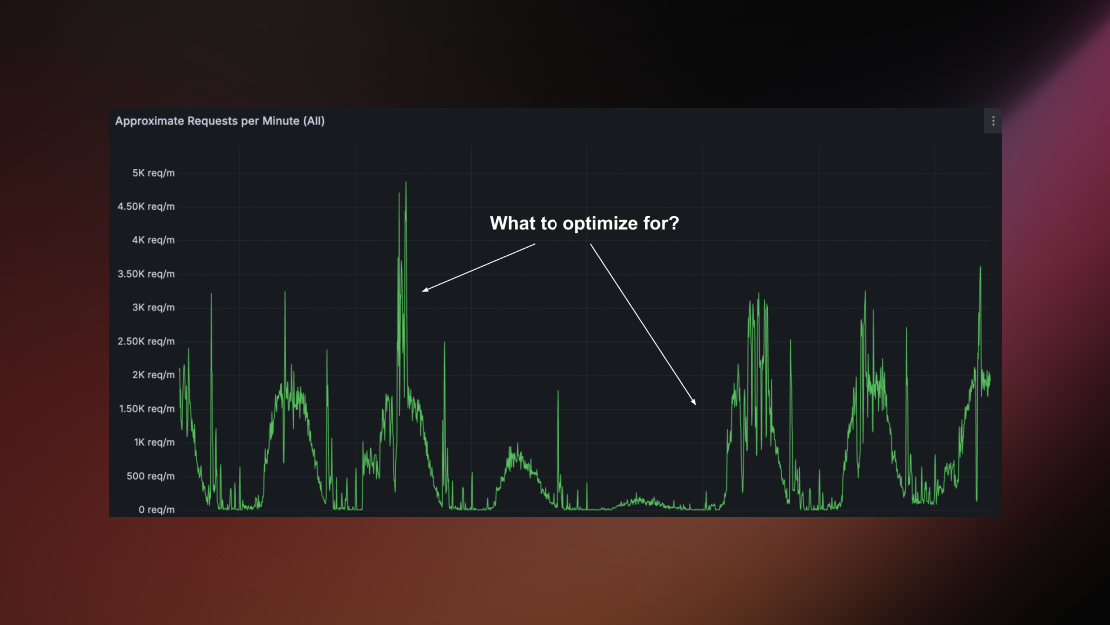

Building GenAI infra from scratch isn't as simple as cobbling together the latest open-source frameworks and writing some glue code. It's optimizing scarce GPU resources, adjusting for volatile real-world traffic patterns, building continuous training loops, and addressing the 24/7 firefighting associated with production AI systems. As an example, the diagram below demonstrates the trade-off challenge of optimizing GPUs for real-world spikes and lulls in traffic.

The challenge of optimizing GPUs for real-world traffic patterns.

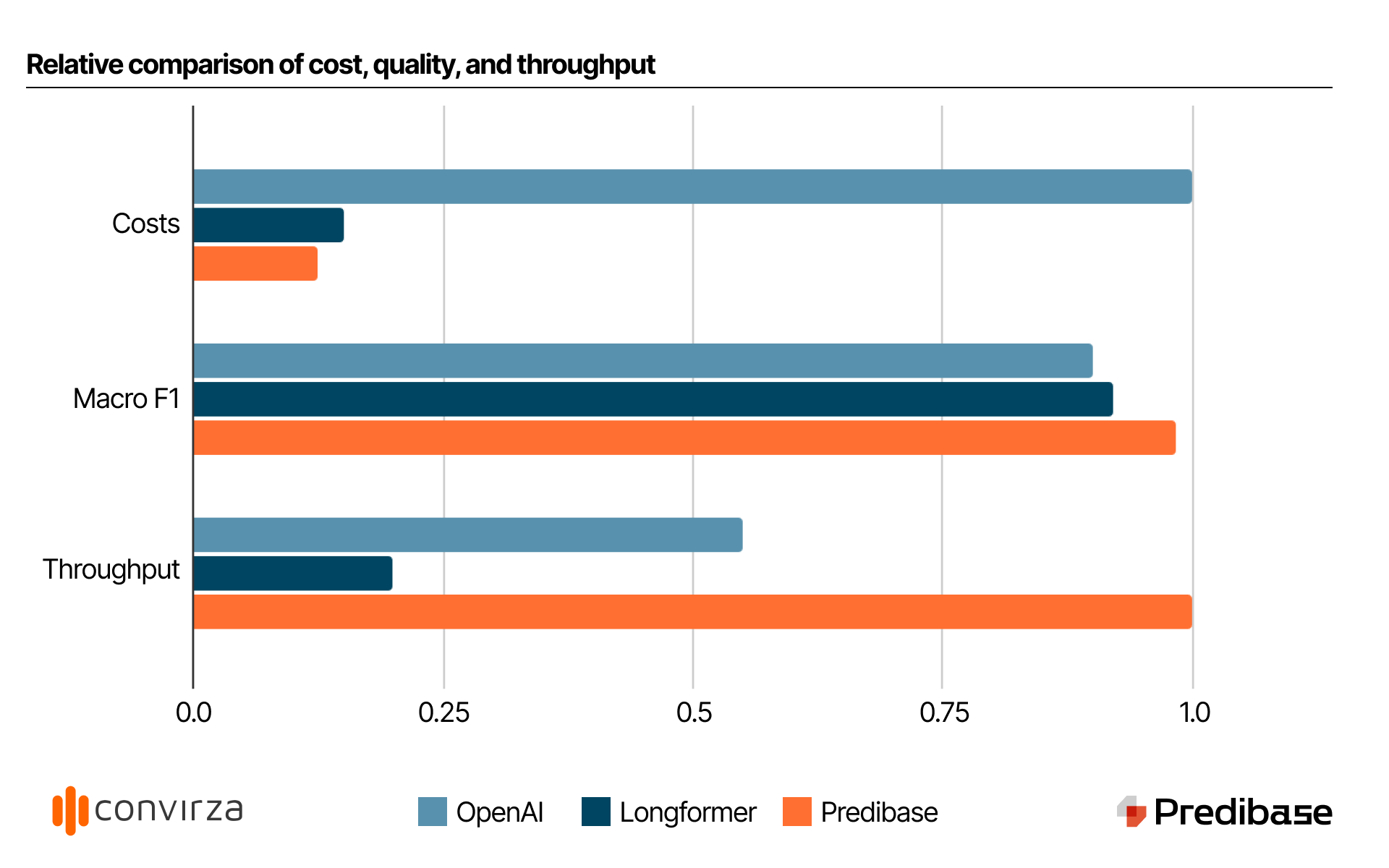
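The provisioning trade-off described above can be made concrete with a small sketch. The traffic profile, the per-GPU throughput, and the fleet sizes below are all hypothetical numbers chosen for illustration, not measurements from any real system. The sketch compares a fixed fleet sized for peak, a fixed fleet sized near the average, and an idealized autoscaler that always matches demand (ignoring cold starts and scale-up lag).

```python
import math


def gpu_hours(traffic_rps, rps_per_gpu, fixed_gpus=None):
    """Return (gpu_hours_provisioned, hours_under_provisioned).

    traffic_rps: one requests-per-second sample per hour.
    fixed_gpus: size of a static fleet, or None for an idealized
                autoscaler that provisions exactly what each hour needs.
    """
    provisioned_total = 0
    hours_short = 0
    for rps in traffic_rps:
        needed = math.ceil(rps / rps_per_gpu)
        provisioned = fixed_gpus if fixed_gpus is not None else needed
        provisioned_total += provisioned
        if provisioned < needed:
            hours_short += 1
    return provisioned_total, hours_short


# Hypothetical day: quiet night, moderate morning, midday spike, moderate evening.
traffic = [20] * 8 + [100] * 4 + [400] * 4 + [100] * 8
RPS_PER_GPU = 50  # assumed throughput of a single GPU replica

sized_for_peak = gpu_hours(traffic, RPS_PER_GPU, fixed_gpus=8)
sized_for_average = gpu_hours(traffic, RPS_PER_GPU, fixed_gpus=3)
ideal_autoscaling = gpu_hours(traffic, RPS_PER_GPU)

print("sized for peak:   ", sized_for_peak)
print("sized for average:", sized_for_average)
print("ideal autoscaling:", ideal_autoscaling)
```

Under these assumptions, the peak-sized fleet never falls short but pays for three times the GPU-hours of the idealized autoscaler, while the average-sized fleet is cheaper but cannot keep up during the spike. Real autoscalers land somewhere in between, because GPUs take time to provision and models take time to load.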

Even AI leaders like Convirza (read their case study or watch their talk) faced the challenge of building their own stack. They started with an ambitious project: customizing dozens of models for a wide range of business-critical use cases in their customer service pipeline, from generating help desk responses to scoring customer call sentiment. As they scaled to production workloads reaching thousands of requests per second, they began to hit performance bottlenecks and soaring costs. The big unlock: adopting a managed inference solution for open-source LLMs.

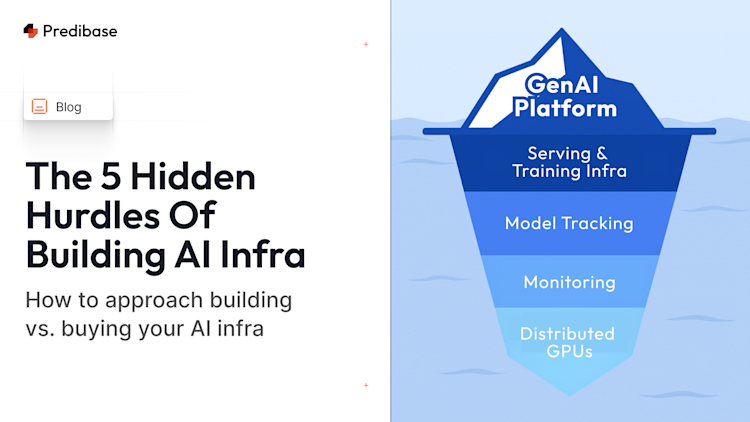

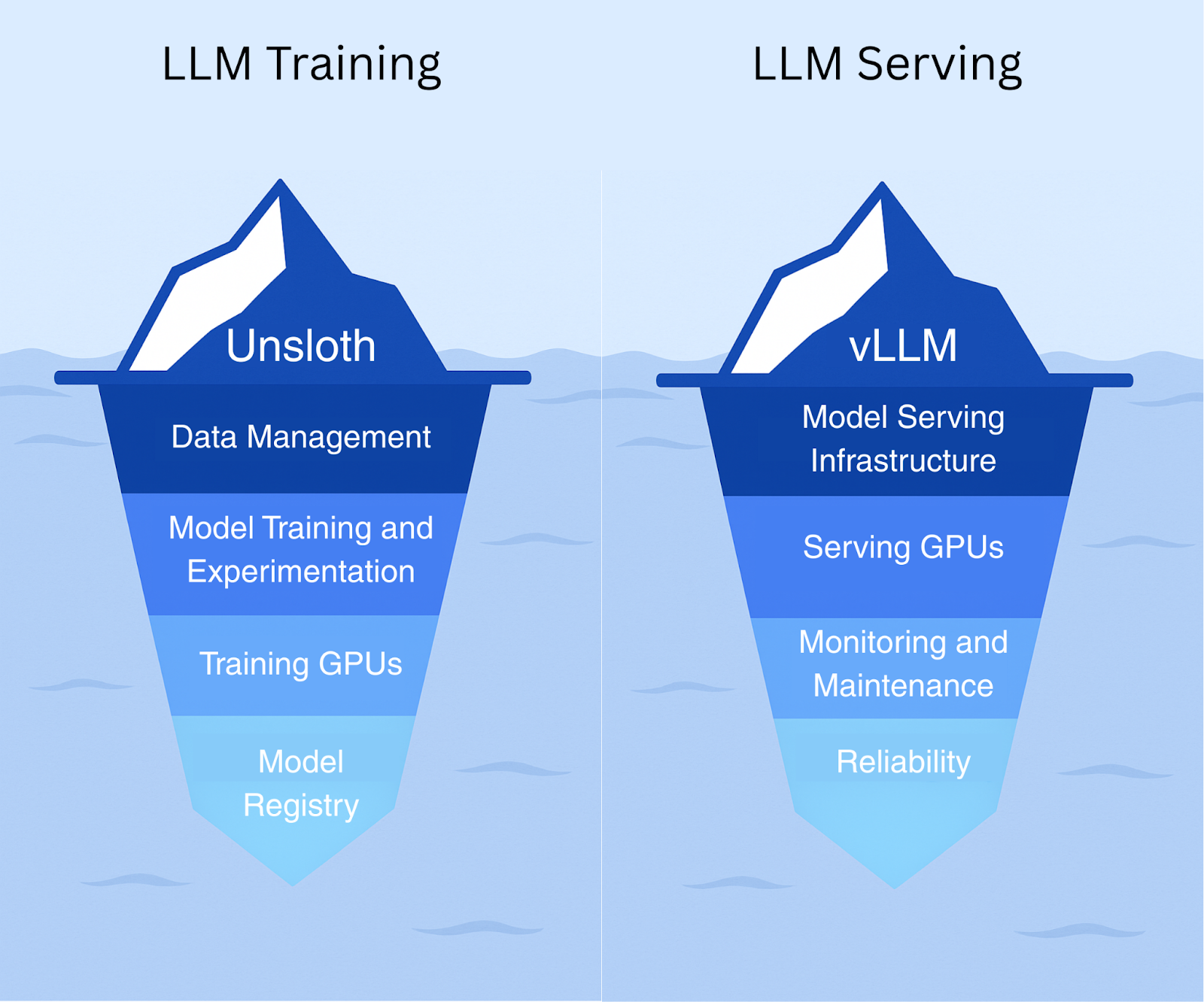

The Iceberg Effect: What's Hidden Will Sink You

Above the surface, it looks simple:

- Open-source models and frameworks

- Quick training and proof-of-concept deployments

Below the waterline are the often-overlooked challenges of serving LLMs:

- Fragile Data Pipelines: Corrupted files, multilingual data, sensitive information leaks

- GPU Hunger Games: Expensive, scarce resources causing massive budget overruns

- Model Drift: Performance silently degrading, causing unreliable outputs

- Maintenance Overload: Engineering teams permanently trapped in troubleshooting

DIY platforms force teams into an 80/20 split: roughly 80% of their time goes to babysitting infrastructure, leaving only 20% for building valuable AI applications.

The hidden "below the surface" challenges and complexities of building your own AI serving and training stack with open-source tools like vLLM and Unsloth.

Managed Platforms are the Cheat Code

Buying isn't about taking shortcuts; it's about being smart. Managed platforms handle the infrastructure mess so your engineers can focus on improving model quality and building innovative GenAI applications.

For example, when Convirza switched from DIY to a managed serving solution:

- Operational costs dropped by 10x,

- Model throughput increased by 80%, and

- Engineering time shifted from troubleshooting to innovation.

The Hidden Costs of DIY GenAI

Think open source is cheap? Think again. Here's a quick overview of platform costs based on our experience working with thousands of AI teams:

| DIY Promise | Actual Cost |
|---|---|
| "Free" fine-tuning | $900K+/year in ML engineer salaries |
| "Cheap" GPU instances | $500K+ in downtime and crashes |
| "Flexible" infra | Millions in lost revenue from delayed launches |

Managed solutions offer predictable pricing, guaranteed performance, and the freedom for your team to focus on your core product, not your servers.

5 Hidden Complexities (That Everyone Underestimates)

From our ebook, Build vs. Managed GenAI Platforms, here’s what catches even smart ML engineers off guard:

1. GPU Scarcity

High-performance GPUs are expensive and hard to get. DIY teams often struggle with availability and with optimizing usage across different applications and traffic patterns, while managed platforms secure and optimize capacity upfront.

2. Monitoring Mayhem

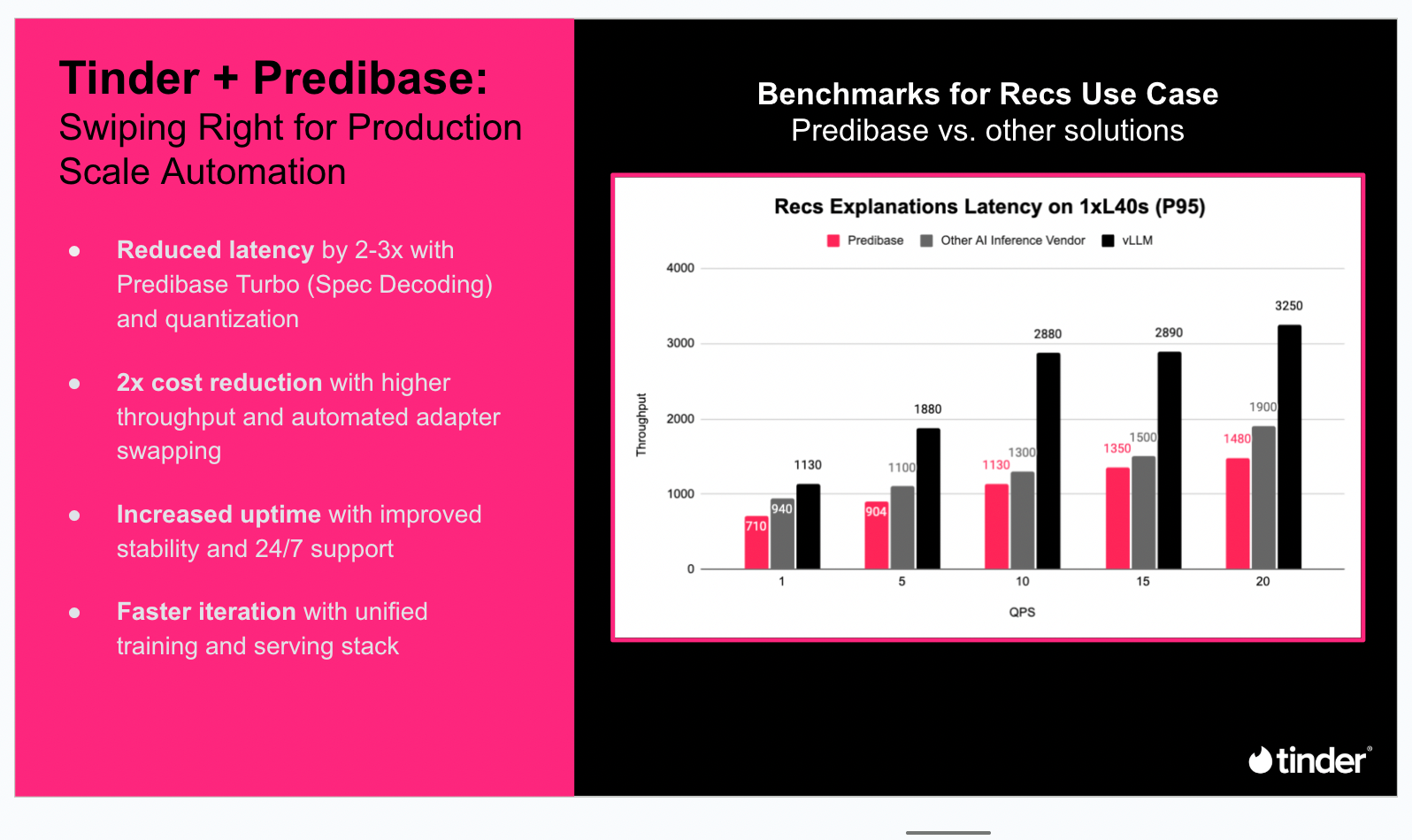

Running your own platform means building your own observability stack: dashboards, alerts, incident response, and on-call rotations. Managed services handle this for you, with real-time alerts and auto-rollback built in. As Tinder recently shared in their talk about their experience using Predibase, "outsourcing your on-call is the real killer feature."

3. Scaling Nightmares

What works for a prototype can collapse in production. DIY systems often hit scaling bottlenecks, while managed platforms scale workloads automatically and reliably. The Tinder benchmarks below show the 2x throughput improvements when going from vLLM to Predibase's managed serving platform.

4. Compliance Headaches

Security and compliance standards like HIPAA, GDPR, and SOC 2 require serious effort. Managed platforms offer built-in controls, audits, and certifications from day one.

5. Talent Shortages

It takes more than one smart engineer. DIY approaches need a full bench: ML, infra, DevOps, security, GPU tuning. Managed platforms reduce that hiring burden dramatically.

In their recent talk at DeployCon, Tinder highlighted significant cost and latency improvements when benchmarking Predibase's managed serving and training stack against open-source vLLM.

The ROI Breakdown

Building a GenAI platform in-house can cost $1M–$1.5M annually, primarily due to engineering salaries, infrastructure setup, and maintenance overhead. In contrast, a managed subscription-based platform typically includes these costs in a predictable, pay-for-what-you-use structure, offering built-in infrastructure, upgrades, SLAs, and reduced downtime.

Key savings come from eliminating the need to hire specialized talent, avoiding unplanned outages, and freeing engineers to focus on core ML tasks rather than infrastructure. Over time, subscription services often deliver better ROI by lowering total cost of ownership and accelerating product development.

| Metric | In-House | Managed Platform |
|---|---|---|
| Cost | $1.5M+/yr | $50K-200K/yr |
| Speed | Months delayed | Deploy in days |
| Engineering | Burnout & maintenance | Innovation & growth |
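To put the cost row in perspective, here is a minimal sketch of the arithmetic. It takes the table's $1.5M/yr figure as the in-house cost (the table says "$1.5M+", so treat it as a lower bound) and the two ends of the $50K-200K/yr managed range. The function and constant names are illustrative only.

```python
IN_HOUSE_ANNUAL = 1_500_000               # lower bound from the table ("$1.5M+/yr")
MANAGED_ANNUAL_RANGE = (50_000, 200_000)  # low and high ends from the table


def savings(in_house, managed):
    """Return (absolute annual savings, cost ratio of in-house to managed)."""
    return in_house - managed, in_house / managed


for managed in MANAGED_ANNUAL_RANGE:
    saved, ratio = savings(IN_HOUSE_ANNUAL, managed)
    print(f"managed at ${managed:,}/yr: saves ${saved:,}/yr ({ratio:.1f}x cheaper)")
```

On the table's own figures, the managed option comes out between 7.5x and 30x cheaper per year. Your own numbers will differ, so it is worth rerunning the comparison with your actual salary, GPU, and subscription costs.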

The math is clear. Unless GenAI infrastructure itself is your product, a managed platform wins every time. Convirza switched to Predibase from a DIY stack running Longformer models, and the cost, latency, and performance improvements were significant (even compared to OpenAI).

Comparing the cost, accuracy, and inference performance (throughput) of Predibase vs. OpenAI vs. building their own infra.

Get Started Today

Your competitors aren't waiting. While you read this, they're deploying new features with managed platforms, shipping faster, and innovating instead of troubleshooting.

Don’t be the company reinventing the wheel while others race ahead.

- Download the Comprehensive Guide to Building vs. Buying GenAI Infra for the full playbook.

- Try Predibase for free with $25 in credits.