This is a guest blog from Kevin Petrie, VP of Research at the Eckerson Group. The blog was originally posted here.

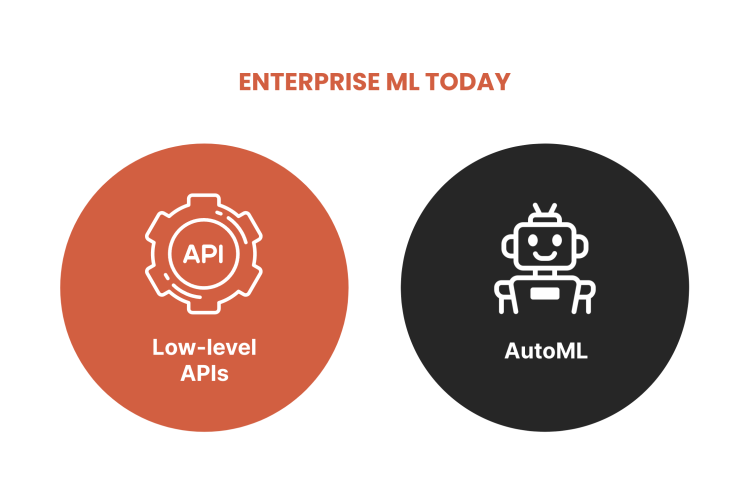

The irony of machine learning (ML) models is that, while they automate business decisions and processes, building them takes a lot of manual effort. Data science teams struggle to handle all the complex programming required to implement ML models in production. Skilled, Python-savvy data scientists and ML engineers might spend months training, tuning, and finally deploying ML models. But if they use AutoML solutions to simplify the model building process, they risk losing visibility and control.

No surprise, then, that three quarters of enterprise respondents to a recent KDnuggets survey said that most of their ML models never make it into production. This is just one of many research findings that indicate a sobering lack of ROI on AI/ML initiatives.

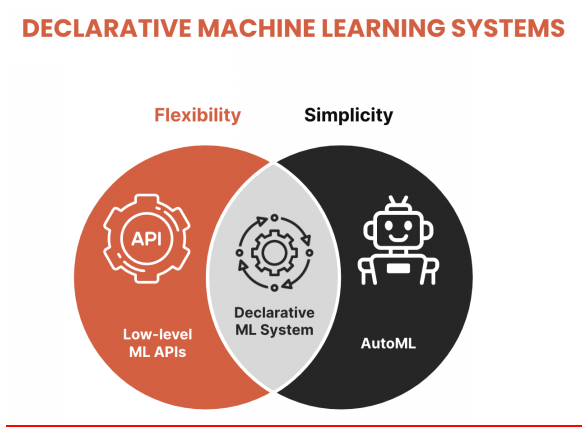

But there is cause for hope. A new approach called Declarative ML, inspired by declarative programming, might help data science teams bring ML models to fruition with less time and effort.

The Value of Declarative Machine Learning (ML)

Declarative ML has data science teams define what they want to predict, classify, or recommend, then lets the software figure out how to do it. They enter intuitive commands without needing to specify the code, rules, or other elements that execute on those commands. If this sounds familiar, it should, because popular languages such as HyperText Markup Language (HTML) and Structured Query Language (SQL) take the same approach. These languages achieved mainstream adoption by enabling less skilled users to define what they want without worrying about how it gets done. More advanced users can still inspect what happens under the covers if they want.

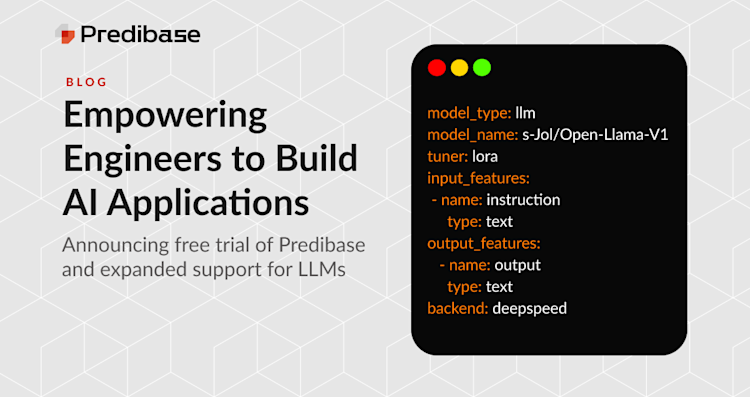

A Declarative ML system trains the ML model(s) on historical data, applying various default features, parameters, and algorithms. Using just a simple configuration file that defines the model and data, Declarative ML allows data scientists and engineers alike to train models without low-level knowledge of frameworks such as PyTorch or TensorFlow. They can accept the default selections or adjust them to meet custom, dynamic requirements, then implement and monitor the models.

This flexible approach supports ML projects that range from the simple to the sophisticated, depending on the use case and the skills of the user. In fact, that user might be a SQL-savvy analyst rather than a Python-savvy data scientist. Because the approach is declarative, users can inspect and modify the parameters at their preferred level of detail.
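To make "accept the defaults or adjust them" concrete, here is a minimal sketch of how a declarative config might be resolved against system defaults. The `input_features`/`output_features` layout is loosely modeled on Ludwig-style configs, but the default values, the `SYSTEM_DEFAULTS` table, and the `resolve` helper are hypothetical illustrations, not any product's actual behavior. The user states only what differs from the defaults; everything else is filled in, and the fully resolved config remains inspectable.

```python
import copy
from typing import Any, Dict

# Hypothetical defaults standing in for whatever a real system ships with.
SYSTEM_DEFAULTS: Dict[str, Any] = {
    "preprocessing": {"split": {"train": 0.7, "validation": 0.1, "test": 0.2}},
    "trainer": {"epochs": 10, "learning_rate": 0.001, "batch_size": 128},
}


def resolve(user_config: Dict[str, Any], defaults: Dict[str, Any] = SYSTEM_DEFAULTS) -> Dict[str, Any]:
    """Fill every gap in the user's config with a default; user values always win."""
    merged = copy.deepcopy(defaults)
    for key, value in user_config.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            # Recurse so a user can override one nested value without restating the rest.
            merged[key] = resolve(value, merged[key])
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# The user declares WHAT: which columns go in, what to predict, and one tweak.
user_config = {
    "input_features": [{"name": "purchase_description", "type": "text"}],
    "output_features": [{"name": "is_fraud", "type": "binary"}],
    "trainer": {"epochs": 50},
}

full_config = resolve(user_config)
print(full_config["trainer"])  # {'epochs': 50, 'learning_rate': 0.001, 'batch_size': 128}
```

A novice can stop at the four-line `user_config`; an expert can print `full_config`, see every default that was applied, and override any of them at whatever depth they need.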

Declarative machine learning enables users to specify what they want and let the software figure out how to do it

Declarative ML is similar to AutoML tools that also make default selections and automate part or all of the ML lifecycle. But AutoML might hide certain elements within automated workflows for the sake of simplicity, reducing the ability of data scientists to customize or explain what is happening. Declarative ML maintains full visibility and control.

Benefits. Designed and implemented well, Declarative ML has the potential to democratize implementation of complex algorithms. It can empower data analysts and engineers to tackle basic ML projects — or even complex projects such as natural language processing or computer vision — without waiting on more ML-savvy colleagues such as data scientists and ML engineers. With Declarative ML, they can improve team productivity by spending less time on minutiae and more time on innovation. They can improve agility by assembling and reusing pre-built, modular features and models. Finally, they can maintain oversight of details to ensure explainability and governance.

SQL Precedent. The history of SQL demonstrates the power of declarative approaches to programming. SQL helped drive adoption of relational databases in the 1980s, 1990s, and beyond by simplifying data manipulation and retrieval. For example, analysts define which records they want to query but don’t need to specify how to retrieve them, such as whether to use an index. Decades on, SQL remains analysts’ lingua franca for managing business intelligence projects.
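The index example can be seen directly with SQLite from Python's standard library. This sketch uses a made-up `transactions` table; the point is that the query text never changes, yet SQLite's planner switches from a full table scan to an index lookup once an index exists. The exact wording of the plan output varies between SQLite versions, so the code only prints it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transactions (id INTEGER PRIMARY KEY, merchant TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO transactions (merchant, amount) VALUES (?, ?)",
    [(f"merchant_{i % 50}", i * 1.5) for i in range(1000)],
)

# The declarative part: we state WHICH rows we want, not HOW to find them.
QUERY = "SELECT id, amount FROM transactions WHERE merchant = ?"


def plan(query: str) -> str:
    """Ask SQLite which access path its query planner chose."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + query, ("merchant_7",)).fetchall()
    # The fourth column of each row is a human-readable description of the step.
    return " | ".join(row[3] for row in rows)


before = plan(QUERY)  # no index yet, so the planner has to scan the whole table
conn.execute("CREATE INDEX idx_merchant ON transactions (merchant)")
after = plan(QUERY)   # same query text; the planner now chooses the index

print(before)
print(after)
```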

Recently, data science teams at several large technology companies have applied a declarative approach to ML model development. Their projects include Overton at Apple, Looper at Meta, and Ludwig at Uber.

Overton. Apple developed this project to enable its data scientists and developers to build and operationalize ML or deep learning models without writing code. Users provide a schema that describes the data inputs, the model tasks, and the data flow that supports those tasks. Overton then compiles the schema for frameworks such as TensorFlow or Apple’s Core ML, and finds the right architecture and hyperparameters to support that schema. Overton-based applications have processed billions of queries and trillions of records.

Looper. Meta developed Looper for reasons similar to Apple’s. Looper helps build and operationalize ML models for real-time use cases such as prediction and classification. Based on high-level specifications from the user, Looper recommends configurations of models, parameters, features, and so on, drawing on prepared blueprints. As of April 2022, Looper hosted 700 AI models and generated 4 million AI outputs per second.

Ludwig. Uber’s Ludwig project recommends features and model outputs based on simple user commands, while also enabling users to enter additional specifications for feature processing, model training, etc. They can adapt and scale by adding models, metrics, and functions to a unified configuration system. The startup Predibase offers a Declarative ML system based on Ludwig.
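One common way to let a unified configuration system grow by "adding models, metrics, and functions" is a name-based registry: new components register under a string, and configs refer to them by that string. The sketch below shows that general pattern for metrics only; `register_metric`, `METRICS`, and `evaluate` are hypothetical names chosen for illustration, not Ludwig's actual internals.

```python
from typing import Callable, Dict, List

MetricFn = Callable[[List[int], List[int]], float]

# Hypothetical registry mapping config-level names to implementations.
METRICS: Dict[str, MetricFn] = {}


def register_metric(name: str) -> Callable[[MetricFn], MetricFn]:
    """Decorator that makes a function addressable by name from a config."""
    def decorator(fn: MetricFn) -> MetricFn:
        METRICS[name] = fn
        return fn
    return decorator


@register_metric("accuracy")
def accuracy(y_true: List[int], y_pred: List[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


@register_metric("recall")
def recall(y_true: List[int], y_pred: List[int]) -> float:
    true_pos = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    actual_pos = sum(1 for t in y_true if t == 1)
    return true_pos / actual_pos if actual_pos else 0.0


def evaluate(config: dict, y_true: List[int], y_pred: List[int]) -> Dict[str, float]:
    """Compute whichever metrics the config names; adding one needs no changes here."""
    return {name: METRICS[name](y_true, y_pred) for name in config["metrics"]}


scores = evaluate({"metrics": ["accuracy", "recall"]}, [1, 0, 1, 1], [1, 0, 0, 1])
print(scores)
```

The design choice is that `evaluate` never changes: extending the system means writing one decorated function, after which any config can name it.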

Another innovation in this space is Predictive Query Language (PQL), offered by Predibase, which provides SQL-like commands for managing the ML lifecycle. PQL lets data scientists or data analysts use a familiar declarative interface as they connect to data, then train, deploy, and monitor ML models.

Declarative ML in Practice

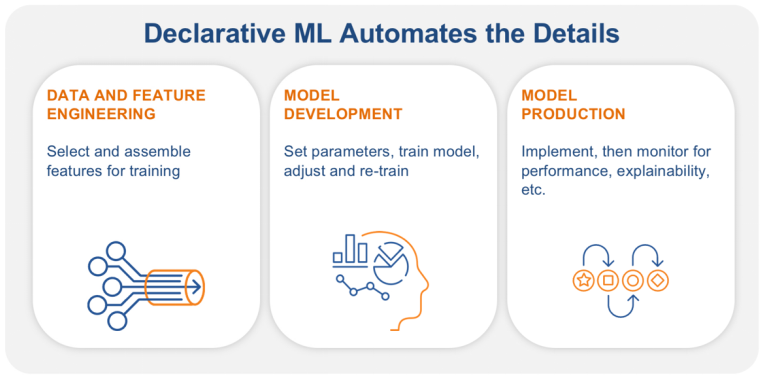

To understand what this looks like in practice, let’s examine how Declarative ML compares with traditional approaches to the three stages of the ML lifecycle: data and feature engineering, model development, and model production.

Data and feature engineering

Traditional approach. In this stage, the data scientist and data engineer ingest and transform data inputs from various sources. They apply common labels to historical outcomes, perform exploratory data analysis, and derive the most telling data inputs, called features, that they think will best predict outcomes.

Declarative ML approach. A Declarative ML system will select and assemble features based on a simple specification from the data scientist or even a data analyst. For example, to detect fraud using features such as purchase description and transaction type, the user just needs to specify each feature’s data type: “text” for purchase description and “category” for transaction type. The Declarative ML system then presents a suggested set of features and a model framework based on those data types.
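Here is a minimal sketch of how the fraud example's type declarations could drive preprocessing automatically. The two preprocessors (lowercase whitespace tokenization for "text", integer ids for "category") are deliberately simple, hypothetical defaults; a real system would choose richer tokenizers and encoders, and would also suggest a model architecture, which this sketch leaves out.

```python
from typing import Callable, Dict, List

# What the user declares: only each feature's name and data type.
config = {
    "input_features": [
        {"name": "purchase_description", "type": "text"},
        {"name": "transaction_type", "type": "category"},
    ],
}


def text_preprocessor(values: List[str]) -> List[List[str]]:
    # Hypothetical default for "text": lowercase, then split on whitespace.
    return [v.lower().split() for v in values]


def category_preprocessor(values: List[str]) -> List[int]:
    # Hypothetical default for "category": give each distinct value an integer id.
    vocab = {v: i for i, v in enumerate(sorted(set(values)))}
    return [vocab[v] for v in values]


# How the system fills in the "how": a lookup keyed on the declared type.
DEFAULTS_BY_TYPE: Dict[str, Callable[[List[str]], list]] = {
    "text": text_preprocessor,
    "category": category_preprocessor,
}


def preprocess(config: dict, rows: List[dict]) -> Dict[str, list]:
    out = {}
    for feature in config["input_features"]:
        column = [row[feature["name"]] for row in rows]
        out[feature["name"]] = DEFAULTS_BY_TYPE[feature["type"]](column)
    return out


rows = [
    {"purchase_description": "Gift card bundle", "transaction_type": "online"},
    {"purchase_description": "Groceries", "transaction_type": "in_store"},
    {"purchase_description": "Gift card", "transaction_type": "online"},
]
features = preprocess(config, rows)
print(features)
```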

Model development

Traditional approach. In this stage, the data scientist experiments with various ML techniques. These range from simple linear regressions that define the relationship between features and outputs to deep learning neural networks that stack many layers for use cases such as image classification or natural language processing. The data scientist spends time training, tuning, and re-tuning parameters to make accurate predictions. They also might need to synchronize elements such as the layered algorithms that feed one another in an artificial neural network.

Declarative ML approach. A Declarative ML system automates much of this stage. Based on a few basic commands from the user, the system creates individual models that handle the tasks described above. The data scientist or analyst reviews each model and how it relates to the others, approves or adjusts each, and kicks off the automated training process. The system inspects the outputs and adjusts features, parameters, and models to make them more accurate. Users can oversee, approve, or override steps along the way.

As with AutoML, users can train models without worrying about the minutiae. But Declarative ML also lets users improve their models by iterating in a transparent and flexible way. They can inspect and make specific changes to features, parameters, and models, to whatever depth their skills allow.
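The "automate, but let users override" loop can be sketched with plain grid search, one of the simplest tuning strategies; the article does not say which search the systems named here actually use. Values the user pins are never varied, and every trial is logged so the user can inspect what the system tried. `toy_validation_score` is a hypothetical stand-in for "train a model, then score it on validation data".

```python
import itertools
from typing import Any, Callable, Dict, List, Tuple

Config = Dict[str, Any]


def tune(
    search_space: Dict[str, List[Any]],
    pinned: Config,
    score: Callable[[Config], float],
) -> Tuple[Config, List[Tuple[Config, float]]]:
    """Grid-search every unpinned parameter; user-pinned values never change.

    Returns the best config plus the full trial log for inspection.
    """
    free = {k: v for k, v in search_space.items() if k not in pinned}
    keys = list(free)
    trials = []
    for combo in itertools.product(*(free[k] for k in keys)):
        candidate = {**pinned, **dict(zip(keys, combo))}
        trials.append((candidate, score(candidate)))
    best_config, _ = max(trials, key=lambda trial: trial[1])
    return best_config, trials


def toy_validation_score(cfg: Config) -> float:
    # Hypothetical stand-in for train-then-evaluate; peaks at lr=0.01 and 64 units.
    return -abs(cfg["learning_rate"] - 0.01) * 100 - abs(cfg["hidden_size"] - 64) / 64


space = {"learning_rate": [0.001, 0.01, 0.1], "hidden_size": [32, 64, 128], "dropout": [0.0, 0.2]}
# The user overrides one choice; the system searches the rest.
best, log = tune(space, pinned={"dropout": 0.2}, score=toy_validation_score)
print(best)
```

Because `dropout` is pinned, only 3 × 3 = 9 combinations are tried instead of 18, and the returned log shows each one alongside its score.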

Model production

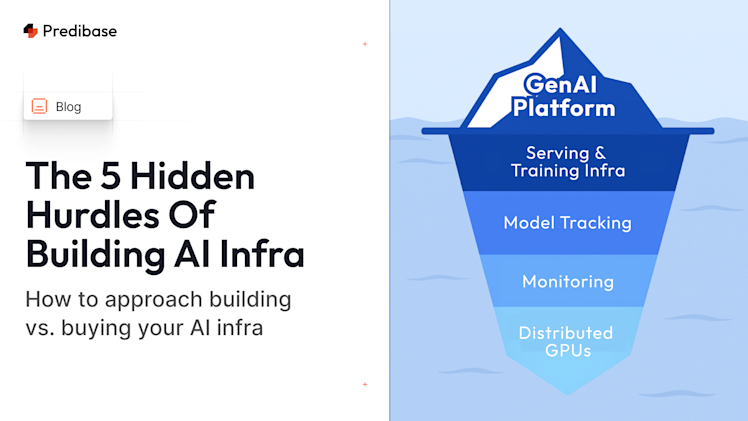

Traditional approach. Once the ML model meets the requirements, the ML engineer takes the trained model from the data scientist and implements it in production applications or workflows. The ML engineer monitors the model’s performance, accuracy, cost, and potential bias. They also collaborate with data stewards to govern the model by cataloging key metrics and intervening when necessary to replace or retrain models. This process can become complex given the varying infrastructure, maintenance, and alerting requirements of different models and use cases.

Declarative ML approach. A well-designed Declarative ML system can assist this stage by abstracting away complex ML infrastructure while providing metrics for model monitoring. It helps users identify drifting data inputs or model outputs and inspect features, parameters, and models, and it recommends actions to optimize these elements or fix issues. For instance, Predibase provides “blue-green” updates that allow users to quickly deploy models to a high-throughput, low-latency endpoint.
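As an illustration of what "identify drifting data inputs" can mean, here is a sketch of the Population Stability Index (PSI), a widely used drift statistic that compares how a feature was distributed at training time with how it is distributed in live traffic. It is one common technique, not necessarily what any product mentioned above computes. The equal-width binning and the `eps` floor for empty bins are simplifying assumptions.

```python
import math
from typing import List, Sequence


def psi(baseline: Sequence[float], current: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a training-time sample and a live sample."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp live values outside the baseline range into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm below stays finite.
        return [max(c / len(values), eps) for c in counts]

    expected, actual = proportions(baseline), proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


baseline = [i / 100 for i in range(1000)]     # e.g. transaction amounts seen in training
shifted = [i / 100 + 5 for i in range(1000)]  # live amounts that have moved upward

stable_score = psi(baseline, baseline)
drift_score = psi(baseline, shifted)
print(stable_score, drift_score)
```

A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, though sensible thresholds depend on the feature and the use case.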

Conclusion

In summary, Declarative ML:

- Has been adopted by Uber (Ludwig), Apple (Overton), and Meta (Looper).

- Offers a higher abstraction layer that provides flexibility, automation, and ease of use.

- Can improve efficiency across the entire ML lifecycle: feature engineering, model development, and production.

While we’re early in the adoption curve, Declarative ML has the potential to reduce the time, effort, and skills required to bring ML into production in a wide range of enterprise environments. If you’d like to see what Declarative ML looks like in practice, I’d recommend requesting a demo from Predibase to learn more.