Reinforcement Learning and Fine-Tuning have historically been treated as separate solutions to separate problems. However, DeepSeek-R1-Zero demonstrated that pure reinforcement learning could be used in place of supervised fine-tuning to achieve domain and task specialization with near state-of-the-art performance.

Reinforcement Fine-Tuning (RFT) is a new alternative to Supervised Fine-Tuning (SFT) that applies reinforcement learning to supervised tasks, improving model performance on specific tasks and domains. As the method used to train DeepSeek-R1-Zero from the DeepSeek-V3 base model, RFT marks a paradigm shift for the industry: it opens up domains and use cases previously considered incompatible with SFT, and it can deliver meaningful performance gains with as few as a dozen examples.

Does this mean SFT is dead? Long live RFT? Well, not exactly…

From our team’s testing over the past month, we’ve identified three sufficient conditions for choosing RFT over SFT for fine-tuning:

- You don’t have labeled data, but you can verify the correctness of the output (e.g., transpiling source code).

- You do have some labeled data, but not much (rule of thumb: less than 100 labeled examples).

- Task performance improves significantly when you apply chain-of-thought (CoT) reasoning at inference time.

In this blog, we’ll explain the key differences between RFT and SFT and break down how the above factors become the criteria for selecting one approach over the other. We’ll walk through experiments that compare RFT and SFT across a variety of tasks and training-set sizes. By the end of the blog, you’ll have a simple framework for deciding which method better suits your real-world use cases, and actionable next steps for getting started with both RFT and SFT.

Bonus: you can try the RFT LoRA we trained (discussed below) yourself. Find it here on Hugging Face.

RFT vs SFT: What are the Differences?

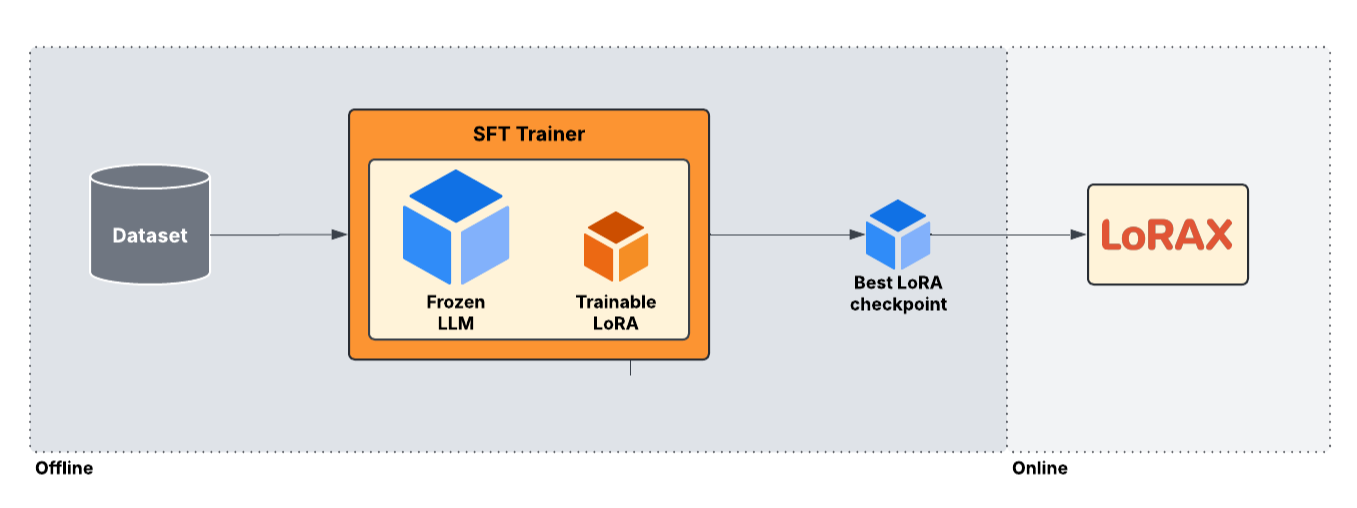

By now most of us have tried — or at least heard of — SFT (supervised fine-tuning). The process starts with a labeled training dataset consisting of prompt and completion pairs, and the objective of the training process is to adjust the weights of (or a LoRA adapter wrapping) the base model to more consistently generate output that matches the target completion from the training data. The entire process is an offline learning algorithm, meaning that the dataset is static throughout the fine-tuning run. This limits model performance: the best the model can do is perfectly mimic the training data.

SFT is an offline process in which the model iteratively learns to generate output that matches the ground truth from a static training dataset. Once this process converges, the final (or best) training checkpoint is deployed to production using an inference system like LoRAX.

RFT (reinforcement fine-tuning) — a term coined by OpenAI in late 2024 — is another name for a technique known as Reinforcement Learning with Verifiable Rewards, an approach pioneered by Ai2 with their work on Tülu 3 from November 2024. While reinforcement learning techniques like reinforcement learning from human feedback (RLHF) have been widely adopted by the LLM community for some time, RFT is unique in that it directly solves for the same type of problem as SFT: improving model performance on specific tasks where there exists a “correct” answer (rather than simply a preferred answer, as is the case with RLHF).

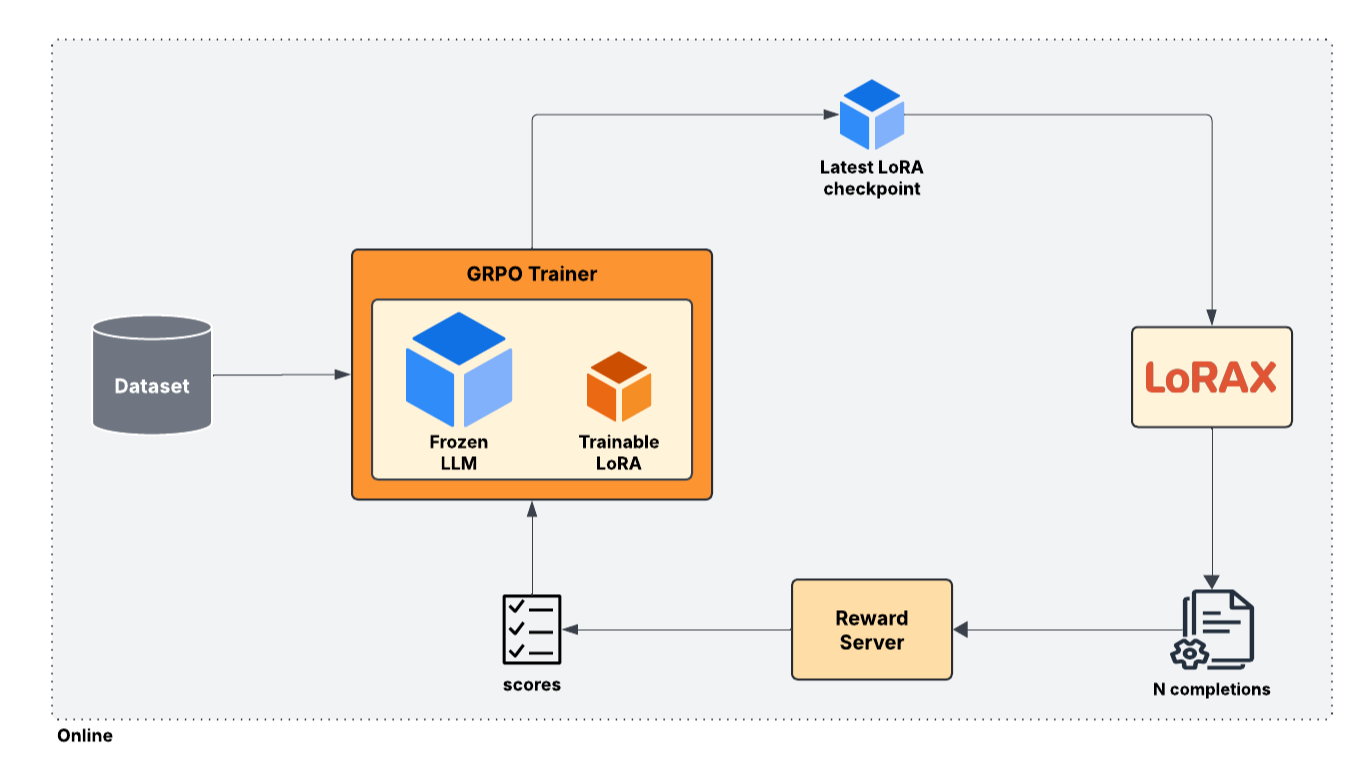

RFT is an online process, where the latest version of the model is used to generate new examples, which are then scored by a separate Reward Server. The scores are then used in the loss computation to incentivize the model to generate outputs with higher reward, and discourage outputs that lead to low reward.

There are several different algorithms that can be used to perform RFT, including Proximal Policy Optimization (PPO), Online Direct Preference Optimization (DPO), and most recently Group Relative Policy Optimization (GRPO). GRPO has rapidly taken off in popularity due to the success of DeepSeek-R1-Zero, which used the technique, as well as its relative simplicity and reduced GPU memory overhead compared to PPO (GRPO estimates advantages from a group of sampled completions instead of training a separate value model). In our experiments, we used GRPO because, unlike PPO, it worked well out of the box with minimal hyperparameter tuning.

At each step in the GRPO training process, the trainer produces a new LoRA checkpoint containing the most up-to-date version of the fine-tuned weights. An inference server such as LoRAX dynamically loads these weights at runtime and generates N (usually between 8 and 64) candidate completions for every element of the batch, using temperature > 0 so that the completions are randomly sampled and differ from one another. A separate Reward Server then verifies the correctness of each generation and assigns it a score (a scalar value). By comparing the scores within each group, the GRPO trainer computes advantages that are used to update the model weights, incentivizing output that received a higher relative score and discouraging low-scoring output.
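The group comparison in the last step can be sketched in a few lines of Python. This is a minimal sketch of the usual GRPO normalization (subtract the group's mean reward, divide by its standard deviation), assuming rewards arrive as plain floats; the function name and the `eps` constant are our own choices, and real trainers operate on tensors and add further terms such as a KL penalty.

```python
import statistics


def group_relative_advantages(rewards, eps=1e-4):
    """Turn one prompt's group of reward scores into GRPO-style advantages.

    Each completion's advantage is its reward minus the group mean, scaled by
    the group's standard deviation. If every completion scored the same, all
    advantages are zero and the prompt contributes no learning signal.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; implementations differ
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical scores from a reward server for N = 4 completions of one prompt.
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Completions that beat their siblings get positive advantages and are reinforced; the rest are pushed down, which is why no separate value model is needed.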

Key differences between SFT and RFT are:

- SFT is offline, RFT is online. This means the data used to steer the training process is evolving over time, such that rather than having a single right answer the model attempts to mimic, the model explores different strategies (e.g., reasoning chains) that might not have been discovered prior to training.

- SFT learns from labels, RFT learns from rewards. This means RFT can be used even for problems where labeled data is scarce or doesn’t exist. So long as you can automatically verify the correctness of the output, you can use RFT. It also means RFT is less likely to overfit or “memorize” the right answer to a prompt, as it is never given the answer explicitly during training.
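To make the labels-versus-rewards distinction concrete, here is a deliberately simplified sketch of the two objectives, operating on plain lists of per-token log-probabilities instead of tensors. `sft_loss` and `rft_loss` are hypothetical names, and the RFT version is a bare policy-gradient term that omits the ratio clipping and KL penalty GRPO adds in practice.

```python
def sft_loss(label_token_logprobs):
    """SFT: average negative log-likelihood of the *labeled* completion's tokens.

    Minimizing it pushes the model toward reproducing the label exactly.
    """
    return -sum(label_token_logprobs) / len(label_token_logprobs)


def rft_loss(sampled_token_logprobs, advantage):
    """Simplified RFT objective on the model's *own sampled* completion.

    The advantage comes from the reward, not from a label: a positive advantage
    makes minimizing the loss raise the sample's probability, a negative one
    lowers it. (With autodiff, the advantage is treated as a constant.)
    """
    return -advantage * sum(sampled_token_logprobs) / len(sampled_token_logprobs)


label_logprobs = [-0.2, -1.5, -0.7]   # log p(label token | context)
sample_logprobs = [-0.1, -0.3, -2.0]  # log p(sampled token | context)
print(sft_loss(label_logprobs))
print(rft_loss(sample_logprobs, advantage=1.0))
print(rft_loss(sample_logprobs, advantage=-1.0))
```

Note that the answer never appears in `rft_loss`: the model only ever sees its own samples and a score.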

In the following sections we’ll show how these differences translate into tangible benefits of RFT for certain use cases by comparing performance of RFT and SFT on two different datasets: Countdown and LogiQA.

RFT Needs Verifiers, Not Labels

Unlike SFT, RFT does not explicitly require labeled data, but it does require an objective, algorithmic way to determine whether the generated output is “correct” or “incorrect”. In the simplest case, where labeled data exists, this is as straightforward as checking that the generated output matches the target output from the ground truth dataset. However, RFT is more general than SFT because it can also be applied in cases where exact labels don’t exist.
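When labels do exist, the verifier can be as simple as an exact-match check. The sketch below assumes a prompt convention not described in this post, in which the model wraps its final answer in `<answer>...</answer>` tags; the function names are hypothetical.

```python
import re


def extract_answer(completion):
    # Assumed convention: the final answer is wrapped in <answer>...</answer>.
    # Fall back to the whole completion if the tags are missing.
    match = re.search(r"<answer>(.*?)</answer>", completion, flags=re.DOTALL)
    return match.group(1) if match else completion


def normalize(text):
    # Ignore case and differences in whitespace.
    return " ".join(text.split()).lower()


def exact_match_reward(completion, label):
    """1.0 if the extracted answer matches the ground-truth label, else 0.0."""
    return 1.0 if normalize(extract_answer(completion)) == normalize(label) else 0.0


print(exact_match_reward("Let me think... <answer> B </answer>", "b"))
```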

One example is transpiling source code, where the task is to convert a program from one language to another (e.g., Java to Python). In such a case, you may not have the corresponding Python program to use as training data for all your Java programs, but you can use a Python interpreter to run the generated code and verify its outputs match those of the Java program on its test cases.
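A transpilation verifier along these lines might look like the sketch below. It assumes each generated Python program reads its input from stdin and writes its result to stdout, and that the expected outputs were obtained by running the original Java program on the same test inputs. `transpile_reward` is a hypothetical name, and returning the fraction of passing tests (rather than all-or-nothing) is a design choice. In practice, model-generated code should run in a proper sandbox; this sketch only applies a timeout.

```python
import subprocess
import sys


def transpile_reward(python_source, test_cases, timeout=5.0):
    """Fraction of (stdin, expected_stdout) test cases the generated program passes."""
    if not test_cases:
        return 0.0
    passed = 0
    for stdin_text, expected_stdout in test_cases:
        try:
            result = subprocess.run(
                [sys.executable, "-c", python_source],
                input=stdin_text,
                capture_output=True,
                text=True,
                timeout=timeout,
            )
        except subprocess.TimeoutExpired:
            continue  # hanging programs earn no credit for this case
        if result.returncode == 0 and result.stdout.strip() == expected_stdout.strip():
            passed += 1
    return passed / len(test_cases)


# Hypothetical model output for a Java program that adds two integers.
generated = "a, b = map(int, input().split())\nprint(a + b)"
tests = [("1 2\n", "3"), ("10 -4\n", "6")]  # expected outputs taken from the Java program
print(transpile_reward(generated, tests))
```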

Another example is game playing, where it’s easy to verify correctness (whether you won or lost the game), but difficult to precisely specify the best strategy for winning (the label). This was demonstrated by Jiayi Pan when he showed the “aha” moment from the DeepSeek paper (where it learned to reason intelligently about the task) could be recreated to solve the very specific task of playing the game Countdown.
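For Countdown, the reward can check an equation without knowing any intended solution. This sketch assumes (our assumption about the task rules) that only `+`, `-`, `*`, `/` are allowed and that every provided number must be used exactly once. It parses the answer with Python's `ast` module instead of calling `eval`, so arbitrary model output is never executed, and uses `Fraction` for exact division. `countdown_reward` is a hypothetical name.

```python
import ast
import operator
from collections import Counter
from fractions import Fraction

# Only these four binary operators are allowed in an answer.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _evaluate(node):
    """Evaluate a parsed expression exactly, rejecting any other syntax."""
    if isinstance(node, ast.Expression):
        return _evaluate(node.body)
    if isinstance(node, ast.Constant) and type(node.value) is int:
        return Fraction(node.value)
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    raise ValueError("disallowed syntax in equation")


def countdown_reward(equation, numbers, target):
    """1.0 if `equation` uses every number exactly once and equals `target`."""
    try:
        tree = ast.parse(equation, mode="eval")
    except SyntaxError:
        return 0.0
    used = [n.value for n in ast.walk(tree) if isinstance(n, ast.Constant)]
    if Counter(used) != Counter(numbers):
        return 0.0
    try:
        return 1.0 if _evaluate(tree) == target else 0.0
    except (ValueError, ZeroDivisionError):
        return 0.0


print(countdown_reward("(3 * 5) - 2", [3, 5, 2], 13))
```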

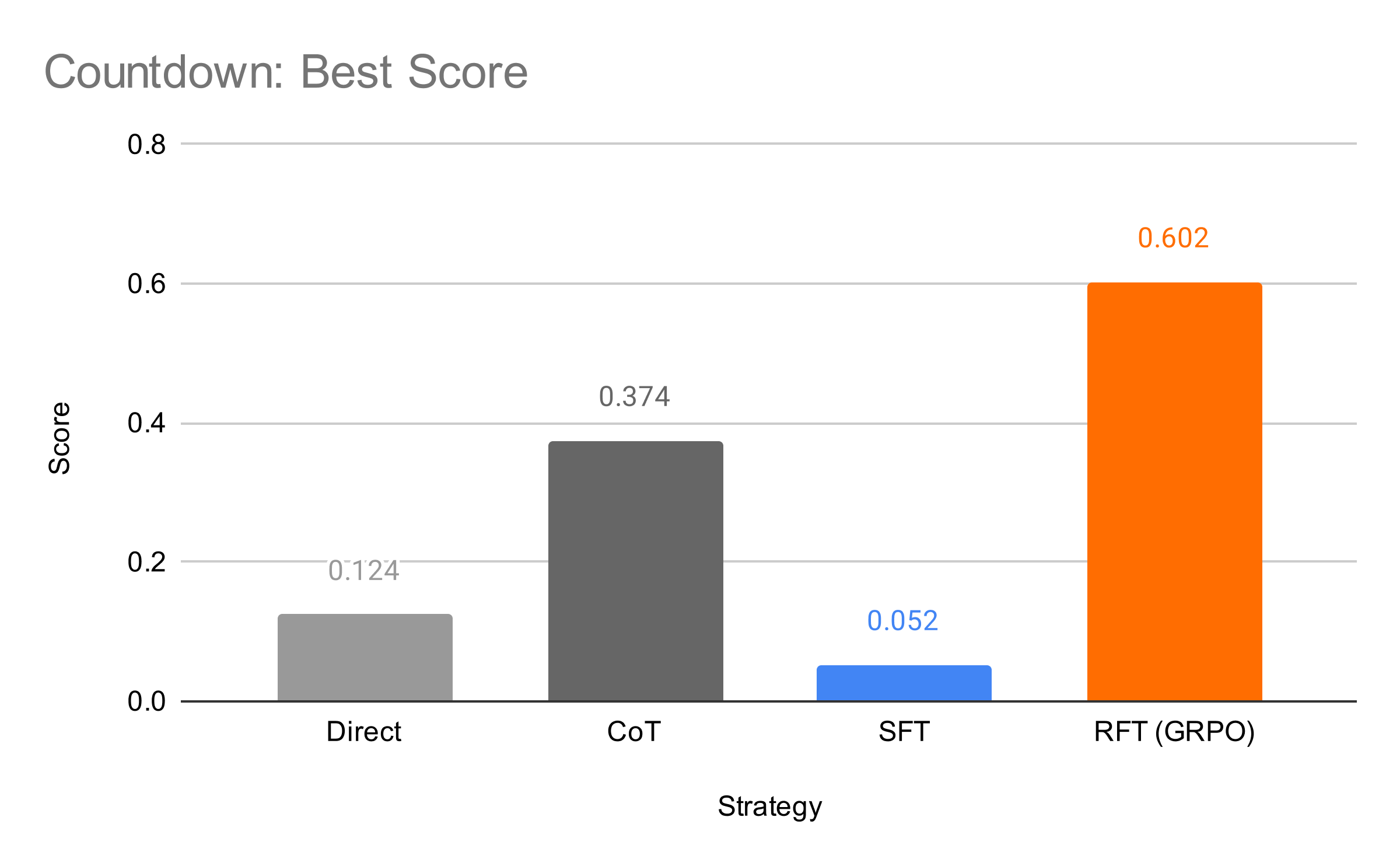

Following Philipp Schmid’s reproduction of these results, we ran a set of tests with RFT (using GRPO) on this dataset. Additionally, using a solver to curate a dataset consisting of problems and ground truth solutions, we fine-tuned models with SFT. For all our experiments (both RFT and SFT) we used Qwen-2.5-7B-Instruct as the base model with LoRA fine-tuning, rank 64, targeting all linear layers, and running for 1000 steps over 1000 examples with batch size 8.
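The shared training setup can be written down as a small config. This is a plain-Python record of the hyperparameters listed above, not a Predibase or PEFT API; the field names are hypothetical, and the `epochs` property assumes each step consumes one batch of 8 distinct training prompts (for GRPO, each prompt additionally yields N sampled completions).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperimentConfig:
    """Hypothetical record of the shared RFT/SFT setup described above."""
    base_model: str = "Qwen-2.5-7B-Instruct"  # name as written above, not a hub ID
    lora_rank: int = 64
    lora_targets: str = "all linear layers"
    max_steps: int = 1000
    batch_size: int = 8
    num_train_examples: int = 1000

    @property
    def epochs(self) -> float:
        # Assumes each step draws one batch of `batch_size` distinct prompts.
        return self.max_steps * self.batch_size / self.num_train_examples


print(ExperimentConfig().epochs)
```

Under that assumption, 1000 steps at batch size 8 works out to 8 passes over the 1000 training examples.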

RFT improves accuracy on the task by 62%, while SFT actually hurts performance significantly. Direct here refers to prompting the model to directly provide an answer without reasoning, while CoT refers to chain-of-thought prompting, where we tell the model to “think step by step”.

Despite the fact that we were able to generate a label for this task using a brute force search, it didn’t help the model (we’ll discuss this more in the upcoming section on chain-of-thought). For more complex problems, brute force solving might be computationally infeasible, while verification remains cheap. For the CS majors out there, this is analogous to the class of problems known as NP: problems whose solutions can be verified in polynomial time, but for which finding a solution in polynomial time is not guaranteed.
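A brute-force Countdown solver can be sketched as an exhaustive search that repeatedly combines two remaining numbers with one of the four operators until a single value is left. This is a hypothetical illustration, not the solver we used to build the SFT dataset. The number of combinations it explores grows combinatorially with the count of numbers, whereas checking one candidate equation takes a single pass over it.

```python
from fractions import Fraction
from itertools import combinations


def solve_countdown(numbers, target):
    """Exhaustively search for an expression that uses every number exactly once.

    Returns an expression string, or None if no combination reaches `target`.
    """
    def search(items):
        # `items` holds (exact value, expression string) pairs still to combine.
        if len(items) == 1:
            value, expr = items[0]
            return expr if value == target else None
        for i, j in combinations(range(len(items)), 2):
            (a, ea), (b, eb) = items[i], items[j]
            rest = [item for k, item in enumerate(items) if k not in (i, j)]
            candidates = [
                (a + b, f"({ea} + {eb})"),
                (a * b, f"({ea} * {eb})"),
                (a - b, f"({ea} - {eb})"),
                (b - a, f"({eb} - {ea})"),
            ]
            if b != 0:
                candidates.append((a / b, f"({ea} / {eb})"))
            if a != 0:
                candidates.append((b / a, f"({eb} / {ea})"))
            for value, expr in candidates:
                found = search(rest + [(value, expr)])
                if found is not None:
                    return found
        return None

    return search([(Fraction(n), str(n)) for n in numbers])


print(solve_countdown([3, 5, 2], 13))
```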

If you’re interested in trying it out, you can find our best performing RFT LoRA on Hugging Face.

RFT Beats SFT When Data is Scarce

When you only have dozens of examples, SFT can be prone to overfitting (memorizing) individual examples rather than learning general patterns. RFT is more resilient to this kind of memorization and can learn general strategies from just a dozen or so examples. Conversely, when you have lots of data (>100k examples), RFT can be slow to train, while SFT can start to derive highly general patterns from the variety of examples.

Revisiting the Countdown game, we ran an additional set of experiments with different training dataset sizes: 10, 100, and 1000 examples.

0 examples refers to 0-shot prompting, where we provide no demonstrations of the task. At 10 and 100 examples, the same rows used for training are used for in-context learning for consistency. At 1000 examples, we could not perform ICL due to context window limitations.
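The in-context-learning baseline can be sketched as a prompt builder that prepends the solved training rows to each query. The `Problem:`/`Answer:` layout, the dict keys, and the ~4 characters-per-token estimate are all assumptions for illustration; a real length check would use the model's tokenizer and its actual context window, which is what rules out 1000 examples.

```python
def build_few_shot_prompt(instruction, examples, query, max_tokens=None, chars_per_token=4):
    """Build an in-context-learning prompt from solved examples.

    `examples` are dicts with hypothetical "problem"/"answer" keys. With no
    examples this reduces to a zero-shot prompt.
    """
    shots = [f"Problem: {ex['problem']}\nAnswer: {ex['answer']}" for ex in examples]
    prompt = "\n\n".join([instruction, *shots, f"Problem: {query}\nAnswer:"])
    # Crude length estimate (~4 characters per token), not a real tokenizer.
    if max_tokens is not None and len(prompt) / chars_per_token > max_tokens:
        raise ValueError("few-shot prompt likely exceeds the context window")
    return prompt


print(build_few_shot_prompt("Solve it.", [{"problem": "1+1", "answer": "2"}], "2+2"))
```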

Even with just 10 examples, RFT is able to improve on base model chain-of-thought by 18%, and improves by 60% at 100 examples (where CoT begins to degrade, likely due to the Lost in the Middle phenomenon). SFT performs consistently poorly at all dataset sizes on this task.

Let’s examine another dataset: LogiQA, a multiple-choice dataset for testing deductive and logical reasoning.

Note that the y-axis starts at 0.4, as absolute differences are relatively minor for this task.

In contrast to the Countdown task, the difference between direct prompting and chain-of-thought is relatively minor (+2% at 0-shot, -2% at 10-shot). Also different is that at higher numbers of training examples (100+) SFT starts to outperform RFT for this task. We’ll discuss this more in the next section, but we can get an intuition for why this occurs by looking at the difference — or lack thereof — between direct and chain-of-thought performance on this task. Because reasoning doesn’t help boost performance on the base model, improving the reasoning through RFT doesn’t help as much as one would hope.

But one very notable similarity between the LogiQA results and Countdown is that at a low number of examples (10) RFT outperforms both SFT and base model performance, while SFT overfits and underperforms the base model. This appears to be a general pattern across a variety of tasks, and in fact performance with small amounts of data can be further improved through writing more granular reward functions and increasing the number of generations per step.
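One way to make a reward more granular is to give partial credit for intermediate milestones instead of a single pass/fail score. The sketch below does this for a four-option multiple-choice task such as LogiQA; the `<think>`/`<answer>` output format and the 0.2/0.2/0.6 weights are assumptions for illustration, not the reward functions used in our experiments.

```python
import re

# Assumed output format: reasoning in <think> tags, then the chosen option in <answer> tags.
RESPONSE_RE = re.compile(r"<think>.*?</think>\s*<answer>(.*?)</answer>", re.DOTALL)
VALID_OPTIONS = {"A", "B", "C", "D"}


def granular_reward(completion, correct_option):
    """Partial credit instead of pass/fail (weights are illustrative)."""
    match = RESPONSE_RE.fullmatch(completion.strip())
    if match is None:
        return 0.0                    # wrong format: no credit
    reward = 0.2                      # followed the think/answer format
    answer = match.group(1).strip().upper()
    if answer in VALID_OPTIONS:
        reward += 0.2                 # picked a valid option letter
    if answer == correct_option.strip().upper():
        reward += 0.6                 # picked the correct option
    return reward


print(granular_reward("<think>reasoning</think>\n<answer>B</answer>", "B"))
```

With a binary reward, a group in which every sample is wrong yields identical scores and therefore no signal; partial credit lets the trainer distinguish "wrong but well-formed" from "malformed".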

As before, you can find our best performing SFT LoRA on Hugging Face here.

RFT Improves Reasoning for Chain-of-Thought

RFT can help improve reasoning strategies when using chain-of-thought prompting or reasoning models like DeepSeek-R1. While SFT can be used to distill strong reasoning from a more powerful teacher model into a smaller student, it cannot be used to improve reasoning capability over the baseline set by the teacher model.

Another nice property of RFT is that it can be used to elicit advanced reasoning capabilities from a non-reasoning base model (the so-called “aha” moment from the DeepSeek paper). In our tests, we tried both the base Qwen-2.5-7B-Instruct and DeepSeek-R1-Distill-Qwen-7B, and found that both improved through RFT; in fact, Qwen outperformed DeepSeek across the board.

Importantly, not every task benefits equally from chain-of-thought reasoning, even when the task appears well suited to it from a human perspective (e.g., LogiQA). In general, whenever sufficient labeled data is available, SFT that trains the model to answer directly, without chain-of-thought, should almost always be run as a baseline.

But in cases where chain-of-thought shows clear improvement over direct answering even without fine-tuning (e.g., Countdown), RFT will be the clear winner due to its ability to refine and improve reasoning strategies during training. The one exception to this is when extremely high quality reasoning data is available (as was recently demonstrated in s1: Simple test-time scaling).

Choosing the Right Fine-Tuning Method

Putting it all together: how should you ultimately decide when to use RFT or SFT to improve model performance on your task? Based on everything we’ve observed to date, here’s our heuristic process for choosing a fine-tuning method:

RLHF is best suited to tasks based on subjective human preferences, like creative writing or ensuring chatbots handle off-topic requests appropriately. RFT and SFT are best at tasks with objectively correct answers.
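As a rough summary, the three conditions listed at the start of this post, plus the RLHF note above, can be encoded as a small decision function. It is a simplification of our heuristic rather than a reproduction of it: the function and parameter names are hypothetical, the 100-example threshold is the rule of thumb from earlier, and it ignores the exception for extremely high quality reasoning data.

```python
def suggest_method(*, subjective_task, has_verifier, num_labeled, cot_helps_base_model):
    """Rough encoding of the RFT-vs-SFT conditions from this post (a simplification)."""
    if subjective_task:
        return "RLHF"                  # preferences, not correct answers
    # Labeled data doubles as a verifier: just compare against the label.
    can_verify = has_verifier or num_labeled > 0
    if not can_verify:
        return "collect labels or build a verifier first"
    if num_labeled < 100:
        return "RFT"                   # no labels, or too few for SFT
    if cot_helps_base_model:
        return "RFT"                   # RFT can refine the reasoning itself
    return "SFT"                       # plenty of labels, CoT doesn't help


print(suggest_method(subjective_task=False, has_verifier=True,
                     num_labeled=0, cot_helps_base_model=False))
```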

Getting Started with RFT and SFT

RFT is just getting started, and we're at the forefront of making it practical and impactful. Watch this webinar to see how we’re applying RFT with GRPO at Predibase, including how we trained a model to generate optimized CUDA kernels from PyTorch code.

In our next blog, we’ll break down this use case step by step, sharing key takeaways and lessons learned.

If you’re interested in applying RFT to your use case, reach out to us at Predibase; we’d love to work with you. And if you’re looking to try SFT as well, you can get started for free with Predibase today.

Reinforcement Learning FAQ

What is Reinforcement Fine-Tuning (RFT) in machine learning?

Reinforcement Fine-Tuning (RFT) is a method that applies reinforcement learning techniques to fine-tune language models without requiring labeled data. Unlike traditional Supervised Fine-Tuning (SFT), which adjusts model weights based on fixed prompt-completion pairs, RFT optimizes model behavior using a reward function that scores the correctness of generated outputs. This allows models to self-improve by iteratively refining their responses.

How is Reinforcement Fine-Tuning (RFT) different from Supervised Fine-tuning (SFT)?

Supervised Fine-Tuning (SFT) is an offline process that trains models on fixed labeled datasets, making it ideal for large, high-quality data but prone to overfitting with small datasets. Reinforcement Fine-Tuning (RFT) is an online process that improves models using reward-based feedback, eliminating the need for labeled data if correctness can be verified. RFT is better for reasoning tasks like Chain-of-Thought (CoT) and excels when labeled data is scarce. SFT is best for structured datasets, while RFT shines in exploratory learning.

How does reinforcement fine-tuning differ from supervised fine-tuning?

While supervised fine-tuning relies on labeled datasets to adjust model parameters, reinforcement fine-tuning utilizes a reward-based system to iteratively improve model performance, making it more effective in scenarios with scarce labeled data.

Why is Reinforcement Learning advantageous when data is scarce?

Reinforcement Learning can be beneficial in data-scarce scenarios because it doesn't rely solely on large labeled datasets. Instead, the agent learns optimal behaviors through interactions, making it suitable for environments where obtaining extensive labeled data is challenging.

When should I use RFT over SFT?

RFT could be the right technique for fine-tuning if:

- You don't have labeled data, but you can verify correctness.

- You have limited labeled data (rule of thumb: fewer than 100 examples).

- Your task benefits from chain-of-thought reasoning (CoT), where step-by-step logical thinking improves results.

What techniques are used in Reinforcement Fine-tuning (RFT)?

RFT uses reinforcement learning algorithms to guide model fine-tuning. The most commonly used techniques include:

- Group Relative Policy Optimization (GRPO): Efficient and widely adopted due to lower GPU memory requirements. Used in DeepSeek-R1-Zero.

- Proximal Policy Optimization (PPO): A policy-gradient method that limits the size of each update with a clipped objective. It trains a separate value (critic) model alongside the policy, which makes it more computationally expensive.

- Online Direct Preference Optimization (DPO): Similar to GRPO, as both optimize model outputs based on relative preference ranking. However, DPO uses a single preference pair, where one response is ranked better than the other, while GRPO calculates advantage across an entire group of generated responses (which can range from 2 to any value of K), allowing for more flexible optimization.
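For contrast with GRPO's group-based advantages, the standard DPO objective for a single preference pair can be written as below. It takes summed sequence log-probabilities under the policy being trained and under a frozen reference model; `beta` controls how far the policy may drift from the reference, and 0.1 is only an illustrative default. In online DPO, the chosen/rejected pair would come from ranking freshly sampled completions, which this sketch leaves out.

```python
import math


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """-log(sigmoid(margin)) for one preference pair (sequence log-probs)."""
    # How much more the policy prefers the chosen response than the reference does.
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # Numerically stable form of -log(sigmoid(margin)) = log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


print(dpo_loss(-12.0, -15.0, -13.0, -14.0))
```

Each DPO update compares exactly two responses, whereas GRPO normalizes rewards across all N completions sampled for a prompt.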

These methods help models iteratively improve performance without needing explicit labeled data.

How does Reinforcement Fine-Tuning (RFT) help with Chain-of-Thought (CoT) reasoning?

RFT improves Chain-of-Thought (CoT) reasoning by allowing models to experiment and refine multi-step reasoning strategies rather than memorizing fixed answers.

- Unlike SFT, which simply learns to reproduce CoT examples, RFT encourages models to discover new reasoning approaches that maximize correctness.

- RFT is particularly useful when the base model benefits from CoT prompting but does not yet execute reasoning effectively.

- Our experiments show that RFT-trained models perform better on reasoning-intensive tasks like the Countdown game, where step-by-step logic is critical.

This makes RFT a powerful tool for improving structured reasoning, logic-based decision-making, and mathematical problem-solving.

Does Reinforcement Fine-tuning (RFT) require labeled data?

No, RFT does not require labeled data in the traditional sense. Instead of using prompt-completion pairs, RFT relies on a reward function to determine whether a model-generated output is correct.

However, RFT does require a way to verify correctness (e.g., an automated scoring mechanism). Some examples include:

- Code Transpilation: Use a Python interpreter to check if generated code produces the correct output.

- Math & Logic Tasks: Use a solver or rule-based function to evaluate answers.

- Game AI: Verify success through game outcomes (win/loss states).

If you have labeled data, you can still use it to help define a reward function—but it’s not required for RFT to work effectively.

How can I get started with Reinforcement Fine-tuning?

The best way to get started with Reinforcement Fine-Tuning (RFT) or Supervised Fine-Tuning (SFT) is by joining our early access program at Predibase. Our platform makes it easy to fine-tune and deploy open-source LLMs without the complexity of managing infrastructure.

By requesting a demo, you’ll get:

✅ Hands-on access to our cutting-edge RFT and SFT tools

✅ Expert guidance on optimizing your models for performance and efficiency

✅ Early access to advanced fine-tuning methods like GRPO for RFT

🚀 Be among the first to leverage RFT at scale! Request a demo today and see how Predibase can help you train and serve custom LLMs with minimal effort.