Arnav Garg

Arnav Garg is the Machine Learning Lead at Predibase, where he builds high-throughput LLM infrastructure and leads the development of efficient fine-tuning techniques such as Turbo LoRA. His work focuses on scalable training, fast inference, and practical ML systems for deploying custom models on user data.

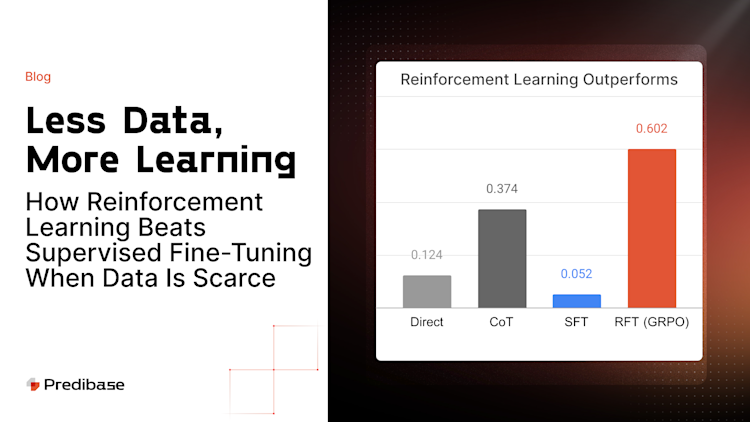

Why Reinforcement Learning Beats SFT with Limited Data

How RFT Beats SFT When Data Is Scarce

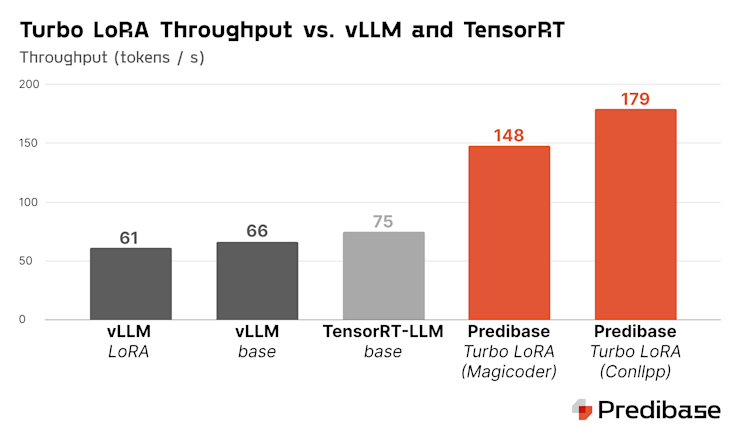

Turbo LoRA: 2-3x faster fine-tuned LLM inference

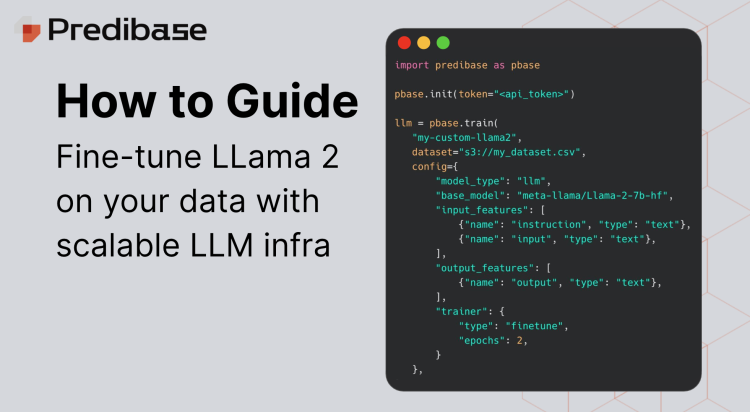

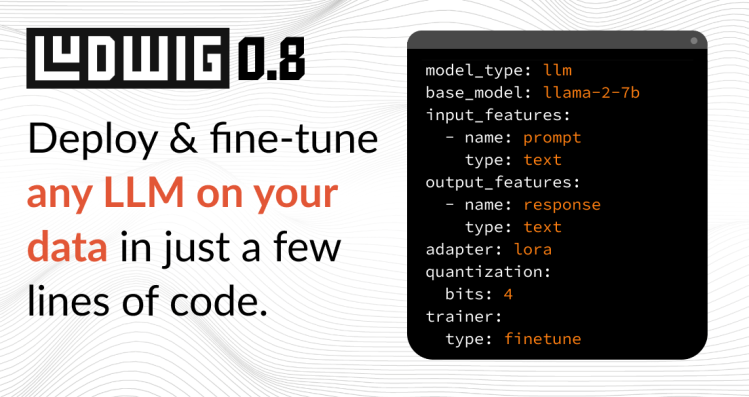

How to Fine-Tune LLaMA-2 on Your Own Data at Scale

How to Fine-Tune LLaMA-2 with Scalable Infra

Train AI to Write GPU Code via Reinforcement Fine-Tuning

A Deep Dive into Reinforcement Fine-Tuning

Fine-Tune CodeLlama-7B to Generate Python Docstrings

Generate Docstrings with Fine-Tuned CodeLlama
