To learn more about RFT, sign up for our webinar on April 3.

Today we're launching the first end-to-end platform for reinforcement fine-tuning (RFT).

When we started Predibase four years ago, our goal was to make it easy for any developer to build and deploy fit-for-purpose models on their data. Since then, we’ve heard the feedback loud and clear: people see amazing results fine-tuning models for their tasks, but access to high-quality labeled data is most often the blocker. That changes today.

OpenAI first offered a glimpse of a managed RFT solution during the 12 days of OpenAI, but that offering has remained in research preview and is limited to OpenAI’s proprietary models. Since then, DeepSeek-R1 has captivated the world’s attention by showing that reinforcement learning can be one of the most powerful techniques for improving LLM performance in a data- and compute-efficient way, and by open-sourcing novel techniques like GRPO (Group Relative Policy Optimization).

Now, we’re releasing a fully managed, serverless, end-to-end platform for reinforcement fine-tuning.

Try it yourself today in our interactive RFT playground.

What is RFT & Why We’re Excited

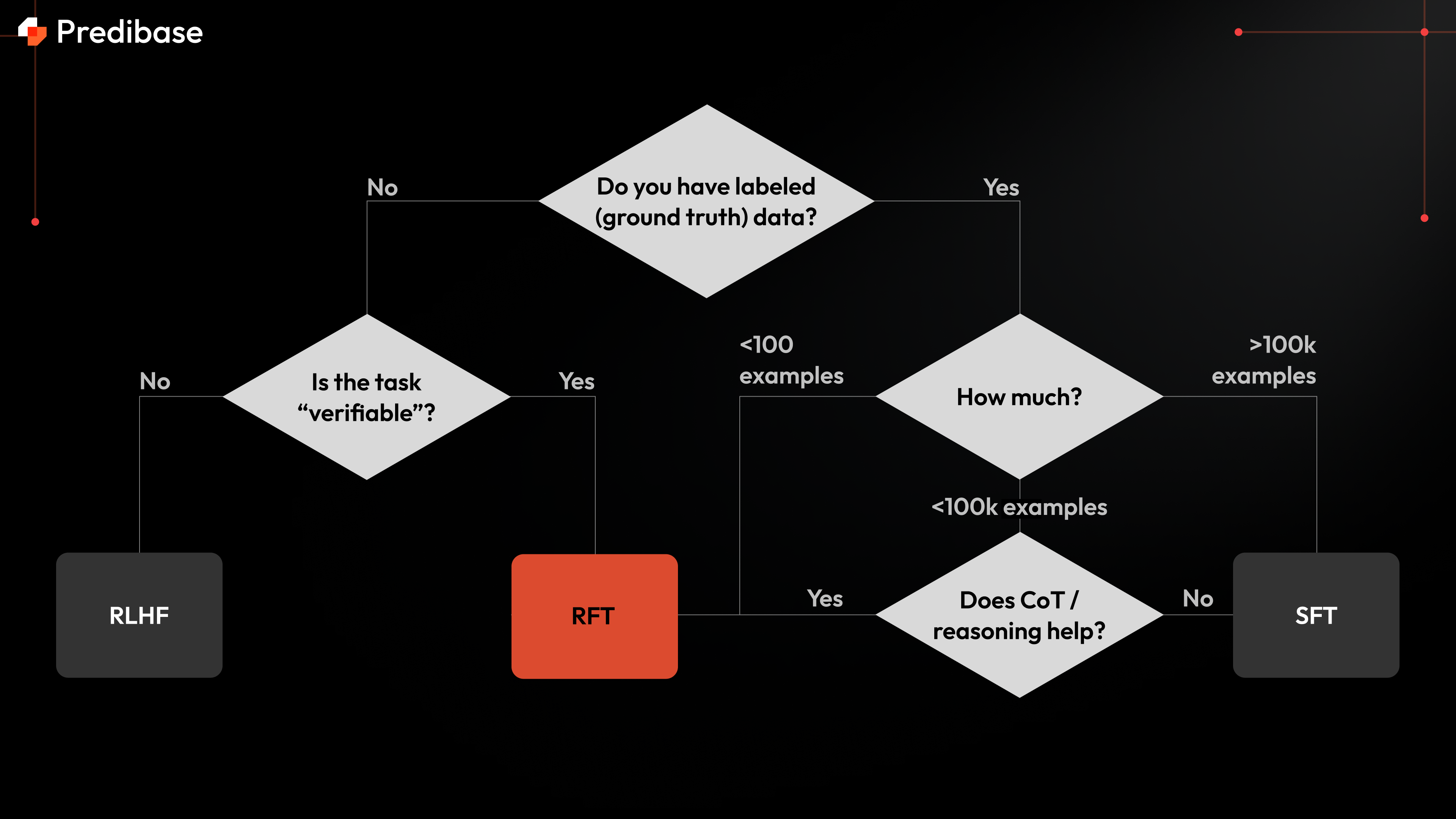

Reinforcement fine-tuning allows an LLM to learn from reward functions that score its outputs and steer it toward desired outcomes, rather than purely from labeled examples as in supervised fine-tuning (SFT). The technique works particularly well for reasoning tasks, the kind of problems where models like DeepSeek-R1 and OpenAI o1 excel, and in settings where you have only a small amount of labeled data but can write rubrics to score the model’s outputs.
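To make "rubric" concrete, here is a minimal sketch of a rubric-style reward function. It assumes a hypothetical output convention where the model puts its reasoning in `<think>` tags and its final answer in `<answer>` tags; the function names and the 0.2/0.8 weighting are illustrative choices, not Predibase's reward function interface.

```python
import re


def format_reward(completion: str) -> float:
    """1.0 if the completion follows the (assumed) <think>...</think><answer>...</answer> layout."""
    pattern = r"<think>.*?</think>\s*<answer>.*?</answer>"
    return 1.0 if re.search(pattern, completion, flags=re.DOTALL) else 0.0


def answer_reward(completion: str, expected: str) -> float:
    """1.0 if the text inside <answer> matches the expected answer after trimming whitespace."""
    match = re.search(r"<answer>(.*?)</answer>", completion, flags=re.DOTALL)
    if match is None:
        return 0.0
    return 1.0 if match.group(1).strip() == expected.strip() else 0.0


def total_reward(completion: str, expected: str) -> float:
    # Hypothetical weighted rubric: correctness counts for more than formatting.
    return 0.2 * format_reward(completion) + 0.8 * answer_reward(completion, expected)
```

For example, `total_reward("<think>2+2</think><answer>4</answer>", "4")` scores 1.0, while a bare `"<answer>4</answer>"` earns only the correctness portion (0.8). Splitting the score into partial credits gives the model a graded signal instead of a single pass/fail bit.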

In our experience, RFT delivers exceptional results for tasks like code generation, where correctness can be objectively verified through execution, and complex retrieval-augmented generation (RAG) scenarios, where factual accuracy and reasoning quality are paramount. In these areas, we've consistently seen RFT provide a meaningful performance lift over even the most capable base LLMs.
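For code generation, "verified through execution" can be as simple as running the model's code against test cases and rewarding a clean exit. The sketch below is one assumed way to do that, with a hypothetical `execution_reward` function; a separate process with a timeout is not a real sandbox, so untrusted model output should run in a properly isolated environment in practice.

```python
import subprocess
import sys


def execution_reward(generated_code: str, test_code: str, timeout_s: float = 5.0) -> float:
    """Run generated code plus its tests in a fresh Python process.

    Returns 1.0 if the process exits with status 0 (all asserts passed) and 0.0 on
    any exception, failed assert, or timeout. NOTE: a subprocess is not a sandbox.
    """
    program = generated_code + "\n\n" + test_code + "\n"
    try:
        result = subprocess.run(
            [sys.executable, "-c", program],
            capture_output=True,  # keep the child's output out of our logs
            timeout=timeout_s,    # non-terminating code gets zero reward
        )
    except subprocess.TimeoutExpired:
        return 0.0
    return 1.0 if result.returncode == 0 else 0.0
```

A binary pass/fail reward like this is objective and hard to game. A common variant returns the fraction of tests passed to give partial credit.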

What's particularly exciting is that RFT enables continuous improvement: as your reward functions evolve and you gather more feedback data, your models can keep getting better at solving your specific challenges.

Introducing the Complete RFT Platform

We aimed for our platform to be the first to offer two key things:

- Fully managed, serverless infrastructure

- An end-to-end experience that goes from data to high-performance serving on the Predibase Inference Engine

Fully Managed and Serverless

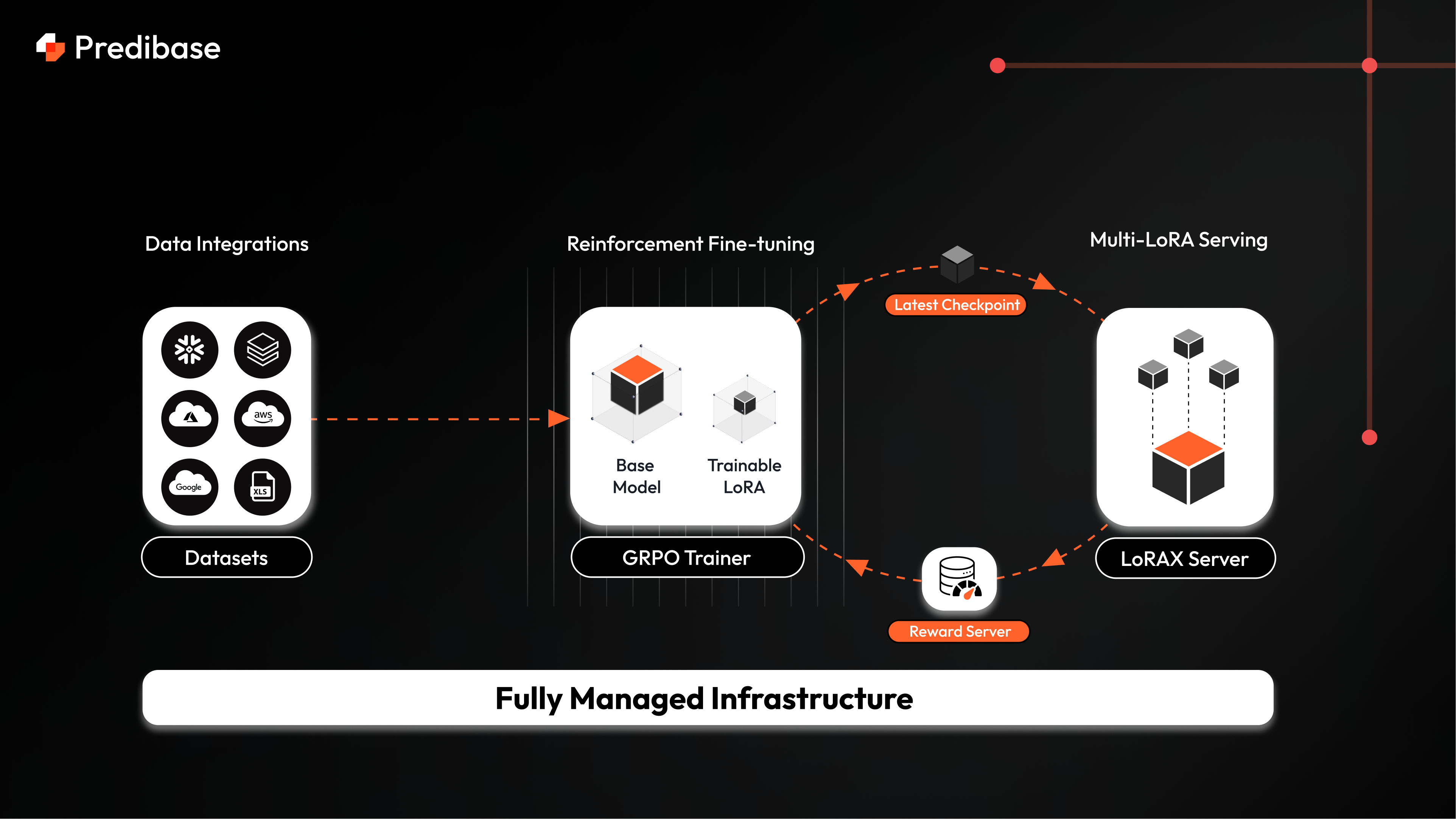

RFT leverages a number of components built natively into the Predibase platform to provide models that continuously learn. At every training step, we create a fine-tuned adapter that reflects the latest stage of training, and our multi-LoRA serving framework, LoRAX, can load each new checkpoint immediately to generate the next set of completions. This lets the service always generate and evaluate with the latest version of the fine-tuned model without waiting on a model reload. We also overlap training and generation with streaming micro-batches, which keeps GPU utilization near 100% during training.
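The overlap of generation and training can be pictured as a producer/consumer pipeline. In this minimal sketch, which is a toy and not Predibase's implementation, threads and `time.sleep` calls stand in for GPU inference and gradient steps. Generation streams micro-batches into a bounded queue while training consumes them, so neither side waits for a full batch to finish.

```python
import queue
import threading
import time

SENTINEL = None  # marks the end of the stream


def generate_micro_batches(prompts, batch_size, out_q, version):
    """Producer: stand-in for the inference server sampling completions."""
    for start in range(0, len(prompts), batch_size):
        batch = prompts[start:start + batch_size]
        time.sleep(0.01)  # simulated generation latency
        # Completions are tagged with whichever adapter version is current.
        out_q.put([f"completion(v{version[0]}) for {p}" for p in batch])
    out_q.put(SENTINEL)


def train_on_stream(in_q, version, trained):
    """Consumer: stand-in for a training step run on each micro-batch as it arrives."""
    while True:
        batch = in_q.get()
        if batch is SENTINEL:
            break
        time.sleep(0.01)  # simulated gradient step
        trained.extend(batch)
        version[0] += 1  # a new checkpoint the generator can pick up


def run_step(prompts, batch_size=2):
    q = queue.Queue(maxsize=2)  # bounded: generation cannot run far ahead of training
    version = [0]
    trained = []
    producer = threading.Thread(target=generate_micro_batches, args=(prompts, batch_size, q, version))
    consumer = threading.Thread(target=train_on_stream, args=(q, version, trained))
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return trained, version[0]
```

While the consumer trains on micro-batch k, the producer is already generating micro-batch k+1. This overlap is what keeps both kinds of work busy.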

We have also found that adding a short supervised fine-tuning warm-up step to the training recipe before starting GRPO improves performance, so we have natively integrated SFT into our RFT workflow. Finally, because every task is unique and often calls for custom reward functions, we’ve built a secure reward server that executes user code in isolated environments, in parallel.
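GRPO, the reinforcement learning step that follows the SFT warm-up, samples several completions per prompt and scores each one relative to the others in its group. This removes the need for a learned value model. The sketch below shows only that advantage computation, assuming the common reward-minus-mean, divided-by-standard-deviation normalization. It uses the population standard deviation; implementations differ on this detail and on the small `eps` term.

```python
from statistics import mean, pstdev


def grpo_advantages(rewards, eps=1e-6):
    """Group-relative advantages for G completions sampled from the same prompt.

    Completions scoring above the group mean get positive advantages (reinforced),
    those below get negative ones. If every completion scores the same, all
    advantages are zero and the group contributes no learning signal.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]
```

For rewards `[1.0, 0.0, 0.0, 1.0]`, the advantages come out to approximately `[1, -1, -1, 1]`. This is one reason an SFT warm-up helps: if a cold model never produces a correct answer, every group is uniformly zero and GRPO has nothing to learn from.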

End-to-End Platform

In addition to training models with RFT, it’s crucial that our customers have a best-in-class serving solution for every model they create. The Predibase Inference Engine now natively supports all RFT models trained on the platform, and it is compatible with features like deployment monitoring and Turbo LoRA, which accelerates the throughput of reasoning models trained via reinforcement learning.

Starting today with Predibase, you can truly go from data to a deployed model: connect a dataset to Predibase, train with an SFT warm-up, refine with RFT, and then deploy a high-throughput serverless model into production backed by industry-grade SLAs.

Case Study: Beating o1 and DeepSeek-R1 by 3x at GPU Code Generation

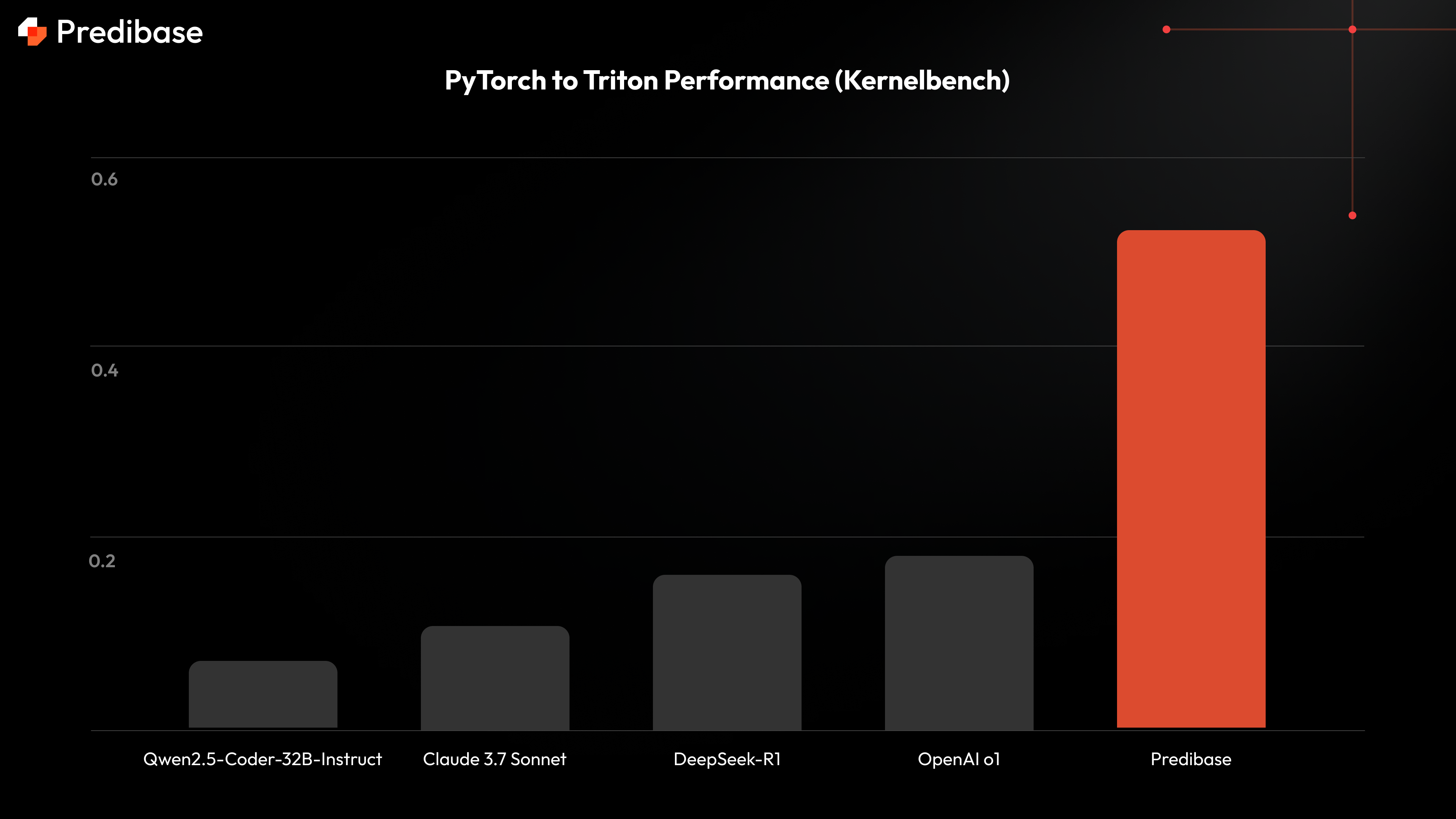

To demonstrate the power of our RFT platform, we developed a specialized model for translating PyTorch code to Triton, a Python-based language for writing GPU kernels that sits between high-level PyTorch and low-level CUDA. Most LLMs struggle with this task, since it requires a deep understanding of both frameworks and complex reasoning about computational efficiency.

Our training process combined cold-start supervised fine-tuning with reinforcement learning (GRPO) and employed curriculum learning to progressively tackle increasingly difficult tasks. We detailed some of these techniques in an earlier blog post.
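Curriculum learning means ordering training data from easy to hard and widening the pool over time. The post doesn't specify how difficulty was measured or how stages were scheduled, so the sketch below is an assumption throughout. The `Task` type and `difficulty` score are hypothetical, and a plausible proxy for difficulty would be one minus the base model's pass rate on that task.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    difficulty: float  # hypothetical score in [0, 1]; higher means harder


def curriculum_schedule(tasks, num_stages):
    """Yield the training pool for each stage, admitting harder tasks over time.

    Stage k trains on the easiest (k + 1) / num_stages fraction of tasks,
    so the final stage covers the full dataset.
    """
    ordered = sorted(tasks, key=lambda t: t.difficulty)
    for stage in range(num_stages):
        cutoff = max(1, round(len(ordered) * (stage + 1) / num_stages))
        yield ordered[:cutoff]
```

Keeping earlier, easier tasks in later stages (rather than swapping them out) is one design choice that helps guard against the model forgetting what it learned first.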

We ran our benchmarks on KernelBench, a dataset of 250 diverse tasks designed to assess an LLM’s ability to transpile code into a valid, efficient kernel. Our model delivered remarkable results.

In our implementation, we fine-tuned Qwen2.5-Coder-32B-Instruct, a relatively small model that fits on a single GPU, and benchmarked its kernel correctness against larger foundation models including DeepSeek-R1, Claude 3.7 Sonnet, and OpenAI o1.
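"Kernel correctness" generally means the generated kernel produces the same outputs as the reference implementation, within a numerical tolerance, on randomly generated inputs. The sketch below illustrates that idea using plain Python lists in place of tensors, with hypothetical function names and tolerances. KernelBench's actual harness runs PyTorch on a GPU and may use different tolerances and input shapes.

```python
import math
import random


def outputs_match(ref, cand, rtol=1e-5, atol=1e-8):
    """Elementwise closeness check using math.isclose with relative and absolute tolerances."""
    return len(ref) == len(cand) and all(
        math.isclose(a, b, rel_tol=rtol, abs_tol=atol) for a, b in zip(ref, cand)
    )


def is_correct(reference_fn, candidate_fn, make_input, trials=5, seed=0):
    """True if candidate_fn matches reference_fn on several seeded random inputs."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = make_input(rng)
        try:
            got = candidate_fn(x)
        except Exception:
            return False  # a kernel that crashes counts as incorrect
        if not outputs_match(reference_fn(x), got):
            return False
    return True
```

For instance, with a ReLU reference `lambda x: [max(0.0, v) for v in x]`, a candidate `lambda x: [v if v > 0 else 0.0 for v in x]` passes, while a buggy `lambda x: [abs(v) for v in x]` fails as soon as an input contains a negative value.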

What makes these results particularly impressive is that our model achieved 3x the correctness of OpenAI o1 and DeepSeek-R1 and more than 4x that of Claude 3.7 Sonnet, despite having a footprint an order of magnitude smaller.

We’re excited to open source our model today on Hugging Face, alongside the launch of RFT in the platform.

The future of LLM customization is here

Reinforcement fine-tuning represents a significant leap forward in the evolution of LLMs, enabling models to learn from reward functions rather than just labeled examples. With Predibase's end-to-end RFT platform, we're making this powerful technique accessible to developers and enterprises alike.

Our platform eliminates the infrastructure complexity and technical barriers so you can just focus on building your next breakthrough. By combining fully-managed, serverless infrastructure with an integrated workflow from data to deployment, we're enabling organizations to build high-performing custom models even with limited labeled data.

The results speak for themselves: our PyTorch-to-Triton model demonstrates that RFT can produce specialized models that outperform much larger foundation models on specific tasks, while requiring significantly fewer resources.

Today, we’re releasing both v1 of the platform and a sneak peek at some of the features we’re actively developing, like natural language reward functions. We invite you to experience the power of reinforcement fine-tuning for yourself or request a demo to see the latest applications of the technology. Sign up today or join our webinar on April 3, where we'll demonstrate how to build custom models using our RFT platform.

The future of model customization is here, and it's powered by reinforcement fine-tuning on Predibase. Read a deep dive on reinforcement fine-tuning and reinforcement learning in this blog.

FAQ

How is RFT different from traditional fine-tuning?

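Traditional supervised fine-tuning (SFT) learns by imitating a labeled dataset of input-output examples, so it typically needs a large set of high-quality labels. RFT instead has the model generate its own outputs, scores them with reward functions (which can be programmatic checks or a learned reward model), and updates the model to favor higher-scoring behavior. This dramatically reduces labeled-data requirements while improving accuracy.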

What are reward functions in LLM fine-tuning?

Reward functions are models or heuristics that assign scores to generated outputs based on how well they match desired behavior. In RFT, these reward signals replace the need for extensive labels, enabling the model to learn from feedback instead of just examples.

Can I use RFT to fine-tune Mistral or Llama models?

Yes, RFT on Predibase supports fine-tuning of popular open-source LLMs like Mistral and Llama. It’s ideal for customizing these models to your specific domain or task with minimal data.

Is Reinforcement Fine-Tuning better than LoRA?

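RFT and LoRA are not alternatives; they solve different problems. LoRA is a parameter-efficient technique that reduces the number of trainable parameters during fine-tuning, while RFT is a training approach that optimizes the model against reward functions. The two are complementary: RFT on Predibase trains LoRA adapters, which LoRAX then serves. The more useful comparison is RFT versus SFT, and in practice RFT can outperform SFT on tasks where behavior alignment is key and labeled data is scarce.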
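To see what "reducing the number of trainable parameters" means, compare fine-tuning a weight matrix W directly with training a LoRA update W + BA, where B and A are thin low-rank matrices. The 4096 x 4096 shape and rank 16 below are illustrative values, not a statement about any particular model or Predibase default.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when updating a dense weight matrix W (d_out x d_in) directly."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a LoRA update B @ A, with B: d_out x rank and A: rank x d_in."""
    return rank * (d_out + d_in)


full = full_params(4096, 4096)
lora = lora_params(4096, 4096, rank=16)
print(full, lora, f"{lora / full:.2%}")  # 16777216 131072 0.78%
```

LoRA determines how many parameters are trained. SFT or RFT determines what objective trains them, which is why the two combine naturally.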

How much data do I need for RFT?

You can fine-tune a model with as few as 10–100 labeled examples using RFT on Predibase. It’s built to deliver high accuracy without the traditional data burden.

Does RFT affect the base model’s performance on general tasks?

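Generally, no. RFT fine-tunes models in a controlled way that focuses on behavior alignment for specific tasks, and because training produces a LoRA adapter, the base model’s weights are left unchanged. As with any fine-tuning, it is still worth checking the adapter on the general tasks you rely on.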