Your Data. Your Cloud. Our Best-In-Class Infra.

Don’t trade performance for security. Unlock the full potential of open-source AI with our intelligent, scalable, and fully managed platform for training and serving small language models (SLMs), all within your private cloud.

Secured in Your Cloud

Train and serve within your cloud—we don’t see, move, or store any data. Predibase is secure and SOC-2 compliant with support for AWS, GCP, and Azure.

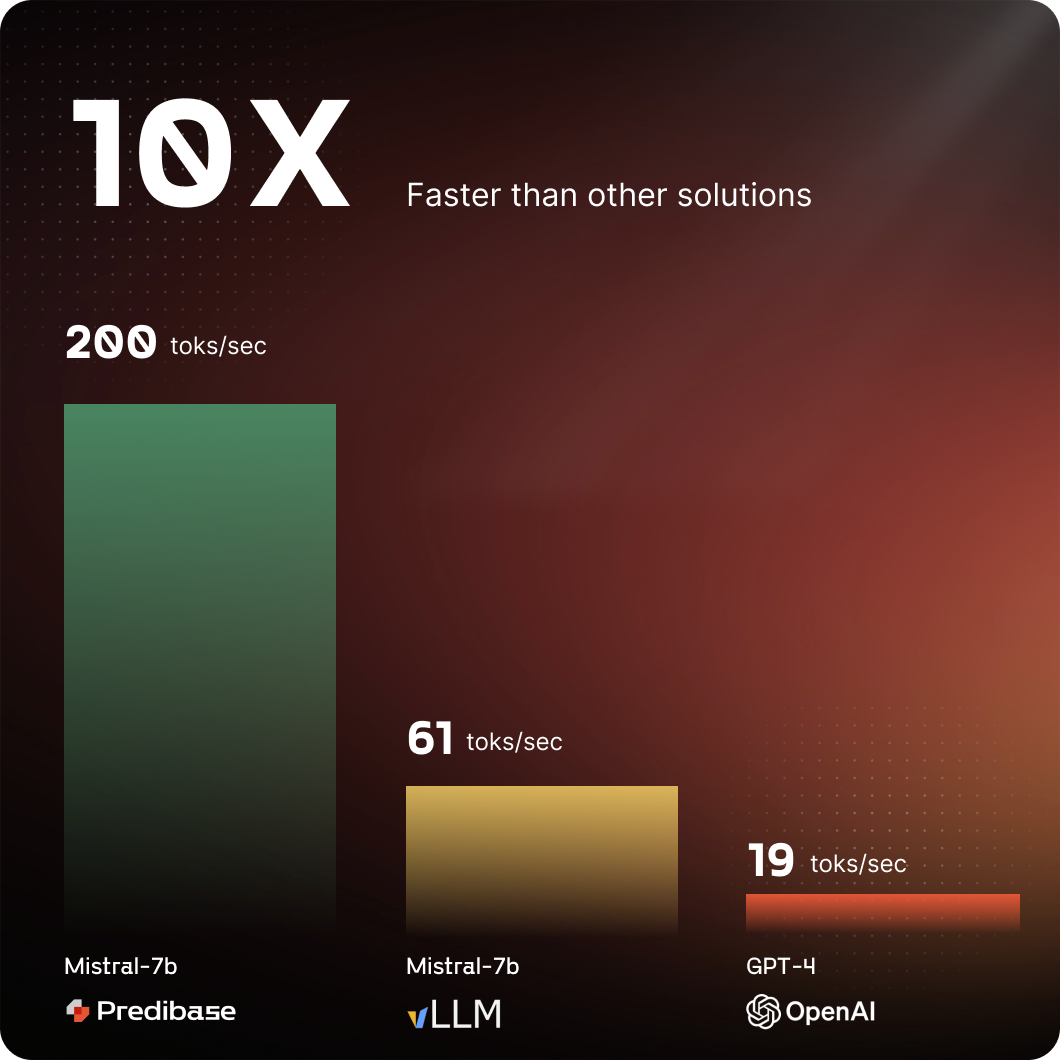

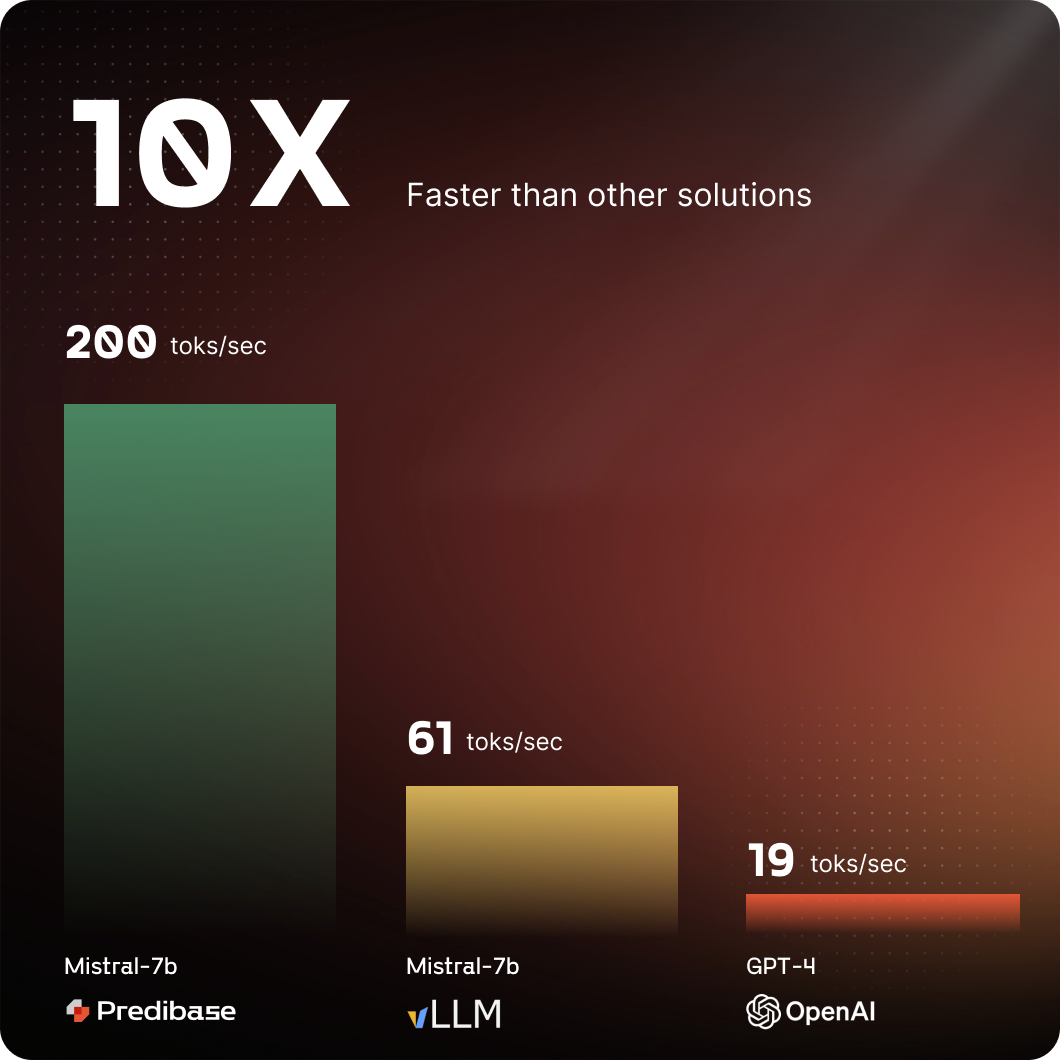

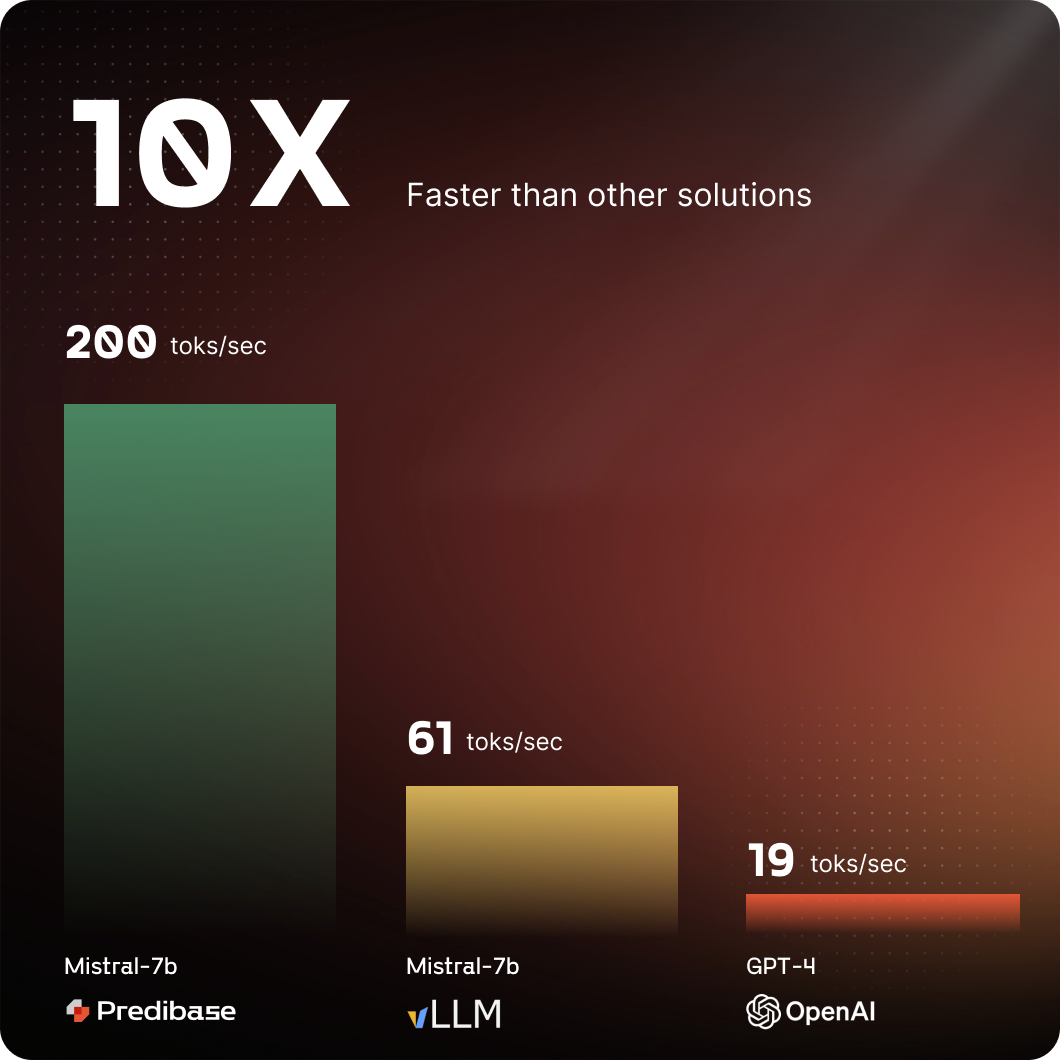

Best-in-Class Performance

Fine-tune high-quality LoRA adapters that outperform GPT-4 and achieve the fastest possible inference with Turbo LoRA and LoRAX.

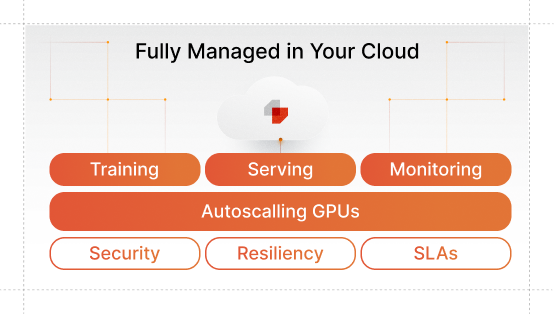

Simplified Management

Take advantage of a fully managed enterprise-grade AI platform that seamlessly autoscales for your specific needs with guaranteed SLAs.

With Predibase, I didn’t need separate infrastructure for every fine-tuned model, and training became incredibly cost-effective—tens of dollars, not hundreds of thousands. This combined unlocked a new wave of automation use cases that were previously uneconomical.

Paul Beswick, Global CIO at Marsh McLennan

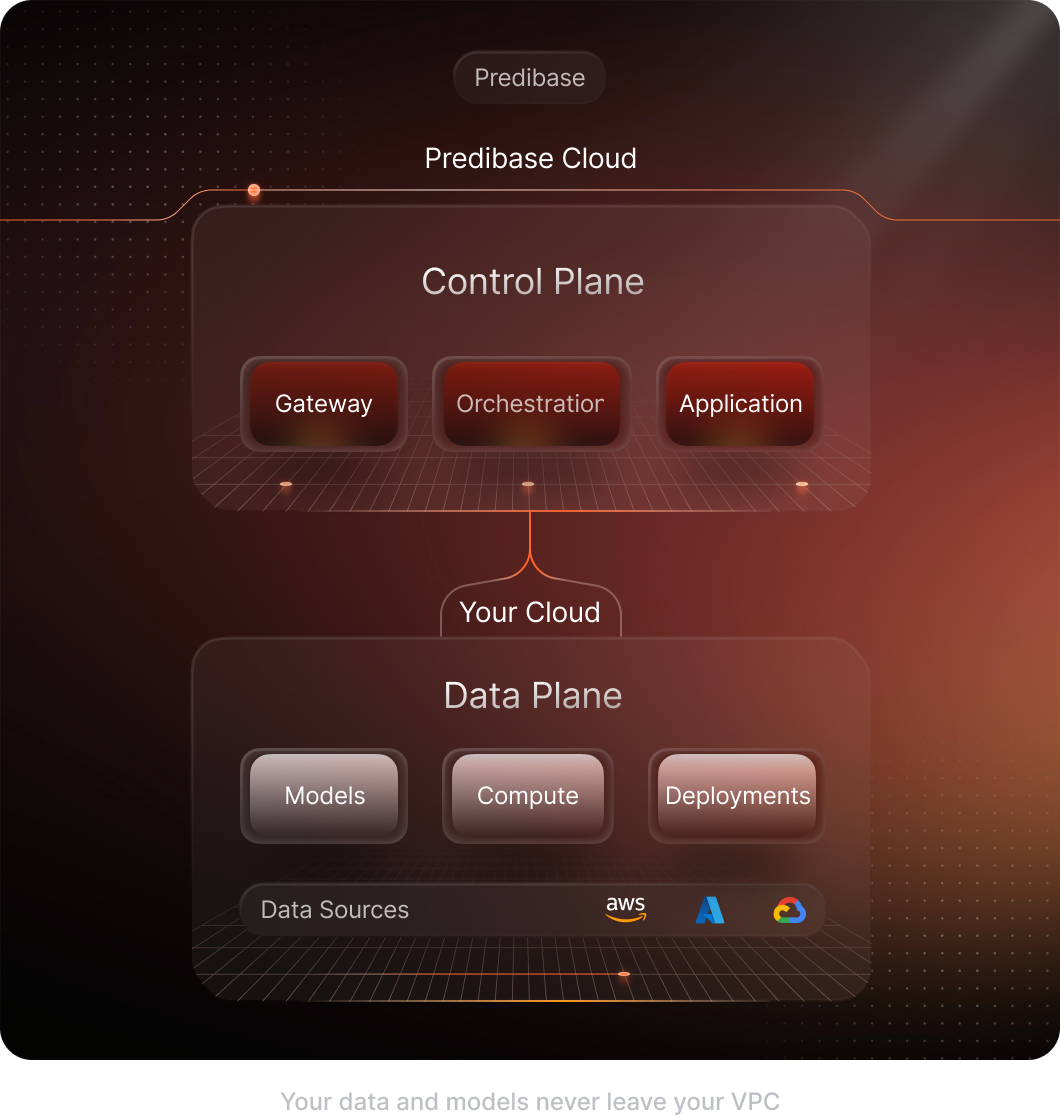

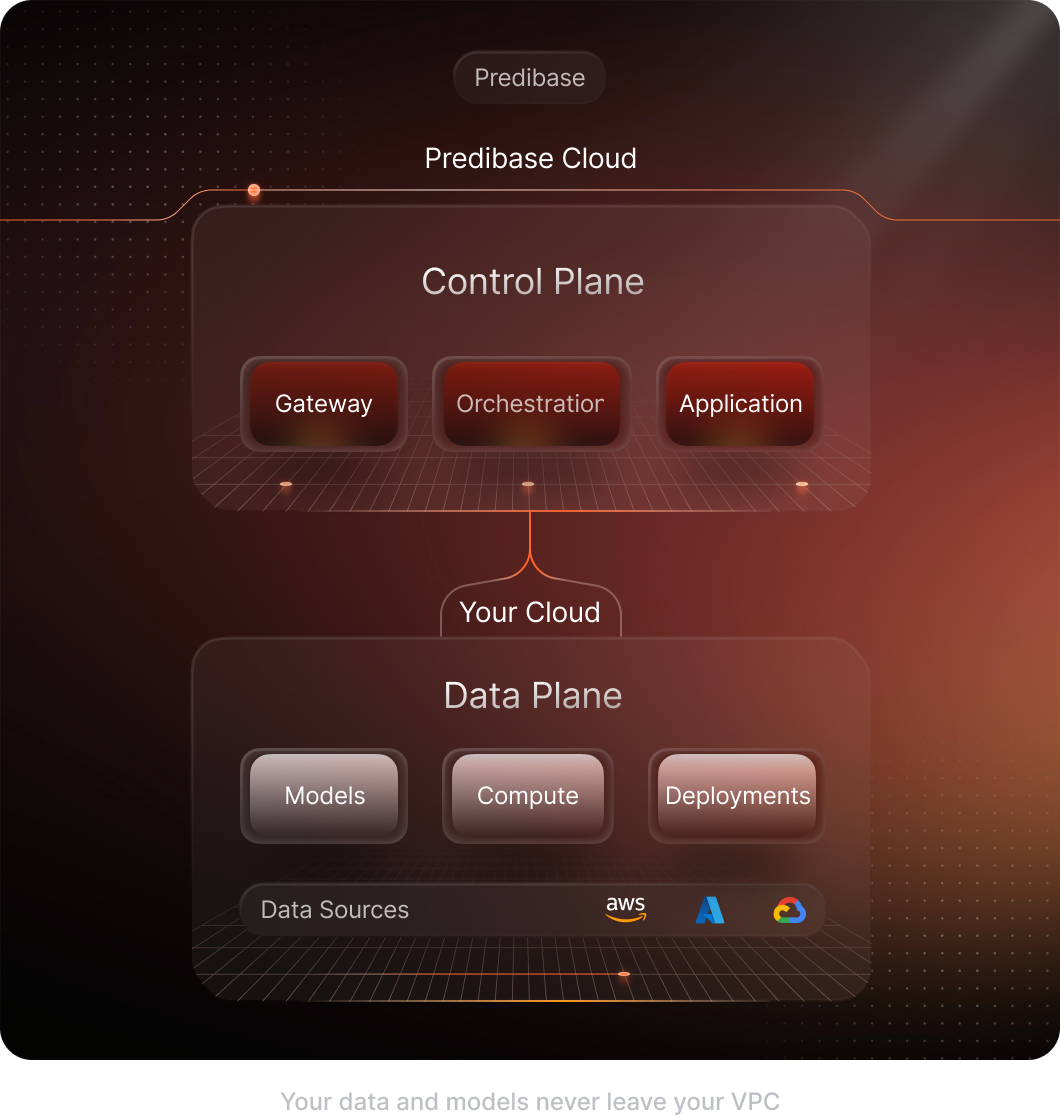

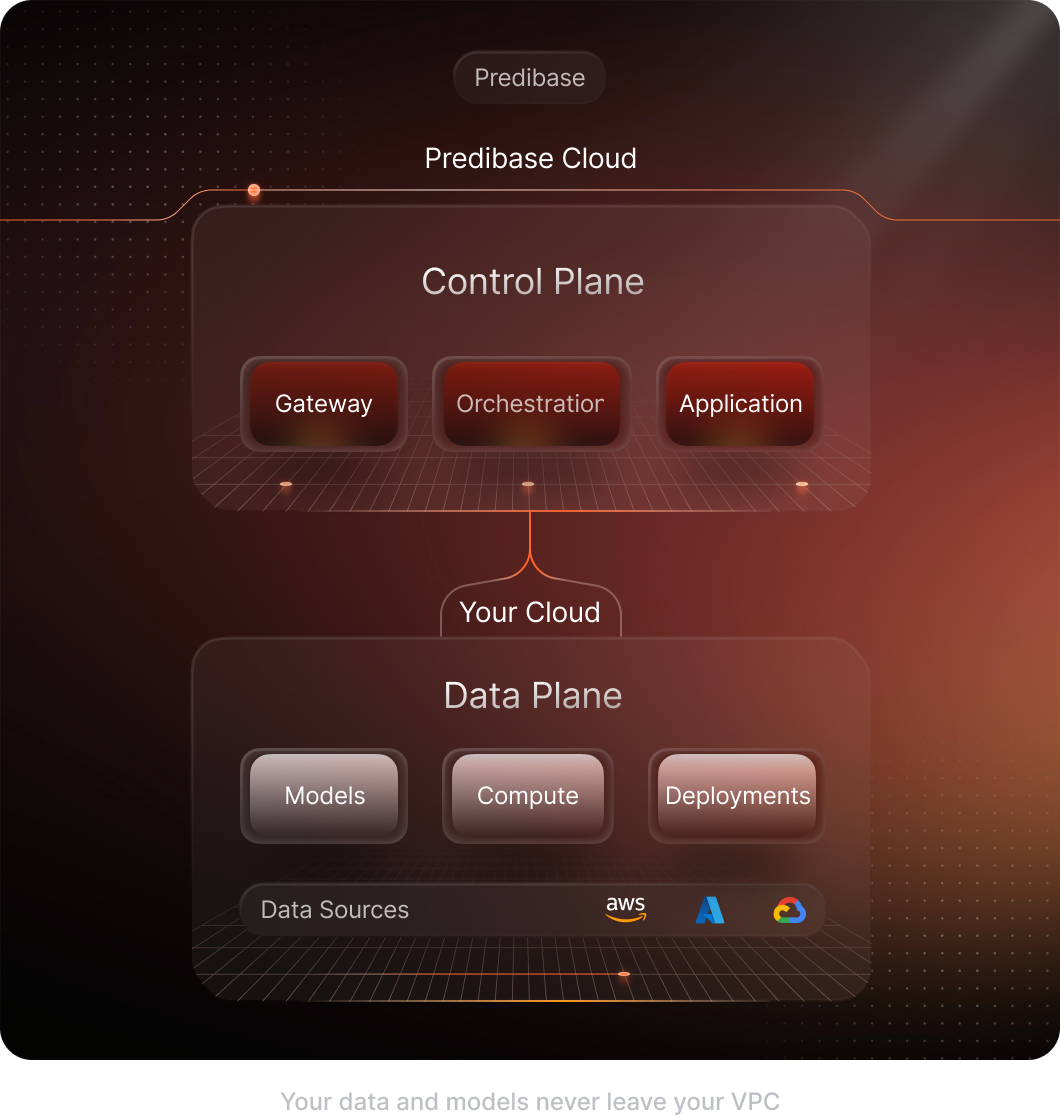

Secure and Private by Design

Everything in Your Cloud

Your models stay within your secure private cloud on AWS, Azure, or GCP.

Bring Infra to Your Data

Deploy our fine-tuning and serving stack directly in your VPC without moving your data.

We Don’t See Anything

Only queries made via the Predibase UI ever leave your cloud instance. Nothing is retained, and we never see queries made via the SDK.

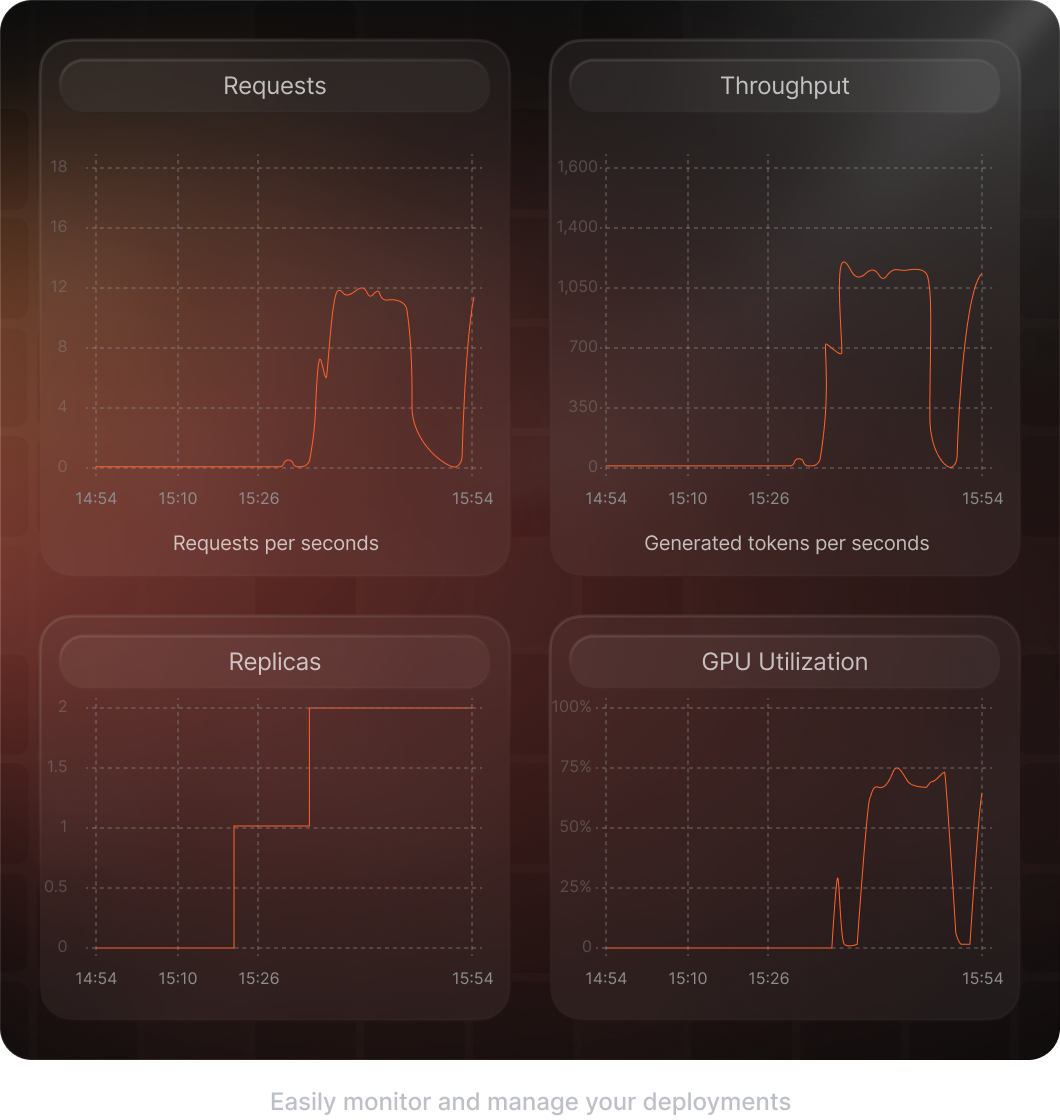

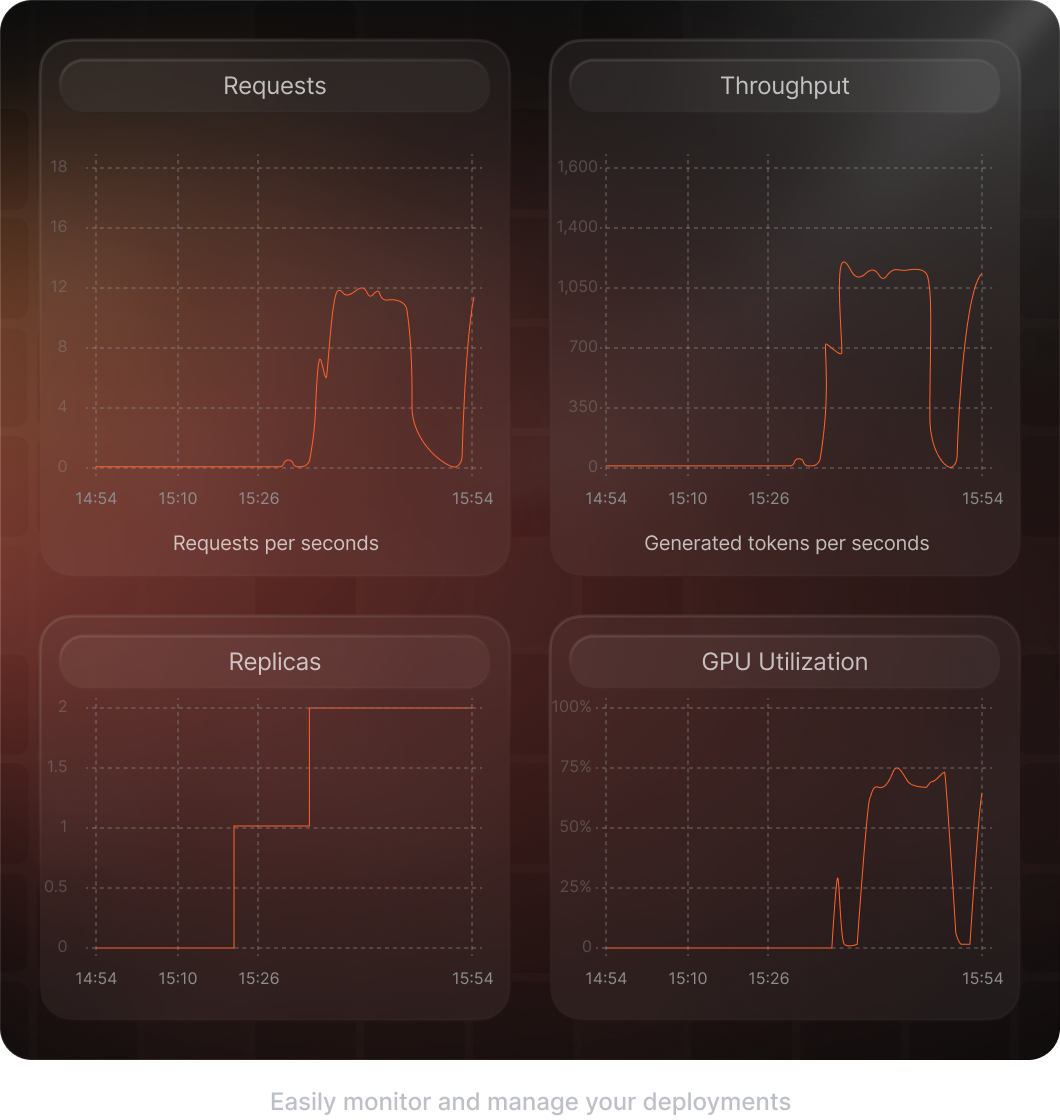

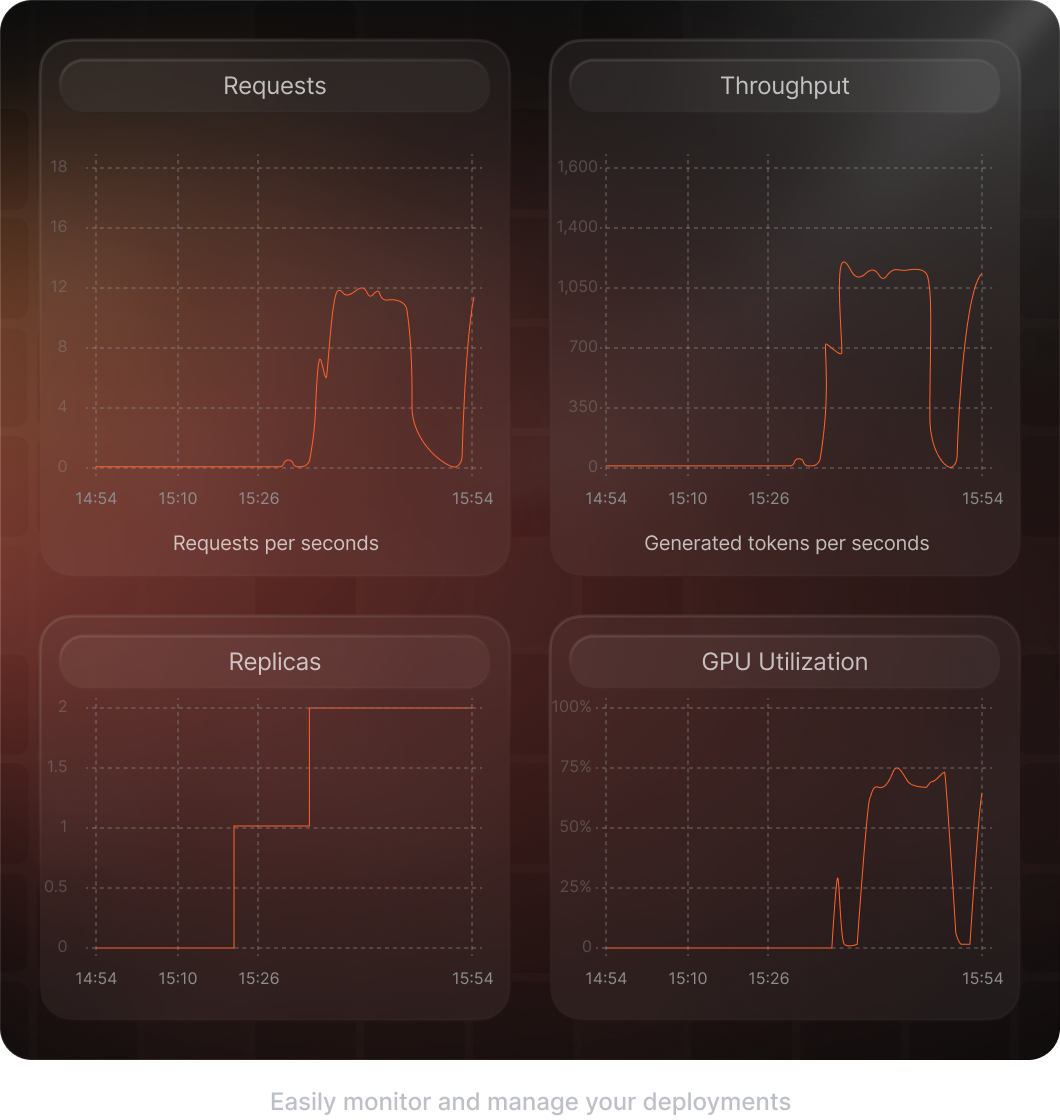

Production-Ready AI

Built for Production

Our intelligent infrastructure scales up automatically for jobs of any size and back down when demand drops to reduce costs.
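To make the scale-up and scale-down idea concrete, here is a minimal Python sketch of a generic load-based replica calculation. It is not Predibase's actual autoscaling policy, which this page does not describe; the function name `desired_replicas` and every parameter and default are hypothetical, chosen only for illustration.

```python
import math


def desired_replicas(
    pending_requests: int,
    requests_per_replica: int,
    min_replicas: int = 0,   # hypothetical default: allow scaling to zero when idle
    max_replicas: int = 8,   # hypothetical default: cap spend on GPUs
) -> int:
    """Return how many replicas a simple load-based policy would ask for."""
    if requests_per_replica <= 0:
        raise ValueError("requests_per_replica must be positive")
    # Enough replicas to cover the backlog, rounded up.
    needed = math.ceil(pending_requests / requests_per_replica)
    # Clamp to the configured floor and ceiling.
    return max(min_replicas, min(max_replicas, needed))
```

Under these assumptions, an idle deployment with `min_replicas=0` drops to zero replicas, while a burst of traffic is capped at `max_replicas`, which is the cost-versus-capacity trade-off that autoscaling manages.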

Deployments You Can Trust

Maintain seamless service with multi-region deployments, fail-over protection, and guaranteed SLAs. Real-time dashboards make it easy to monitor your production jobs.

End-to-End AI Platform

Predibase uses Terraform to spin up a Kubernetes cluster in your account, which includes model training and serving on the latest GPUs.

First-Class Fine-tuning and Inference

Superior Developer Experience

Fine-tune, serve, and manage your SLM deployments in a few lines of code or via our user-friendly UI.

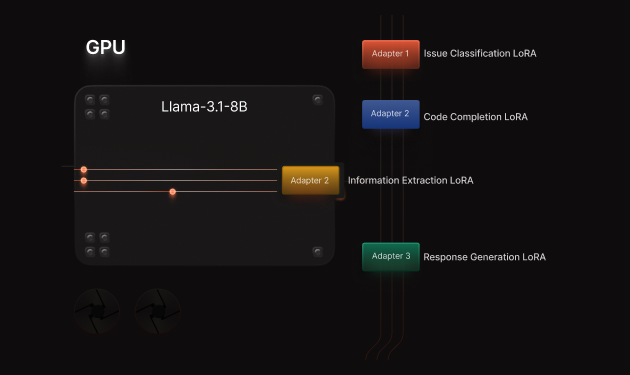

Fastest Multi-LoRA Inference

Accelerate inference by up to 10x and serve many LoRA adapters on a single GPU with Turbo LoRA and LoRAX.
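Why can many adapters share one GPU? A LoRA adapter stores only two small low-rank matrices per adapted layer, so the large base weights can be loaded once and reused for every request. The toy Python sketch below illustrates that idea for a single linear layer. It is not LoRAX or Turbo LoRA code; the class names `LoraAdapter` and `MultiAdapterLayer` and the adapter names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


@dataclass
class LoraAdapter:
    a: Matrix           # shape (rank, d_in): projects the input down to `rank` dims
    b: Matrix           # shape (d_out, rank): projects back up to d_out
    scale: float = 1.0  # scaling factor applied to the low-rank update

    def delta(self, x: Vector) -> Vector:
        """Low-rank update: scale * B (A x)."""
        return [self.scale * y for y in matvec(self.b, matvec(self.a, x))]


class MultiAdapterLayer:
    """One shared base weight plus any number of small named adapters."""

    def __init__(self, base_weight: Matrix) -> None:
        self.base_weight = base_weight  # stored once, shared by all adapters
        self.adapters: Dict[str, LoraAdapter] = {}

    def register(self, name: str, adapter: LoraAdapter) -> None:
        self.adapters[name] = adapter

    def forward(self, x: Vector, adapter_name: Optional[str] = None) -> Vector:
        y = matvec(self.base_weight, x)  # base model output
        if adapter_name is None:
            return y
        d = self.adapters[adapter_name].delta(x)  # per-request adapter update
        return [yi + di for yi, di in zip(y, d)]


# Hypothetical usage: two adapters served from the same base weights.
layer = MultiAdapterLayer(base_weight=[[1.0, 0.0], [0.0, 1.0]])
layer.register("support", LoraAdapter(a=[[1.0, 1.0]], b=[[1.0], [0.0]]))
layer.register("billing", LoraAdapter(a=[[1.0, -1.0]], b=[[0.0], [2.0]]))
print(layer.forward([1.0, 2.0], "support"))  # [4.0, 2.0]
print(layer.forward([1.0, 2.0], "billing"))  # [1.0, 0.0]
```

In this toy the matrices are tiny, but at realistic sizes a rank-`r` adapter holds `r * (d_in + d_out)` values against `d_in * d_out` for the base weight, which is why many adapters fit alongside a single copy of the base model.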

Higher Quality Models

Improve model accuracy by 10-15% and speed up training by 6x compared to Hugging Face defaults.

Learn More

Predibase Fine-tuning

Learn more about Predibase’s best-in-class platform for training LoRA adapters that outperform GPT-4 for your use case.

Predibase Serving

Learn how we make it easy to serve hundreds of adapters faster and at a fraction of the cost with our multi-LoRA inference platform.

Case Study: Convirza

Learn how Convirza reduced costs by 10x and improved the accuracy of their SLMs by 8% by migrating from OpenAI to Predibase.
