Train the highest-quality LLMs

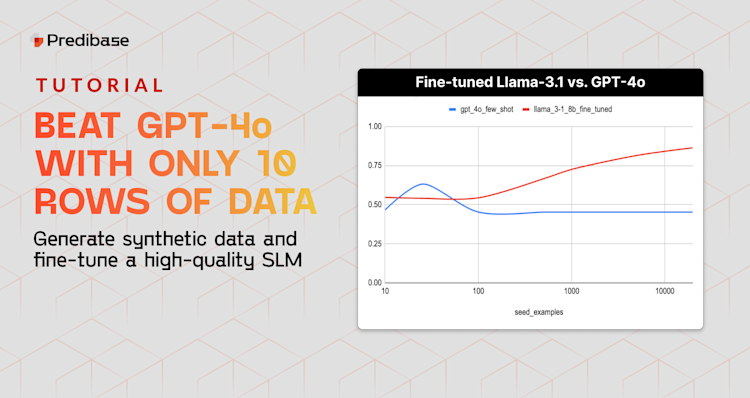

Train task-specific LLMs that outperform GPT-4, now with Reinforcement Fine-Tuning

Introducing Reinforcement Fine-Tuning

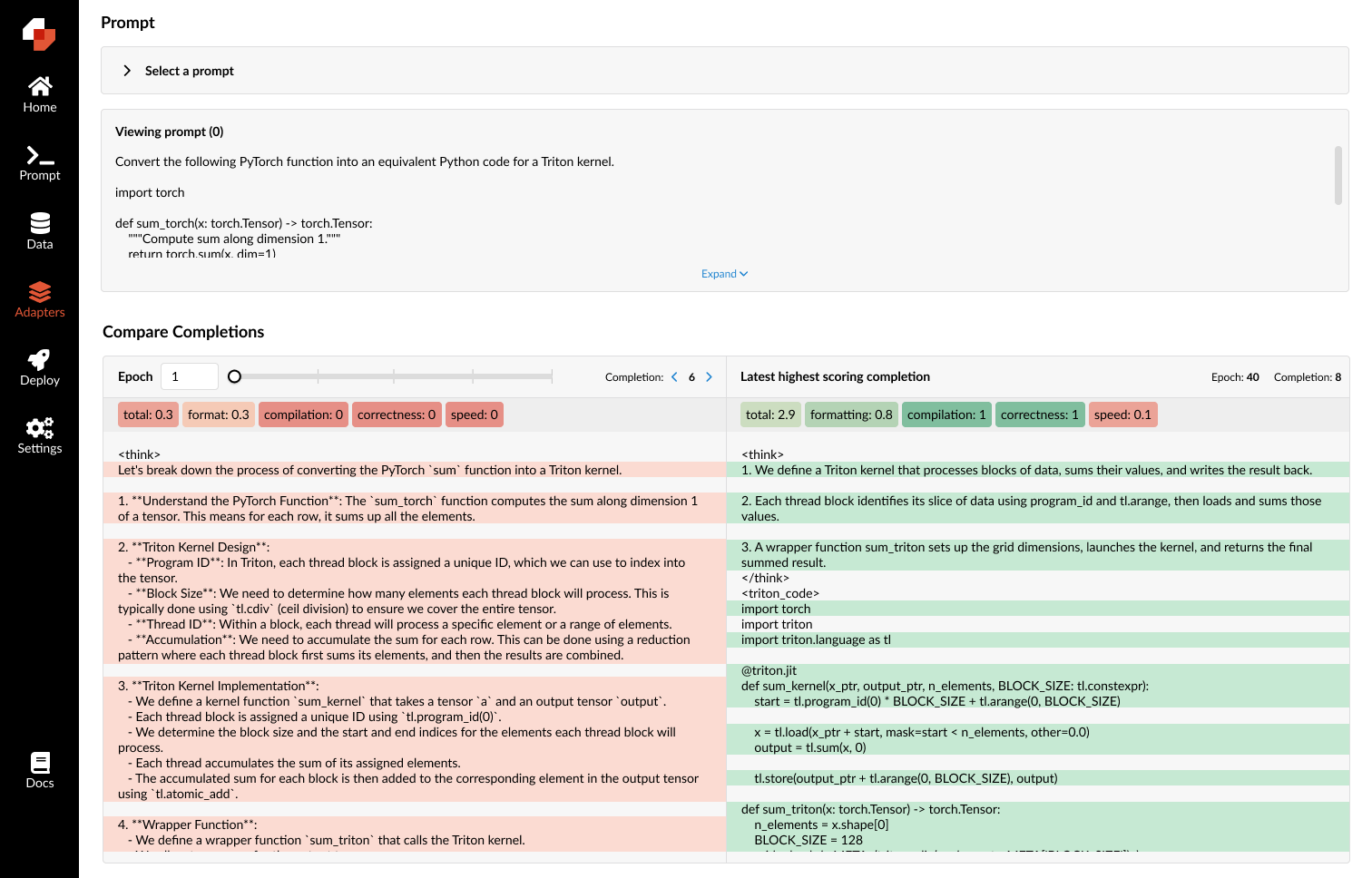

- Intuitive UI

Start training LLMs with RFT and track how your model learns in our end-to-end fine-tuning UI.

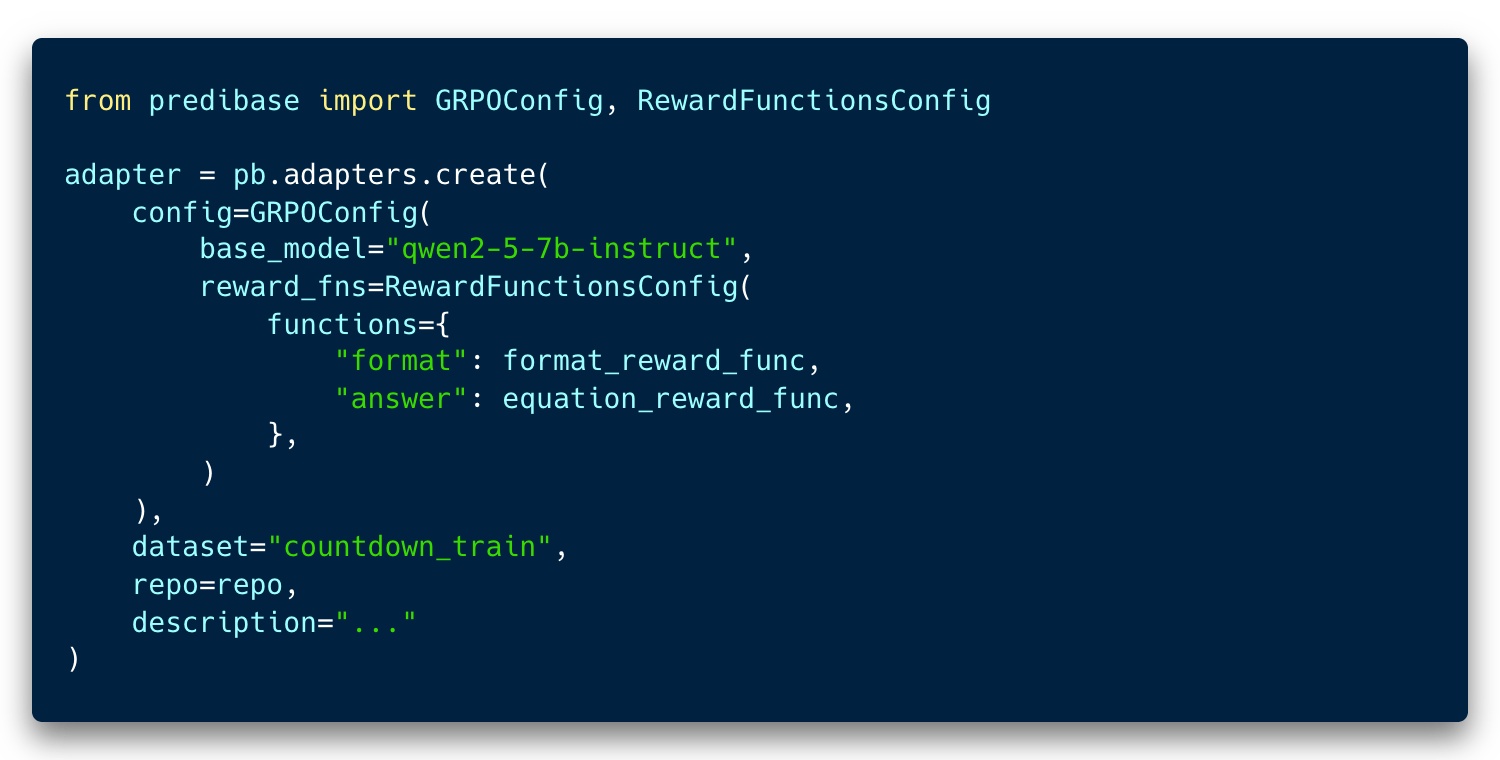

- GRPO SDK

Refine reward functions and customize hundreds of training parameters via the SDK to optimize model performance.
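In RFT, the reward function is the part you iterate on. It scores each completion the model samples, and GRPO turns those scores into a training signal by comparing completions generated for the same prompt. The sketch below assumes a reward function is a plain Python callable taking `(prompt, completion, example)` and returning a float. That signature, the `<answer>` tag convention, and the 0.1 partial-credit value are hypothetical illustrations, not the Predibase SDK's API. The advantage function follows the standard GRPO normalization: each reward minus the group mean, divided by the group standard deviation.

```python
import re
import statistics

# Hypothetical signature (not the Predibase SDK interface): the reward function
# receives the prompt, the model's generated completion, and the dataset row.
def answer_reward(prompt: str, completion: str, example: dict) -> float:
    """Score a completion: 1.0 if the <answer> tag holds the reference answer,
    0.1 if the tag is present but the answer is wrong, 0.0 if there is no tag."""
    match = re.search(r"<answer>(.*?)</answer>", completion, flags=re.DOTALL)
    if match is None:
        return 0.0  # unparseable output earns nothing
    if match.group(1).strip() == str(example["answer"]).strip():
        return 1.0  # correct format and correct answer
    return 0.1  # small reward for following the output format


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantages: compare each completion's reward with the mean and
    standard deviation of the group of completions sampled for the same prompt."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    example = {"answer": "42"}
    group = [
        "6 * 7 = <answer>42</answer>",
        "<answer>41</answer>",
        "It is probably 42.",
    ]
    rewards = [answer_reward("What is 6 * 7?", c, example) for c in group]
    print(rewards)  # [1.0, 0.1, 0.0]
    print(group_relative_advantages(rewards))
```

Because advantages are relative within a group, a reward function mainly needs to rank completions sensibly. If every completion in a group gets the same reward, all advantages are zero and that prompt contributes no learning signal.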


Create Reward Functions with NLP (Coming Soon)

Creating reward functions has never been easier. Simply describe your desired outcome in natural language, and Predibase generates the reward function for you—streamlining your reinforcement fine-tuning workflow dramatically.

Fine-Tune Any Leading Model

Choose the base model that's best for your use case from a wide selection of LLMs including Upstage's Solar LLM and open-source LLMs like Llama-3, Phi-3, and Zephyr. You can also bring your own model and serve it as a dedicated deployment.

See the full list of supported models.

First-Class Fine-Tuning Experience

Powerful training engines

Fine-tuning uses A100 GPUs by default, but you can choose other hardware to further optimize for cost or speed.

Serverless fine-tuning infra

Pay per token to ensure you’re only charged for what you use. See our pricing.

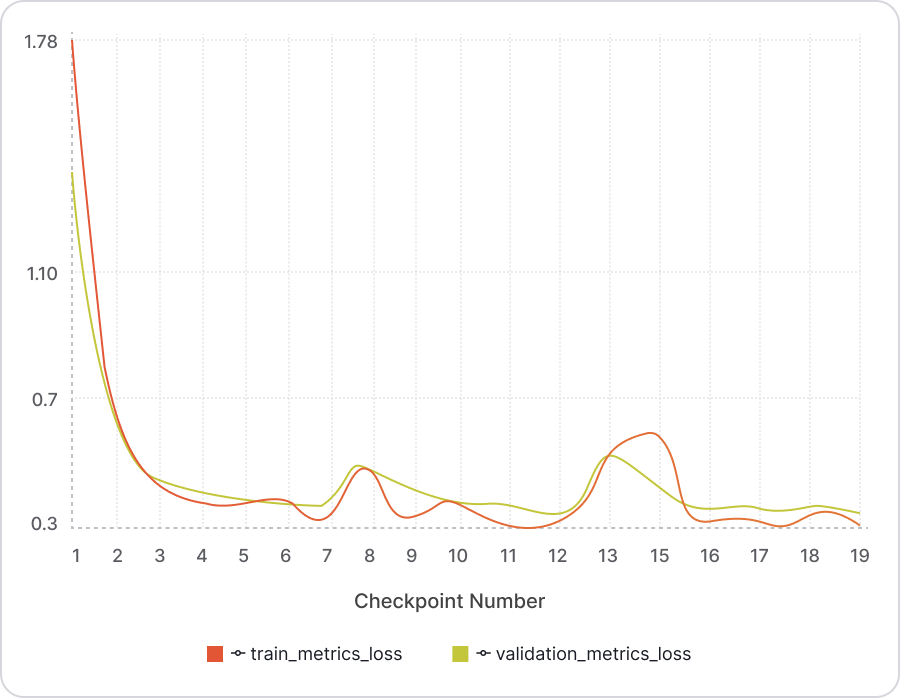

View essential metrics as you train

Track learning curves in real time as your adapter trains to ensure everything is on track.

Resume training from checkpoints

No need to restart a training job from the beginning if it hits an error or you’re not happy with its performance; resume from a saved checkpoint instead.
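Resuming works because the training state is saved periodically, so a restarted job continues from the last save rather than from step 0. Here the state is just a step counter and one weight; in a real job it would include adapter weights, optimizer state, and data position. This is a minimal, framework-free sketch of the pattern. The JSON file format, the `save_every` interval, and the toy one-parameter model are hypothetical, and this is not how Predibase implements checkpoints internally.

```python
import json
import os
import tempfile
from typing import Optional


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temp file, then atomically replace, so a crash mid-write
    never corrupts the last good checkpoint."""
    fd, tmp_path = tempfile.mkstemp(dir=os.path.dirname(os.path.abspath(path)))
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)


def load_checkpoint(path: str) -> Optional[dict]:
    """Return the saved state, or None if no checkpoint exists yet."""
    if not os.path.exists(path):
        return None
    with open(path) as f:
        return json.load(f)


def train(path: str, total_steps: int, save_every: int = 10,
          lr: float = 0.1, fail_at: Optional[int] = None) -> dict:
    """Toy gradient descent on f(w) = (w - 3)^2 that resumes from the latest
    checkpoint when one exists. `fail_at` simulates a crash for the demo."""
    state = load_checkpoint(path) or {"step": 0, "w": 0.0}
    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError(f"simulated failure at step {state['step']}")
        grad = 2.0 * (state["w"] - 3.0)
        state["w"] -= lr * grad
        state["step"] += 1
        if state["step"] % save_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state


if __name__ == "__main__":
    ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.json")
    try:
        train(ckpt, total_steps=50, fail_at=25)
    except RuntimeError as err:
        print(err)  # simulated failure at step 25
    print(load_checkpoint(ckpt)["step"])  # 20, the last save before the crash
    print(train(ckpt, total_steps=50)["step"])  # 50, after resuming from step 20
```

Steps 21 to 25 are recomputed after the restart, so the checkpoint interval trades extra writes against how much work a failure can cost.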

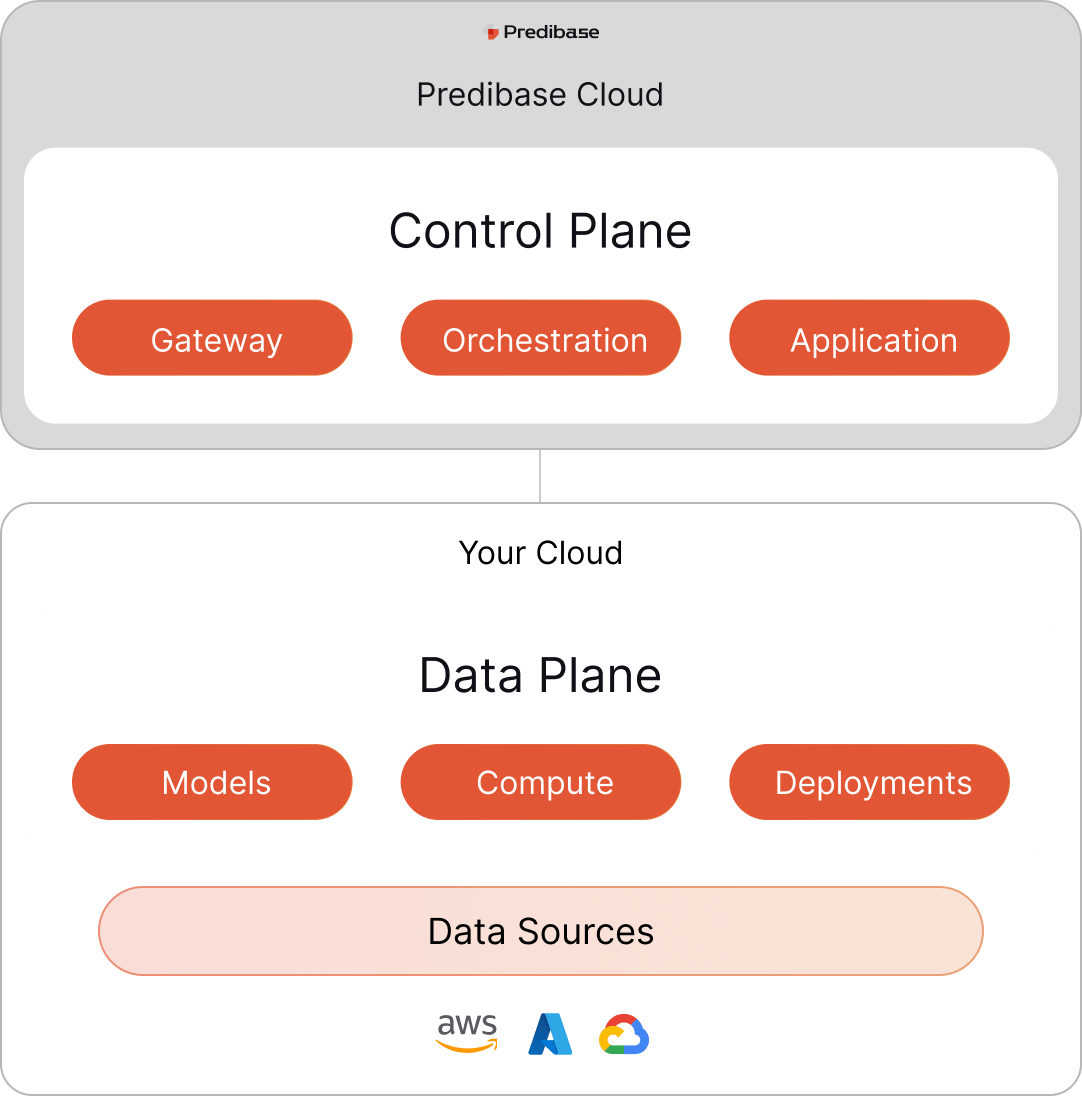

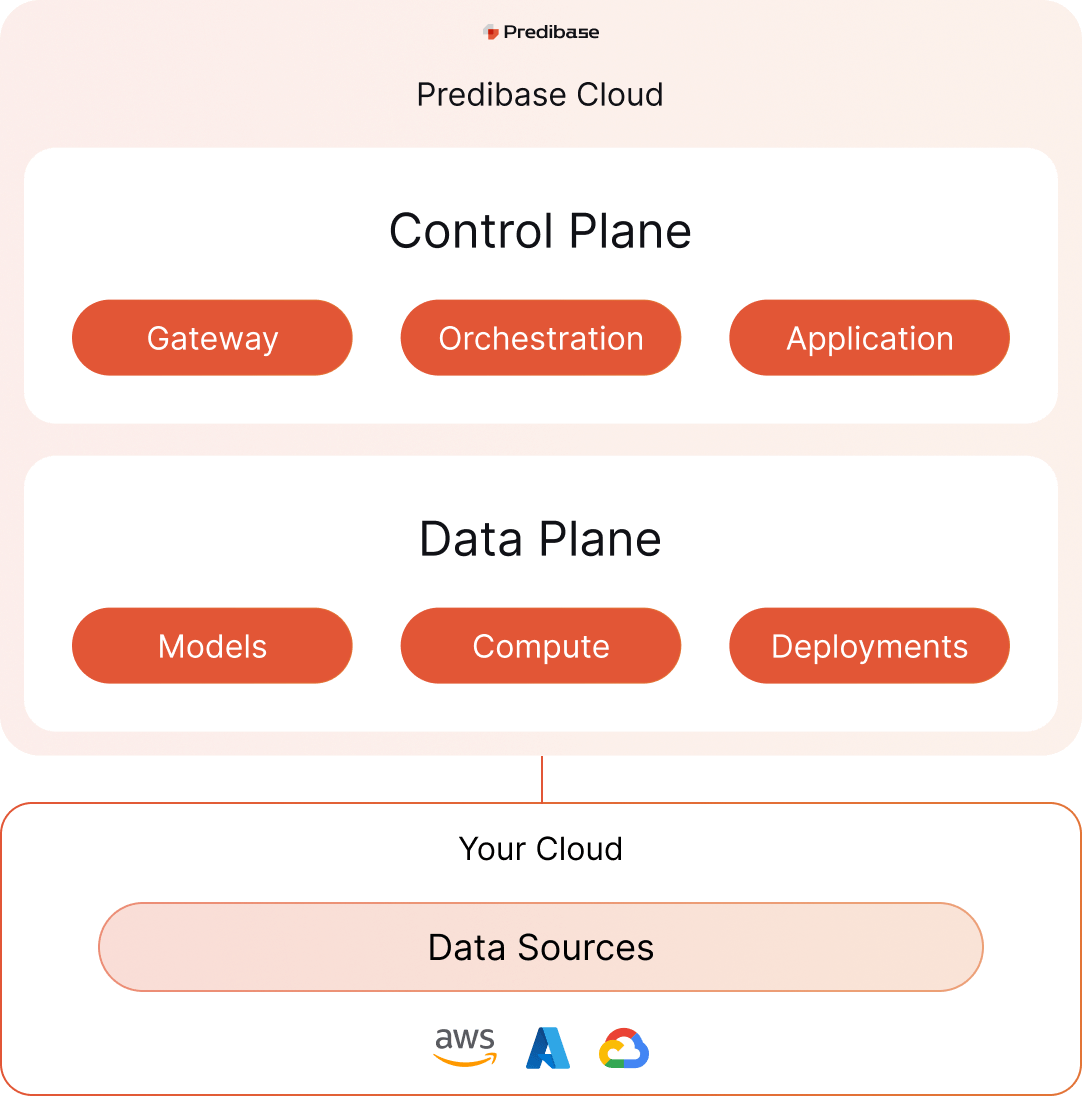

Your Data Stays Your Data

Whether you use our serverless fine-tuning infra or are running Predibase in your VPC, Predibase ensures your privacy by never retaining your data.


Fine-Tune One Base Model For Every Task Type & Serve From A Single Deployment

Completions

Leverage continued pre-training to teach your LLM the nuances of domain-specific language with unlabeled datasets.

{"text": .....}
{"text": .....}
{"text": .....}
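Completions data needs no labels: each row is a slice of raw domain text. Here is a minimal sketch of turning a long document into `{"text": ...}` rows, assuming simple whitespace-based chunking with a hypothetical `max_words` limit. Production pipelines usually chunk by tokenizer tokens so that rows fit the model's context window.

```python
import json


def to_completion_records(document: str, max_words: int = 200) -> list[dict]:
    """Split one unlabeled document into {"text": ...} records of at most
    `max_words` whitespace-separated words each."""
    words = document.split()
    return [
        {"text": " ".join(words[i:i + max_words])}
        for i in range(0, len(words), max_words)
    ]


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)


if __name__ == "__main__":
    corpus = "The indemnifying party shall defend and hold harmless the indemnified party."
    print(to_jsonl(to_completion_records(corpus, max_words=5)), end="")
```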

Instruction Tuning

Train your LLMs on specific tasks with structured datasets consisting of (Input, Output) pairs.

{"prompt": ...., "completion": .....}
{"prompt": ...., "completion": .....}
{"prompt": ...., "completion": .....}
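Instruction-tuning rows pair each input with the output the model should produce. Here is a minimal sketch of converting raw (input, output) pairs into `{"prompt": ..., "completion": ...}` rows. The sentiment task and `PROMPT_TEMPLATE` are hypothetical, and dropping empty pairs is an assumed cleaning step rather than a Predibase requirement.

```python
import json

# Hypothetical prompt template; use whatever instruction format suits your base model.
PROMPT_TEMPLATE = (
    "Classify the sentiment of the review as positive or negative.\n\n"
    "Review: {input}\n\nSentiment:"
)


def to_instruction_records(pairs: list[tuple[str, str]]) -> list[dict]:
    """Turn (input, output) pairs into {"prompt", "completion"} records,
    skipping pairs where either side is empty."""
    records = []
    for source, target in pairs:
        if not source.strip() or not target.strip():
            continue  # nothing to learn from an empty input or output
        records.append({
            "prompt": PROMPT_TEMPLATE.format(input=source.strip()),
            "completion": target.strip(),
        })
    return records


if __name__ == "__main__":
    pairs = [
        ("Great battery life and a bright screen.", "positive"),
        ("Stopped charging after a week.", "negative"),
        ("", "positive"),  # dropped: empty input
    ]
    for record in to_instruction_records(pairs):
        print(json.dumps(record))
```

Keeping the template in one place matters. The same prompt format should be used at inference time; otherwise the fine-tuned model sees inputs unlike the ones it was trained on.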

Direct Preference Optimization (DPO)

Align your model’s outputs with human preferences using a preference dataset of prompts, each paired with a preferred ("chosen") and a dispreferred ("rejected") response.

{"prompt": ...., "chosen": ....., "rejected": ......}
{"prompt": ...., "chosen": ....., "rejected": ......}
{"prompt": ...., "chosen": ....., "rejected": ......}
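DPO needs both responses because it learns from the difference between them. The sketch below has two parts. The first is a row validator that assumes the `prompt`/`chosen`/`rejected` keys; treating identical responses as unusable is an assumption. The second computes the standard per-example DPO objective from summed log-probabilities under the model being trained and a frozen reference model. The `beta=0.1` default is illustrative, and this is not presented as Predibase's training code.

```python
import json
import math

REQUIRED_KEYS = ("prompt", "chosen", "rejected")


def validate_preference_row(line: str) -> dict:
    """Parse one JSONL row and check it holds a usable (prompt, chosen, rejected) triple."""
    row = json.loads(line)
    missing = [k for k in REQUIRED_KEYS
               if not isinstance(row.get(k), str) or not row[k].strip()]
    if missing:
        raise ValueError(f"missing or empty fields: {missing}")
    if row["chosen"].strip() == row["rejected"].strip():
        raise ValueError("chosen and rejected are identical: no preference signal")
    return row


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Per-example DPO loss, -log(sigmoid(margin)), where margin measures how much
    more the policy (relative to the frozen reference model) favors `chosen`
    over `rejected`. Inputs are summed token log-probabilities of each response."""
    margin = beta * ((policy_chosen_logp - ref_chosen_logp)
                     - (policy_rejected_logp - ref_rejected_logp))
    # Numerically stable softplus(-margin), which equals -log(sigmoid(margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    row = validate_preference_row(
        '{"prompt": "Summarize the ticket.", "chosen": "User cannot reset password.", '
        '"rejected": "Ticket."}'
    )
    print(row["chosen"])
    # Policy already prefers `chosen` more than the reference does, so loss < log(2).
    print(dpo_loss(-12.0, -20.0, -14.0, -18.0))
```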

Chat

Create chat-specific models for conversational AI by fine-tuning with chat transcripts.

{"messages": [{"role": ..., "content": ...}, {"role": ..., "content": ...}, ...]}
{"messages": [{"role": ..., "content": ...}, {"role": ..., "content": ...}, ...]}
{"messages": [{"role": ..., "content": ...}, {"role": ..., "content": ...}, ...]}
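Chat rows store a whole conversation as an ordered `messages` list. Here is a minimal sketch of building and sanity-checking that shape. Three rules are assumptions borrowed from common chat templates, not documented Predibase requirements: the `system`/`user`/`assistant` role names, strict user/assistant alternation, and ending each row on an assistant turn. Check them against the format your base model expects.

```python
import json
from typing import Optional

ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_messages(messages: list[dict]) -> None:
    """Raise ValueError unless the conversation is well-formed under this sketch's
    assumptions: known roles, non-empty content, an optional leading system
    message, then strictly alternating user/assistant turns ending with the assistant."""
    if not messages:
        raise ValueError("conversation is empty")
    for i, m in enumerate(messages):
        if m.get("role") not in ALLOWED_ROLES:
            raise ValueError(f"message {i}: unknown role {m.get('role')!r}")
        if not isinstance(m.get("content"), str) or not m["content"].strip():
            raise ValueError(f"message {i}: empty content")
        if m["role"] == "system" and i != 0:
            raise ValueError(f"message {i}: system message must come first")
    dialog = [m for m in messages if m["role"] != "system"]
    for j, m in enumerate(dialog):
        expected = "user" if j % 2 == 0 else "assistant"
        if m["role"] != expected:
            raise ValueError(f"turn {j}: expected {expected}, got {m['role']}")
    if not dialog or dialog[-1]["role"] != "assistant":
        raise ValueError("conversation must end with an assistant reply")


def transcript_to_row(turns: list[tuple[str, str]],
                      system_prompt: Optional[str] = None) -> dict:
    """Convert (role, text) turns into a {"messages": [...]} row and validate it."""
    messages = [{"role": "system", "content": system_prompt}] if system_prompt else []
    messages += [{"role": role, "content": text} for role, text in turns]
    validate_messages(messages)
    return {"messages": messages}


if __name__ == "__main__":
    row = transcript_to_row(
        [("user", "My order hasn't arrived."),
         ("assistant", "Sorry about that! Could you share your order number?")],
        system_prompt="You are a concise support agent.",
    )
    print(json.dumps(row))
```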

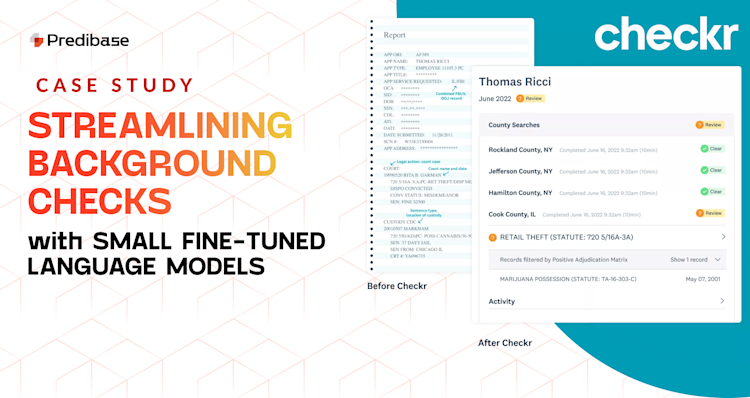

“By fine-tuning and serving Llama-3-8b on Predibase, we've improved accuracy, achieved lightning-fast inference and reduced costs by 5x compared to GPT-4. But most importantly, we’ve been able to build a better product for our customers, leading to more transparent and efficient hiring practices.”

Vlad Bukhin, Staff ML Engineer, Checkr