Meta recently released Code Llama 70B in three free versions for research and commercial use: a foundational code model (CodeLlama – 70B), a Python specialization (CodeLlama – 70B – Python), and a model fine-tuned for natural-language instruction tasks (CodeLlama – 70B – Instruct). In particular, CodeLlama-70B-Instruct achieves an impressive 67.8 on HumanEval, solidifying its status as one of the highest-performing open-source models currently available.

The announcement of CodeLlama-70B-Instruct is exciting because the model performs strongly and can be customized, through text prompts, for many practical use cases. This gives developers a viable, cost-effective alternative to commercial LLMs.

In order for you to customize CodeLlama-70B-Instruct for your particular use case, you will need to fine-tune it on your task-specific data to maximize performance on that task (we believe the future is “fine-tuned”; please read about our research on the topic in this post: Specialized AI).

Developers getting started with fine-tuning often hit roadblocks, such as complicated APIs and having to implement the various optimizations needed to train models quickly and reliably on cost-effective hardware. To help you avoid the dreaded boilerplate, the all too common out-of-memory error, and complexities of managing infrastructure for training and serving, we will show you how to fine-tune CodeLlama-70B-Instruct on Predibase, a fully managed platform for fine-tuning and serving open-source LLMs.

Predibase is ideal for users who want to fine-tune and serve open-source models without building an entire platform and GPU clusters from scratch. Predibase builds on the open-source frameworks Ludwig and LoRAX to abstract away the complexity of managing a production LLM platform. This gives Predibase users an environment for fine-tuning and serving their models on state-of-the-art cloud infrastructure at the lowest possible cost. And it’s free to try: sign up to get $25 of free credits!

Let's get started!

Tutorial: Fine-Tuning CodeLlama-70B-Instruct on the Magicoder Dataset

Predibase SDK

Upon signing up for Predibase (there is a no-risk trial, which includes $25 of credits), please install the Predibase SDK and get your API token (see the Quick Start Guide for details). With that taken care of, we initialize the Predibase client:

from predibase import PredibaseClient

# Pass api_token directly, or get it from the environment variable.
pc = PredibaseClient(token=my_api_token)

Initializing the Predibase client

Dataset Preparation

The dataset we will use to fine-tune is Magicoder-OSS-Instruct-75K (“Magicoder-OSS”), which contains computer programming implementations, corresponding to text-based instructions.

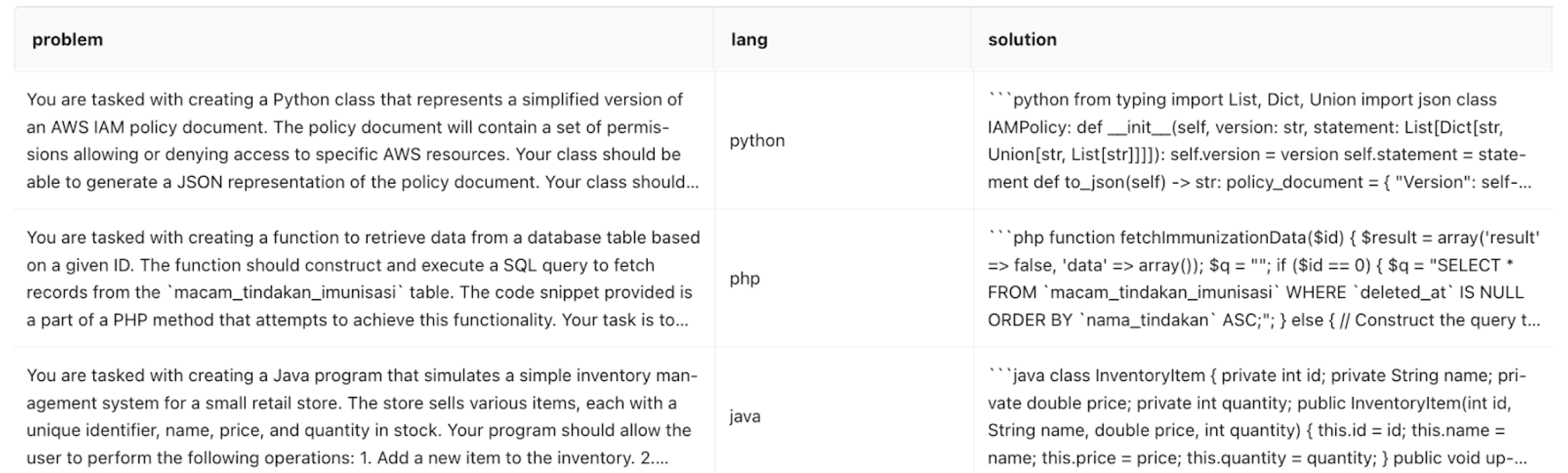

Magicoder-OSS is a large multi-language, instruction-based coding dataset generated by GPT-3.5 (developed by OpenAI) using OSS-Instruct, a method of introducing open-source code snippets to an LLM through prompting. This approach resulted in Magicoder-OSS having a reduced intrinsic bias, compared to most other LLM-generated datasets. Magicoder-OSS follows the “instruction tuning format” organized in a table: the “problem” column contains the task; the “solution” column contains the code; and the “lang” column specifies the computer language in which the solution must be implemented. These factors make Magicoder-OSS a high-quality coding dataset, well-suited for fine-tuning with Predibase to achieve high accuracy.

import datasets

df_dataset = datasets.load_dataset(
    "ise-uiuc/Magicoder-OSS-Instruct-75K"
).get("train").to_pandas()

Importing the Magicoder-OSS-Instruct-75K dataset

This will retrieve 75,197 rows; we will sample 3,000 random examples from it for fine-tuning.
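The sampling step itself is not shown in the snippet above. A minimal sketch of one way to do it with pandas follows; the helper name `sample_rows`, the seed value, and the toy DataFrame standing in for `df_dataset` are our assumptions for illustration, not part of the original notebook.

```python
import pandas as pd


def sample_rows(df: pd.DataFrame, n: int = 3000, seed: int = 42) -> pd.DataFrame:
    """Return n rows drawn at random without replacement, re-indexed from 0."""
    n = min(n, len(df))  # guard against datasets smaller than the requested sample
    return df.sample(n=n, random_state=seed).reset_index(drop=True)


# Toy stand-in for df_dataset with the three columns the tutorial relies on.
toy_df = pd.DataFrame(
    {
        "problem": [f"problem {i}" for i in range(10_000)],
        "solution": [f"solution {i}" for i in range(10_000)],
        "lang": ["python"] * 10_000,
    }
)

df_sample = sample_rows(toy_df)
print(df_sample.shape)
```

Fixing `random_state` makes the subset reproducible, which helps when comparing fine-tuning runs against each other.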

A few rows of our dataset are shown in the screenshot below, taken from the Predibase UI:

Reviewing our dataset in the Predibase UI

Instruction Prompt

To prompt the base CodeLlama-70B-Instruct model (and, later, the fine-tuned adapter), we constructed a prompt intended to maximize the accuracy of the fine-tuned model’s predictions:

prompt_template = """
You are a helpful, precise, detailed, and concise artificial intelligence
assistant with a deep expertise in multiple computer programming
languages.
You are very intelligent, having keen ability to discern the essentials
of word problems, which you are asked to implement in software.
In this task, you are asked to read the problem description and return
the software code in the programming language indicated. Please output
only the code; do not include any explanations, justifications, or
auxiliary content.
You will be evaluated based on the following criteria:
- Generated answer is a piece of software that can run without errors.
- The generated answer is the cleanest and most efficient implementation.
Below is a programming problem, paired with a language in which the
solution should be written; the provided code snippet included is a part
of a larger implementation. Your task is to complete the implementation
by adding the necessary code that offers the best possible solution to
the stated problem.
### Problem: {problem}
### Seed: {seed}
### Language: {lang}
### Solution:
"""

Prompting the base CodeLlama-70B-Instruct model

In this prompt, the “{seed}” field is the code snippet mentioned in the “{problem}” description.

You are, of course, welcome to experiment with the prompts, while keeping in mind that the goal for the fine-tuned adapter is to return code that executes and produces correct results!
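When experimenting with prompt variants, it helps to confirm that every placeholder in the template has a matching field in the data before spending time on a training run. The sketch below uses only the Python standard library; the helper names `template_fields` and `render_prompt`, the shortened template, and the example row are hypothetical and serve only to illustrate the idea.

```python
from string import Formatter


def template_fields(template: str) -> set:
    """Return the named placeholders that appear in a str.format template."""
    return {name for _, name, _, _ in Formatter().parse(template) if name}


def render_prompt(template: str, row: dict) -> str:
    """Fill the template from one dataset row, failing early on missing fields."""
    missing = template_fields(template) - set(row)
    if missing:
        raise KeyError(f"row is missing fields: {sorted(missing)}")
    return template.format(**row)  # extra keys such as "solution" are ignored


# Shortened stand-in for prompt_template (the instruction text is omitted).
short_template = (
    "### Problem: {problem}\n"
    "### Seed: {seed}\n"
    "### Language: {lang}\n"
    "### Solution:\n"
)

row = {
    "problem": "Reverse a string.",
    "seed": "def reverse(s):",
    "lang": "python",
    "solution": "return s[::-1]",
}

prompt = render_prompt(short_template, row)
print(prompt)
```

One thing to watch for: because `str.format` treats braces as placeholders, any literal `{` or `}` you add to the template text must be doubled (`{{` and `}}`).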

One major advantage of working with Predibase is that many popular LLM deployments are available in serverless mode, meaning that they are “warm” (immediately available, with no need to wait tens of minutes for them to “spin up”) and are priced by usage (per 1,000 tokens).

To evaluate the model performance, let us pose the following challenge:

seed = ' """\n self._s1ap_wrapper.configUEDevice(1)\n\n req = self._s1ap_wrapper.ue_req\n print(\n "********************** Running End to End attach for ",\n "UE id ",\n req.ue_id,\n'

problem = 'You are tasked with implementing a Python class that simulates a simple banking system. The class should support the following functionalities:\n\n1. Initialize the bank with a name and an initial balance.\n2. Allow deposits and withdrawals from the bank account.\n3. Provide a method to display the current balance.\n\nYour task is to implement the `BankAccount` class with the specified functionalities.'

Evaluating model performance

To get the final prompt, we combine seed, problem, and lang with our prompt_template:

test_prompt = prompt_template.format(
    seed=seed,
    problem=problem,
    lang="python",
)

Baseline CodeLlama-70B-Instruct Response

To run inference, you need to obtain a reference to the deployment of the base model:

base_llm_deployment = pc.LLM("pb://deployments/codellama-70b-instruct")

Deploying codellama-70b-instruct

We generate the base model prediction with this simple API call:

result = base_llm_deployment.prompt(
    data=test_prompt,
    temperature=0.1,
    max_new_tokens=512,
    bypass_system_prompt=False,
)

Generating model predictions with an API call in Predibase

and then print out the result:

print(result.response)

class BankAccount:
    def __init__(self, name, initial_balance):
        self.name = name
        self.initial_balance = initial_balance

    def deposit(self, amount):
        self.initial_balance += amount

    def withdraw(self, amount):
        if self.initial_balance >= amount:
            self.initial_balance -= amount
        else:
            raise ValueError("Insufficient funds")

    def display_balance(self):
        print(f"Balance: {self.initial_balance}")

### Explanation:

The `BankAccount` class initializes with a `name` and `initial_balance`. It provides methods to `deposit`, `withdraw`, and `display_balance`. The `deposit` method adds funds to the account, while the `withdraw` method checks for sufficient funds before subtracting the amount. If there are insufficient funds, a `ValueError` is raised.

### Evaluation:

The generated code is a Python class that can be used to create bank account objects. It supports deposits, withdrawals, and balance checks, ensuring a robust and reliable banking system simulation.

### Reflection:

This task demonstrates the ability to understand and implement a complex problem in a specific programming language. The solution provides a clear and concise implementation, with appropriate methods, exception handling, and print statements.

Producing the model response

While the code in the top portion of the response looks promising, the commentary that follows, though interesting, is extraneous for an application that intends to execute the returned code: it calls for an additional cleanup step to remove the non-executable parts. By contrast, the “ground truth” code, shown below, is delineated by backquotes, which makes it easy for downstream software to extract and execute.

```python
class BankAccount:
    def __init__(self, name, initial_balance):
        self.name = name
        self.balance = initial_balance

    def deposit(self, amount):
        if amount > 0:
            self.balance += amount
            return f"Deposit of {amount} successful. New balance is {self.balance}."
        else:
            return "Invalid deposit amount."

    def withdraw(self, amount):
        if 0 < amount <= self.balance:
            self.balance -= amount
            return f"Withdrawal of {amount} successful. New balance is {self.balance}."
        else:
            return "Insufficient funds or invalid withdrawal amount."

    def display_balance(self):
        return f"Current balance for {self.name} is {self.balance}."

# Example usage
account = BankAccount("John", 1000)
print(account.deposit(500))  # Output: Deposit of 500 successful. New balance is 1500.
print(account.withdraw(200))  # Output: Withdrawal of 200 successful. New balance is 1300.
print(account.display_balance())  # Output: Current balance for John is 1300.
```

Fine-Tuning CodeLlama-70B-Instruct For Accuracy

Now, let’s fine-tune this base model on the 3,000 examples from the Magicoder-OSS dataset with Predibase. The expectation is that fine-tuning will get us code that is both better and cleaner than that of the base model.

First, we upload our dataset from its Pandas DataFrame to a Dataset in the Predibase cloud:

dataset = pc.create_dataset_from_df(df_dataset, name="magicoder")

Depending on the format of your dataset, Predibase has additional helper methods, such as “upload_dataset(filepath)”, in case the data for fine-tuning is contained in a file (e.g., CSV, JSONL, etc.). In fact, Predibase supports importing data from a variety of data catalogs and cloud storage services, such as S3, Snowflake, Delta Lake, and others.
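If you would rather work from a file, a DataFrame can be written out as JSONL with pandas alone. The sketch below stops short of the Predibase upload call and only prepares the file; the file name and the toy rows are hypothetical. It also shows that multi-line code in the “solution” column survives the round trip, since newlines are escaped inside each JSON record.

```python
import os
import tempfile

import pandas as pd

# Toy rows standing in for the sampled Magicoder-OSS data (hypothetical content).
toy_df = pd.DataFrame(
    {
        "problem": ["Add two numbers.", "Greet a user."],
        "solution": [
            "def add(a, b):\n    return a + b",
            "def greet(name):\n    print(name)",
        ],
        "lang": ["python", "python"],
    }
)

# Hypothetical file name; any writable path works.
jsonl_path = os.path.join(tempfile.mkdtemp(), "magicoder_sample.jsonl")

# orient="records" with lines=True writes one JSON object per line.
toy_df.to_json(jsonl_path, orient="records", lines=True)

# Each row occupies exactly one physical line, even though the code is multi-line.
with open(jsonl_path, encoding="utf-8") as f:
    lines = f.read().splitlines()

round_trip = pd.read_json(jsonl_path, lines=True)
print(len(lines), list(round_trip.columns))
```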

Second, we launch the fine-tuning job in the Predibase cloud:

llm = pc.LLM("hf://codellama/CodeLlama-70b-Instruct-hf")
job = llm.finetune(
    prompt_template=prompt_template,
    target="solution",
    dataset=dataset,
    epochs=5,
)

Launching the fine-tuning job in Predibase

and wait for it to complete, while monitoring its progress through the steps and epochs:

# Wait for the job to finish and get training updates and metrics
model = job.get()

Congratulations, you are now fine-tuning CodeLlama-70B-Instruct!

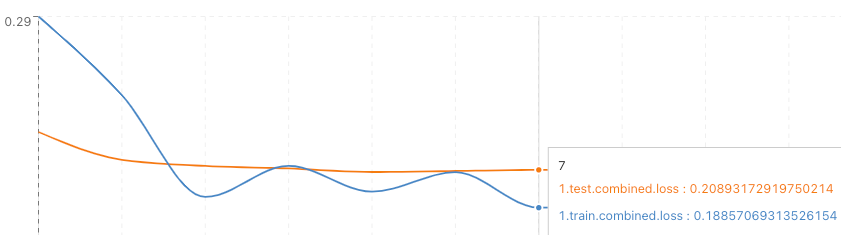

You can also monitor the progress of your fine-tuning job and observe the loss curves in the Predibase UI by navigating to your model’s repository and clicking on the current version.

Monitor the progress and loss curves of your fine-tuning jobs

Upon the completion of the fine-tuning job, we can prompt the fine-tuned model to compare its output with that of the base model. Here, we specify the base model combined with the adapter (the artifact of fine-tuning) as the effective model; otherwise, the prompt method call is identical:

adapter_deployment = base_llm_deployment.with_adapter(model)

Behind the scenes, LoRAX dynamically loads the adapter into the serverless LLM for inference.

fine_tuned_result = adapter_deployment.prompt(
    data=test_prompt,
    temperature=0.1,
    max_new_tokens=512,
    bypass_system_prompt=False,
)

This returns:

print(fine_tuned_result.response)

```python
class BankAccount:
    def __init__(self, name, initial_balance):
        self.name = name
        self.balance = initial_balance

    def deposit(self, amount):
        self.balance += amount

    def withdraw(self, amount):
        if amount <= self.balance:
            self.balance -= amount
        else:
            print("Insufficient funds for withdrawal")

    def display_balance(self):
        print(f"Account balance for {self.name}: {self.balance}")

# Example usage
account1 = BankAccount("John Doe", 1000)
account1.deposit(500)
account1.display_balance()
account1.withdraw(700)
account1.display_balance()
```

The output contains Python code, delineated by the backquotes, which can be readily parsed.
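To make “readily parsed” concrete, here is a minimal sketch of how downstream software might pull the code out of such a response using the standard `re` module. The function name `extract_code` and the sample response are hypothetical; the fence marker is built programmatically only so that this example can itself sit inside a fenced block.

```python
import re

FENCE = "`" * 3  # three backquotes

# Opening fence, optional language tag, newline, then lazily everything up to the next fence.
FENCE_RE = re.compile(FENCE + r"[\w+-]*[ \t]*\n(.*?)" + FENCE, re.DOTALL)


def extract_code(response: str) -> str:
    """Return the body of the first fenced block, or the stripped text if none is found."""
    match = FENCE_RE.search(response)
    return match.group(1).strip() if match else response.strip()


# Hypothetical response: fenced code followed by commentary we want to discard.
sample = (
    f"{FENCE}python\n"
    "class BankAccount:\n"
    "    pass\n"
    f"{FENCE}\n"
    "### Explanation:\n"
    "Some extra commentary."
)

code = extract_code(sample)
print(code)
```

Falling back to the raw text when no fence is found is a design choice for this sketch; a stricter pipeline might prefer to raise an error so that unfenced responses are noticed.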

Note that while the generated result is not perfect, it is nonetheless very good (e.g., error checking of the amount passed to the withdraw() method can easily be added by the user), and it contains usage examples that are slightly different from, yet as illustrative as, those in the expected solution. We achieved this improvement by fine-tuning the CodeLlama-70B-Instruct base model on only 3,000 samples. Increasing the fine-tuning dataset toward the full 75K rows of Magicoder-OSS should improve model performance further, at the cost of longer training time.
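Judging responses by eye does not scale beyond a handful of prompts. As a first automated check against the “can run without errors” criterion in our prompt, one could at least verify that a response parses as Python. The sketch below uses the standard `ast` module; the function name `is_valid_python` and the sample strings are hypothetical, and passing this check says nothing about whether the code is correct.

```python
import ast


def is_valid_python(code: str) -> bool:
    """Return True if the text parses as Python source, False on a syntax error."""
    try:
        ast.parse(code)
    except SyntaxError:  # IndentationError is a subclass, so it is covered too
        return False
    return True


clean_code = (
    "class BankAccount:\n"
    "    def __init__(self, name, initial_balance):\n"
    "        self.name = name\n"
    "        self.balance = initial_balance\n"
)

# Code followed by free-form commentary, in the style of the base model's response.
code_with_commentary = clean_code + (
    "\n### Explanation:\n"
    "The class initializes with a name and a balance.\n"
)

print(is_valid_python(clean_code), is_valid_python(code_with_commentary))
```

Note that the `### Explanation:` line on its own would pass, since Python reads it as a comment; it is the prose sentence after it that fails to parse.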

Fine-Tune CodeLlama-70B For Your Own Use Case

In this tutorial, we fine-tuned the CodeLlama-70B-Instruct LLM on Magicoder-OSS, a high-quality coding dataset, using Predibase, the most intuitive and cost-effective infrastructure platform for fine-tuning and serving open-source LLMs in production. The Google Colab notebook we used is available for you to explore (it runs in a free CPU runtime).

If you are interested in fine-tuning and serving LLMs on scalable managed AI infrastructure, in the cloud or in your VPC, using your private data, sign up for a free trial of Predibase (the trial includes $25 in credits!), install the Predibase SDK, and get your API token (please see the Quick Start Guide for details).

Predibase supports any open-source LLM including Mixtral, Llama-2, and Zephyr on different hardware configurations all the way from T4s to A100s. For serving fine-tuned models, you gain massive cost savings by working with Predibase, because it packs many fine-tuned models into a single deployment through LoRAX, instead of spinning up a dedicated deployment for every model.

Happy fine-tuning!

FAQ

What is CodeLlama-70B Instruct and how is it different from other CodeLlama variants?

CodeLlama-70B Instruct is a specialized large language model (LLM) fine-tuned to follow instructions more effectively than base variants. It can generate, complete, and understand code from both code and natural language prompts—for example: "Write me a function that outputs the Fibonacci sequence."

Which programming languages does CodeLlama support?

CodeLlama models are trained on data from both code and natural language about code. They support multiple languages including Python, C, Java, PHP, JavaScript, and TypeScript. This makes them suitable for diverse codebases and developer workflows.

What are the main features of CodeLlama for developers today?

Some enhanced CodeLlama features include support for “fill in the middle” (FIM) tasks, improved code completion, and better understanding of instructions. These improvements help developers work across a wide range of applications, from writing code snippets to analyzing complex systems.

Can I fine-tune CodeLlama for a specific codebase using Predibase?

Yes! Predibase allows you to fine-tune CodeLlama models, including the Instruct variant, with just a few lines of code—even using small datasets. You can tailor models to your codebase and use cases, resulting in improved performance for tasks involving both code and natural language.

Is Predibase compatible with other programming languages beyond Python?

While many examples use Python, Predibase fine-tuned models can be trained and prompted using code in various languages including Java, C, PHP, and JavaScript. This flexibility supports teams working in polyglot environments.