For experienced SQL users, writing complex SQL queries – with multiple inner and outer joins, subqueries, common-table expressions, groupings, partitions, and more – is routine work. However, most developers aren’t as fluent in SQL, and writing complex SQL queries can be cumbersome and time-consuming, with hours spent looking up SQL syntax on Stack Overflow.

Fortunately, large language models (LLMs) can enable developers to translate natural language expressions into code, including SQL code. However, getting an LLM to perform well for a coding task requires a high-quality dataset tailored to that coding task and a robust machine learning (ML) training infrastructure. Until recently, access to both was very limited, and for teams that are not in on the Predibase + Gretel secret, acquiring data, the right tooling, and GPUs are still a challenge. As a result, most developers are forced to rely on cost-prohibitive commercial models from OpenAI, Anthropic, and Cohere or dedicated coding copilot tools. We have a couple of secrets to tell you that will change all of that.

Secret One: You can address data scarcity by designing data

These days, AI developers can design the data they need, on demand, by leveraging a tool like Gretel Navigator, the first compound AI system purpose-built to design high-quality synthetic data using natural language. Navigator combines agentic workflows, task planning, AI feedback, a multitude of tools/models, and data-generation best practices to produce privacy-preserving synthetic data either from scratch or as an augmented version of existing data. Recently, Navigator was used to generate what is currently the world’s largest and most diverse synthetic text-to-SQL dataset (available under Apache 2.0 license). It spans the gamut of domains, SQL complexities and SQL task types, and it quickly became the #1 trending dataset on Hugging Face. Gretel’s dataset makes developing a SQL copilot a whole lot easier, and is an ideal place to start when fine-tuning LLMs for SQL tasks.

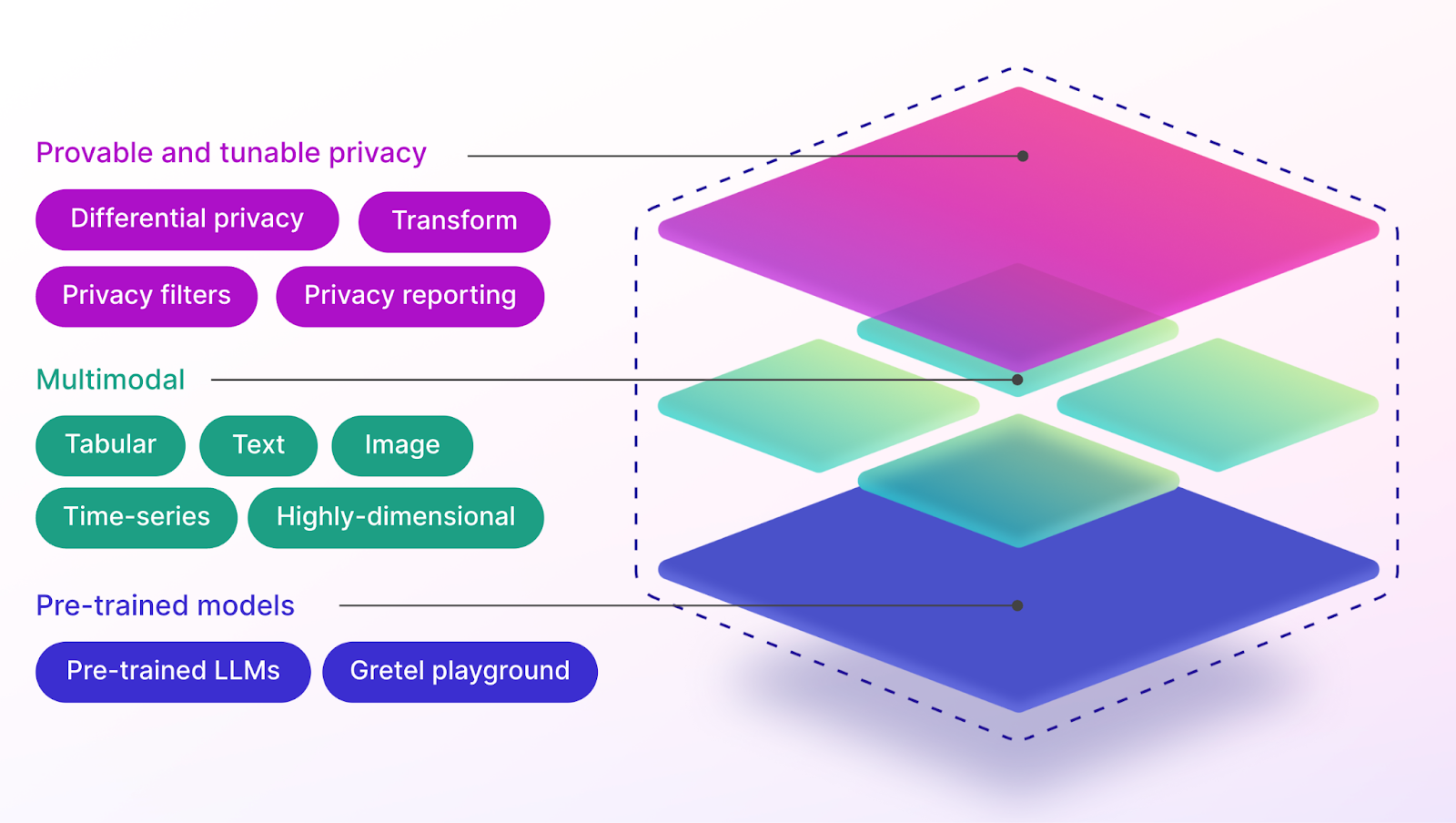

Gretel's Multimodal Synthetic Data Platform

Secret Two: You can train powerful models on a budget

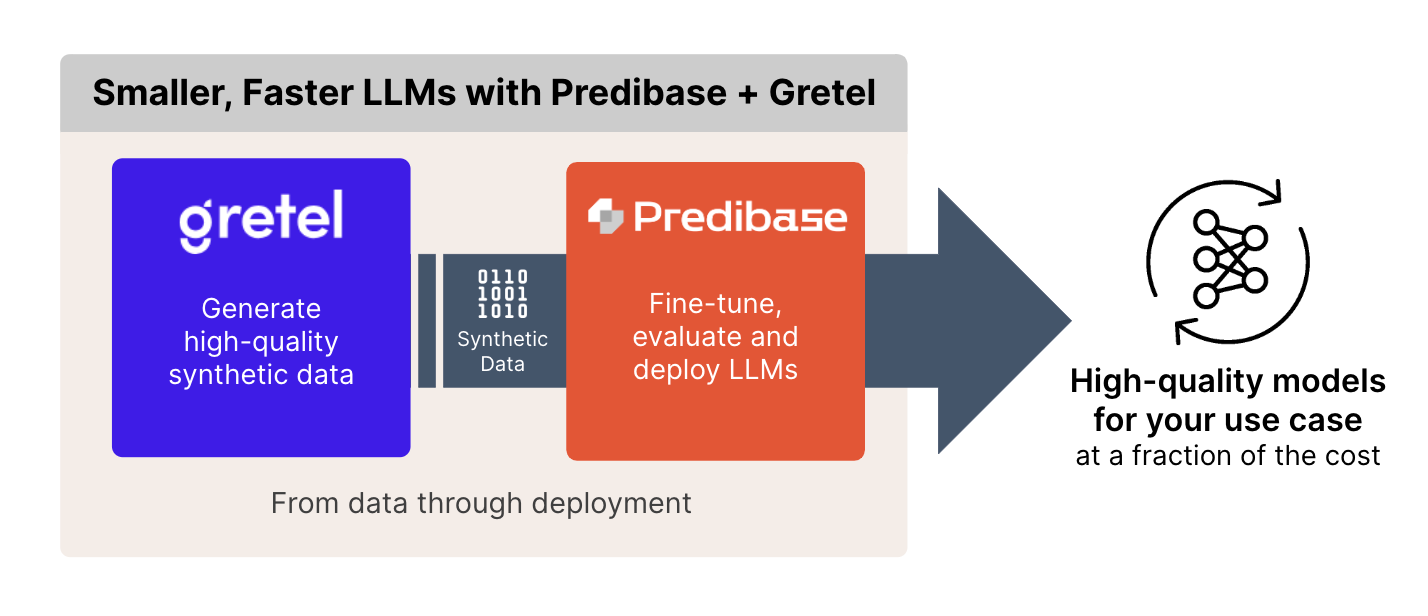

When combining the right data with the right tooling, like Predibase, cost is no longer a bottleneck to creating highly performative models. Recognized as the leading platform for small language models (SLMs), Predibase makes it easy for developers to fine-tune small task-specific models that outperform GPT-4 at a fraction of the cost. Predibase pairs serverless, cost-efficient infrastructure with a first-class LLM training experience that bakes 50+ optimizations into a simple declarative interface. Armed with the Gretel dataset, teams can leverage Predibase to fine-tune a small open-source or open-weight model like Llama-3 for a broad range of SQL tasks without the high cost of a commercial model.

Train small language models for your use case that rival larger, expensive general purpose LLMs

To help get you started, here’s a step-by-step tutorial for fine-tuning and serving your own SQL code generation copilot with open-source LLMs – sample code and data included. Let’s get started!

Tutorial: Fine-Tuning Llama-3 for SQL Code Generation

In this tutorial, we will use Meta-Llama-3-8B-Instruct as our base model, as it is one of the best performing open models of its size on multiple tasks, and it’s easy to fine tune. Please feel free to follow along in this Google Colab notebook.

Predibase SDK

Upon signing up for Predibase (there is a no-risk trial, which includes $25 of credits), install the Predibase SDK and get your API token (see the Quick Start Guide for details). With that taken care of, we initialize the Predibase client:

from predibase import Predibase

# Pass api_token directly, or get it from the environment variable.

pb = Predibase(api_token="my-api-token")

Dataset Exploration & Preparation

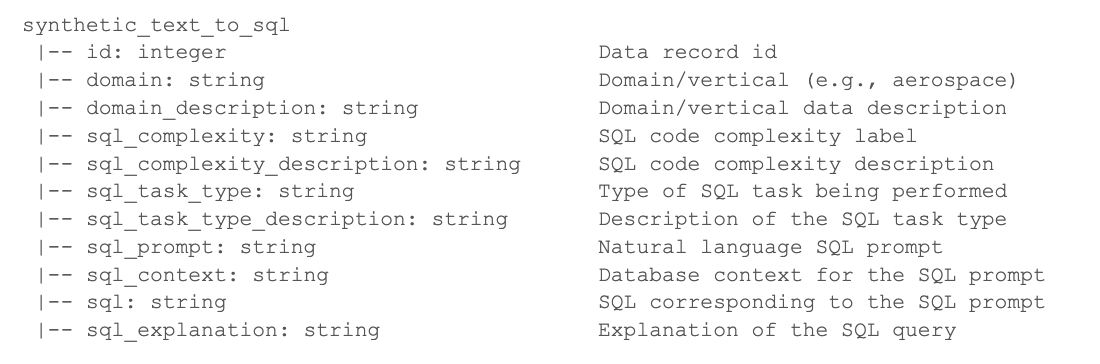

The Gretel Synthetic Text-to-SQL dataset contains 100K examples, covering 100 distinct domains from sports, to different scientific disciplines, to entertainment, and many others. The dataset includes 11 fields shown below:

Gretel SQL Dataset Schema

and here is an example record:

{

"id": 39325,

"domain": "public health",

"domain_description": "Community health statistics, infectious disease tracking data, healthcare access metrics, and public health policy analysis.",

"sql_complexity": "aggregation",

"sql_complexity_description": "aggregation functions (COUNT, SUM, AVG, MIN, MAX, etc.), and HAVING clause",

"sql_task_type": "analytics and reporting",

"sql_task_type_description": "generating reports, dashboards, and analytical insights",

"sql_prompt": "What is the total number of hospital beds in each state?",

"sql_context": "CREATE TABLE Beds (State VARCHAR(50), Beds INT); INSERT INTO Beds (State, Beds) VALUES ('California', 100000), ('Texas', 85000), ('New York', 70000);",

"sql": "SELECT State, SUM(Beds) FROM Beds GROUP BY State;",

"sql_explanation": "This query calculates the total number of hospital beds in each state in the Beds table. It does this by using the SUM function on the Beds column and grouping the results by the State column."

}

While having 100K examples is beneficial for experimentation, due to its ample coverage of many use cases, research shows that for fine-tuning, a smaller dataset can suffice as long as it is high quality and balanced. So for our fine-tuning work, we will use 20K random examples.

import datasets

df_dataset = datasets.load_dataset(

"gretelai/synthetic_text_to_sql"

).get("train").to_pandas()This will retrieve 100,000 rows; we will sample 20,000 random examples from it for fine-tuning. We will also hold 500 rows out for evaluation. Having an evaluation set enables Predibase to perform “early stopping” (i.e., stop the training phase if the evaluation performance saturates).

The “prompt” and “completion” columns are required by Predibase in order to perform fine tuning. We build the prompt column by starting the base model template (obtained from the model repository on HuggingFace) and substituting into it the fine-tuning template.

The base model template for Meta-Llama-8B-Instruct is:

base_model_template = "<|im_start|>user\n {prompt} <|im_end|>\n<|im_start|>assistant\n"and for fine-tuning, we will use the following template:

text_to_sql_training_prompt_template = """\

You are a database management system expert, proficient in Structured Query Language (SQL).

Your job is to write an SQL query that answers the following question, based on the given \

database schema and any additional information provided. Use SQLite syntax.

Please output only SQL (without any explanations).

### Schema: {sql_context}

### Knowledge: This "{sql_task_type}" type task is commonly used for {sql_task_type_description} \

in the domain of {domain}, which involves {domain_description}.

### Question: {sql_prompt}

### Completion:

"""

where the attributes in curly braces will be populated from the columns of the original dataset.

To populate the prompt column, we encapsulate the formatted text_to_sql_training_prompt_template as the value of the {prompt} attribute into the base_model_template; the completion column is just the output sql from the original dataset.

We then concatenate the dataframes containing the “train” and “evaluation” splits, save the combined dataframe as a CSV file, and upload it to Predibase as the dataset:

df_dataset.to_csv(

"/datasets/GretelAI/synthetic_text_to_sql_llama-3-8b-instruct.csv",

index=False

)

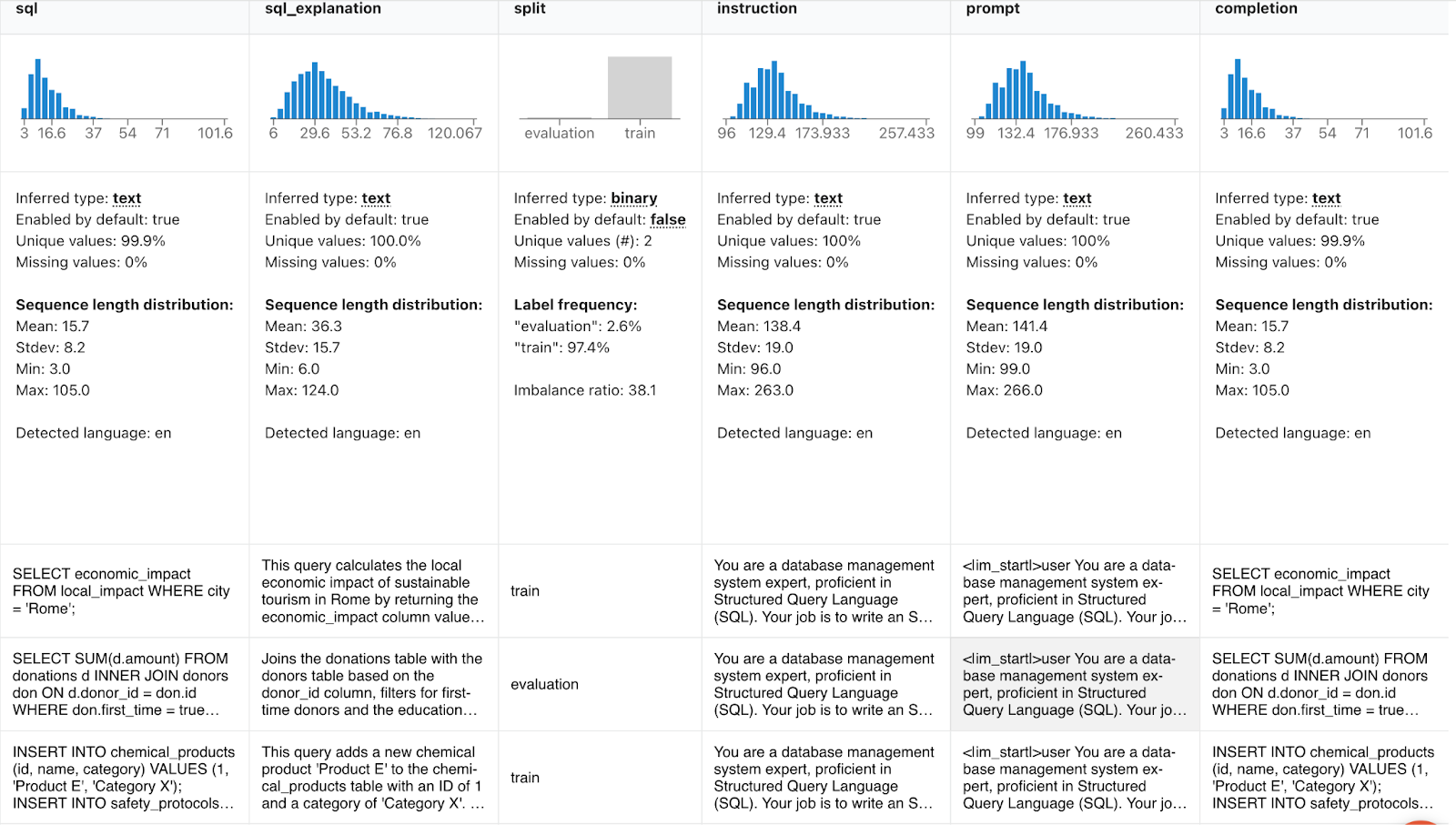

A few rows of our dataset are shown in the screenshot below, taken from the Predibase UI:

Predibase Dataset View

Prompting the Base Model

Before we decide to fine-tune, we should prompt the base model. This is because there is a possibility that the base model will return sufficiently accurate SQL queries, thereby making fine-tuning unnecessary, and hence resulting in time and cost savings. In fact, one should diligently – using formal optimization tools – experiment with different prompts against the base model (e.g., zero-shot, one-shot, few-shot learning, contrastive examples, etc.) and run proper evaluations against established benchmarks before concluding that fine-tuning is justified.

To prompt the base model, we get a reference to the client:

client = pb.deployments.client("llama-3-8b-instruct")

Then we select one of the prompts from the test set (not to be part of train and evaluation sets) and use it to gauge the performance of the base model:

control_prompt = """\

<|begin_of_text|><|start_header_id|>user<|end_header_id|>

You are a database management system expert, proficient in Structured Query Language (SQL).

Your job is to write an SQL query that answers the following question, based on the given \

database schema and any additional information provided. Use SQLite syntax.

Please output only SQL (without any explanations).

### Schema: CREATE TABLE Country (country_id INT, country_name VARCHAR(50)); INSERT INTO Country (country_id, country_name) VALUES (1, 'USA'); CREATE TABLE EV_Sales (sale_id INT, model VARCHAR(50), buyer_country INT, sale_date DATE); INSERT INTO EV_Sales (sale_id, model, buyer_country, sale_date) VALUES (1, 'Tesla Model 3', 1, '2022-08-15');

### Knowledge: This "analytics and reporting" type task is commonly used for generating reports, dashboards, and analytical insights in the domain of automotive, which involves Vehicle safety testing results, autonomous driving research data, electric vehicle adoption statistics, and auto show information.

### Question: What is the total number of electric vehicle sales in each country?

### Completion:

<|eot_id|><|start_header_id|>assistant<|end_header_id|>

"""

And then execute:

client.generate(

control_prompt,

max_new_tokens=256,

).generated_text

This returns:

SELECT dish_name FROM dishes WHERE dish_id NOT IN ( SELECT dish_id FROM sales);whereas the ground truth SQL statement is:

SELECT d.dish_name FROM dishes d LEFT JOIN sales s ON d.dish_id = s.dish_id WHERE s.dish_id IS NULL;The base model’s prediction is incorrect: the most essential part of the query, the LEFT JOIN, is absent.

Hence, we proceed to fine-tune.

Fine-Tuning Meta-Llama-8B-Instruct For Accuracy

Now, let’s fine-tune this base model on the 20,000 examples from the Gretel Synthetic Text-to-SQL dataset with Predibase. The expectation is that fine-tuning will get us SQL queries that are correct and the output is “clean” compared to that of the base model.

Depending on the format of your dataset, Predibase has dataset upload methods in case the data for fine-tuning is contained in a file (e.g., CSV, JSONL, etc.). In fact, Predibase supports importing data from a variety of data catalogs and cloud storage services, such as S3, Snowflake, Delta Lake, and others.

Thus, we will connect our CSV-formatted source data file to a Dataset in the Predibase cloud:

dataset = pb.datasets.from_file(

"/datasets/GretelAI/synthetic_text_to_sql_llama-3-8b-instruct.csv",

name="gretel_ai_synthetic_text_to_sql_llama-3-8b"

)

Next, we create the adapter repository:

repo = pb.repos.create(

name="gretel_ai_synthetic_text_to_sql_llama-3-8b-instruct",

description="Fine-tuning on GretelAI text-to-SQL synthetic dataset with Predibase.")

)

and launch the fine-tuning job:

adapter: FinetuningJob = pb.finetuning.jobs.create(

config={

"base_model": base_model_id,

"epochs": 5,

"learning_rate": 0.0002,

},

dataset=dataset,

repo="gretel_ai_synthetic_text_to_sql_llama-3-8b-instruct",

description='fine-tune "llama-3-8b-instruct" with GretelAI text-to-SQL synthetic dataset (no JSON)',

)

You will see the output similar to the following:

Successfully requested finetuning of meta-llama/Meta-Llama-3-8B-Instruct as `gretel-ai-text-to-sql-llama-3-8b-instruct`. (Job UUID: fe309fd0-f4c7-4b17-ab15-3db12aa5eaf5).Congratulations, you are now fine-tuning MetaLlama-8B-Instruct! 🥳

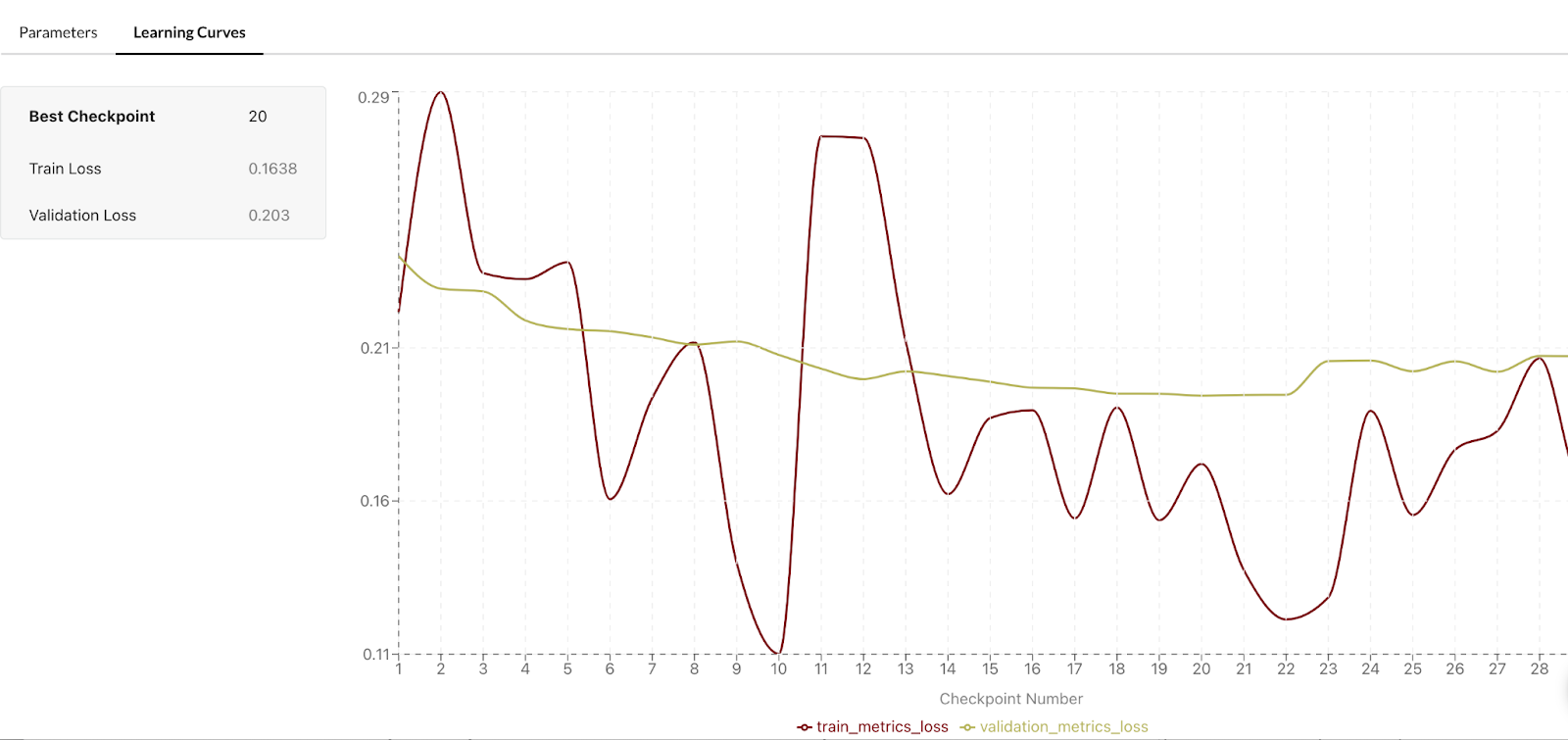

During the fine-tuning run, you can monitor its progress through the steps and epochs in the Predibase UI by navigating to your model’s repository and clicking on the current version. On that screen, you can can observe the loss curves in real time:

Predibase Adapter Fine-Tuning Learning Curves

Upon the completion of the fine-tuning job, we can now prompt the fine-tuned model to compare its output with that of the base model. Here, we specify the adapter repository with the version number (adapter is the artifact of fine-tuning); otherwise, the prompt method call is identical:

client.generate(

control_prompt,

# below, adapter version 1 is used (corresponds to the number of fine-tuning run)

adapter_id="gretel_ai_synthetic_text_to_sql_llama-3-8b-instruct/1",

max_new_tokens=256,

).generated_text

This returns:

SELECT d.dish_name FROM dishes d LEFT JOIN sales s ON d.dish_id = s.dish_id WHERE s.dish_id IS NULL;This is equal to the ground-truth SQL query. So the fine-tuning process increased the accuracy!

We achieved this performance improvement by fine-tuning the Meta-Llama-8B-Instruct base model on 20,000 samples. If we increase the fine-tuning dataset size to the full 100K rows of Gretel Synthetic Text-to-SQL dataset available, the model performance will get significantly better. The model performance improves with increasing the data volume, subject to the time spent on training trade-off.

Eyeballing outputs is useful for getting a sense of the performance of the model, but in order to properly assess it, a full quantitative evaluation should be performed using the right metrics.

Evaluating the Fine-Tuned Model using BIRD-SQL

To properly compare our fine-tuned model with the base model, we use the BIRD-SQL benchmark. BIRD (BIg Bench for LaRge-scale Database Grounded Text-to-SQL Evaluation) emerged as one of the most comprehensive benchmarks for evaluating models on text-to-SQL tasks. It comes with a staggering number of big databases providing important context for both natural language prompts and SQL, and it covers 37 different domains and more than twelve thousand unique question-SQL pairs. You can follow the instructions for setting up and running BIRD-SQL on the benchmark page and GitHub.

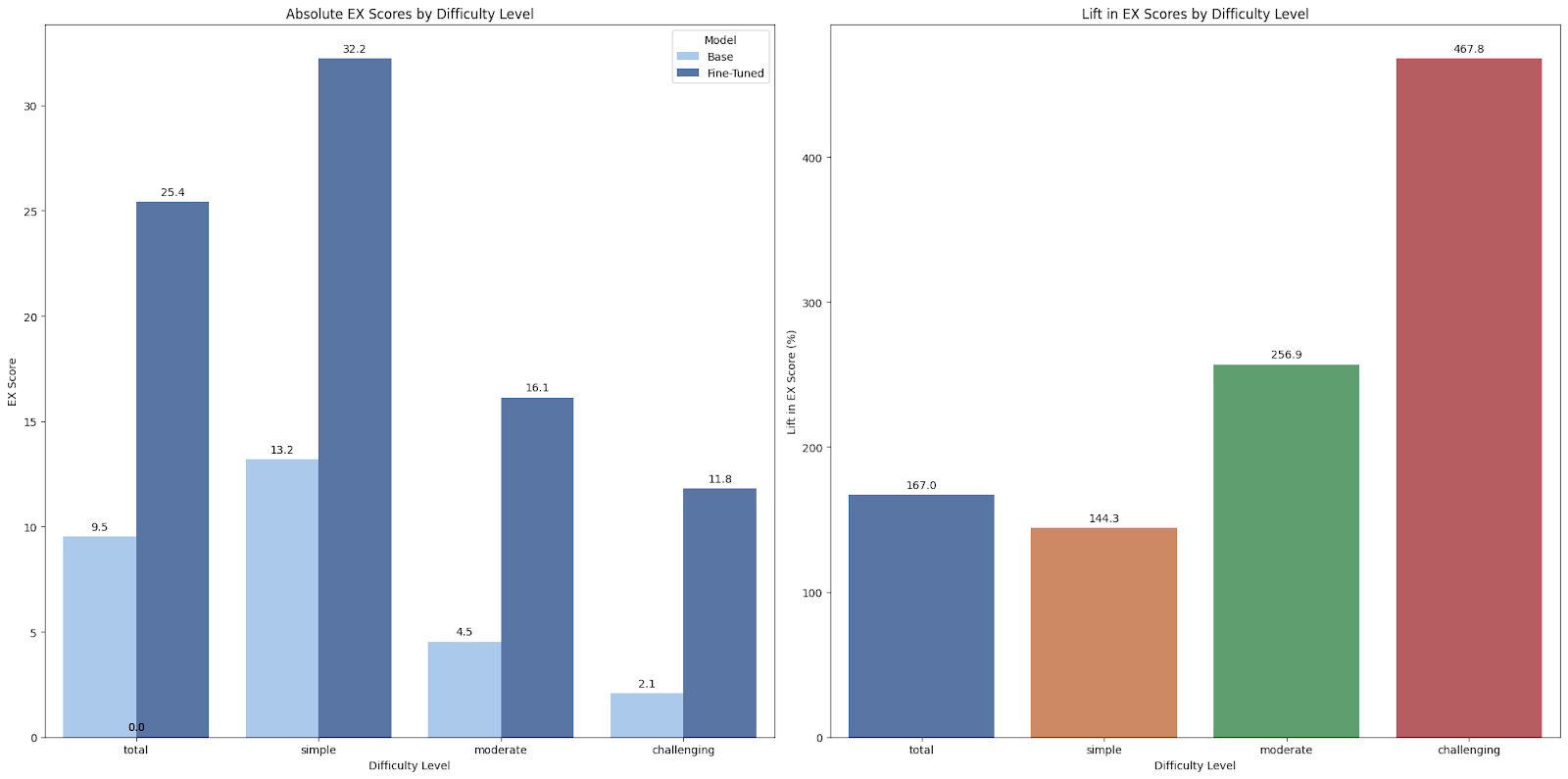

Here we report results for the Execution Accuracy (EX) score which measures the portion of examples in the evaluation set for which the executed results for the predicted and ground-truth SQL are identical. We run evaluation on the DEV set and incorporate external knowledge evidence (designated as oracle knowledge in the BIRD-SQL leaderboard).

We note that the total EX score goes up from 9.5 to 25.4, an overall lift of 167% as a result of fine-tuning on just 20k records of purely synthetic data. What is interesting is that we see much bigger gains for moderate and challenging levels of difficulty, in many ways reflecting the richness and diversity of the data being brought to bear onto the Text-to-SQL problem. Specifically, we see a remarkable 467% lift of challenging problems and a 257% lift on moderate problems. Not bad for something that was quite easy to put together with Gretel and Predibase!

A Llama-3-8B model fine-tuned on a subset of the synthetic text-to-SQL data outperforms the base model by a wide margin on the BIRD-SQL benchmark, delivering an overall lift of 167% and a lift of more than 467% on challenging questions.

Fine-Tune An LLM For Your Own Use Case

In this tutorial, we fine-tuned the Meta-Llama-8B-Instruct LLM on Gretel’s high-quality synthetic Text-to-SQL dataset, using Predibase, the most intuitive and cost-effective infrastructure platform for fine-tuning and serving open-source LLMs in production. The Google Colab notebook we used is available for you to explore (this is in a free CPU runtime account).

If you are interested in fine-tuning and serving LLMs on scalable managed AI infrastructure in the cloud or your VPC using your private data:

- Sign up for $25 in free Predibase credits to try this use case on your own. Predibase offers open-source LLMs for fine-tuning including Llama-3, Phi and Zephyr on different hardware configurations all the way from T4s to A100s. For serving fine-tuned models, you can achieve massive cost savings, because Predibase packs many fine-tuned models into a single deployment through LoRAX. No need to spin up dedicated deployments for every model.

- Sign up for a free account with Gretel! Gretel allows you to generate high-quality synthetic datasets with the same characteristics as real data, so you can improve AI models without compromising on privacy. You can start with the fully-featured free tier, which gives you 15 free monthly credits. Once you are ready to scale, Gretel offers simple pricing that lets you power your most critical use-cases.

Happy fine-tuning!

PS: if you enjoyed this content, please watch our recent joint workshop “Speed Up LLM Development with Gretel and Predibase“ for more details and phenomenal questions from the community.