Welcome to the Predibase AI Resource Center

Your one-stop shop for all LLM, generative AI, and machine learning tutorials, ebooks, webinars, and learning resources.

![DeployCon: A Summit for Teams Serious about Production AI]()

DeployCon: A Summit for Teams Serious about Production AI

DeployCon is an engineer-first summit for teams building the next generation of AI. The top minds in LLMOps from Pinterest, DoorDash, Tinder, and Meta share their strategies for deploying, scaling, and continuously improving AI systems in production.

Videos & Webinars

![Precision Vision: Supercharging Vision Language Models with Fine-Tuning]()

Precision Vision: Supercharging Vision Language Models with Fine-Tuning

Watch on-demand webinar: Supercharging Vision Language Models with Fine-Tuning

Videos & Webinars

![The Fastest Way to Serve Open-Source Models: Inference Engine 2.0]()

The Fastest Way to Serve Open-Source Models: Inference Engine 2.0

Watch on-demand webinar: How to serve open-source models 2–4x faster—no tuning, no headaches.

Videos & Webinars

![Shared vs. Private LLMs: Comparing Cost, Latency and Capabilities]()

Shared vs. Private LLMs: Comparing Cost, Latency and Capabilities

Deep-dive session on why shared LLMs fail at scale and how to adopt fast, efficient private LLMs.

Videos & Webinars

![Build vs Buy: Choosing the Right AI Stack]()

Build vs Buy: Choosing the Right AI Stack

Explore the hidden costs of DIY AI, the pros and cons of building vs. buying AI infra, and how to assess your readiness.

eBooks

![Complete Guide to Reinforcement Fine-Tuning]()

Complete Guide to Reinforcement Fine-Tuning

Read the complete guide to RFT to learn how to achieve 20%+ higher accuracy than GPT-4 using minimal labeled data. Includes use cases and tutorials.

eBooks

![Intro to Predibase's Reinforcement Fine-Tuning Platform]()

Intro to Predibase's Reinforcement Fine-Tuning Platform

Deep dive webinar on the first end-to-end platform for training and serving LLMs with RFT. Benchmarks and example code included.

Videos & Webinars

![DeepSeek Adoption Survey and Infographic]()

DeepSeek Adoption Survey and Infographic

We surveyed 500+ AI professionals to separate the reality from the hype and explore use cases and adoption of DeepSeek.

eBooks

![Complete Guide to Serving LLMs]()

Complete Guide to Serving LLMs

Deep dive into 3 essential strategies for mastering fast, efficient and cost-effective LLM deployments.

eBooks

![Case Study: Checkr]()

Case Study: Checkr

Learn how Checkr reduced OpenAI costs by 5x and improved inference 30x with fine-tuned SLMs.

eBooks

![Reinforcement Fine-tuning for DeepSeek]()

Reinforcement Fine-tuning for DeepSeek

In this tech talk and demo, you'll learn how to fine-tune DeepSeek-R1 with reinforcement learning.

Videos & Webinars

![What is GRPO and Reward Functions]()

What is GRPO and Reward Functions

AMA-style demo on GRPO fine-tuning and a deep dive on creating reward functions.

Videos & Webinars

![Demo: Predibase Reinforcement Fine-tuning Platform]()

Demo: Predibase Reinforcement Fine-tuning Platform

Introducing the first end-to-end platform for Reinforcement Fine-Tuning (RFT).

Videos & Webinars

![Turbo Reasoning: How to Accelerate Models like DeepSeek]()

Turbo Reasoning: How to Accelerate Models like DeepSeek

Learn how to increase the throughput of reasoning models by 2-4x without sacrificing accuracy.

Videos & Webinars

![Customer Talk: MMC's Agentic AI Assistant]()

Customer Talk: MMC's Agentic AI Assistant

How Marsh McLennan built an AI assistant with fine-tuned SLMs that saves them millions of hours.

Videos & Webinars

![SmallCon: Virtual Conference for GenAI Builders]()

SmallCon: Virtual Conference for GenAI Builders

Talks from HuggingFace, Nvidia, Salesforce and more on practical strategies for small models.

Videos & Webinars

![Optimize Inference for Fine-tuned SLMs]()

Optimize Inference for Fine-tuned SLMs

Learn how to accelerate your LLM inference by 4x, improve reliability, and reduce costs with FP8, Turbo LoRA and more.

Videos & Webinars

![Solution Guide: Developer Platform for Productionizing Open-source AI]()

Solution Guide: Developer Platform for Productionizing Open-source AI

Learn about the fastest and most efficient way to fine-tune and serve pre-trained open-source AI models.

eBooks

Customer Talk: Automating Customer Service

How Convirza analyzes millions of calls with SLMs and multi-LoRA serving.

Videos & Webinars

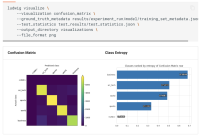

![Notebook: Fine-tune a SLM for Content Summarization]()

Notebook: Fine-tune a SLM for Content Summarization

Learn how to easily fine-tune Llama-3.1-8B to accurately summarize chat conversations.

Tutorials

![Predibase Wrapped: Hits from 2024]()

Predibase Wrapped: Hits from 2024

Showcase of our biggest AI innovations: continued training, fine-tuning VLMs, Turbo LoRA and more.

Videos & Webinars

![Customer Talk: Clearwater Builds AI Agents with SLMs]()

Customer Talk: Clearwater Builds AI Agents with SLMs

Learn how Clearwater Analytics built and deployed an agentic workflow for their customers.

Videos & Webinars

![Beat GPT-4 with a Small Model and 10 Rows of Data]()

Beat GPT-4 with a Small Model and 10 Rows of Data

Learn how to leverage large language models like Llama-3.1-405b to generate synthetic data to fine-tune a small model that achieves GPT-4 level results.

Videos & Webinars

![Your Models, Your Cloud: Secure LLMs in Your VPC]()

Your Models, Your Cloud: Secure LLMs in Your VPC

Learn about different approaches to securing GenAI workloads and how to customize and host LLMs in your private cloud the easy way.

Videos & Webinars

![Customer Talk: Checkr]()

Customer Talk: Checkr

Learn how Checkr uses Predibase to fine-tune and serve LLM classifiers to automate the background check process—with higher accuracy and a 5x lower cost than OpenAI.

Videos & Webinars

![Notebook: Build a SQL CoPilot]()

Notebook: Build a SQL CoPilot

This example notebook shows you how to fine-tune Llama-3.1-8b on a high-quality synthetic SQL dataset to create a highly accurate code generation model.

Tutorials

![Demo: Synthetic Data Generation]()

Demo: Synthetic Data Generation

See how you can generate high-quality synthetic data that can then be used to instantly fine-tune your model, all within Predibase.

Videos & Webinars

![Notebook: Customer Support]()

Notebook: Customer Support

In this notebook tutorial, we'll show you how to fine-tune Mistral-7B for 3 different customer support use cases and serve on a single GPU with Predibase and LoRAX.

Tutorials

![The Definitive Guide to Fine-tuning LLMs]()

The Definitive Guide to Fine-tuning LLMs

This guidebook provides practical advice for overcoming the four primary challenges teams face when fine-tuning, from managing infra to understanding techniques for efficient fine-tuning.

eBooks

![Notebook: Docstring Generation Using CodeLlama-13b]()

Notebook: Docstring Generation Using CodeLlama-13b

This tutorial notebook will help you get started fine-tuning CodeLlama-13b on Predibase to generate code documentation.

Tutorials

![Graduate from OpenAI to Open-Source]()

Graduate from OpenAI to Open-Source

Watch this on-demand session to learn our 12 best practices for distilling smaller LLMs with GPT.

Videos & Webinars

![How–and when–to fine-tune and serve LLMs]()

How–and when–to fine-tune and serve LLMs

Learn how to quickly and effectively build end-to-end workflows to customize open-source LLMs on your data in your own cloud, including best practices like LoRA and quantization.

Videos & Webinars

![Serve 100s of Fine-tuned LLMs for the Cost of Serving One]()

Serve 100s of Fine-tuned LLMs for the Cost of Serving One

Learn about LoRAX, the new open-source framework that makes it possible to dynamically serve many LLMs on a single GPU without any degradation in performance.

Videos & Webinars

![Survey Report: A Look at LLMs in Production]()

Survey Report: A Look at LLMs in Production

Insights from 150 developers and data scientists on what it takes to be successful with LLMs, the challenges that still exist, and recommendations moving forward.

eBooks

![Notebook: Fine-tune a Code Generation LLM with Ludwig]()

Notebook: Fine-tune a Code Generation LLM with Ludwig

This detailed tutorial notebook will show you how to use the new features in open-source Ludwig 0.8 to deploy and fine-tune the Llama-2 LLM for a code generation task.

Tutorials

![Panel Discussion: Building Custom LLMs in Production]()

Panel Discussion: Building Custom LLMs in Production

Interactive discussion with LLM experts from Uber, LlamaIndex, Bank of America, and Predibase on avoiding common pitfalls when building production-grade LLM apps, tips for customizing and serving models, and the future of GenAI.

Videos & Webinars

![ML Real Talk: 10 Things You Must Know About LLMs]()

ML Real Talk: 10 Things You Must Know About LLMs

In this episode of ML Real Talk, host Daliana Liu and ML Engineer Arnav Garg delve into all things LLMs, from how these complex models are trained to LLM customization techniques ranging from few-shot learning to fine-tuning.

Videos & Webinars

![Efficiently Build Custom LLMs on Your Data with Open-source Ludwig]()

Efficiently Build Custom LLMs on Your Data with Open-source Ludwig

With Ludwig v0.8, we’ve released the first open-source, low-code framework optimized for efficiently building custom LLMs on private data. Learn how to use it.

Videos & Webinars

![How-to Guide: Overcoming Overfit Models]()

How-to Guide: Overcoming Overfit Models

Learn best practices for how to address and avoid overfitting in your ML models.

Tutorials

![Build Your Own LLM in Less Than 10 Lines of YAML]()

Build Your Own LLM in Less Than 10 Lines of YAML

Generalized models solve general problems. The real value comes from training a large language model on your data and fine-tuning it for a specific ML task. Now you can build your own custom LLM in a few lines of code.

Videos & Webinars

![4 Ways Developers Can Build AI-Powered Apps Faster with Declarative ML]()

4 Ways Developers Can Build AI-Powered Apps Faster with Declarative ML

New declarative machine learning tools make ML accessible to developers by simplifying model development and deployment with a config-driven approach rooted in engineering best practices. Read the ebook to learn more.

eBooks

![Privately Host and Customize Large Language Models for Your ML Task]()

Privately Host and Customize Large Language Models for Your ML Task

There’s a better way to use and fine-tune LLMs on your own data that is faster, cheaper, and doesn’t require giving away any proprietary data to LLM vendors. Check out this webinar and demo to learn more.

Videos & Webinars

![AutoML 2.0: The future of AutoML]()

AutoML 2.0: The future of AutoML

A new generation of declarative ML systems, like those pioneered at Uber, Apple, and Meta, provides a glass-box approach to automating ML that enables teams to bring new models to market faster with complete flexibility and control.

Videos & Webinars

![Hands-on with Multi-Modal Machine Learning: Predicting Customer Ratings]()

Hands-on with Multi-Modal Machine Learning: Predicting Customer Ratings

Learn how to easily build and evaluate a deep learning model to predict customer ratings using unstructured text and tabular data.

Videos & Webinars

![Santavision: Deep Learning for the Holidays]()

Santavision: Deep Learning for the Holidays

Learn how to use Predibase and open-source Ludwig to rapidly train an image classifier in fewer than 15 lines of a config file.

Videos & Webinars

![Intro to Text Classification on Ludwig]()

Intro to Text Classification on Ludwig

Learn how to build a state-of-the-art text classifier with Ludwig in just a few lines of a configuration file.

Tutorials

![Exploring AutoML for Deep Learning with Ludwig]()

Exploring AutoML for Deep Learning with Ludwig

Ludwig AutoML makes it easy to rapidly build state-of-the-art models for tabular and text classification, among other use cases. In this session, we take a deep dive into Ludwig AutoML design, development, evaluation, and use.

Videos & Webinars

![Intro to Declarative ML Systems]()

Intro to Declarative ML Systems

Learn how declarative machine learning systems, like those started at Uber and Apple, provide a glass-box approach to automating ML.

Videos & Webinars

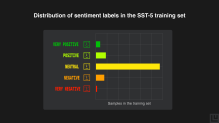

![Guide to Sentiment Analysis with Ludwig]()

Guide to Sentiment Analysis with Ludwig

A 3-part guide that goes from importing data and implementing baseline models to working with BERT and doing hyperparameter optimization, all using only open-source Ludwig.

Tutorials