The Developer Platform For LoRA Fine-Tuning

Private. Powerful. Cost-Effective.

The fastest, most efficient way to fine-tune and serve open-source AI models in your cloud.

You can achieve better performance using fewer compute resources by fine-tuning smaller open-source LLMs with task-specific data. We’ve used this approach to innovate customer service and believe that Predibase offers the most intuitive platform for efficiently fine-tuning LLMs for specialized AI applications.

Sami Ghoche, CTO & Co-Founder

How it works

Powerfully Efficient Fine-tuning

Fine-tune smaller, faster, task-specific models without compromising performance.

Configurable Model Training

Built on top of Ludwig, the popular open-source declarative ML framework, Predibase makes it easy to fine-tune popular open-source models like Llama-2, Mistral, and Falcon through a configuration-driven approach. Simply specify the base model, dataset, and prompt template, and the system handles the rest. Advanced users can adjust any parameter such as learning rate or temperature with a single command.

    # Kick off the fine-tune job
    adapter = pb.finetuning.jobs.create(
        config={
            "base_model": "meta-llama/Llama-2-13b",
            "epochs": 3,
            "learning_rate": 0.0002,
        },
        dataset=my_dataset,
        repo="my_adapter",
        description='Fine-tune "meta-llama/Llama-2-13b" with my dataset for my task.',
    )
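
The configuration-driven idea can be pictured as a small set of defaults that user-supplied values override. The sketch below is only an illustration of that pattern: the `DEFAULTS` values, the `prompt_template` key, and the `build_config` helper are hypothetical and are not part of the Predibase or Ludwig APIs.

```python
from copy import deepcopy

# Hypothetical defaults, for illustration only; not Predibase's actual values.
DEFAULTS = {
    "base_model": None,            # must be supplied by the user
    "epochs": 3,
    "learning_rate": 0.0002,
    "prompt_template": "{prompt}",
}


def build_config(**overrides):
    """Return a copy of DEFAULTS with overrides applied, rejecting unknown keys."""
    unknown = set(overrides) - set(DEFAULTS)
    if unknown:
        raise ValueError(f"unknown config keys: {sorted(unknown)}")
    config = deepcopy(DEFAULTS)
    config.update(overrides)
    if config["base_model"] is None:
        raise ValueError("base_model is required")
    return config


# Only the values that differ from the defaults need to be stated.
config = build_config(base_model="meta-llama/Llama-2-13b", learning_rate=0.0001)
```

Rejecting unknown keys up front means a mistyped parameter name fails immediately instead of being silently ignored.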

Cost-Effective Model Serving

Put 100s of fine-tuned models into production for the cost of serving one.

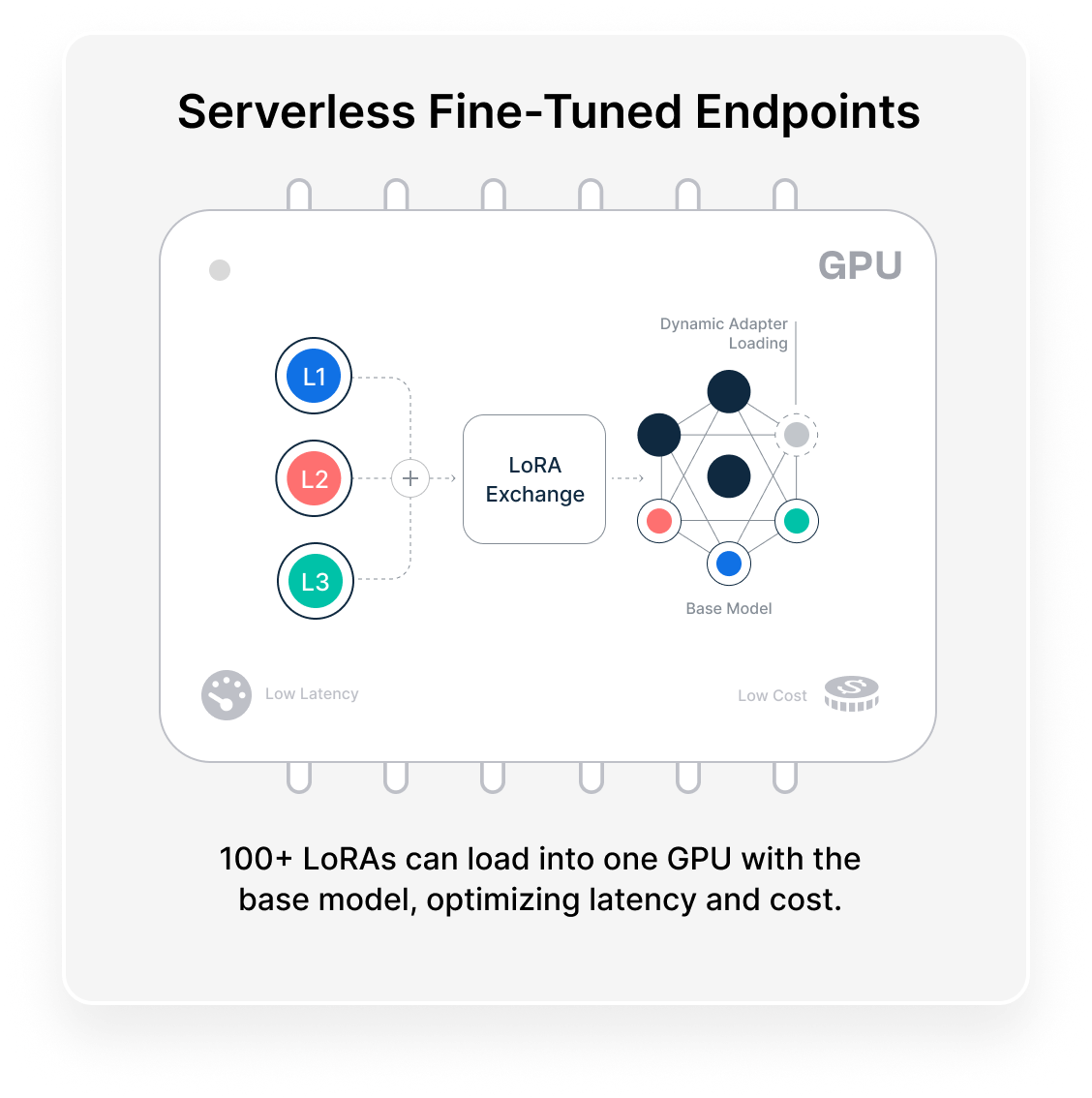

Serverless Fine-Tuned Endpoints

With Serverless Fine-Tuned Endpoints, you no longer need to deploy your fine-tuned adapters and base model in a dedicated GPU deployment. Predibase offers serverless endpoints for open-source LLMs and, using LoRAX and horizontal scaling, serves customers’ fine-tuned models at scale at a fraction of the overhead of dedicated deployments.
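
To see why many fine-tuned adapters can share one deployment, it helps to recall how a LoRA adapter works: the base weight stays fixed and each adapter adds only a small low-rank correction. The toy sketch below illustrates that arithmetic with tiny matrices in pure Python. It is not how LoRAX is implemented, and the adapter names, weights, and `forward` function are made up for the example.

```python
# Toy illustration: one shared base weight, many small LoRA adapters.
# All values and names here are hypothetical.

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


# Shared base weight (2x2), loaded once and reused by every request.
BASE_W = [[1.0, 0.0], [0.0, 1.0]]

# Each adapter is a rank-1 pair: A projects down (1x2), B projects up (2x1).
ADAPTERS = {
    "support-bot": {"A": [[1.0, 1.0]], "B": [[0.5], [0.0]], "scale": 1.0},
    "summarizer": {"A": [[0.0, 2.0]], "B": [[0.0], [1.0]], "scale": 0.5},
}


def forward(x, adapter_id=None):
    """Compute W x, plus scale * B (A x) when an adapter is requested."""
    y = matvec(BASE_W, x)
    if adapter_id is None:
        return y
    adapter = ADAPTERS[adapter_id]
    low_rank = matvec(adapter["A"], x)        # project down to rank r
    delta = matvec(adapter["B"], low_rank)    # project back up
    return [yi + adapter["scale"] * di for yi, di in zip(y, delta)]


x = [1.0, 2.0]
base_out = forward(x)
support_out = forward(x, "support-bot")
summary_out = forward(x, "summarizer")
```

Because each adapter stores only the small `A` and `B` matrices, adding another fine-tuned model costs far less memory than loading another copy of the base weights.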

We see clear potential to improve the outcomes of our conservation efforts using customized open-source LLMs to help our teams generate insights and learnings from our large corpus of project reports. It has been a pleasure to work with Predibase on this initiative.

Dave Thau, Global Data and Technology Lead Scientist, WWF

Secure Private Deployments

Build in your environment and serve anywhere on world-class serverless cloud infrastructure.

Flexible, Secure Deployments

Deploy models within your private cloud environment or the secure Predibase AI cloud with support across regions and all major cloud providers including Azure, AWS, and GCP. No need to share your data or surrender model ownership.

Learn More

Read the Report

Insights from 150 developers and data scientists on the top challenges with LLMs and how to overcome them.

Watch the Webinar

Learn when and how to efficiently fine-tune open-source LLMs on your data in your own cloud, including best practices like LoRA.

Read the Blog

Learn about this new deployment approach that dynamically serves many fine-tuned LLMs on a single GPU at no additional cost.