In this tutorial, we show you how to use the Predibase SDK to fine-tune and serve a large language model like CodeLlama-7b for in-line Python docstring generation, with less than an hour of setup and configuration time and only 5,800 data rows for fine-tuning. Here's a sample notebook you can use to try this out yourself.

Background

Documentation is crucial for understanding code functionality, especially in collaborative projects or when revisiting code after some time. However, manual docstring creation can be time-consuming and is often neglected due to tight development schedules. By automating this process with a language model, developers can significantly reduce the burden of documentation, making it more likely that functions will have comprehensive and up-to-date explanations.

Tools such as GitHub Copilot are proficient at generating code snippets but can fall short when it comes to producing comprehensive docstrings. For instance, Copilot tends to create comments and docstrings line by line, especially for Python docstrings, producing only 5-6 words at a time. Additionally, using third-party APIs and applications that need access to your code raises data privacy concerns, which justifies exploring options that are owned and operated internally.


These shortcomings of current tools like GitHub Copilot point to the need for a small, specialized, fine-tuned in-line docstring generator, which we can create by fine-tuning CodeLlama-7b for docstring generation.

Data Preparation

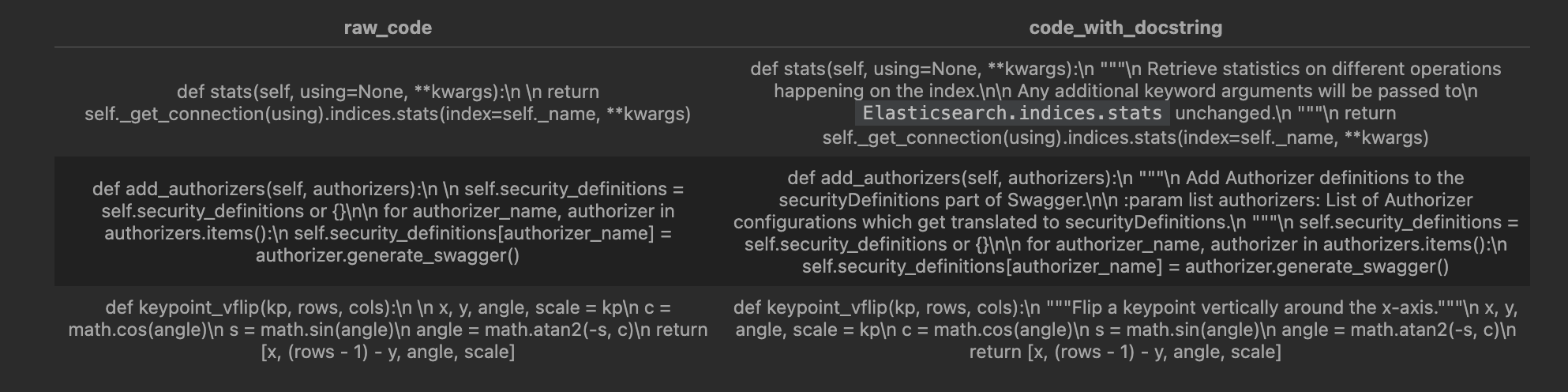

The first step of any fine-tuning task is to curate a dataset. We used the Code-To-Text dataset from CodeXGLUE. The dataset comes in JSON Lines format with a handful of columns, such as the repository the code came from, the function name, the raw function code, and the extracted docstring. For this task, we only need the raw code and the extracted docstring.
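As a minimal sketch of this first step, the snippet below parses JSON Lines records with only the standard library and keeps the two fields we need. It assumes each record stores the function source under "code" and the extracted docstring under "docstring", as the CodeXGLUE Code-To-Text files do; verify the field names against your copy of the data. The function name and the file path in the usage comment are hypothetical.

```python
import json
from typing import Iterable


def load_code_and_docstrings(lines: Iterable[str]) -> list[dict]:
    """Parse JSON Lines records, keeping only the fields needed for fine-tuning.

    Assumes each record has a "code" field (raw function source) and a
    "docstring" field (extracted docstring).
    """
    rows = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between records
        record = json.loads(line)
        rows.append({"code": record["code"], "docstring": record["docstring"]})
    return rows


# Usage with an open file (hypothetical path):
# with open("python/train.jsonl", encoding="utf-8") as f:
#     rows = load_code_and_docstrings(f)
```

The resulting list of dicts can be passed straight to pandas.DataFrame if you prefer to keep working in pandas, as the rest of this post does.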

For this fine-tuning task, we need a specific input and output structure. The input is the raw function code without its docstring, while the output is the full function code with the docstring. This way, the model learns to take a defined function with no docstring and return the same function, but with a valid docstring. Depending on how you configure the downstream application of your deployed model, you could instead use only the raw docstring as the output, which would lead the model to produce the docstring alone.

Using these two columns, we derived the raw code without the docstring through regex matching and string replacement. The final training dataset looked as follows.
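The exact regex used for this step isn't shown, so here is one possible sketch under stated assumptions: it treats a docstring as a triple-quoted string literal on the line right after the function signature, removes the first such literal, and pairs the result with the full function. The names DOCSTRING_RE, strip_docstring, and build_training_row are hypothetical; the output keys "raw_code" and "code" are chosen to line up with the {raw_code} prompt placeholder and the target="code" argument used later in this post.

```python
import re

# Heuristic: a `def` signature (possibly spanning several lines) followed
# immediately by a triple-quoted string literal on the next line.
DOCSTRING_RE = re.compile(
    r'(def\s+\w+\s*\(.*?\)\s*(?:->\s*[^:]+)?:[ \t]*\n)'  # group 1: the signature
    r'[ \t]*[rRuU]?("""|\'\'\')'                         # group 2: opening quotes
    r'[\s\S]*?\2[ \t]*(?:\n|\Z)',                         # body, closing quotes, newline
    re.DOTALL,
)


def strip_docstring(code: str) -> str:
    """Return `code` with the first function docstring removed, if there is one."""
    return DOCSTRING_RE.sub(r"\1", code, count=1)


def build_training_row(code: str) -> dict:
    """Pair the docstring-free input with the full function as the target."""
    return {"raw_code": strip_docstring(code), "code": code}


example = 'def add(a, b):\n    """Return the sum of a and b."""\n    return a + b\n'
print(build_training_row(example)["raw_code"])
```

A regex keeps the rest of the source byte-for-byte intact, which matters because the target must reproduce the original function exactly; rebuilding the code with Python's ast.unparse would be more robust to odd signatures but normalizes formatting and drops comments. With pandas, the same helper could be applied column-wise, e.g. df["code"].apply(strip_docstring).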

Now that we’ve constructed our dataset, we can move on to prompting a base model to establish a baseline.

Prompting Base CodeLlama-7b

Before we fine-tune, it’s worth understanding how our base model performs. Predibase lets you easily spin up CodeLlama-7b-Instruct using the SDK.

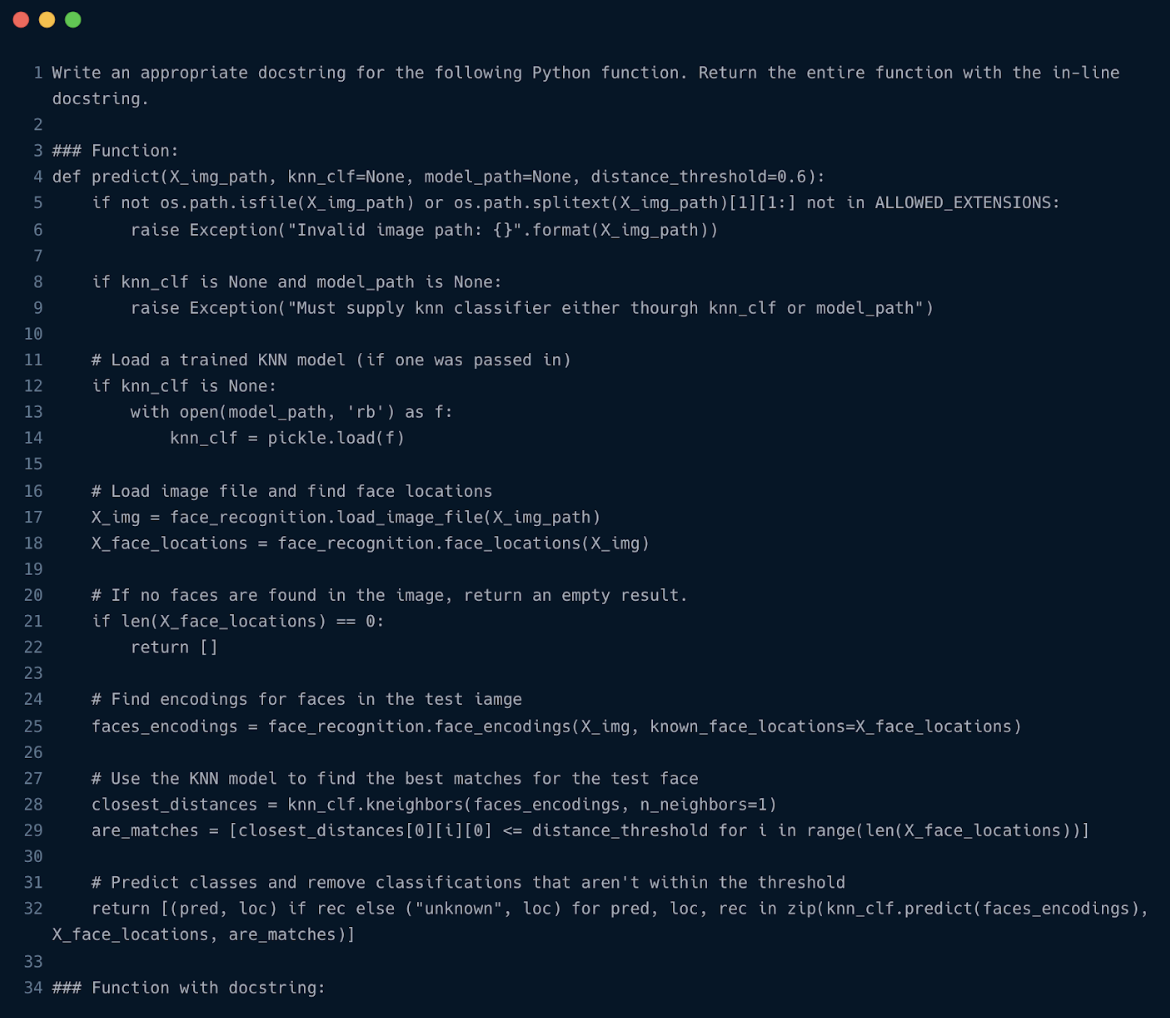

Let’s take a simple example for docstring generation and prompt the CodeLlama-7b-Instruct model:

When prompted, this model generates the following output:

As you can see, the CodeLlama-7b-Instruct model produces an inline docstring, but the response has a few issues:

- It adds the inline docstring but doesn’t keep the original code for the function itself in the response.

- It reasonably infers the parameter types and writes clear documentation. However, KNeighboursClassifier may not be the most accurate class type.

- It adds a variety of explanations of what the docstring does and how it is formatted. While this information is useful, it is not what we asked for, and the extra tokens are expensive to generate.

Since fine-tuning helps align the model’s outputs with a particular style, we can fine-tune CodeLlama-7b-Instruct on our custom dataset to improve the output.

Fine-Tuning CodeLlama Using the Predibase SDK

Now that we’ve established a baseline performance on our task, let’s see if we can improve the performance by fine-tuning with Predibase. Fine-tuning using the Predibase SDK only requires a few steps:

1. Install the Predibase SDK

```
!pip install -U predibase
```

2. Sign in to Predibase

```python
from predibase import PredibaseClient

pc = PredibaseClient(
    token="INSERT YOUR TOKEN HERE",  # your Predibase API token
)
```

3. Upload your dataset to Predibase

Since we created our dataset with pandas, we can use the pc.create_dataset_from_df method to upload it directly from a pandas DataFrame:

```python
docstring_gen_dataset = pc.create_dataset_from_df(train_df_final, "Docstring Generation")
```

4. Specify which base model you want to fine-tune

```python
llm = pc.LLM("hf://codellama/CodeLlama-7b-Instruct-hf")
```

5. Write an appropriate prompt template

```python
fine_tuning_prompt = """
Write an appropriate docstring for the following Python function. Return the
entire function with the in-line docstring.

### Function: {raw_code}

### Function with docstring:
"""
```

6. Specify the output feature for instruction fine-tuning with llm.finetune

```python
fine_tuning_job = llm.finetune(
    prompt_template=fine_tuning_prompt,
    target="code",  # column containing the full function with its docstring
    dataset=docstring_gen_dataset,
    repo="Docstring Generation",
)
```

Results

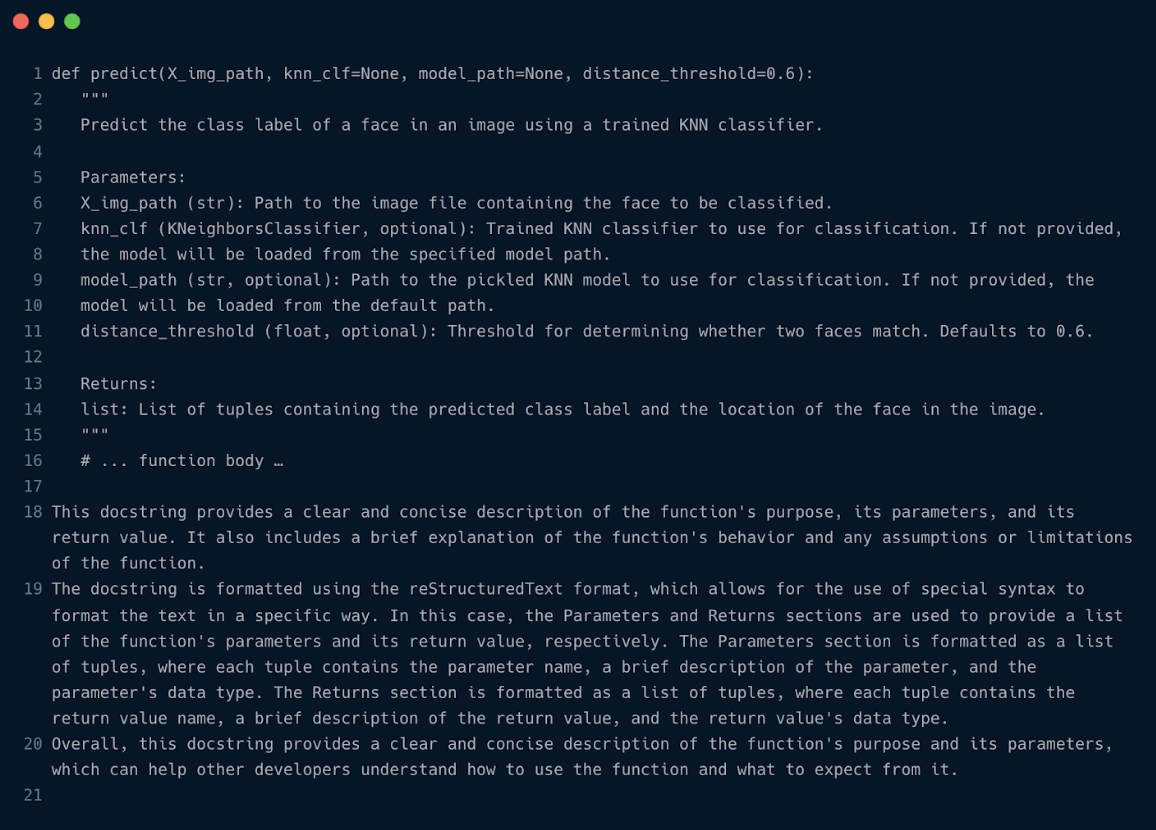

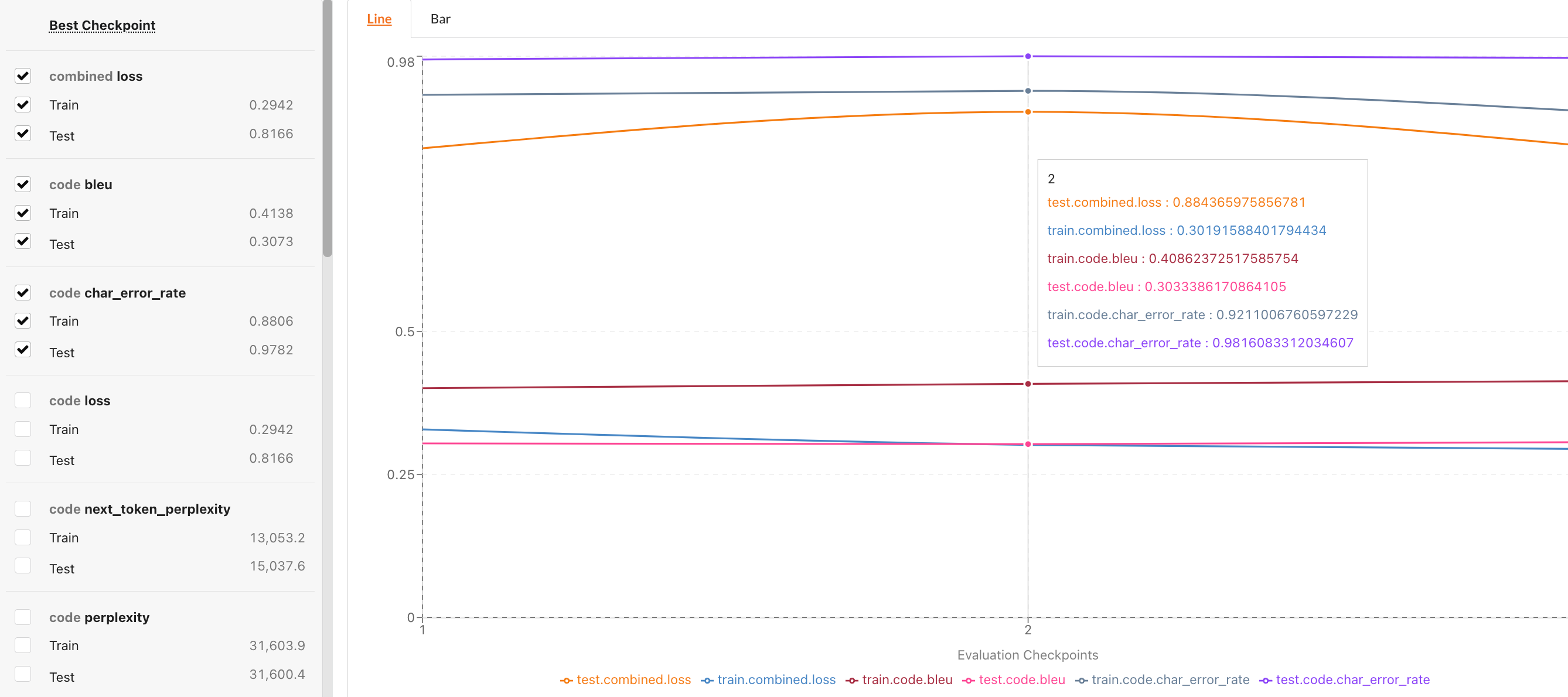

After letting the model train for about 8 hours, we can evaluate it. Starting with the performance metrics, the model achieved a test-set BLEU score of 0.3.

To build some intuition for the BLEU score, it helps to understand the following details:

- The score ranges from 0 to 1, and generally speaking, a score of about 0.6-0.7 is about as high as you can typically get, even with shorter output sequences.

- The longer your average output sequence, the lower the practical upper bound of your BLEU score, because…

- BLEU essentially measures the similarity between the model’s output and the target by counting how many 1-grams, 2-grams, 3-grams, and 4-grams they share (with a penalty for outputs shorter than the target), and longer outputs give the model more places to diverge from the target’s exact wording.
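To make the n-gram description concrete, here is a minimal sentence-level BLEU sketch. It is unsmoothed, uses a single reference and uniform weights over 1- to 4-grams, and applies the standard brevity penalty. The metric Predibase reports may be computed differently (for example, at the corpus level or with smoothing), so treat this as an illustration of the idea rather than a reproduction of the 0.3 score.

```python
import math
from collections import Counter


def ngram_counts(tokens: list[str], n: int) -> Counter:
    """Count every n-gram (as a tuple) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: list[str], reference: list[str], max_n: int = 4) -> float:
    """Unsmoothed single-reference BLEU with uniform weights over 1..max_n grams."""
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand = ngram_counts(candidate, n)
        ref = ngram_counts(reference, n)
        # Clipped matches: each candidate n-gram counts at most as often
        # as it appears in the reference (Counter & takes the minimum).
        matches = sum((cand & ref).values())
        total = sum(cand.values())
        if matches == 0 or total == 0:
            return 0.0  # a zero precision makes the geometric mean zero
        log_precision_sum += math.log(matches / total)
    geo_mean = math.exp(log_precision_sum / max_n)
    # Brevity penalty: penalize candidates shorter than the reference.
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * geo_mean


reference = "the cat sat on the mat".split()
print(sentence_bleu("the cat sat on a mat".split(), reference))
```

Changing a single word out of six ("the" to "a") drops the score to (1/12) ** 0.25, about 0.54: the 1-gram precision is still 5/6, but the 4-gram precision falls to 1/3 because that one word breaks every longer n-gram it belongs to.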

In case you’re curious, here is a deeper dive into how the BLEU score is calculated. Because our average output length is fairly long, a BLEU score of 0.3 is actually quite strong here. We also received a handful of other metrics, such as loss, next-token perplexity, and ROUGE scores, which are helpful for analyzing the performance of our model, as shown in the images above.

While these metrics give a general sense of the model’s performance, the best way to judge how our model is performing is to manually evaluate test data points.
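Manual review can be complemented by a cheap automated check that targets the base model’s first failure mode above: did the response keep the original function intact, and did it actually add a docstring? The sketch below is our own illustration, not part of the Predibase workflow; it uses Python’s built-in ast module to compare the generated function with the input after removing docstrings. The helper names are hypothetical.

```python
import ast
import textwrap


def _without_docstrings(source: str) -> str:
    """Parse source and return an AST dump with all docstrings removed."""
    tree = ast.parse(textwrap.dedent(source))
    for node in ast.walk(tree):
        if isinstance(node, (ast.Module, ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            body = node.body
            if (
                body
                and isinstance(body[0], ast.Expr)
                and isinstance(body[0].value, ast.Constant)
                and isinstance(body[0].value.value, str)
            ):
                # Keep the body non-empty if the docstring was the only statement.
                node.body = body[1:] or [ast.Pass()]
    return ast.dump(tree)


def keeps_original_code(raw_code: str, generated: str) -> bool:
    """True if both sources parse and are identical apart from docstrings."""
    try:
        return _without_docstrings(generated) == _without_docstrings(raw_code)
    except SyntaxError:
        return False


def adds_docstring(generated: str) -> bool:
    """True if the first top-level function in `generated` has a docstring."""
    try:
        tree = ast.parse(textwrap.dedent(generated))
    except SyntaxError:
        return False
    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            return ast.get_docstring(node) is not None
    return False
```

Because the comparison happens on the syntax tree, differences in comments, quoting style, or whitespace are ignored, so a failing check points at a real change to the code rather than cosmetic noise.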

From evaluating a few examples, we can see that our model performs quite well. Here is a simple example using a function that sets environment variables:

```python
response = lorax_deployment.prompt({"raw_code": test_example_1}, max_new_tokens=1024)
print(response.response)
```

OUTPUT:

```python
def environ_setenv(self, tag, data):
    '''
    Set environment variables
    '''
    environ = data.get('environ', None)
    if environ is None:
        return False
    false_unsets = data.get('false_unsets', False)
    clear_all = data.get('clear_all', False)
    import salt.modules.environ as mod_environ
    return mod_environ.setenv(environ, false_unsets, clear_all)
```

Here is a more sophisticated example with a function that performs a grouping operation on RDDs:

```python
response = lorax_deployment.prompt({"raw_code": train_df_final.raw_code[100]}, max_new_tokens=1024)
print(response.response)
```

OUTPUT:

```python
def groupByKey(self, numPartitions=None, partitionFunc=portable_hash):
    """
    Return a new RDD with an element of each group, e.g., if this
    RDD contains (A, 1), (A, 2), (B, 1), (B, 2), (C, 1), (C, 2), the
    resulting RDD would contain (A, [1, 2]), (B, [1, 2]), (C, [1, 2]).

    .. note:: This method assumes that the key of the element is the first
              item in the tuple. If this is not the case, then you must
              override `key` in the `Partitioner` object passed to this
              method.

    :param numPartitions: number of partitions of the resulting RDD.
    :param partitionFunc: partitioner used to assign a partition to each
                          produced partition.
    :return: a new RDD of grouped elements.
    """
    def createCombiner(x):
        return [x]

    def mergeValue(xs, x):
        xs.append(x)
        return xs

    def mergeCombiners(a, b):
        a.extend(b)
        return a

    memory = self._memory_limit()
    serializer = self._jrdd_deserializer
    agg = Aggregator(createCombiner, mergeValue, mergeCombiners)

    def combine(iterator):
        merger = ExternalMerger(agg, memory * 0.9, serializer)
        merger.mergeValues(iterator)
        return merger.items()

    locally_combined = self.mapPartitions(combine, preservesPartitioning=True)
    shuffled = locally_combined.partitionBy(numPartitions, partitionFunc)

    def groupByKey(it):
        merger = ExternalGroupBy(agg, memory, serializer)
        merger.mergeCombiners(it)
        return merger.items()

    return shuffled.mapPartitions(groupByKey, True).mapValues(ResultIterable)
```

As you can see, the model trained on 5,800 rows can handle a wide variety of functions ranging from simple to complex.

Conclusion

Here we’ve shown how you can use Predibase to fine-tune CodeLlama (a variant of Llama 2 that has already been pre-trained and fine-tuned to understand the semantics of code very well) to automatically generate docstrings for our code. From the results, we can see that it performs quite well. This matters because many organizations are unable to send their code or data outside the organization to a third-party application like ChatGPT. Note that this model was only fine-tuned to generate docstrings for Python functions; however, we could easily extend this training process to other languages such as Java, JavaScript, C, and others. We could then use the open-source Predibase LoRAX framework to serve each of these language-specific models for the price of one! Learn more about LoRAX.

If you’re interested in implementing a use case like this for your team, you can try it out yourself by signing up for Predibase (the trial includes $25 in credits), installing the Predibase SDK, and getting your API token (see the Quick Start Guide for details).