This is a guest post from Ludwig community member, Alex Sherstinsky, in collaboration with Predibase engineer Arnav Garg. We thank Alex for his contributions and insights on fine-tuning.

Tutorial Overview: Fine-tuning Mistral 7B

Base versions of open-source LLMs, such as Llama-2, have proven effective at capturing the statistical structure of language but tend to perform poorly out of the box on domain-specific tasks, such as summarization. This tutorial will show you how to efficiently fine-tune the new open-source LLM from Mistral AI (“Mistral 7B”) for a summarization task, motivated by the evidence that the base model performs poorly on this task. We will use the open-source framework Ludwig to accomplish this.

Here is the output of the base Mistral 7B model before fine-tuning:

[This is the prompt]

Summarize this dialogue:

### Dialogue:

A: Hi Tom, are you busy tomorrow's afternoon?

B: I'm pretty sure I am. What's up?

A: Can you go with me to the animal shelter?.

B: What do you want to do?

A: I want to get a puppy for my son.

B: That will make him so happy.

A: Yeah, we've discussed it many times. I think he's ready now.

B: That's good. Raising a dog is a tough issue. Like having a baby ;-)

A: I'll get him one of those little dogs.

B: One that won't grow up too big;-)

A: And eat too much;-))

B: Do you know which one he would like?

A: Oh, yes, I took him there last Monday. He showed me one that he really liked.

B: I bet you had to drag him away.

A: He wanted to take it home right away ;-).

B: I wonder what he'll name it.

A: He said he'd name it after his dead hamster – Lemmy - he's a great Motorhead fan :-)))

### Synopsis: [the output below is generated by the model]

The speaker, A, calls B to ask if he can come with her to a store where animals are sold. B is surprised and wants to know why she needs him. It turns out that she is interested in buying a dog so she can give it to her son as a present. She wants to buy a puppy that will fit in with their life, so the speaker decides to buy a small dog. B tells the speaker that she will have to feed and take care of the new dog for at least ten years. B asks the speaker if he knows which dog the speaker's son liked. A tells B that she has already been to the store, and the son liked a particular dog. B tells A that the speaker's son is probably not going to let A talk him out of buying that puppy. B wonders what the new dog will be named. A tells B that the son wants to name the puppy after one of his favorite bands, Motorhead, and one of the members

This verbose “Synopsis” does not read like a good summary of the “Dialogue” posed to the model.

After we fine-tune this base (“pre-trained”) model using Ludwig.ai, we will see a response similar to the following for the same dialogue:

[this is the prompt]

Summarize this dialogue:

### Dialogue:

A: Hi Tom, are you busy tomorrow's afternoon?

B: I'm pretty sure I am. What's up?

A: Can you go with me to the animal shelter?.

B: What do you want to do?

A: I want to get a puppy for my son.

B: That will make him so happy.

A: Yeah, we've discussed it many times. I think he's ready now.

B: That's good. Raising a dog is a tough issue. Like having a baby ;-)

A: I'll get him one of those little dogs.

B: One that won't grow up too big;-)

A: And eat too much;-))

B: Do you know which one he would like?

A: Oh, yes, I took him there last Monday. He showed me one that he really liked.

B: I bet you had to drag him away.

A: He wanted to take it home right away ;-).

B: I wonder what he'll name it.

A: He said he'd name it after his dead hamster – Lemmy - he's a great Motorhead fan :-)))

### Synopsis: [the output below is generated by the model]

A wants to get a puppy for his son tomorrow. B will join A to help him with that.

The “Synopsis” now offers a concise yet informative summary of the “Dialogue”.

If you are interested in learning how to do this in less than a couple of hours for free without writing much code, and even if you are not immediately familiar with the terminology in this introduction, please read on! We will go through the setup, preparing the dataset, training the models, and validating the result. We will also share the notebooks.

But first, let us take a step back and explore the motivations for fine-tuning.

Background: The motivation for open-source LLMs and fine-tuning

The development and maturity of Generative AI, particularly Large Language Models (LLMs), have brought the promise of as yet unseen levels of automation to business applications and are making a host of new consumer experiences a reality. A lot of innovation is happening in ML, thanks to the numerous educational materials and the availability of open-source datasets, software frameworks, and affordable compute resources. Since the capabilities of LLMs were broadly demonstrated by ChatGPT on November 30, 2022, businesses have been steadily working on incorporating LLM-enabled applications into their product strategy.

Challenge to wide adoption: Customization costs

However, it is now clear that building a useful model – attaining the first “L” in “LLM” – is not only an enormous feat of engineering but also an extremely expensive undertaking requiring vast amounts of data and millions of dollars to create just a preliminary “base” ML model. One of the key cost factors is the need for hardware accelerators, which add capital expense (price per part) as well as incur operating costs due to their high energy consumption and cooling requirements.

Thus, the running assumption was that only the largest ML powerhouses with significant resources could build and own these models and sell their usage via a SaaS model to customers.

Unleashing the value of AI: Business model and technical innovation

Fortunately, it seems as though the future, in which a handful of powerful merchants have monopolized the AI market, is not a foregone conclusion. Thanks to a somewhat less restrictive business model and a string of technical innovations, companies of just about any size can take advantage of the power of LLMs cost-effectively, adding only incrementally to cloud budgets.

Meta led with the first key enabler, the business model. By releasing the “Llama 2” model as an “Open Source” LLM offering, Meta allowed anybody to use it for educational and/or commercial purposes.

Not only did this prompt (no pun intended) the other prominent vendors to follow suit, but it also spurred innovation. Crucially, having access to a large general model is not enough to make it worthwhile for a given application (e.g., support agent assistance for auto-insurance, marketing materials co-authoring for vacation cruises, etc.); these models have to be customized (or “fine-tuned”), which, done simplistically, would entail significant computational costs.

So the open-sourcing of pre-trained LLMs in and of itself did not eliminate the economic gap between companies that are hardware-rich and hardware-poor; hardware-haves could still build and customize LLMs, while hardware-have-nots would remain at a competitive disadvantage.

What happened next ignited a revolution: the techniques called LoRA (Low-Rank Adaptation) from Microsoft and QLoRA (Efficient Fine-tuning of Quantized LLMs) from the University of Washington were published, describing mechanisms for fine-tuning LLMs at a fraction of the previous memory requirements, with almost no loss of accuracy.

With these – all very recent – advances, the economics of utilizing LLMs have become approachable to many businesses. Companies can now leverage these powerful general models by applying their own proprietary datasets to fine-tune the pre-trained LLMs for their business domains. In fact, full ensembles of powerful purpose-fit models can now be produced and operated cost-effectively by organizations across a broad range of industries and sizes.

Thanks to the generous usage licenses to ML models offered by the leading vendors, and with the help of a growing category of open source software tools and commercial (“platform as a service”) product offerings (typically the former serving as the “open core” for the latter) like Predibase that fine-tune LLMs to custom, including proprietary, datasets, AI is being integrated into everything.

In terms of the business models, the landscape is hybrid, or mixed. In certain cases, the LLM vendors have their own cloud environments available for rent to either train (fine-tune) the models, or only the inference part (“model serving” to blend with the customer’s application workflow), or both (along with providing the accompanying metadata, feedback, and reports).

Essentially, within half a year of ChatGPT waking up the world to AI, new methods like LoRA and QLoRA enabled practically any ML engineer to download an open source pre-trained LLM and fine-tune it for their own use case. In fact, fine-tuning new LLMs using a free-tier Google Colab account has become a popular pastime (we are speaking for ourselves and other ML professionals, of course).

To the last point, a new open-source LLM from Mistral AI, called “Mistral 7B”, was released just over a week ago (which, though very recent, equates to dog years squared in AI progress time units). Mistral AI’s 7B parameter model is particularly interesting because it introduces several architectural innovations that let the model handle longer sequences at a lower cost and execute inference faster, while matching or outperforming older models. Mistral 7B also boasts impressive out-of-the-box performance, with a claim that it outperforms Llama-2-13B on all benchmarks and Llama-1-34B on many benchmarks.

This deep dive tutorial will show you how to easily and efficiently fine-tune this new 7-billion parameter open-source LLM for a summarization task. Before diving into the exercise, a few words are in order about why quantized models, which sacrifice numerical precision for other gains, produce excellent results, thereby making practical what was just recently out of reach for most.

The remarkable place of “Low Precision” in Machine Learning

Error tolerance has been a known property of ML systems for quite some time. In fact, errors in the form of dropout layers are introduced into modern machine learning architectures on purpose because they have been demonstrated to impart regularization properties to the network (regularization smooths the behavior of the model, enabling it to do better at predictions when presented with unseen inputs). Analyses rooted in Statistical Mechanics have also identified regularization effects inherent in the training process of deep artificial neural networks (DNNs).

Quantization is the method of representing model weights as integers with lower precision, such as using just 4 bits or 8 bits to represent a floating point number that is traditionally represented using 32 bits (this is in contrast to a reduced precision floating point format (e.g., fp16, instead of fp32 or fp64), which is just a truncation of the numerical representation of model weights).

QLoRA builds on the basic idea of quantization by introducing several innovations to save memory without sacrificing performance:

- 4-bit NormalFloat (NF4), a new data type that is information theoretically optimal for normally distributed weights;

- Double Quantization to reduce the average memory footprint by quantizing the quantization constants;

- Performing computations (matrix multiplications) in half precision fp16 format by default.

In practice, however, pre-trained model weights are not always normally distributed, which means that the NF4 format is an approximation that can result in a slight loss of precision in representing the model weights using this datatype. Additionally, since the computations are performed in fp16 instead of in the standard fp32 precision by default, some performance loss is also incurred in exchange for reduced memory pressure. That being said, our own experiments have shown that NF4 quantization with fp16 computations tends to nearly match the performance of full 16-bit fine-tuning across various tasks.

Quantization can also be viewed as a kind of error, on one hand reducing the precision to cut the memory footprint so that these large models can be trained cheaply on commodity GPUs, and on the other hand, compensating for this loss of numerical precision by contributing a regularization effect.
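As a rough illustration of the precision-for-memory trade-off described above, the following sketch applies simple symmetric "absmax" rounding to a handful of made-up weights. It is a toy under stated assumptions: this is not the NF4 scheme used by QLoRA, and the helper names `quantize_absmax`, `dequantize`, and `max_round_trip_error` are hypothetical. It only shows that fewer bits mean a coarser grid and therefore a larger round-trip error.

```python
def quantize_absmax(weights: list[float], bits: int) -> tuple[list[int], float]:
    """Map floats to signed integers with one absmax scale (toy scheme, not NF4)."""
    q_max = 2 ** (bits - 1) - 1  # 127 for 8 bits, 7 for 4 bits
    abs_max = max(abs(w) for w in weights)
    scale = abs_max / q_max if abs_max > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the integer codes."""
    return [q * scale for q in quantized]


def max_round_trip_error(weights: list[float], bits: int) -> float:
    """Largest absolute difference between a weight and its restored value."""
    quantized, scale = quantize_absmax(weights, bits)
    restored = dequantize(quantized, scale)
    return max(abs(w - r) for w, r in zip(weights, restored))


# Made-up values standing in for a few model weights.
example_weights = [0.12, -0.53, 0.31, 0.02, -0.27, 0.49]

error_8bit = max_round_trip_error(example_weights, bits=8)
error_4bit = max_round_trip_error(example_weights, bits=4)
print(f"max round-trip error at 8 bits: {error_8bit:.4f}")
print(f"max round-trip error at 4 bits: {error_4bit:.4f}")
```

The 4-bit error is noticeably larger than the 8-bit one, which is the "error" that the paragraph above treats as a tolerable, regularization-like perturbation.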

Tutorial: Fine-tuning Mistral 7B with Ludwig in Colab

We will now adopt the template laid out in this Google Colab notebook to fine-tune the new Mistral 7B LLM on the summarization task using the Linux Foundation-supported Ludwig, an open-source software (OSS) framework designed specifically for building custom AI models, like LLMs and other DNNs. The motivation for choosing Ludwig is that its “low-code” declarative configuration-centric methodology dramatically simplifies the highly detailed and complex task of fine-tuning. You can also check out the DeepLearning.ai webinar, “Efficient Fine-Tuning of Llama-v2-7b,” and the related notebook for the guiding principles behind this work.

Mistral 7B fine-tuning scenarios covered in this tutorial

We will use Ludwig to demonstrate two separate scenarios (both Google Colab notebooks are available at the end of this tutorial):

- Fine-tuning Mistral 7B LLM on a summarization task in the free tier Google Colab notebook and publishing the QLoRA adapter weights to HuggingFace Hub and Predibase.

- Repeating the above task on a paid Google Colab Pro instance (with the A100 GPU) and publishing the complete self-contained fine-tuned model weights to HuggingFace Hub and Predibase.

Once the fine-tuned weights are published, you can run inference through HuggingFace APIs as demonstrated in this notebook, through Ludwig, or a highly optimized and scalable managed inference solution, such as Predibase.

The process for realizing both of the above scenarios is the same:

- Load the custom dataset (if you prefer not to, you can even omit splitting it into training, evaluation, and test portions because Ludwig will do it for you under the hood!).

- Prepare the Ludwig configuration – this is the primary step, requiring attention to detail.

- Run the Ludwig model training API call and observe how the loss decreases.

- Validate the trained model and save it to HuggingFace Hub and Predibase.

- Load the model from your HuggingFace Hub account afresh, or through Predibase’s SDK, and make sure you can run inference on it and confirm that its predictions are of good quality.

A Note on the style of this tutorial

The conversation that follows will be high on YAML and low on Python. While this may seem unusual for introducing code that runs in a notebook, the point is to showcase the “low-code” aspects of the Ludwig framework. While there will be some Python to run a utility, kick off training, run prediction, and a few other tasks, we will primarily communicate via the language of configuration. This underscores the strength of Ludwig: it accepts a minimal set of declarative directives from the user through the specified configuration and fills in the gaps using AutoML-like techniques to arrive at optimal system execution settings.

Of course, the notebooks will tie the entire workflow together using Python (links at the bottom).

Ludwig Configuration Format

For compatibility with LLMs, Ludwig 0.8 introduced a new model_type called llm and a new keyword base_model that must be specified to indicate the LLM name that one wants to use.

The top-level structure of Ludwig configuration for LLM fine-tuning is (expressed in YAML):

model_type: llm

base_model: account/model_name

input_features:

output_features:

prompt:

adapter:

quantization:

trainer:

Consult the Ludwig Large Language Models configuration documentation for an example.

Going forward, we will build the Ludwig configuration for fine-tuning with the Mistral 7B model:

model_type: llm

# base_model: mistralai/Mistral-7B-v0.1

base_model: alexsherstinsky/Mistral-7B-v0.1-sharded

SAMSum dataset

We will use the SAMSum dataset to fine-tune Mistral 7B for a summarization application. This dataset contains two main columns: “dialogue” (long) and the ground-truth “summary” (concise); we download it as follows:

import datasets

from datasets import load_dataset, Dataset, DatasetDict

samsum_dataset_dict: DatasetDict = datasets.load_dataset(

"samsum"

)

samsum_dataset_dict

DatasetDict({
    train: Dataset({
        features: ['id', 'dialogue', 'summary'],
        num_rows: 14732
    })
    test: Dataset({
        features: ['id', 'dialogue', 'summary'],
        num_rows: 819
    })
    validation: Dataset({
        features: ['id', 'dialogue', 'summary'],
        num_rows: 818
    })
})

This imports the dataset from HuggingFace’s Dataset Hub. In the very near future, Ludwig will also support using HuggingFace Datasets directly from within Ludwig, so you will not have to process your dataset externally – you will just be able to specify the path as "hf://samsum".

Suppose that for fine-tuning we just want to use 1,000 examples. There are a couple of ways to provide this dataset to Ludwig for training. Since there are more than enough examples in the “train” section of the original SAMSum dataset, we can just select any 1,000 of them and tell Ludwig to split them randomly into the 90%, 5%, and 5% proportion (training, evaluation, and test, respectively). The preprocessing section of Ludwig's configuration captures these directives (we use YAML and convert it to a Python dictionary before giving it to Ludwig):

preprocessing:
  split:
    type: random
    probabilities: [0.9, 0.05, 0.05]

At this juncture, we should also create an evaluation dataset (e.g., using the following method):

import pandas as pd

test_dataset: Dataset = samsum_dataset_dict["test"]
df_test: pd.DataFrame = test_dataset.to_pandas()
# Sample 10 test examples; the fixed random_state makes the selection reproducible.
df_evaluation: pd.DataFrame = df_test.sample(n=10, random_state=200)

We can later use this to run inference on the trained model to validate prediction quality (here, by looking at the predictions manually).
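To picture what the random 90/5/5 split directive does to the 1,000 selected examples, here is a minimal sketch using only the standard library. It is an illustration, not Ludwig's implementation; the function name `random_split` and the seed are hypothetical.

```python
import random


def random_split(num_rows: int, probabilities: list[float], seed: int = 0):
    """Shuffle row indices and cut them into training, evaluation, and test parts."""
    indices = list(range(num_rows))
    random.Random(seed).shuffle(indices)
    train_end = round(probabilities[0] * num_rows)
    eval_end = train_end + round(probabilities[1] * num_rows)
    return indices[:train_end], indices[train_end:eval_end], indices[eval_end:]


train_rows, eval_rows, test_rows = random_split(1000, [0.9, 0.05, 0.05])
print(len(train_rows), len(eval_rows), len(test_rows))
```

Each row lands in exactly one part, so the training portion used later in this tutorial holds about 900 of the 1,000 examples.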

Basic LLM Specifications

Every Ludwig model is based on a config, which requires at least one input feature and one output feature to be defined. For example:

input_features:
  - name: dialogue
    type: text
output_features:
  - name: summary
    type: text

This example is a simple Ludwig config that tells Ludwig to use the column called dialogue in our dataset as an input feature and the summary column in our dataset as an output feature. This is the simplest Ludwig config we can define - it's just six lines and works out of the box!

A remark about the size of the base Mistral 7B model on HuggingFace

Please note that in the configuration above, the official base_model line is commented out, and our own is specified instead. The reason is that the free and Pro Google Colab accounts do not have enough CPU RAM to download the official model, whose weights are split into two pickled files (this would be a non-issue if the Mistral AI team uploaded safetensors weights for the model, since CPU RAM would be cleared once the weights for each shard are placed on the GPU). In order to be able to download the model in a resource-constrained notebook, we first shard the original model into smaller files of at most 2GB each. This approach makes the download work in Colab without issues.

The code we used for sharding the original model is:

import torch
from transformers import (
    AutoModelForCausalLM,
    AutoTokenizer,
    LlamaTokenizerFast,
    MistralForCausalLM,
)

original_base_model_tokenizer: LlamaTokenizerFast = AutoTokenizer.from_pretrained(
    pretrained_model_name_or_path="mistralai/Mistral-7B-v0.1",
    trust_remote_code=True,
    padding_side="left"
)
original_base_model: MistralForCausalLM = AutoModelForCausalLM.from_pretrained(
    pretrained_model_name_or_path="mistralai/Mistral-7B-v0.1",
    device_map="auto",
    torch_dtype=torch.float16,
    offload_folder="offload",
    trust_remote_code=True,
    low_cpu_mem_usage=True
)
# Re-save the weights as shards of at most 2GB each and push them to the Hub.
original_base_model.save_pretrained(
    save_directory="alexsherstinsky/Mistral-7B-v0.1-sharded",
    max_shard_size="2GB",
    push_to_hub=True
)
original_base_model_tokenizer.save_pretrained(
    save_directory="alexsherstinsky/Mistral-7B-v0.1-sharded",
    legacy_format=False,
    push_to_hub=True
)

This preparation step can be done entirely from one’s development machine, outside of the Google Colab notebook; this provides the advantage of speed and the savings of compute units. However, one must ensure that the development machine has sufficient RAM (the environment used for the present experimentation is a MacBook Pro M1 Max with 64GB RAM). Please note that transformers>=4.34.0 is required for this operation (to accommodate “Mistral 7B”).

Baseline evaluation: Inference on original Mistral 7B LLM

Before we fine-tune Mistral 7B for the summarization task, it is helpful to run a prediction on this (sharded) base model to gauge any improvements due to the custom dataset. In order to do this, we will set up the test prompt now; it will be reused to test the fine-tuned model.

samsum_prompt_template: str = """

Summarize this dialogue:

### Dialogue: {dialogue}

### Synopsis:

"""

samsum_test_dialogue: str = """

A: Hi Tom, are you busy tomorrow’s afternoon?

B: I’m pretty sure I am. What’s up?

A: Can you go with me to the animal shelter?.

B: What do you want to do?

A: I want to get a puppy for my son.

B: That will make him so happy.

A: Yeah, we’ve discussed it many times. I think he’s ready now.

B: That’s good. Raising a dog is a tough issue. Like having a baby ;-)

A: I'll get him one of those little dogs.

B: One that won't grow up too big;-)

A: And eat too much;-))

B: Do you know which one he would like?

A: Oh, yes, I took him there last Monday. He showed me one that he really liked.

B: I bet you had to drag him away.

A: He wanted to take it home right away ;-).

B: I wonder what he'll name it.

A: He said he’d name it after his dead hamster – Lemmy - he's a great Motorhead fan :-)))

"""

samsum_test_prompt: str = samsum_prompt_template.format(
    **{"dialogue": samsum_test_dialogue}
)

Next, we load the pre-trained sharded Mistral 7B LLM:

from transformers import BitsAndBytesConfig

tokenizer: LlamaTokenizerFast = AutoTokenizer.from_pretrained(
    pretrained_model_name_or_path="alexsherstinsky/Mistral-7B-v0.1-sharded",
    trust_remote_code=True,
    padding_side="left"
)
# 4-bit NF4 quantization with double quantization and fp16 compute.
bnb_config_4bit: BitsAndBytesConfig = BitsAndBytesConfig(
    load_in_4bit=True,
    load_in_8bit=False,
    llm_int8_threshold=6.0,
    llm_int8_has_fp16_weight=False,
    bnb_4bit_compute_dtype="float16",
    bnb_4bit_use_double_quant=True,
    bnb_4bit_quant_type="nf4",
)
base_model: MistralForCausalLM = AutoModelForCausalLM.from_pretrained(
    pretrained_model_name_or_path="alexsherstinsky/Mistral-7B-v0.1-sharded",
    device_map="auto",
    torch_dtype=torch.float16,
    offload_folder="offload",
    trust_remote_code=True,
    low_cpu_mem_usage=True,
    quantization_config=bnb_config_4bit
)

and run inference on it with the one sample samsum_test_prompt defined above:

import transformers
from transformers import TextGenerationPipeline

sequences_generator: TextGenerationPipeline = transformers.pipeline(
    task="text-generation",
    tokenizer=tokenizer,
    model=base_model,
    torch_dtype=torch.float16,
    device_map="auto"
)
sequences: list[dict] | list[list[dict]] = sequences_generator(
    text_inputs=samsum_test_prompt,
    do_sample=True,
    top_k=50,
    num_return_sequences=1,
    eos_token_id=tokenizer.eos_token_id,
    max_length=512,
    return_text=True
)
sequence: dict = sequences[0]
print(f'\n[GENERATED_TEXT] BASE_MODEL_PREDICTION:\n{sequence["generated_text"]}')

When we run these commands, we obtain a low-quality result similar to the one shown earlier. This suggests that fine-tuning has the potential to help guide the model in the direction of solving this task effectively and also means that getting the hyperparameters right makes a difference.

We now resume the process of filling out the Ludwig configuration for the fine-tuning phase.

Input/Output dataset features specifications configuration

While under the hood, the features for ML model training are embeddings or statistics representing the data, Ludwig hides this complexity and allows users to specify human-readable parts of the data as features. For the present LLM fine-tuning use case, the input is the dialogue column, and the ground truth output is the summary column; this is specified below:

input_features:

- name: dialogue

type: text

preprocessing:

max_sequence_length: 1024

output_features:

- name: summary

type: text

preprocessing:

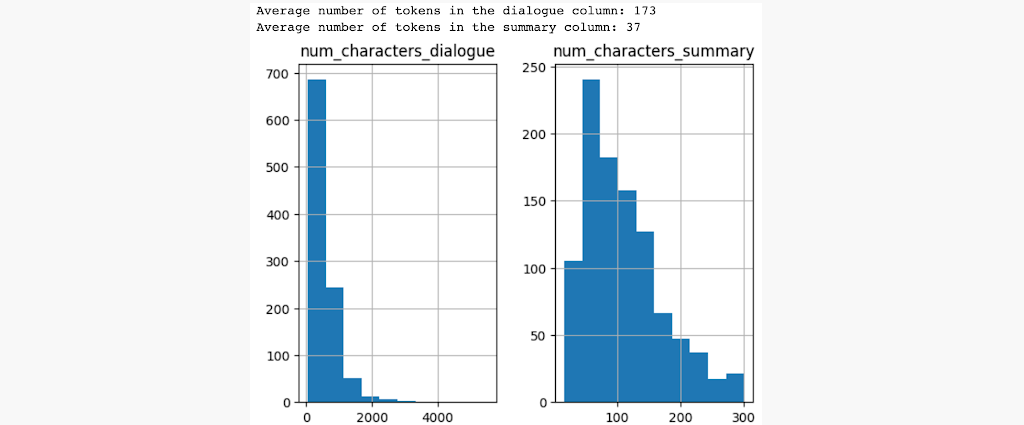

max_sequence_length: 384An important consideration is the maximum number of tokens as it impacts the size of the attention computation, which is very expensive both in terms of time and compute required. A good way to determine the token count limits is to compute the average token counts in the input and output columns and decide accordingly. The accompanying notebooks will calculate and plot this for the SAMSum dataset, justifying the above choices:

The accompanying notebooks calculate and plot the average token counts in the input and output columns for the SAMSum dataset.

Qualitatively, we want to process as much of the input “dialogue” content as possible while also allocating a sufficient number of tokens for the “summary” output to be brief and clear.
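One way to arrive at such limits is to look at the distribution of token counts per column. The sketch below uses a whitespace split as a stand-in tokenizer purely to stay self-contained; the notebooks rely on the model's actual tokenizer, so real counts will typically be higher. The function name `token_count_stats` and the sample texts are hypothetical.

```python
import math
import statistics


def token_count_stats(texts, tokenize=str.split):
    """Return mean, 95th percentile (nearest rank), and max token counts."""
    counts = sorted(len(tokenize(text)) for text in texts)
    rank = max(1, math.ceil(0.95 * len(counts)))
    return {
        "mean": statistics.mean(counts),
        "p95": counts[rank - 1],
        "max": counts[-1],
    }


# A few short made-up dialogues; a real run would pass the whole dataset column.
sample_dialogues = [
    "A: Hi Tom, are you busy tomorrow? B: I'm pretty sure I am.",
    "Don: Do you see me online? Alfred: Nope",
    "A: I want to get a puppy for my son. B: That will make him so happy.",
]
dialogue_stats = token_count_stats(sample_dialogues)
print(dialogue_stats)
```

Passing a real tokenizer's tokenize function as the `tokenize` argument gives the counts that matter for `max_sequence_length`; choosing a limit near a high percentile rather than the mean truncates fewer examples.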

Training method for LLM fine-tuning: Prompt configuration

Since the fundamental mechanism of the Transformer architecture (based on which LLMs are implemented) is predicting the next token, textual prompts have become a practical way to influence the training flow toward the desired direction. We will use a simple prompt structure to train the model and validate the quality of results.

prompt:
  template: >-
    Summarize this dialogue:

    ### Dialogue: {dialogue}

    ### Synopsis:

One can readily enrich this prompt with a “system” message to nudge the training process to pay attention to advantageous details. This approach is usually considered good practice since the prompts help steer the LLM towards your task by giving it information about the task itself as well as the domain of your task.

QLoRA adapter configuration for the free Google Colab account

We now give the instructions to Ludwig for setting up the underlying Transformer architecture in a way that makes the first use case (fine-tuning execution carried out in the free Google Colab account with the T4 GPU) feasible, including the LoRA layers and quantizing the weights:

adapter:
  type: lora
quantization:
  bits: 4

These four lines of YAML look deceptively simple, but that is the beauty of Ludwig! The fact that underneath lies brilliant science, implemented through carefully crafted code using multiple software libraries, is nice to appreciate; importantly, Ludwig encapsulates this complexity to make the essential decision points easy to declare via configuration parameters. Note that here we use a 4-bit quantization; going from 32-bit to 4-bit normalized float representation seems drastic, but, as we will see during the validation phase, the trained model will perform very well!
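A back-of-the-envelope calculation suggests why 4-bit weights and LoRA matter on a GPU with 16 GB of memory such as the T4. The numbers below are assumptions for illustration: roughly 7.2 billion parameters for Mistral 7B, a hidden size of 4096, and a LoRA rank of 8. The estimate covers weights only and ignores activations, optimizer state, and quantization constants; both helper functions are hypothetical.

```python
def weight_memory_gib(num_parameters: float, bits_per_weight: int) -> float:
    """Memory needed to hold the weights alone, in GiB."""
    return num_parameters * bits_per_weight / 8 / 1024**3


def lora_trainable_parameters(d_in: int, d_out: int, rank: int) -> int:
    """LoRA learns two factors, (d_out x rank) and (rank x d_in), instead of d_out x d_in."""
    return rank * (d_in + d_out)


NUM_PARAMETERS = 7.2e9  # assumed approximate parameter count
for bits in (32, 16, 4):
    print(f"{bits:>2}-bit weights: ~{weight_memory_gib(NUM_PARAMETERS, bits):.1f} GiB")

HIDDEN_SIZE = 4096  # assumed width of one square projection matrix
LORA_RANK = 8       # assumed rank
full_projection = HIDDEN_SIZE * HIDDEN_SIZE
lora_factors = lora_trainable_parameters(HIDDEN_SIZE, HIDDEN_SIZE, LORA_RANK)
print(
    f"full projection: {full_projection:,} weights; "
    f"LoRA factors: {lora_factors:,} ({lora_factors / full_projection:.2%})"
)
```

Under these assumptions the 4-bit weights take only a few GiB, leaving room for activations and the small set of trainable LoRA factors, whereas 32-bit weights alone would exceed the card's memory.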

Training configuration parameters

The 4-bit quantization is one essential setting required for the Mistral 7B fine-tuning computation to fit into the T4 GPU that comes with the free Google Colab notebook tier. Additionally, the “trainer” section lets one define training parameters which control the learning process:

trainer:
  type: finetune
  epochs: 5
  batch_size: 1
  eval_batch_size: 2
  gradient_accumulation_steps: 16  # effective batch size = batch size * gradient_accumulation_steps
  learning_rate: 2.0e-4
  enable_gradient_checkpointing: true
  learning_rate_scheduler:
    decay: cosine
    warmup_fraction: 0.03
    reduce_on_plateau: 0

Ludwig provides several nice out-of-the-box training innovations for LLM fine-tuning:

- Large effective batch sizes via gradient accumulation: This method allows training LLMs with limited GPU memory by breaking the dataset into smaller batches, computing gradients for these batches, and then accumulating them before performing weight updates. It increases the effective batch size without requiring significantly higher GPU memory, enabling the training of large models without compromising training stability and convergence speed.

- Gradient checkpointing: Gradient checkpointing efficiently manages memory by selectively recomputing intermediate activations during the backward pass rather than storing them all in memory, which would require another O(model parameters) amount of memory. Gradient checkpointing somewhat increases training time, but typically frees up ~40% of the GPU’s memory. This additional GPU memory can be particularly beneficial when fine-tuning LLMs with very large sequence lengths. It is what enables us to set the combined max_sequence_length for the input and output features to 1408 tokens for the SAMSum dataset (1024 input + 384 output) and train over a larger portion of it, compared to only about 512 total tokens without gradient checkpointing enabled. This lets us process nearly 3x the total number of tokens!

- Learning rate decay: Learning rate decay is vital for balancing fast convergence and stable optimization in deep learning. Cosine annealing is a specific decay strategy that smoothly reduces the learning rate in a cosine-shaped manner during training epochs. It includes a warmup phase, where the learning rate gradually increases, stabilizing early training stages and aiding the model in exploring the loss landscape effectively, potentially leading to improved model performance on unseen data.

Ludwig also supports other fine-tuning optimizations, such as using paged and 8-bit optimizers to further reduce GPU memory pressure and prevent GPU OOMs during fine-tuning.
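The gradient accumulation and learning rate settings in the trainer section can be made concrete with a little arithmetic. The sketch below implements a generic linear-warmup-plus-cosine-decay schedule, which is not necessarily the exact formula Ludwig uses; `learning_rate_at` is a hypothetical helper, and the 900 training examples assume the 90% share of the 1,000 selected rows.

```python
import math

BATCH_SIZE = 1
GRADIENT_ACCUMULATION_STEPS = 16
EPOCHS = 5
BASE_LEARNING_RATE = 2.0e-4
WARMUP_FRACTION = 0.03
NUM_TRAINING_EXAMPLES = 900  # assumed: 90% of 1,000 rows

# One weight update happens after this many examples have been processed.
effective_batch_size = BATCH_SIZE * GRADIENT_ACCUMULATION_STEPS
updates_per_epoch = math.ceil(NUM_TRAINING_EXAMPLES / effective_batch_size)
total_updates = updates_per_epoch * EPOCHS
warmup_updates = max(1, round(WARMUP_FRACTION * total_updates))


def learning_rate_at(update: int) -> float:
    """Linear warmup to the base rate, then cosine decay towards zero."""
    if update < warmup_updates:
        return BASE_LEARNING_RATE * (update + 1) / warmup_updates
    progress = (update - warmup_updates) / max(1, total_updates - warmup_updates)
    return 0.5 * BASE_LEARNING_RATE * (1 + math.cos(math.pi * progress))


print(f"effective batch size: {effective_batch_size}")
print(f"weight updates: {total_updates} (warmup: {warmup_updates})")
for update in (0, warmup_updates, total_updates // 2, total_updates - 1):
    print(f"update {update:>3}: learning rate {learning_rate_at(update):.2e}")
```

With a batch size of 1, only one example's activations need to be in GPU memory at a time, while the optimizer still sees gradients averaged over 16 examples per update.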

Inference configuration parameters

When generating text during inference using a pre-trained or a fine-tuned LLM, one may often want to control the generation process, such as what token decoding strategy to use, how many new tokens to produce, which tokens to exclude, or how diverse the generated text should be. All of these can be controlled through the generation configuration section in Ludwig.

generation:
  temperature: 0.1
  max_new_tokens: 512

In Ludwig, these parameters will be used post fine-tuning at inference time to generate predictions for our dialogue summarization task. While Ludwig sets default values for all of the generation parameters, we will override the values of two specific generation parameters in our config:

- temperature: Temperature is used to control the randomness of predictions. A high temperature value (closer to 1) makes the output more diverse and random, while a lower temperature (closer to 0) makes the model's responses more deterministic and focused on the most likely outcome. In other words, temperature adjusts the probability distribution from which the model picks the next token. In our case, we use a temperature of 0.1, since we want the predicted text to be very objective (because it is a summarization task). Note that the very probabilistic nature of the models at hand is the reason for getting slightly different prediction results across multiple inference runs.

- max_new_tokens: This is the maximum number of new tokens to generate, ignoring the number of tokens in the input prompt. We set this to 512 tokens in order to ensure that the model has the ability to produce the full range of 384 tokens that we have set for our output feature during fine-tuning.
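The effect of temperature can be seen by dividing the logits by it before applying the softmax. The logits below are made up, and `softmax_with_temperature` is a hypothetical helper rather than part of Ludwig or HuggingFace.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Turn logits into next-token probabilities after temperature scaling."""
    scaled = [logit / temperature for logit in logits]
    highest = max(scaled)  # subtract the maximum for numerical stability
    exponentials = [math.exp(value - highest) for value in scaled]
    total = sum(exponentials)
    return [value / total for value in exponentials]


example_logits = [2.0, 1.5, 0.5]  # made-up scores for three candidate tokens
probabilities_default = softmax_with_temperature(example_logits, temperature=1.0)
probabilities_sharp = softmax_with_temperature(example_logits, temperature=0.1)
print([round(p, 3) for p in probabilities_default])
print([round(p, 3) for p in probabilities_sharp])
```

At a temperature of 0.1, almost all of the probability mass moves to the highest-scoring token, which is why low temperatures give more repeatable summaries.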

The full set of generation parameters supported by Ludwig can be found in documentation.

The parameters provided via the configuration are used as defaults. Any of the available generation parameters can also be overridden dynamically at inference time by passing them to model.predict() after the model is fine-tuned. For example, to increase the temperature to 0.2, just pass it in through the generation_config parameter; the overridden values apply only to that particular model.predict() call:

# model.predict() returns a tuple; its first element holds the predictions.
predictions: pd.DataFrame = model.predict(prediction_df, generation_config={"temperature": 0.2})[0]

Train the Model in the Fine-tuning Regime
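For reference, the configuration sections introduced so far can be collected into a single Python dictionary, which is the kind of object `yaml.safe_load` produces from the equivalent YAML. This is a sketch assembled from the snippets in this tutorial; the accompanying notebooks remain the authoritative version, the variable name `qlora_fine_tuning_config_sketch` is hypothetical, and the exact whitespace of the prompt template is approximate.

```python
qlora_fine_tuning_config_sketch = {
    "model_type": "llm",
    "base_model": "alexsherstinsky/Mistral-7B-v0.1-sharded",
    "input_features": [
        {"name": "dialogue", "type": "text", "preprocessing": {"max_sequence_length": 1024}},
    ],
    "output_features": [
        {"name": "summary", "type": "text", "preprocessing": {"max_sequence_length": 384}},
    ],
    "prompt": {
        # Whitespace here is approximate; {dialogue} is filled in from the input column.
        "template": "Summarize this dialogue:\n\n### Dialogue: {dialogue}\n\n### Synopsis:",
    },
    "adapter": {"type": "lora"},
    "quantization": {"bits": 4},
    "preprocessing": {
        "split": {"type": "random", "probabilities": [0.9, 0.05, 0.05]},
    },
    "generation": {"temperature": 0.1, "max_new_tokens": 512},
    "trainer": {
        "type": "finetune",  # Ludwig's trainer type for LLM fine-tuning
        "epochs": 5,
        "batch_size": 1,
        "eval_batch_size": 2,
        "gradient_accumulation_steps": 16,
        "learning_rate": 2.0e-4,
        "enable_gradient_checkpointing": True,
        "learning_rate_scheduler": {
            "decay": "cosine",
            "warmup_fraction": 0.03,
            "reduce_on_plateau": 0,
        },
    },
}

# Combined token budget across the input and output features.
total_sequence_length = sum(
    feature["preprocessing"]["max_sequence_length"]
    for section in ("input_features", "output_features")
    for feature in qlora_fine_tuning_config_sketch[section]
)
print(f"combined max_sequence_length: {total_sequence_length}")
```

Keeping the configuration in one place makes it easy to confirm that the pieces agree with each other, for example that the combined sequence length is the 1408 tokens discussed earlier.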

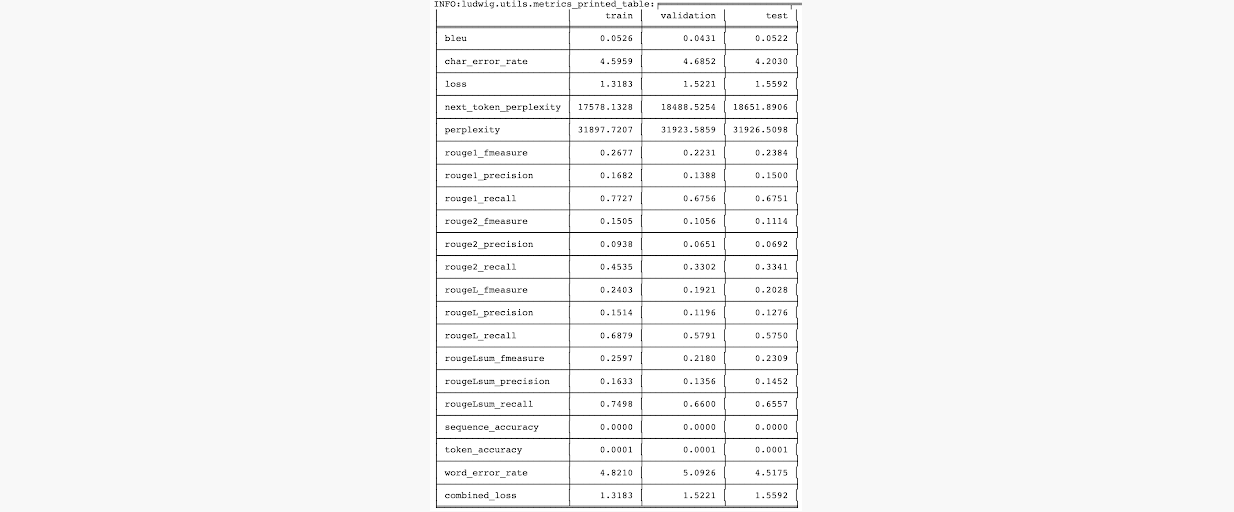

With all configuration sections specified for the first use case (“everything must be able to run in a notebook in the free Google Colab account”), we can now train the model. We set the logging level to “INFO” so that we can observe the rich and informative output (e.g., loss and relevant metrics) that Ludwig generates as the model is being trained.

    qlora_fine_tuning_config: dict = yaml.safe_load(qlora_fine_tuning_yaml)

    model: LudwigModel = LudwigModel(
        config=qlora_fine_tuning_config,
        logging_level=logging.INFO
    )

    results: TrainingResults = model.train(dataset=df_dataset)

For instance, here is a screenshot of the reported metrics after the completion of the first epoch:

Reported metrics after the completion of the first epoch.

After the training is finished, we can use the reference to the trained model in memory to check the quality of its predictions. The corresponding API method is LudwigModel.predict():

    df_predictions: pd.DataFrame = model.predict(df_evaluation)[0]

We can now print the summaries generated by the model for review as follows:

    for dialogue_with_summary in zip(df_evaluation["dialogue"], df_predictions["summary_response"]):
        print(f"Dialogue:\n{dialogue_with_summary[0]}")
        print(f"Generated Summary:\n{dialogue_with_summary[1][0]}")

Here is one such evaluation inference example:

Dialogue:

Don: Do you see me online?

Alfred: Nope

Don: Ok, something's wrong, I'll have to check my settings

Alfred: Ok

Generated Summary:

don is not online.

Save the fine-tuned Model to HuggingFace Hub

If we are satisfied with the results, we can upload the fine-tuned model to HuggingFace Hub. The last argument, --model_path, is the results folder on Google Colab, which is printed at the start of the training run.

    !ludwig upload hf_hub --repo_id "alexsherstinsky/mistralai-7B-v01-based-fine-tuned-using-ludwig-with-samsum-T4-sharded-4bit-notmerged" --model_path /content/results/api_experiment_run

Load the fine-tuned Model from HuggingFace and run inference

The last check is to make sure that we can load the model from HuggingFace Hub and run inference on it. In the very near future, this will be possible using the Ludwig API. For now, we will accomplish this step using the HuggingFace Transformers and PEFT libraries:

    # base_model and tokenizer are assumed to be loaded already (the base Mistral 7B model and its tokenizer);
    # PeftModel attaches the fine-tuned adapter weights from HuggingFace Hub to the base model.
    fine_tuned_model: PeftModelForCausalLM = PeftModel.from_pretrained(
        model=base_model,
        model_id="alexsherstinsky/mistralai-7B-v01-based-fine-tuned-using-ludwig-with-samsum-T4-sharded-4bit-notmerged"
    )

    samsum_sequences_generator_4bit: TextGenerationPipeline = transformers.pipeline(
        task="text-generation",
        tokenizer=tokenizer,
        model=fine_tuned_model,
        torch_dtype=torch.float16,
        device_map="auto"
    )

    samsum_sequences_4bit: list[dict] | list[list[dict]] = samsum_sequences_generator_4bit(
        text_inputs=samsum_test_prompt,
        do_sample=True,
        top_k=50,
        num_return_sequences=1,
        eos_token_id=tokenizer.eos_token_id,
        max_length=512,
        return_text=True
    )

    samsum_sequence_4bit: dict = samsum_sequences_4bit[0]

    print(f'\n[GENERATED_TEXT] FINE_TUNED_MODEL_PREDICTION:\n{samsum_sequence_4bit["generated_text"]}')

When we run these commands, we obtain a high-quality result, similar to the one shown earlier.

QLoRA adapter configuration for the A100 GPU Colab Notebook

We now give the instructions to Ludwig for setting up the underlying Transformer architecture in a way that supports the second use case (fine-tuning execution carried out in the Google Colab Pro account with the A100 GPU). For this, we must set the quantization to 8 bits:

    adapter:
      type: lora
      postprocessor:
        merge_adapter_into_base_model: true
        progressbar: true
    quantization:
      bits: 8

where we also added the “postprocessor” subsection, containing the parameter merge_adapter_into_base_model set to “true”, to the “adapter” section of the configuration.

The purpose of the merge capability is to allow users who may not be familiar with Ludwig to still take advantage of an LLM fine-tuned using Ludwig for their own purposes. For example, users may discover the model we will fine-tune in a moment (call it "alexsherstinsky/mistralai-7B-v01-based-fine-tuned-using-ludwig-with-samsum-A100-sharded-8bit-merged") on HuggingFace Hub and wish to run inference on it.

When an unmerged model, such as "alexsherstinsky/mistralai-7B-v01-based-fine-tuned-using-ludwig-with-samsum-T4-sharded-4bit-notmerged", is saved, the directory contains only the adapter weights. Recall from the previous section that loading this model required two calls: one to load the base model, and another to add the fine-tuned adapter to it. The difficulty with this approach is that the user may not be able to easily determine which base model the adapter belongs to, which makes the process error-prone. By contrast, when the merged model is saved, the directory contains the self-contained model (with the adapter weights already merged into the base model) along with its tokenizer.
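To illustrate what merging means, here is a toy sketch in plain Python. It assumes the standard LoRA formulation, in which the adapter contributes a low-rank update scaled by alpha / r to a frozen weight matrix; all matrices, the input vector, and the helper names are hypothetical, and the sketch ignores quantization entirely. It is not Ludwig's merge code.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


# Hypothetical frozen base weight (3x3) and rank-1 LoRA factors.
W = [[1.0, 0.0, 2.0], [0.0, 1.0, 0.0], [3.0, 0.0, 1.0]]
B = [[0.5], [0.0], [-1.0]]  # shape (3, r) with r = 1
A = [[1.0, 2.0, 0.0]]       # shape (r, 3)
alpha, r = 2.0, 1
scaling = alpha / r

x = [1.0, 1.0, 1.0]

# Unmerged: base output plus the scaled adapter output, computed separately.
base_out = matvec(W, x)
adapter_out = matvec(B, matvec(A, x))
unmerged = [b + scaling * a for b, a in zip(base_out, adapter_out)]

# Merged: fold the adapter into the weight once, then use a single matrix.
delta = matmul(B, A)
W_merged = [
    [w + scaling * d for w, d in zip(w_row, d_row)]
    for w_row, d_row in zip(W, delta)
]
merged = matvec(W_merged, x)

print("unmerged:", unmerged)
print("merged:  ", merged)
```

Both paths produce the same output, but the merged weights can be saved and loaded as an ordinary model, with no separate adapter step and no need to know which base model the adapter was trained against.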

Loading this model is accomplished via a single API call:

    tokenizer: LlamaTokenizerFast = AutoTokenizer.from_pretrained(
        pretrained_model_name_or_path="alexsherstinsky/mistralai-7B-v01-based-fine-tuned-using-ludwig-with-samsum-A100-sharded-8bit-merged",
        trust_remote_code=True,
        padding_side="left"
    )

    # Load the merged weights in 8-bit precision
    bnb_config_8bit: BitsAndBytesConfig = BitsAndBytesConfig(
        load_in_8bit=True
    )

    fine_tuned_model: MistralForCausalLM = AutoModelForCausalLM.from_pretrained(
        pretrained_model_name_or_path="alexsherstinsky/mistralai-7B-v01-based-fine-tuned-using-ludwig-with-samsum-A100-sharded-8bit-merged",
        device_map="auto",
        torch_dtype=torch.float16,
        offload_folder="offload",
        trust_remote_code=True,
        low_cpu_mem_usage=True,
        quantization_config=bnb_config_8bit
    )

and then we can run inference on this model in the usual way:

    samsum_sequences_generator_8bit: TextGenerationPipeline = transformers.pipeline(
        task="text-generation",
        tokenizer=tokenizer,
        model=fine_tuned_model,
        torch_dtype=torch.float16,
        device_map="auto"
    )

    samsum_sequences_8bit: list[dict] | list[list[dict]] = samsum_sequences_generator_8bit(
        text_inputs=samsum_test_prompt,
        do_sample=True,
        top_k=50,
        num_return_sequences=1,
        eos_token_id=tokenizer.eos_token_id,
        max_length=512,
        return_text=True
    )

    samsum_sequence_8bit: dict = samsum_sequences_8bit[0]

    print(f'\n[GENERATED_TEXT] FINE_TUNED_MODEL_PREDICTION:\n{samsum_sequence_8bit["generated_text"]}')

And here is another high-quality summarization output sample:

[this is the prompt]

Summarize this dialogue:

### Dialogue:

A: Hi Tom, are you busy tomorrow's afternoon?

B: I'm pretty sure I am. What's up?

A: Can you go with me to the animal shelter?.

B: What do you want to do?

A: I want to get a puppy for my son.

B: That will make him so happy.

A: Yeah, we've discussed it many times. I think he's ready now.

B: That's good. Raising a dog is a tough issue. Like having a baby ;-)

A: I'll get him one of those little dogs.

B: One that won't grow up too big;-)

A: And eat too much;-))

B: Do you know which one he would like?

A: Oh, yes, I took him there last Monday. He showed me one that he really liked.

B: I bet you had to drag him away.

A: He wanted to take it home right away ;-).

B: I wonder what he'll name it.

A: He said he'd name it after his dead hamster – Lemmy - he's a great Motorhead fan :-)))

### Synopsis: [the output below is generated by the model]

A wants to get a puppy for his son from the animal shelter. B will go with him.

Conclusion: Try Fine-tuning Mistral 7B on Your Own

In this blog, we provided a brief overview of the recent enabling technology advances and favorable business trends, which paved the way for any institution to utilize the power of LLMs cost-effectively while maintaining control of their computing infrastructure operating costs.

We then walked through a hands-on tutorial that illustrates how one can fine-tune an LLM for a practical application with the help of Ludwig, an OSS framework that offers an easy-to-configure declarative, “low-code” interface for setting up and guiding ML model training and execution.

We demonstrated the benefits of using Ludwig as a power tool for fine-tuning LLMs on the recently released Mistral 7B LLM (from Mistral AI). First, we showed that, thanks to its support for QLoRA, Ludwig can be used to fine-tune Mistral 7B for a summarization task free of charge. Doing so required quantizing the weights down to 4 bits; as a result, this low-memory-footprint model saves only the adapter weights, so loading it for inference requires two API calls. Second, using a paid subscription to access a higher-end GPU with more memory, we demonstrated the scenario where the fine-tuned model is saved in a self-contained way so that it can be loaded in a single API call.

Both notebooks are available, and we welcome the community to explore them:

- Fine-tuning Mistral 7B LLM on a summarization task in the free tier Google Colab (saves only QLoRA adapter weights in HuggingFace Hub):

- Fine-tuning Mistral 7B LLM on a summarization task with A100 GPU in Google Colab Pro (merges QLoRA adapter weights into the base model and saves the complete self-contained fine-tuned model weights to HuggingFace Hub):

Get started with Ludwig today: pip install -U ludwig

If you want to train and deploy LLMs on managed AI infrastructure in the cloud or your VPC using your private data, check out a free trial of Predibase!

Happy fine-tuning!

Frequently Asked Questions about Fine-Tuning Mistral 7B

What is Mistral 7B, and how does it compare to other language models?

Mistral 7B is a 7.3-billion-parameter language model based on the Transformer architecture. Mistral AI reports that it outperforms models such as Llama 2 13B on various benchmarks.

How can I fine-tune Mistral 7B on a single GPU?

Fine-tuning Mistral 7B on a single GPU can be achieved using tools like Ludwig and Hugging Face's Transformers library. Techniques such as mixed precision training and gradient accumulation can optimize memory utilization and training efficiency.
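As a rough illustration of gradient accumulation, the toy sketch below uses a one-parameter linear model in plain Python. The data, the model, and the function name `mse_gradient` are hypothetical; real frameworks apply the same idea to every trainable tensor.

```python
def mse_gradient(w, batch):
    """Gradient of the mean squared error for the 1-D model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


# Hypothetical toy data: (x, y) pairs.
data = [(1.0, 2.0), (2.0, 3.9), (3.0, 6.1), (4.0, 8.2)]
w = 0.5

# One large batch (for a real LLM this might not fit in GPU memory).
full_batch_grad = mse_gradient(w, data)

# The same effective batch, processed as equally sized micro-batches of 2.
micro_batch_size = 2
micro_batches = [
    data[i:i + micro_batch_size] for i in range(0, len(data), micro_batch_size)
]
accumulation_steps = len(micro_batches)

accumulated_grad = 0.0
for micro_batch in micro_batches:
    # Scale each micro-batch gradient so that the sum equals the full-batch mean.
    accumulated_grad += mse_gradient(w, micro_batch) / accumulation_steps

# A single optimizer step would be taken here using accumulated_grad.
print(full_batch_grad, accumulated_grad)
```

Only one micro-batch needs to be held in memory at a time, while the weight update matches that of the larger batch; the price is more forward and backward passes per optimizer step.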

What are the benefits of using Ludwig for fine-tuning language models?

Ludwig simplifies the process of fine-tuning language models by providing an accessible interface and automating many aspects of model training, making it suitable for users with varying levels of expertise.

Can I fine-tune Mistral 7B on a single consumer-grade GPU?

Yes, it is possible to fine-tune Mistral 7B on a single high-end consumer GPU (such as an NVIDIA RTX 3090), or on a single data-center GPU (such as an A100), by using techniques like low-rank adaptation (LoRA), mixed precision training, and gradient accumulation.

What are the hardware requirements for fine-tuning Mistral 7B?

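Without quantization, plan on a GPU with roughly 24GB of VRAM or more. If you have limited resources, methods like 4-bit or 8-bit quantization and parameter-efficient tuning can help reduce memory usage; the first use case in this tutorial, for example, relies on 4-bit quantization to run on the free Google Colab GPU.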
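A back-of-the-envelope calculation shows why quantization matters. The sketch below uses the 7.3 billion parameter count quoted earlier in this FAQ and counts only the memory needed to hold the weights; activations, adapter weights, optimizer state, and framework overhead are deliberately ignored, so actual usage will be higher. The function name is hypothetical.

```python
PARAMETER_COUNT = 7.3e9  # parameter count quoted earlier in this FAQ

BITS_PER_WEIGHT = {"float16": 16, "8-bit": 8, "4-bit": 4}


def weight_memory_gb(parameter_count, bits_per_weight):
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return parameter_count * bits_per_weight / 8 / 1e9


for name, bits in BITS_PER_WEIGHT.items():
    print(f"{name}: ~{weight_memory_gb(PARAMETER_COUNT, bits):.2f} GB")
```

Halving the number of bits per weight halves the memory needed for the base model, which is what makes the difference between fitting and not fitting on a smaller GPU.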

How long does it take to fine-tune Mistral 7B?

The training time depends on the dataset size, batch size, and GPU power. On a single high-end GPU, fine-tuning can take anywhere from a few hours to multiple days.
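For a rough planning estimate, the relationship between these factors can be sketched as follows. Every number in the example call is a hypothetical placeholder, not a measurement from this tutorial; in practice, the seconds-per-batch figure should be read off the progress output of a short trial run on your own hardware.

```python
import math


def estimate_training_hours(num_examples, batch_size, epochs, seconds_per_batch):
    """Estimate wall-clock training time from the number of batches processed."""
    batches_per_epoch = math.ceil(num_examples / batch_size)
    total_seconds = batches_per_epoch * epochs * seconds_per_batch
    return total_seconds / 3600


# Hypothetical inputs: 1,000 examples, batch size 2, 3 epochs, 1.5 s per batch.
hours = estimate_training_hours(
    num_examples=1000, batch_size=2, epochs=3, seconds_per_batch=1.5
)
print(f"Estimated training time: {hours:.3f} hours")
```

The estimate excludes evaluation passes, checkpointing, and model loading, so treat it as a lower bound.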