Large language models (LLMs) have exploded in popularity recently, showcasing impressive natural language generation capabilities. However, there is still a lot of mystery surrounding how these complex AI systems work and how to use them most effectively.

In this comprehensive guide, we will demystify large language models by exploring 10 key things every practitioner should understand:

1. What Exactly Are Large Language Models?

Large Language Models (LLMs) are state-of-the-art machine learning architectures specifically designed for understanding and generating human language.

LLMs are built on the transformer architecture. The designation "large" refers both to the massive amounts of text they are trained on and to their size: modern LLMs have billions of parameters. During training, they learn deep statistical representations of language by repeatedly predicting the next word (more precisely, the next token) in a sequence.

Once trained, LLMs can perform a wide range of tasks without task-specific training data, given only an instruction ("zero-shot" learning) or an instruction plus a handful of examples in the prompt ("few-shot" learning). For instance, they can answer questions, generate coherent text, translate languages, and even assist with writing code, without ever having been explicitly trained for any of these tasks.

2. Why Should You Care About LLMs?

Because LLMs have an advanced command of language, they enable a diverse array of applications, from conversational AI and content creation to programming assistance and sentiment analysis. They can generate surprisingly human-like text while answering questions, translating languages, summarizing documents, and more. Many use cases are already running in production today, including:

- Text Classification

- Information Extraction

- Content Creation

- Structured Generation

- Q&A / Search

LLMs are rapidly transforming how machines understand, generate, and interact through human language.

3. How Are LLMs Trained?

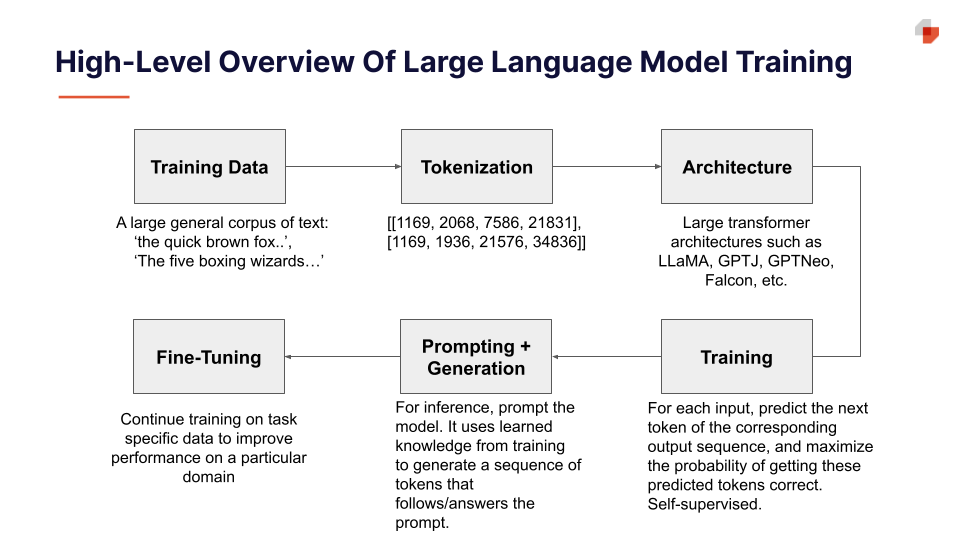

The training process for LLMs involves predicting the next token (typically a word or subword unit) in a sequence given the previous context. Through this self-supervised pretraining on vast corpora, the model learns the probabilities and patterns of language, grammar, facts about the world, reasoning abilities and even biases inherent in the data.
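To make the training objective concrete: at each position the model outputs a score (a logit) for every token in its vocabulary, a softmax turns those scores into probabilities, and the loss is the negative log-probability of the token that actually came next. The sketch below assumes a made-up four-word vocabulary and hand-picked logits in place of a real model's output; training adjusts the model's parameters to push this loss down across billions of positions.

```python
import math

def softmax(logits):
    """Convert raw scores into a probability distribution over the vocabulary."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits, target_id):
    """Cross-entropy loss: negative log-probability of the true next token."""
    return -math.log(softmax(logits)[target_id])

# Hypothetical vocabulary and scores for the context "the cat sat on the"
vocab = ["mat", "dog", "moon", "sat"]
logits = [3.0, 1.0, 0.5, -1.0]

for token, p in zip(vocab, softmax(logits)):
    print(f"{token}: {p:.3f}")

# The loss is low when the model assigns high probability to the actual next token
print("loss if next token is 'mat': ", round(next_token_loss(logits, 0), 3))
print("loss if next token is 'moon':", round(next_token_loss(logits, 2), 3))
```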

At a high level, there are 6 steps to training an LLM:

- Training Data: The language model is trained on a vast amount of text from books, articles, websites, and other sources. The more diverse and extensive the training data, the better the model's grasp of language. Because the model is so large, it needs an enormous amount of text before it can learn the patterns and structures of language.

- Tokenization: The text is tokenized, meaning it is divided into smaller units such as words, subwords, or characters. Each token is assigned a unique numerical representation.

- Architecture: The language model typically consists of a stack of transformer layers. Transformers use attention to capture the relationships between different tokens in the text, even tokens that are far apart.

- Training Process: The language model is trained to predict the next token in a sequence of text. It learns to understand the statistical patterns in the training data, such as the likelihood of certain words appearing after others. This process involves optimizing the model's parameters using techniques like backpropagation and gradient descent.

- Context and Generation: During inference, when the model is given a prompt or question, it uses its learned knowledge to generate a sequence of tokens that follows the given context. The generated tokens form coherent and contextually relevant text based on the model's understanding of language.

- Fine-tuning: Depending on the specific use case, the language model may undergo additional fine-tuning on domain-specific data to improve its performance in a particular area.
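The tokenization, training, and generation steps above can be illustrated end to end with a deliberately tiny stand-in. This is a sketch under heavy simplifying assumptions: it uses a word-level tokenizer instead of the subword tokenizers real LLMs use, and a bigram counter (which only looks at the previous token) instead of a transformer trained with gradient descent. The names `build_vocab`, `train_bigram`, and `generate` are illustrative, not from any library.

```python
from collections import Counter, defaultdict

def build_vocab(corpus):
    """Tokenization: map each distinct word to a unique integer ID."""
    words = sorted({w for line in corpus for w in line.lower().split()})
    return {w: i for i, w in enumerate(words)}

def encode(text, vocab):
    return [vocab[w] for w in text.lower().split()]

def train_bigram(corpus, vocab):
    """'Training': count how often each token follows each other token.
    A transformer instead learns next-token statistics with gradient descent
    and conditions on far more than one previous token."""
    counts = defaultdict(Counter)
    for line in corpus:
        ids = encode(line, vocab)
        for prev, nxt in zip(ids, ids[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(prompt, counts, vocab, max_new_tokens=5):
    """Generation: repeatedly append the most likely next token (greedy decoding)."""
    id_to_word = {i: w for w, i in vocab.items()}
    ids = encode(prompt, vocab)
    for _ in range(max_new_tokens):
        followers = counts.get(ids[-1])
        if not followers:  # this token was never followed by anything: stop
            break
        ids.append(followers.most_common(1)[0][0])
    return " ".join(id_to_word[i] for i in ids)

corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "the cat chased the dog",
]
vocab = build_vocab(corpus)
model = train_bigram(corpus, vocab)
print(encode("the cat", vocab))
print(generate("the cat", model, vocab))
```

Greedy decoding always picks the single most likely token, which is why this toy quickly loops; real systems often sample from the predicted distribution instead.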

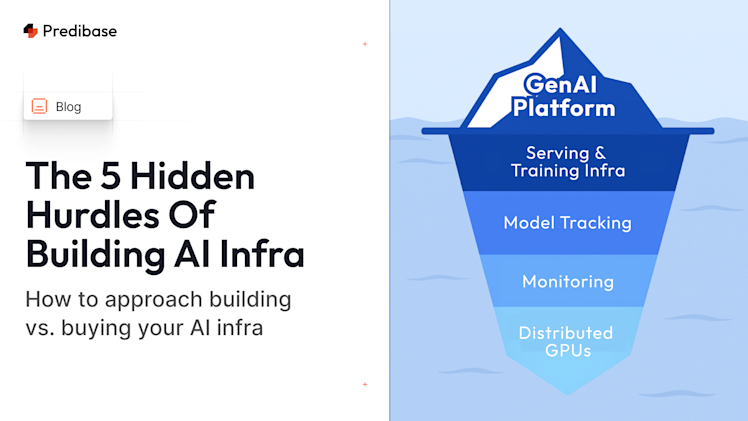

4. Should You Train an LLM From Scratch?

For most practitioners, training an LLM from scratch is neither feasible nor necessary. It requires immense computational resources, often costing millions of dollars, along with access to web-scale training data that few organizations have. Fortunately, many capable pre-trained LLMs are openly available, including Llama2, Falcon, and Bloom. These models already encode substantial linguistic knowledge that you can readily build on.
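To see why the cost is so high, a widely used rule of thumb from the scaling-law literature estimates training compute as roughly 6 × N × D floating-point operations, where N is the number of parameters and D is the number of training tokens. The arithmetic below is a back-of-the-envelope sketch: the 70B-parameter model size and 2-trillion-token dataset are in the range of models like Llama2, but the per-GPU throughput and hourly price are assumptions chosen for illustration, not measured figures.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6 * n_params * n_tokens

def gpu_hours(total_flops: float, flops_per_gpu_per_s: float) -> float:
    """Convert total FLOPs into GPU-hours at a given sustained throughput."""
    return total_flops / flops_per_gpu_per_s / 3600

# Assumptions for illustration only
N_PARAMS = 70e9           # a 70B-parameter model
N_TOKENS = 2e12           # 2 trillion training tokens
THROUGHPUT = 150e12       # assumed sustained 150 TFLOP/s per GPU
PRICE_PER_GPU_HOUR = 2.0  # hypothetical cloud price in USD

flops = training_flops(N_PARAMS, N_TOKENS)
hours = gpu_hours(flops, THROUGHPUT)
print(f"compute:        {flops:.2e} FLOPs")
print(f"GPU-hours:      {hours:,.0f}")
print(f"estimated cost: ${hours * PRICE_PER_GPU_HOUR:,.0f}")
```

Under these assumptions the estimate lands around 1.5 million GPU-hours, before counting failed runs, experimentation, or data preparation.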

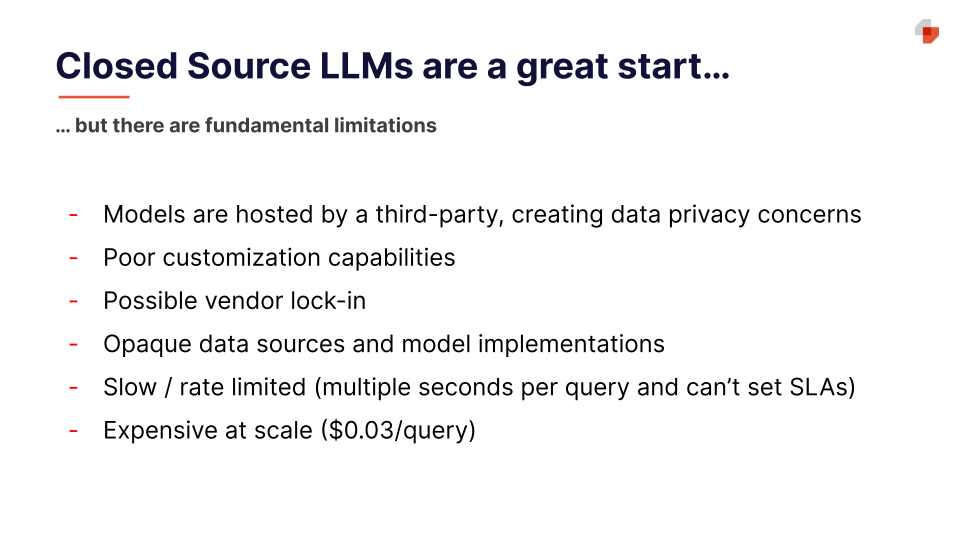

5. How Do Open Source LLMs Compare to Proprietary Offerings Like OpenAI's?

Impressive proprietary LLM services like OpenAI's GPT-4 rightfully capture headlines. However, many capable open source LLMs have also been released. For example, Llama2 offers performance competitive with some commercial offerings.

The key advantages of open source LLMs are that they are free to use (subject to their licenses) and that their architectures and weights are open. Because you can host them yourself, they also keep your data private and avoid third-party vendor lock-in. With the right tuning and prompting, open source LLMs can unlock immense value.

6. What Is Prompt Engineering?

Prompting is how we provide context and define tasks for LLMs. Prompt engineering is the process of iteratively refining prompts to improve the specificity, accuracy, and coherence of LLM-generated text. It involves extensive experimentation with prompt structure, content, length, formatting, and more. Good prompting is key to using LLMs productively.
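Because prompt engineering is iterative, it helps to score each prompt variant against a small set of inputs with known answers instead of judging outputs by eye. A minimal sketch, assuming a classification-style task: `fake_llm` is a hypothetical stand-in for a real model call, hard-coded to follow the output format only when the prompt asks for it, so the example runs offline. In practice you would swap in your actual model client.

```python
from typing import Callable, List, Tuple

def evaluate_prompt(template: str, examples: List[Tuple[str, str]],
                    llm: Callable[[str], str]) -> float:
    """Fill the template with each input, query the model, and return
    the fraction of responses that exactly match the expected answer."""
    correct = 0
    for text, expected in examples:
        response = llm(template.format(text=text))
        correct += int(response.strip().lower() == expected.lower())
    return correct / len(examples)

# Two candidate prompts that differ only in how precisely the task is specified
templates = {
    "vague": "What do you think of this review? {text}",
    "specific": ("Classify the sentiment of the review as exactly one word, "
                 "'positive' or 'negative'.\nReview: {text}\nSentiment:"),
}

def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call (illustration only)."""
    if "exactly one word" in prompt:
        return "positive" if "great" in prompt else "negative"
    return "I think the reviewer has some opinions about this product."

examples = [("The battery life is great.", "positive"),
            ("It broke after two days.", "negative")]

for name, template in templates.items():
    print(name, evaluate_prompt(template, examples, fake_llm))
```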

7. Can You Use LLMs Without Any Training Data?

Yes. Zero-shot learning lets you apply an LLM to new tasks and classes without any labeled examples, relying purely on the knowledge learned during pretraining. For instance, an LLM trained only on news could sort articles into genres it has never seen labeled, such as technology, by using its contextual understanding to generalize.
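A zero-shot prompt describes the task and the allowed outputs but contains no labeled examples. A minimal sketch, assuming a simple article-categorization task; the label set and helper names are hypothetical. The `parse_label` helper matters in practice because a model may answer with extra punctuation or wording.

```python
from typing import List, Optional

def build_zero_shot_prompt(text: str, labels: List[str]) -> str:
    """State the task and the allowed labels; no labeled examples are included."""
    options = ", ".join(labels)
    return (f"Classify the article into exactly one of these categories: {options}.\n"
            "Answer with the category name only.\n\n"
            f"Article: {text}\n"
            "Category:")

def parse_label(response: str, labels: List[str]) -> Optional[str]:
    """Map the model's free-form answer back onto one of the allowed labels."""
    cleaned = response.strip().lower()
    for label in labels:
        if cleaned.startswith(label.lower()):
            return label
    return None  # the model answered outside the label set

labels = ["politics", "sports", "technology"]
print(build_zero_shot_prompt("A startup released a new open-source database engine.", labels))
print(parse_label(" Technology.", labels))
print(parse_label("Hard to say.", labels))
```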

8. How Can You Improve LLMs With Limited Data?

When you have a small labeled dataset, few-shot learning is often highly effective. It involves providing just a few examples (e.g., 5-10) in the prompt so the LLM can recognize new classes and tasks without full fine-tuning. This augments the model's pretrained knowledge with limited data.
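A few-shot prompt differs from a zero-shot prompt only in that it includes labeled demonstrations before the new input. A minimal sketch, assuming a hypothetical support-ticket labeling task with three made-up examples; the `Text:`/`Label:` layout is one common convention, not a requirement.

```python
from typing import List, Tuple

def build_few_shot_prompt(instruction: str,
                          examples: List[Tuple[str, str]],
                          query: str) -> str:
    """Prepend a few labeled demonstrations so the model can infer the task
    and the output format from the pattern, without any weight updates."""
    blocks = [instruction]
    for text, label in examples:
        blocks.append(f"Text: {text}\nLabel: {label}")
    blocks.append(f"Text: {query}\nLabel:")  # the model completes this final label
    return "\n\n".join(blocks)

examples = [
    ("My card was charged twice this month.", "billing"),
    ("The app crashes when I open settings.", "bug"),
    ("Could you add a dark mode?", "feature_request"),
]
prompt = build_few_shot_prompt(
    "Label each support ticket as billing, bug, or feature_request.",
    examples,
    "I was billed after cancelling my plan.",
)
print(prompt)
```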

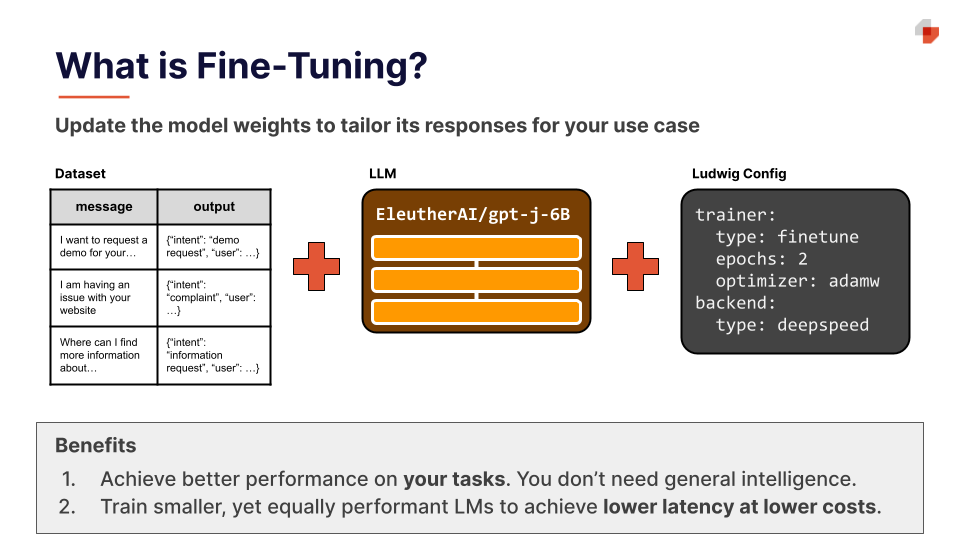

9. What Is Model Fine-Tuning?

Fine-tuning for large language models (LLMs) involves taking a pre-trained language model and further training it on a specific dataset to tailor its behavior for a particular task or domain.

Large language models, such as OpenAI's GPT series or Google's BERT, are initially trained on vast amounts of text data, which gives them broad capabilities such as generating coherent text, answering questions, and performing various NLP tasks. However, these generic pre-trained models might not always be optimal for specialized tasks or domains. Instead of training a model from scratch (which is resource-intensive), we take the pre-trained LLM and train it further on a smaller, task-specific dataset. This helps the model adapt its vast general knowledge to the nuances and requirements of the task at hand.

For instance, an LLM trained on a generic corpus can be fine-tuned on medical literature to answer medical queries or on legal documents to assist with legal tasks. The initial broad training provides the foundational language capabilities, while the fine-tuning sharpens and refines the model's responses for specific applications. This approach harnesses the power of LLMs in situations where training a robust model from scratch would be impractical due to data or resource constraints.
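Mechanically, fine-tuning is ordinary training that starts from pretrained weights instead of random ones. The toy sketch below assumes a two-parameter linear model as a stand-in for an LLM: it is first "pretrained" with gradient descent on plenty of generic data, then trained a little further on a small domain dataset whose relationship differs slightly, which lowers the error on that domain.

```python
def gradient_descent(w, b, data, lr, epochs):
    """Full-batch gradient descent on mean squared error for y ≈ w*x + b."""
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x / n
            grad_b += 2 * err / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(w, b, data):
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

# "Pretraining": lots of generic data following y = 2x + 1, starting from zeros
generic = [(k / 10, 2 * (k / 10) + 1) for k in range(-50, 51)]
w, b = gradient_descent(0.0, 0.0, generic, lr=0.05, epochs=500)

# "Fine-tuning": a small domain dataset with a slightly different relationship (y = 2x + 1.5)
domain = [(0.0, 1.5), (1.0, 3.5), (2.0, 5.5)]
print("domain error before fine-tuning:", round(mse(w, b, domain), 4))
w_ft, b_ft = gradient_descent(w, b, domain, lr=0.05, epochs=200)
print("domain error after fine-tuning: ", round(mse(w_ft, b_ft, domain), 4))
```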

Full fine-tuning, which updates all of the model's parameters, can be computationally expensive and time-consuming, especially for large models.

By contrast, parameter-efficient fine-tuning (PEFT) updates only a subset of the model's parameters. It either freezes certain layers or parts of the model to avoid catastrophic forgetting, or inserts additional trainable layers while keeping the original model's weights frozen. This can make fine-tuning faster and less resource-intensive, but it might sacrifice some accuracy compared to full fine-tuning. Popular PEFT methods include LoRA, AdaLoRA, and Adaption Prompt (LLaMA Adapter). PEFT is particularly suitable when the target task is similar to the original pre-training task.
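LoRA, one of the PEFT methods named above, freezes a pretrained weight matrix W and learns a low-rank update instead, computing h = W x + (α / r) · B A x, where B (d_out × r) and A (r × d_in) are the only trainable matrices and the rank r is small. The sketch below, in plain Python with a hypothetical 4096 × 4096 layer and r = 8, counts trainable parameters and checks that, because LoRA initializes B to zeros, the adapted layer initially behaves exactly like the frozen one. It is illustrative only; real implementations such as the Hugging Face PEFT library operate on framework tensors.

```python
import random

def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def lora_param_counts(d_out, d_in, r):
    """Trainable parameters: full fine-tuning vs. a rank-r LoRA update."""
    full = d_out * d_in
    lora = r * (d_out + d_in)  # B is d_out x r, A is r x d_in
    return full, lora

def lora_forward(W, A, B, x, alpha, r):
    """h = W x + (alpha / r) * B A x; W stays frozen, only A and B are trained."""
    base = matmul(W, x)
    delta = matmul(B, matmul(A, x))
    scale = alpha / r
    return [[h + scale * d for h, d in zip(hr, dr)] for hr, dr in zip(base, delta)]

full, lora = lora_param_counts(4096, 4096, r=8)
print(f"full: {full:,}  LoRA: {lora:,}  ({100 * lora / full:.2f}% of full)")

# Toy check: A is random, B starts at zero, so the initial update B A is zero
random.seed(0)
d, r = 4, 2
W = [[random.gauss(0, 1) for _ in range(d)] for _ in range(d)]
A = [[random.gauss(0, 0.01) for _ in range(d)] for _ in range(r)]
B = [[0.0] * r for _ in range(d)]
x = [[1.0], [2.0], [3.0], [4.0]]  # column vector
print(lora_forward(W, A, B, x, alpha=16, r=r) == matmul(W, x))
```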


10. When Should You Use Prompting vs. Fine-Tuning?

The answer often depends on how well prompting alone performs, the computational and financial resources available to you, and the volume and quality of your data. Prompt engineering alone can solve many tasks impressively and is fast and lightweight. For complex reasoning over diverse data, however, fine-tuning adapts LLMs more deeply.

Good reasons to fine-tune include adapting the model to domain-specific terms, acronyms, and language; teaching it to respond in a certain style; teaching it not to respond when there is not enough information; and reducing hallucinations.

Step 1: Prompt First

Begin with prompt engineering. It is often the quickest and most efficient way to tackle a task with large language models (LLMs): it provides a fast feedback loop, is computationally inexpensive, and requires relatively few resources.

Step 2: Collect Example Prompts and Responses

You can collect these examples from several sources, including (1) hand-labeled or curated examples, (2) ground-truth business data (e.g., call transcripts), or (3) responses from the LLM in production. If the responses you get from the LLM in production are not what you want or expect, put a process in place to correct them. This process is crucial for refining your prompt and understanding its limitations. A good way to store this data is to record 4 columns for each example where the response falls short (more on how to use this later):

- Prompt template/prompt

- Input sent to the LLM

- Actual response from the LLM

- Expected response
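One lightweight way to keep these four columns is an append-only JSON Lines file with one record per example. A minimal sketch; the field names, file name, and sample record are hypothetical choices, and a spreadsheet or database table would work just as well.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass
from typing import List

@dataclass
class PromptFailure:
    """One example where the LLM's response fell short (the four columns above)."""
    prompt_template: str
    llm_input: str
    actual_response: str
    expected_response: str

def append_failure(path: str, record: PromptFailure) -> None:
    """Append a record as one JSON object per line (JSON Lines)."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")

def load_failures(path: str) -> List[PromptFailure]:
    """Read every stored record back, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [PromptFailure(**json.loads(line)) for line in f if line.strip()]

# Usage: log one correction to a temporary file and read it back
path = os.path.join(tempfile.mkdtemp(), "prompt_failures.jsonl")
append_failure(path, PromptFailure(
    prompt_template="Summarize this call transcript in one sentence: {transcript}",
    llm_input="Customer asked to cancel their subscription and requested a refund.",
    actual_response="The customer called.",
    expected_response="The customer requested a subscription cancellation and a refund.",
))
records = load_failures(path)
print(len(records), records[0].expected_response)
```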

Step 3: Improve Your Prompt

Incorporate techniques like Retrieval-Augmented Generation (RAG) to improve the quality of responses. This step involves leveraging external knowledge, informed by the data you collected, to improve the LLM's performance. If you're doing RAG over your own data, the model's performance will also give you insight into your data's quality and whether you have enough of it.
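At its core, RAG retrieves the documents most relevant to the user's question and places them in the prompt as context. A minimal sketch, assuming word-count (bag-of-words) cosine similarity as a stand-in for the embedding model and vector store a production system would typically use; the documents and helper names are made up for illustration.

```python
import math
import re
from collections import Counter

def bow(text):
    """Bag-of-words vector: lowercase word counts (stand-in for a real embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(query, docs, k=2):
    """Return the k documents most similar to the query."""
    q = bow(query)
    return sorted(docs, key=lambda d: cosine(q, bow(d)), reverse=True)[:k]

def build_rag_prompt(query, docs, k=2):
    """Insert the retrieved documents into the prompt as grounding context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return ("Answer using only the context below. "
            "If the answer is not in the context, say so.\n\n"
            f"Context:\n{context}\n\nQuestion: {query}\nAnswer:")

docs = [
    "Refunds are issued within 14 days of receiving the returned item.",
    "Our support line is open Monday to Friday, 9am to 5pm.",
    "Premium plans include priority support and a dedicated account manager.",
]
print(build_rag_prompt("How long do refunds take?", docs, k=1))
```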

Step 4: Consider Fine-Tuning

When you've accumulated around 1,000 high-quality samples of prompt failures and expected responses, it's a good time to consider fine-tuning. Fine-tuning teaches the model to handle scenarios where prompting alone isn't sufficient, augmenting the model's knowledge and gearing it toward your task. You can use parameter-efficient methods like LoRA to fine-tune quickly and cheaply. With the format described above, you can perform either supervised fine-tuning (the easiest option) or reinforcement learning from human feedback (RLHF).
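The four columns collected in Step 2 map naturally onto fine-tuning data. A minimal sketch, assuming records are stored as dictionaries with hypothetical keys and that each template uses a hypothetical `{input}` placeholder: for supervised fine-tuning, each record becomes a prompt/completion pair; for preference-based training such as the reward-modeling stage of RLHF, the expected response can serve as the preferred ("chosen") answer and the actual response as the "rejected" one. The exact field names depend on the training tool you use, and the sample record is made up.

```python
from typing import Dict, List

MIN_EXAMPLES = 1000  # the rough threshold suggested above, not a hard rule

def render(record: Dict[str, str]) -> str:
    """Fill the template with the input (assumes a hypothetical `{input}` placeholder)."""
    return record["prompt_template"].format(input=record["llm_input"])

def to_sft_examples(records: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Supervised fine-tuning pairs: learn to produce the expected response."""
    return [{"prompt": render(r), "completion": r["expected_response"]} for r in records]

def to_preference_pairs(records: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Preference data: the expected response is preferred over the actual one."""
    return [{"prompt": render(r), "chosen": r["expected_response"],
             "rejected": r["actual_response"]} for r in records]

def ready_to_fine_tune(records: List[Dict[str, str]], min_examples: int = MIN_EXAMPLES) -> bool:
    return len(records) >= min_examples

records = [{
    "prompt_template": "Answer the customer's question: {input}",
    "llm_input": "Can I get a refund after 30 days?",
    "actual_response": "Yes, always.",
    "expected_response": "Refunds are only available within 14 days of delivery.",
}]
print(to_sft_examples(records)[0])
print(to_preference_pairs(records)[0])
print("ready to fine-tune:", ready_to_fine_tune(records))
```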

Step 5: Iterate and Repeat

Continue refining your approach iteratively. Repeat steps 3 and 4 as necessary to continually enhance your model's performance. This iterative process allows you to adapt to changing data and evolving requirements effectively.

By following these five steps, you can effectively navigate the decision between prompting and fine-tuning, ensuring that you optimize your use of LLMs for various tasks. As a rule of thumb, iterate extensively on prompting first, then fine-tune only if necessary, balancing benefits with resources required.

Get started today

LLMs are still in their early stages. In the coming months, expect continued advances in multimodal learning, reasoning, interpretability, and grounding, which promise to unlock even more AI applications.

The best way to learn about LLMs is to use them to build your own project. You can try Predibase for free to get started with fine-tuning and prompt engineering using open-source models.