
Since its initial open-source release by Uber in 2019, Ludwig has made it possible for any developer to use state-of-the-art deep learning in their applications through its low-code, declarative interface. With the rise of large language models (LLMs) like OpenAI’s ChatGPT and Meta’s LLaMA-2, every organization is looking to build applications that leverage custom LLMs tuned using private data sources. The need to democratize this capability through tools like Ludwig is no longer just valuable; it has become mission critical.

With Ludwig v0.8, we’re releasing the first open-source, low-code framework optimized for efficiently building custom LLMs with your private data. We have introduced a number of new features and capabilities in Ludwig that have made this possible, including:

- Declarative fine-tuning of large language models with the new “llm” model type (fully compatible with HuggingFace Transformers), allowing users to build text-based Generative AI systems like chatbots and code assistants without worrying about the infrastructure needed to make everything work with large models and large datasets.

- Integration with Deepspeed in one config parameter for data + model parallel training, enabling Ludwig to train models too large for a single GPU, or even a single node.

- Parameter efficient fine-tuning (PEFT) techniques like low-rank adaptation (LoRA), which dramatically reduce the number of trainable parameters and speed up fine-tuning considerably.

- Quantized training with 4-bit and 8-bit compression (QLoRA), which enables fine-tuning LLMs on a single GPU.

- Prompt templating to combine multiple columns from a structured dataset into a single text input to the LLM.

- Zero-shot and In-Context Learning (including retrieval augmented ICL) for applying LLMs to solve previously unseen supervised ML tasks with little to no labeled data and no additional training.

Our goal with Ludwig v0.8 was to bring all the latest techniques for training LLMs together in one package, where everything just works. No struggling to figure out how Deepspeed works with PEFT, or how PEFT works with quantization, or how all of this runs on Ray. We solved the infra challenges so you can focus on higher-value activities like building models.

Take a look at the Ludwig v0.8 release notes for complete details, and be sure to download or upgrade Ludwig today to take advantage of these exciting new features:

```bash
pip install -U "ludwig[llm]"
```

Efficiently Fine-Tune Large Language Models for Generative AI

In Ludwig v0.7, we introduced a number of new features and optimizations for efficiently training large pretrained models including LLMs, but these models were restricted to Predictive AI tasks like classification and regression.

Now, in Ludwig v0.8, we’re adding support for Generative AI tasks like chatbots, code generation, text summarization, and much more for the first time. All of this is made possible by the new “llm” model type.

Here’s an example Ludwig configuration for instruction tuning the base LLaMA-2-7b model to build a custom chatbot:

```yaml
model_type: llm
base_model: meta-llama/Llama-2-7b-hf

quantization:
  bits: 4

adapter:
  type: lora

prompt:
  template: |
    ### Instruction:
    {instruction}

    ### Input:
    {input}

    ### Response:

input_features:
  - name: instruction
    type: text

output_features:
  - name: output
    type: text

trainer:
  type: finetune
  learning_rate: 0.0003
  batch_size: 1
  gradient_accumulation_steps: 8
  epochs: 3

backend:
  type: local
```

Example of a Ludwig configuration for instruction tuning the base LLaMA-2-7b model.

The full example is available in this free notebook.
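The `trainer` section above pairs `batch_size: 1` with `gradient_accumulation_steps: 8`, so gradients from 8 single-example micro-batches are combined before each optimizer step, for an effective batch size of 8. The minimal pure-Python sketch below assumes a toy one-parameter linear model with mean-squared-error loss (it does not use Ludwig's trainer) and checks that averaging equal-sized micro-batch gradients reproduces the full-batch gradient.

```python
"""Sketch: gradient accumulation on a toy model y_hat = w * x with MSE loss.

Assumption: this toy model stands in for the LLM; Ludwig's trainer is not used.
"""


def mse_grad(w: float, batch: list[tuple[float, float]]) -> float:
    """Gradient of mean((w * x - y) ** 2) with respect to w over one batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def accumulated_grad(w: float, data: list[tuple[float, float]],
                     micro_batch_size: int, accumulation_steps: int) -> float:
    """Combine the gradients of `accumulation_steps` consecutive micro-batches."""
    total = 0.0
    for step in range(accumulation_steps):
        start = step * micro_batch_size
        micro_batch = data[start:start + micro_batch_size]
        # Dividing by the number of steps makes the sum equal the mean
        # over the whole effective batch (for equal-sized micro-batches).
        total += mse_grad(w, micro_batch) / accumulation_steps
    return total


data = [(float(i), 3.0 * i + 1.0) for i in range(8)]  # 8 toy examples
w = 0.5
full = mse_grad(w, data)  # one batch of 8
accum = accumulated_grad(w, data, micro_batch_size=1, accumulation_steps=8)
print(f"effective batch size: {1 * 8}")
print(f"full-batch grad: {full:.6f}, accumulated grad: {accum:.6f}")
```

The equivalence depends on equal-sized micro-batches; only one micro-batch has to fit in GPU memory at a time, which is what makes `batch_size: 1` workable for a 7B model.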

Fine-Tuning for Both Generative and Predictive Tasks

Another challenge when customizing large language models is knowing how to train the model for the specific task at hand.

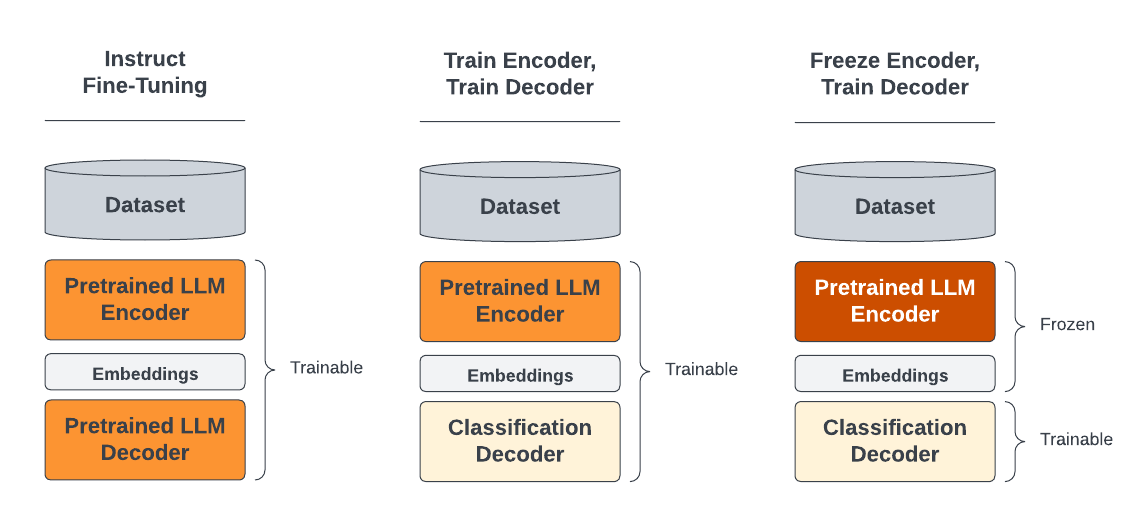

For generative tasks like chatbots and code generation, fine-tuning both the language model’s encoder (which produces embeddings) and its head / decoder (which produces next-token probabilities) is the straightforward and preferred approach. Ludwig supports this directly through the example config shown above.

For predictive tasks, the temptation is often to take an existing text generation model and fine-tune it to generate text that matches a specific category in a multi-class classification problem, or a specific number for a regression problem. However, the resulting model comes with two drawbacks:

- Text generation models are slow to make predictions. Auto-regressive models in particular (like LLaMA and most popular LLMs) must generate text one token at a time, which is many times slower than producing a single output.

- Text generation models can hallucinate. This can be mitigated with conditional decoding strategies, but if you only want a model that outputs one of N distinct values, a model that can output anything is overkill.

For such predictive (classification, regression) tasks, the solution is to remove the language model head / decoder at the end of the LLM, and replace it with a task-specific head / decoder (usually a simple multi-layer perceptron). The task-specific head at the end can then be quickly trained on the task-specific dataset, while the pretrained LLM encoder is either minimally adjusted (with parameter efficient fine-tuning) or held constant. When holding the LLM weights constant (also known as “linear probing”), the training process can further benefit from Ludwig optimizations like cached encoder embeddings for up to a 50x speedup.

Different ways to fine-tune LLMs, from most heavyweight to least.

In most frameworks, it would be a lot of work to take an LLM that generates text and adapt it to do classification or regression, but in Ludwig it’s as simple as changing a few lines in the YAML config:

```yaml
input_features:
  - name: review
    type: text
    encoder:
      type: auto_transformer
      pretrained_model_name_or_path: meta-llama/Llama-2-7b-hf
      trainable: true
      adapter: lora

output_features:
  - name: sentiment
    type: category
```

Training Llama-2-7b to do text classification with a trainable encoder and LoRA adapter.

Full example of this can be found in the Ludwig repository.

```yaml
input_features:
  - name: review
    type: text
    encoder:
      type: auto_transformer
      pretrained_model_name_or_path: meta-llama/Llama-2-7b-hf
      trainable: false
    preprocessing:
      cache_encoder_embeddings: true

output_features:
  - name: sentiment
    type: category
```

Training Llama-2-7b to do text classification with a frozen encoder and cached encoder embeddings.
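The frozen-encoder config above can cache embeddings because a frozen encoder always maps the same text to the same vector, so each example only needs to be encoded once, no matter how many epochs the head trains for. A minimal sketch of that idea, assuming a hypothetical `frozen_encoder` that stands in for the LLM and a single logistic-regression unit in place of Ludwig's task-specific decoder:

```python
"""Sketch: linear probing on cached embeddings from a frozen encoder.

Assumptions: `frozen_encoder` is a hypothetical stand-in for a frozen LLM
encoder, and the head is one logistic-regression unit, not Ludwig's decoder.
"""
import math

POSITIVE = {"great", "love", "excellent"}
NEGATIVE = {"bad", "boring", "awful"}
encoder_calls = 0  # counts how often the (expensive) encoder runs


def frozen_encoder(text: str) -> list[float]:
    """Hypothetical frozen encoder: maps text to a fixed 3-d embedding."""
    global encoder_calls
    encoder_calls += 1
    words = text.lower().split()
    return [
        float(sum(w in POSITIVE for w in words)),
        float(sum(w in NEGATIVE for w in words)),
        1.0,  # constant bias feature
    ]


def train_head(embeddings, labels, epochs=200, lr=0.5):
    """Train a logistic-regression head with SGD on precomputed embeddings."""
    weights = [0.0] * len(embeddings[0])
    for _ in range(epochs):
        for emb, label in zip(embeddings, labels):
            z = sum(w * e for w, e in zip(weights, emb))
            pred = 1.0 / (1.0 + math.exp(-z))
            # Binary cross-entropy gradient w.r.t. each weight is (pred - label) * e.
            weights = [w - lr * (pred - label) * e for w, e in zip(weights, emb)]
    return weights


def predict(weights, text):
    z = sum(w * e for w, e in zip(weights, frozen_encoder(text)))
    return 1 if z > 0 else 0


reviews = ["great movie love it", "boring and awful", "excellent acting", "bad plot"]
labels = [1, 0, 1, 0]

# Encode every review exactly once and reuse the cache for every epoch.
cache = [frozen_encoder(r) for r in reviews]
weights = train_head(cache, labels)
print("encoder calls during training:", encoder_calls)  # 4, not 4 * epochs
print(predict(weights, "love this, excellent"))
```

With a real LLM encoder, the saving grows with both model size and the number of epochs, since every epoch after the first skips the encoder forward pass entirely.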

Large Model Training with Deepspeed

Ludwig has always been optimized for large-scale training, having native support for data-parallel distributed training systems including DDP, Horovod, and Ray.

Now in Ludwig v0.8, we’re adding first-class support for large model training through Microsoft’s Deepspeed framework, making it possible to train models that are too big to fit into the memory of any single GPU. Unlike purely data-parallel frameworks like DDP and Horovod, Deepspeed shards parameters across GPUs and gathers them on demand during the forward and backward passes, allowing it to scale horizontally across GPUs to very large models.

Like Ludwig, Deepspeed also comes with its own declarative configuration parameters, which are nearly fully supported through the backend configuration section of the Ludwig config:

```yaml
backend:
  type: ray
  trainer:
    use_gpu: true
    strategy:
      type: deepspeed
      zero_optimization:
        stage: 3
        offload_optimizer:
          device: cpu
          pin_memory: true
      bf16:
        enabled: true
```

Run Deepspeed on Ray using ZeRO Stage 3 with optimizer offload to CPU and bfloat16.

Ludwig offers the ability to run Deepspeed either natively on the command line or orchestrated by Ray.

```bash
deepspeed --no_python --no_local_rank --num_gpus 4 \
  ludwig train --config imdb_deepspeed_zero3.yaml --dataset ludwig://imdb
```

Launching Ludwig through the Deepspeed command line.

A detailed example can be found here.
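To make the effect of sharding concrete, the sketch below estimates per-GPU memory for model states under each ZeRO stage. It assumes the mixed-precision Adam accounting from the ZeRO paper (2 bytes/param fp16 weights, 2 bytes/param fp16 gradients, 12 bytes/param fp32 optimizer state) and ignores activations, temporary buffers, and the CPU offload used in the config above.

```python
"""Sketch: per-GPU model-state memory under DeepSpeed ZeRO stages 0-3.

Assumptions: mixed-precision Adam accounting (2 + 2 + 12 bytes per parameter);
activations, temporary buffers, and CPU offload are ignored.
"""

WEIGHT_BYTES, GRAD_BYTES, OPTIM_BYTES = 2, 2, 12


def per_gpu_gb(num_params: float, num_gpus: int, stage: int) -> float:
    """Model-state memory per GPU in GB (stage 0 = plain data parallel)."""
    p = num_params
    if stage == 0:  # every GPU holds a full replica of everything
        total = (WEIGHT_BYTES + GRAD_BYTES + OPTIM_BYTES) * p
    elif stage == 1:  # optimizer state is sharded
        total = (WEIGHT_BYTES + GRAD_BYTES) * p + OPTIM_BYTES * p / num_gpus
    elif stage == 2:  # optimizer state and gradients are sharded
        total = WEIGHT_BYTES * p + (GRAD_BYTES + OPTIM_BYTES) * p / num_gpus
    elif stage == 3:  # weights are sharded too and gathered on demand
        total = (WEIGHT_BYTES + GRAD_BYTES + OPTIM_BYTES) * p / num_gpus
    else:
        raise ValueError(f"unknown ZeRO stage: {stage}")
    return total / 1e9


for stage in range(4):
    print(f"7B params, 4 GPUs, stage {stage}: {per_gpu_gb(7e9, 4, stage):.1f} GB/GPU")
```

Under this accounting, only stage 3 divides every term by the number of GPUs, which is why it is the stage that lets a model larger than any single GPU's memory be trained at all.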

Reducing Memory Overhead with Ray + Deepspeed

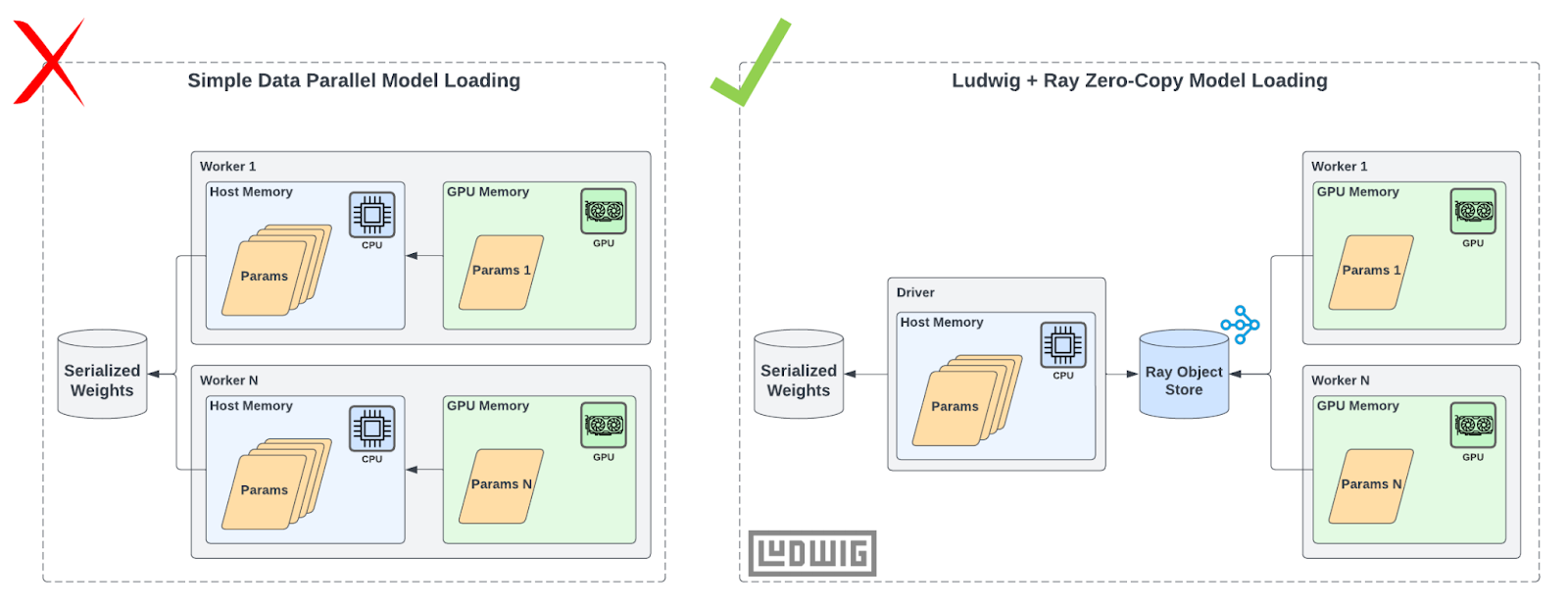

One of the unique benefits of running Deepspeed with Ludwig on Ray as opposed to running Deepspeed standalone is the ability to reduce host memory pressure with zero-copy reads.

One of the first problems you’re likely to encounter when fine-tuning an LLM is a host out-of-memory error. This is because before you even get to multi-GPU training with model parallel frameworks like Deepspeed, you need to load the pretrained checkpoint into host memory. To make matters worse for machines with multiple GPUs, because Deepspeed is also a data-parallel framework, you actually need to load the checkpoint into host memory once for each GPU in your job!

In Ludwig v0.8, we solved the duplicate checkpoint loading issue by loading the model weights into memory once before training begins and inserting them as numpy arrays into the Ray object store, from which we can then zero-copy read the weights directly from shared memory into each GPU worker process. For a training job with one node and 8 GPUs, this is effectively an 8x reduction in host memory pressure from the model weights.

Comparing simple data parallel model loading with Ludwig + Ray zero-copy model loading.

You can learn more about getting started with Ludwig on Ray here.
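The 8x figure above follows from simple arithmetic. A rough sketch, assuming 16-bit weights (2 bytes per parameter), one worker process per GPU, and ignoring optimizer state, activations, and any object store overhead:

```python
"""Sketch: host RAM needed just to hold a pretrained checkpoint on one node.

Assumptions: 2 bytes per parameter (fp16/bf16), one worker per GPU;
optimizer state, activations, and object store overhead are ignored.
"""


def host_memory_gb(num_params: float, num_gpu_workers: int,
                   shared_object_store: bool, bytes_per_param: int = 2) -> float:
    """Host memory (GB) occupied by model weights."""
    one_copy = num_params * bytes_per_param / 1e9
    if shared_object_store:
        # Weights live in shared memory once; workers read them zero-copy.
        return one_copy
    # Each data-parallel worker loads its own private copy of the checkpoint.
    return one_copy * num_gpu_workers


per_worker = host_memory_gb(7e9, 8, shared_object_store=False)
shared = host_memory_gb(7e9, 8, shared_object_store=True)
print(f"per-worker loading: {per_worker:.0f} GB, shared object store: {shared:.0f} GB")
```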

Parameter Efficient Fine-Tuning (PEFT)

One of the biggest barriers to cost-effective fine-tuning for LLMs is the need to update billions of parameters each training step. Parameter efficient fine-tuning (PEFT) describes a collection of techniques that reduce the number of trainable parameters during fine-tuning to speed up training, and decrease the memory and disk space required to train large language models.

PEFT is a popular library from HuggingFace that implements a number of parameter efficient fine-tuning strategies. Ludwig v0.8 provides native integration with PEFT, allowing you to use any of these techniques to fine-tune LLMs more efficiently with a single parameter change in the configuration.

One of the most commonly used PEFT adapters is low-rank adaptation (LoRA), which can now be enabled for any large language model in Ludwig with the “adapter” parameter:

```yaml
adapter: lora
```

Additionally, any of the LoRA hyperparameters can be configured explicitly to override Ludwig’s defaults:

```yaml
adapter:
  type: lora
  r: 16
  alpha: 32
  dropout: 0.1
```

Adapters can be added to any LLM model type, or to any pretrained auto_transformer text encoder in Ludwig, with the same parameter options.

In Ludwig v0.8, we’ve added native support for the following PEFT techniques with more to come:

- LoRA

- AdaLoRA

- Adaptation Prompt (a.k.a. LLaMA Adapter)
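To show what the `r` and `alpha` hyperparameters control, here is a minimal sketch of a LoRA-adapted linear layer: the pretrained weight W stays frozen and only a low-rank update (alpha / r) · B · A is trained. It assumes pure-Python matrices for illustration, omits LoRA dropout, and uses the alpha / r scaling convention that HuggingFace PEFT also uses.

```python
"""Sketch: a LoRA-adapted linear layer, y = W x + (alpha / r) * B (A x).

Assumptions: pure-Python matrices for illustration; dropout on the LoRA
path is omitted.
"""
import random


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


class LoRALinear:
    def __init__(self, weight: list[list[float]], r: int, alpha: float, seed: int = 0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen pretrained weight, never updated
        # A starts small and random while B starts at zero, so the adapter is
        # a no-op at initialization and the layer matches the base model.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scaling = alpha / r

    def forward(self, x: list[float]) -> list[float]:
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scaling * u for b, u in zip(base, update)]


def lora_trainable_params(d_in: int, d_out: int, r: int) -> int:
    """Only A (r x d_in) and B (d_out x r) are trained."""
    return r * (d_in + d_out)


W = [[1.0, 2.0], [3.0, 4.0]]
layer = LoRALinear(W, r=1, alpha=2.0)
print(layer.forward([1.0, 1.0]))  # [3.0, 7.0]: B is zero, so output equals W x

full = 4096 * 4096  # one 4096 x 4096 projection matrix
lora = lora_trainable_params(4096, 4096, r=16)
print(f"{lora:,} trainable vs {full:,} frozen ({100 * lora / full:.2f}%)")
```

For a single 4096 x 4096 projection at `r: 16`, the adapter trains well under 1% of that matrix's parameters, which is where the memory and speed savings of PEFT come from.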

Quantized 4-bit Training (QLoRA)

Even with Deepspeed, PEFT, cached encoder embeddings, and so on, it still takes multiple GPUs to train even a “modest” 7 billion parameter LLM. What if we could train a 7 billion parameter model on a single commodity GPU, or a 70 billion parameter model on a single A100?

In Ludwig v0.8, we’ve added support for 4-bit and 8-bit quantized training with LoRA (QLoRA), allowing users to train massive models on even modest GPU hardware. All it takes to enable this functionality is combining the “lora” adapter with the new “quantization” parameter in the LLM configuration:

```yaml
adapter:
  type: lora

quantization:
  bits: 4
```

The full example can be run on a single free GPU in a Google Colab notebook.
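Storing the frozen base weights in 4 bits (0.5 bytes per parameter) is what shrinks the memory footprint: roughly 3.5 GB of weights for a 7B model and 35 GB for a 70B model, before activations and LoRA state. QLoRA itself uses the NF4 data type with blockwise scaling; the sketch below substitutes a simpler symmetric absmax int4 scheme (explicitly not NF4) with an assumed block size of 64, just to show blockwise quantize/dequantize and its error bound.

```python
"""Sketch: blockwise symmetric absmax 4-bit quantization.

Assumption: a simplified int4 scheme for illustration, not the NF4 data
type that QLoRA / bitsandbytes actually use.
"""
import random

BLOCK_SIZE = 64
QMAX = 7  # signed 4-bit range used here: integers in [-7, 7]


def quantize(values: list[float]) -> list[tuple[float, list[int]]]:
    """Split into blocks; keep one float scale plus 4-bit integer codes per block."""
    blocks = []
    for start in range(0, len(values), BLOCK_SIZE):
        block = values[start:start + BLOCK_SIZE]
        scale = max(abs(v) for v in block) / QMAX or 1.0  # avoid divide-by-zero
        blocks.append((scale, [round(v / scale) for v in block]))
    return blocks


def dequantize(blocks: list[tuple[float, list[int]]]) -> list[float]:
    return [q * scale for scale, codes in blocks for q in codes]


rng = random.Random(0)
weights = [rng.gauss(0.0, 0.02) for _ in range(256)]
blocks = quantize(weights)
restored = dequantize(blocks)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(f"max abs error: {max_err:.5f}")

# Rough base-weight memory only (ignores scales, LoRA weights, activations).
for n_params in (7e9, 70e9):
    print(f"{n_params / 1e9:.0f}B params: fp16 {n_params * 2 / 1e9:.0f} GB, "
          f"4-bit {n_params * 0.5 / 1e9:.1f} GB")
```

Per-block scales keep a single outlier from degrading the precision of the whole tensor: each element's rounding error is bounded by half of its own block's scale.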

Prompt Templating

One of the unique properties of large language models as compared to more conventional deep learning models is their ability to incorporate context inserted into the “prompt” to generate more specific and accurate responses.

In Ludwig v0.8, we’ve introduced a new “prompt” parameter as part of the LLM model type, which we’ve also added to all text input features. The prompt can be used to:

- Provide the necessary boilerplate needed to make the LLM respond in the correct way (for example, with a response to a question rather than a continuation of the input sequence).

- Combine multiple columns from a dataset into a single text input feature (see TabLLM).

- Provide additional context to the model that can help it understand the task, or provide restrictions to prevent hallucinations.

Prompt templates in Ludwig use Python-style placeholder notation, where every placeholder corresponds to a column in the input dataset:

```yaml
prompt:
  template: "The {color} {animal} jumped over the {size} {object}"
```

When a prompt template like the above is provided, the prompt with all placeholders filled will be used as the text input feature value for the LLM.

Dataset:

| color | animal | size | object |
|-------|--------|------|--------|
| brown | fox | big | dog |
| white | cat | huge | rock |

Inputs:

"The brown fox jumped over the big dog"

"The white cat jumped over the huge rock"

Prompt templates can also be used to iterate on the prompt used for in-context learning (see below). For a given retrieved “context” and a given sample row to predict on, you can experiment with different prompt templates and measure their effect on model performance:

```yaml
prompt:
  template: >
    Here is the context:
    {__context__}

    Now answer the question:
    {__sample__}
```

Finally, when you only want to describe the task and leave the rest of the boilerplate untouched, Ludwig provides a lighter alternative to specifying a full prompt template: the “task” parameter.

```yaml
prompt:
  task: "Classify the sample input as either negative, neutral, or positive."
```

In this case, Ludwig will use a default template that includes the task, the sample, and the additional context (if provided).
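Because the templates use Python-style placeholders, their behavior can be previewed with `str.format`. The sketch below reproduces the dataset example from earlier in this section, then builds a prompt from a `task` using `DEFAULT_TEMPLATE`, which is a hypothetical stand-in and not Ludwig's actual default template.

```python
"""Sketch: filling Python-style prompt templates from dataset rows.

Assumption: DEFAULT_TEMPLATE and build_prompt are hypothetical illustrations,
not Ludwig's actual default template or API.
"""

rows = [
    {"color": "brown", "animal": "fox", "size": "big", "object": "dog"},
    {"color": "white", "animal": "cat", "size": "huge", "object": "rock"},
]
template = "The {color} {animal} jumped over the {size} {object}"

# Each placeholder is looked up by column name in the row.
inputs = [template.format(**row) for row in rows]
for text in inputs:
    print(text)

# Hypothetical default template used when only a task is given.
DEFAULT_TEMPLATE = "{__task__}\n\n{__context__}Sample input: {__sample__}\n\nOutput:"


def build_prompt(task: str, sample: str, context: str = "") -> str:
    """Insert the task, optional context, and sample into the default template."""
    ctx = f"Context:\n{context}\n\n" if context else ""
    return DEFAULT_TEMPLATE.format(__task__=task, __context__=ctx, __sample__=sample)


print(build_prompt("Classify the sample input as either negative, neutral, or positive.",
                   "The plot dragged, but the acting was superb."))
```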

Zero-Shot and In-Context Learning

A key benefit of the declarative interface is that it allows us to abstract away the tricky infrastructure challenges that surround the rapidly evolving LLM landscape, so users can focus on their tasks and experiment with different models by swapping a single parameter of the config file.

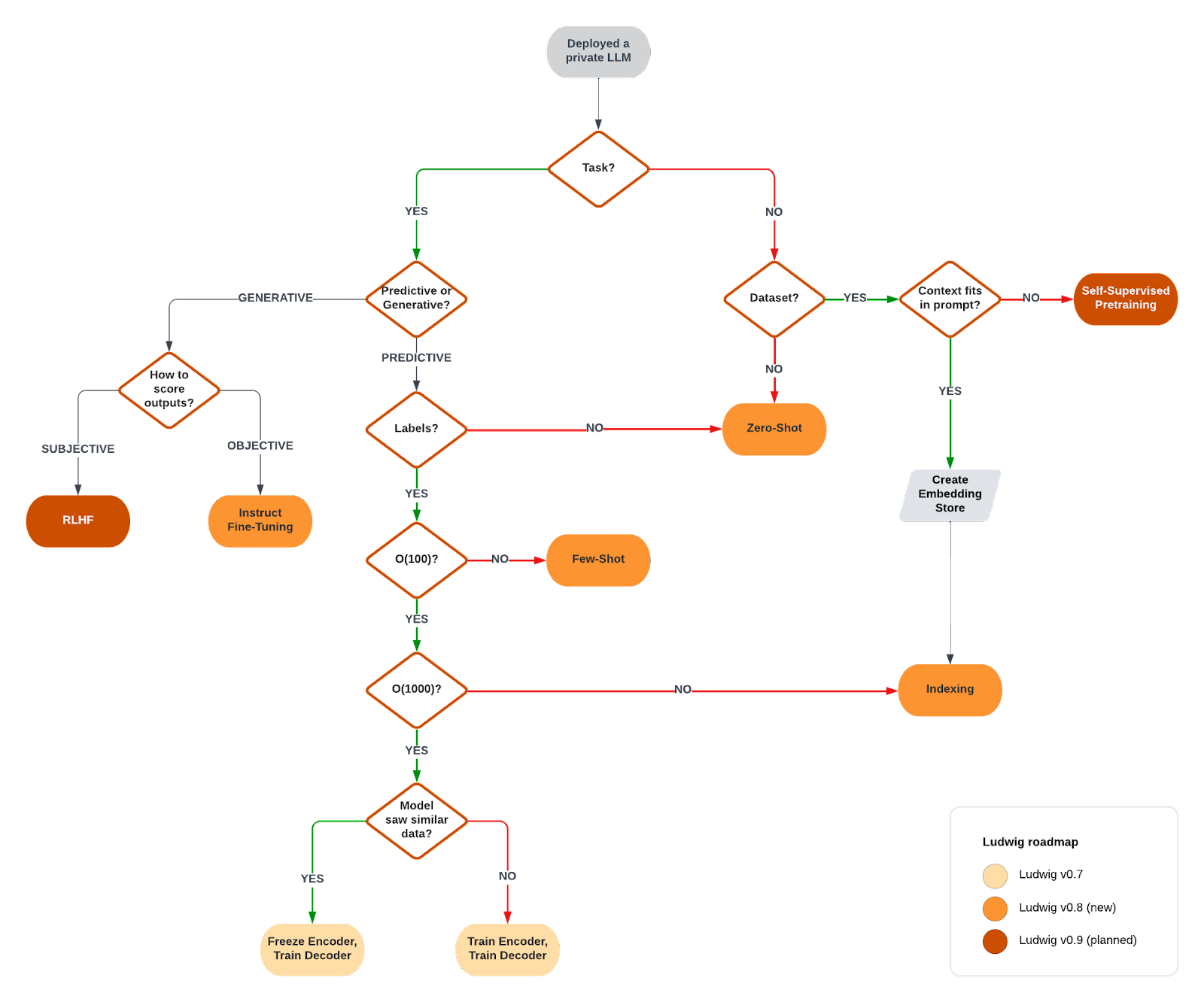

One of the most common questions we hear from users is about when it’s appropriate to use fine-tuning vs. a different approach to model customization (for example: in-context learning, continued pretraining, or RLHF). The reality is it’s not always clear what approach will work best for your task, which is why you need a framework that will guide you through the process each step of the way.

Ludwig LLM roadmap

With Ludwig v0.8, we allow users to easily progress from zero-shot learning, to in-context learning, to parameter efficient fine-tuning, and finally to full fine-tuning with just a couple of parameter changes to the config.

```yaml
prompt:
  template: "Rate sentiment of this review: {review}"
```

Zero-shot learning

```yaml
prompt:
  template: "Rate sentiment of this review: {review}"
  retrieval:
    k: 3
```

Few-shot / In-context learning

```yaml
prompt:
  template: "Rate sentiment of this review: {review}"
trainer:
  type: finetune
```

Fine-tuning

This gives users a consistent experience for assessing the value of fine-tuning against lighter-weight prompt engineering and in-context learning, by comparing evaluation metrics on the same held-out test dataset for the task.

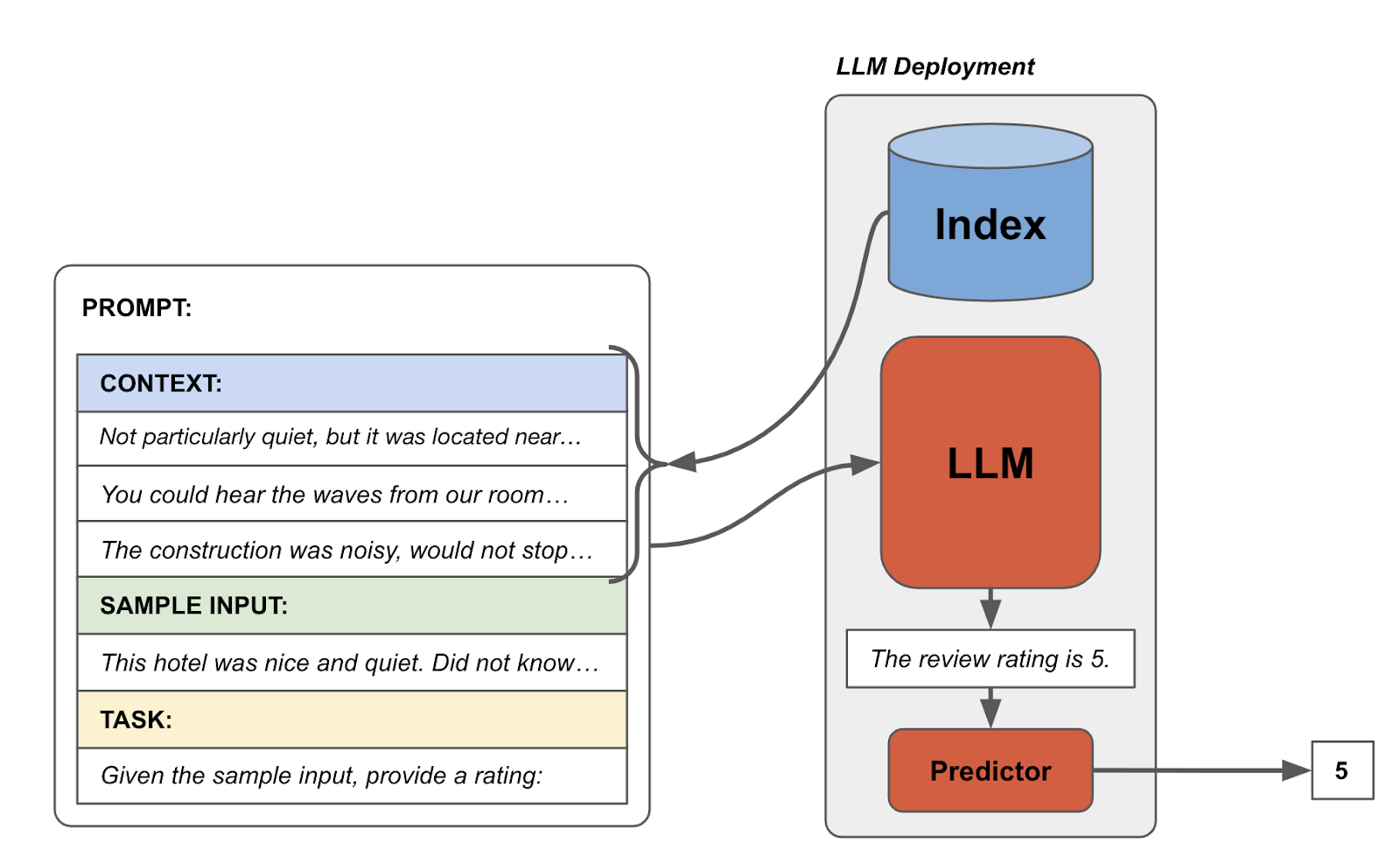

Retrieval-Augmented In-Context Learning

In addition to improving performance on question answering tasks (retrieval augmented generation), information retrieval systems (e.g., embedding model + vector index) can also be a useful way to improve results with in-context learning on predictive tasks.

The idea is to take the “training” split of your dataset, embed it using a semantic embedding model like a Sentence Transformer, and insert these embeddings into a vector index like FAISS. Then, during evaluation on the “test” split, the top k most relevant training samples (with their labels) are inserted into the prompt to improve prediction performance with in-context / few-shot learning.

How retrieval-augmented in-context learning works.

We integrated this entire process in Ludwig, and you can configure it in the retrieval section of the prompt configuration:

```yaml
prompt:
  task: "Given the sample input, provide a rating."
  retrieval:
    type: semantic
    k: 3
    model_name: paraphrase-MiniLM-L3-v2
```

Ludwig configuration for retrieval-augmented in-context learning.

A detailed example can be found here.
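The retrieval flow described above (embed the training split, index it, fetch the top k labeled neighbors, and place them in the prompt) can be sketched end to end in pure Python. This is a toy under stated assumptions: a bag-of-words `embed` stands in for a Sentence Transformer such as paraphrase-MiniLM-L3-v2, brute-force cosine search stands in for a FAISS index, and the prompt layout is illustrative rather than Ludwig's exact format.

```python
"""Sketch: retrieval-augmented in-context learning.

Assumptions: bag-of-words embeddings replace a Sentence Transformer,
brute-force cosine search replaces FAISS, and the prompt layout is illustrative.
"""
import math
from collections import Counter


def embed(text: str) -> Counter:
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


train_split = [
    ("the food was delicious and fresh", "5"),
    ("service was slow and the food was cold", "2"),
    ("great atmosphere and friendly staff", "4"),
    ("terrible experience, never coming back", "1"),
]
# "Index" the training split once as (embedding, text, label) triples.
index = [(embed(text), text, label) for text, label in train_split]


def retrieve(query: str, k: int = 3) -> list[tuple[str, str]]:
    """Return the k most similar labeled training examples."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[0]), reverse=True)
    return [(text, label) for _, text, label in ranked[:k]]


def build_prompt(task: str, sample: str, k: int = 3) -> str:
    """Put the retrieved examples, with labels, ahead of the sample to predict."""
    shots = "\n".join(f"Input: {text}\nRating: {label}" for text, label in retrieve(sample, k))
    return f"{task}\n\n{shots}\n\nInput: {sample}\nRating:"


print(build_prompt("Given the sample input, provide a rating.", "the food was cold", k=2))
```

Because only the prompt changes, this approach needs no gradient updates; the quality of the retrieved examples, and therefore of the embedding model, drives most of the gain.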

Start Customizing LLMs with Ludwig v0.8

If you’re curious to explore building and customizing your own open-source LLM, we encourage you to try out Ludwig v0.8, and let us know what you think! You can find us on GitHub or Discord if you’d like to get in touch. You can also check out the latest Ludwig docs for Ludwig 0.8 here.

In addition to the new LLM features we discussed here, there’s a lot more we didn’t cover that we encourage you to explore, including:

- Uploading your fine-tuned LLMs directly to HuggingFace: You can push fine-tuned LLM model weights from Ludwig directly to a new or pre-existing, public or private HuggingFace Hub model repository, and make new commits with updated model weights as you iterate on fine-tuning. Uploading to HuggingFace is as simple as running:

```bash
ludwig upload hf_hub --repo_id <repo_id> --model_path </path/to/saved/model>
```

Additional arguments, such as whether to make the repository public or private and a commit message, are also supported.

- RoPE Scaling to increase context lengths to 8K, 16K, 32K, and beyond during fine-tuning: Large language models like LLaMA-2 are limited in the length of context they can consider, which impacts their capacity to comprehend intricate queries or chat-style discussions spanning multiple paragraphs. For instance, LLaMA-2's context is capped at 4096 tokens, or roughly 3000 English words, which makes the model ineffective for tasks involving documents longer than this. RoPE Scaling offers a way to increase the context length of your model, at the cost of a slight performance penalty, using a method called Position Interpolation. You can read more about it in the original paper here.

There are two parameters to consider for RoPE scaling: `type` and `factor`. Ludwig supports two types of interpolation, `linear` and `dynamic`; `dynamic` has been shown to perform better over larger context windows. To pick the right `factor`, the typical rule of thumb is that your new context length will be the base `context_length` * `factor`. So, to extend LLaMA-2 to a context length of ~16K tokens, you would set the factor to 4.0.

```yaml
model_parameters:
  rope_scaling:
    type: dynamic
    factor: 4.0
```

- Supercharging image and audio preprocessing by over 50% using Daft:

Through v0.7, Ludwig used Ray Datasets to parallelize image and audio downloads from remote URLs. Although this worked fine in most cases, it was consistently a bottleneck during image and audio preprocessing: it didn’t saturate network bandwidth, leading to suboptimal download throughput; it often caused out-of-memory errors because of sensitive dataset partitioning heuristics; and it produced noisy logs for failed URL downloads.

In Ludwig 0.8, we switched URL read parallelization from Ray Datasets to Daft, a new, fast distributed Python dataframe library optimized for machine learning workloads, with a backend written almost entirely in Rust. Check it out here! Daft can run locally, but it also integrates with the Ray Datasets ecosystem, which makes it even easier to integrate into Ludwig’s preprocessing pipeline. Daft gives Ludwig a 50% speedup over native Ray Datasets for URL downloading, and an overall 40% speedup for all of preprocessing in both the single-machine and distributed cases. It also significantly reduces disk spillage when running with Ray, and it collapses failed image reads internally, reducing the verbosity of logs. There’s nothing you have to change in your Ludwig config to use this; it comes out of the box with Ludwig 0.8. In the near future, we plan to integrate Daft more deeply into Ludwig. Stay tuned.

- Support for PyTorch 2.0 model compilation and Pandas 2.0 PyArrow types.
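The RoPE scaling rule of thumb described above (new context length = base context length × factor) can be checked with a small sketch of linear Position Interpolation, which divides each position by the factor so that extended positions are rotated like positions inside the originally trained range. It assumes standard RoPE with frequency base 10000 and head dimension 128 (LLaMA-2-7B values) and covers only the `linear` type; `dynamic` scaling adjusts the frequencies differently and is not shown.

```python
"""Sketch: RoPE rotation angles with linear position interpolation.

Assumptions: standard RoPE (frequency base 10000, head dimension 128);
only `linear` scaling is shown, not `dynamic`.
"""

ROPE_BASE = 10000.0
HEAD_DIM = 128
BASE_CONTEXT = 4096  # LLaMA-2 pretraining context length


def target_context(base_context: int, factor: float) -> int:
    """Rule of thumb: new context length = base context length * factor."""
    return int(base_context * factor)


def rope_angles(position: float, head_dim: int = HEAD_DIM) -> list[float]:
    """Rotation angle for each of the head_dim / 2 frequency pairs."""
    return [position / ROPE_BASE ** (2 * i / head_dim) for i in range(head_dim // 2)]


def interpolated_angles(position: int, factor: float) -> list[float]:
    # Linear interpolation squeezes positions back into the trained range.
    return rope_angles(position / factor)


factor = 4.0
print("target context:", target_context(BASE_CONTEXT, factor))  # 16384
last = target_context(BASE_CONTEXT, factor) - 1
# Position 16383 is rotated like position 4095.75, inside the trained [0, 4096).
print(interpolated_angles(last, factor)[0])
```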

We’re excited for the community to try fine-tuning large language models with Ludwig v0.8!

In the v0.9 release, we’ll be continuing to add even more LLM training and customization options including continued pretraining and direct preference optimization. We’ll also be adding capabilities to train custom embedding models to support retrieval-augmented generation (RAG). If any of this sounds interesting and you’d like to contribute, please reach out! We’d love to collaborate with you.

If you’re interested in training and deploying LLMs on managed AI infrastructure, check out Predibase!

We’d like to acknowledge the contributions of:

- Arnav Garg and Geoffrey Angus for building out the core LLM fine-tuning and in-context learning capabilities into Ludwig.

- Travis Addair for contributing the Deepspeed integration and QLoRA support.

- Jay Chia, Sammy Sidhu, and Clark Zinzow from Eventual for contributing the Daft integration for image and audio preprocessing into Ludwig.

- Justin Zhao, Wael Abid, Jeffery Kinnison, Joppe Geluykens, Kabir Brar, and Connor McCormick for various bug fixes and improvements.