LLM Fine-tuning Use Case

Content Summarization

Summarizing key information from unstructured text has applications across many domains like customer support, healthcare, recruiting, and more. However, manually creating clear and accurate summaries is time-consuming and requires expert knowledge — that’s where LLMs come in. Learn how to fine-tune a small open-source language model to automatically generate highly accurate summaries for any use case.

The Predibase Solution

Generate accurate summaries with LLMs.

- Fine-tune a small language model (Llama-3.1-8B-Instruct) on a dataset of dialogues.

- Instantly deploy and query your high-quality dialogue summarizer on serverless endpoints.

- Use automatically generated summaries to reduce the time needed to interpret large volumes of text, thereby speeding up decision-making.
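As a rough illustration of the first step, the sketch below turns (dialogue, summary) pairs into prompt/completion records serialized as JSON Lines. The field names `prompt` and `completion`, the prompt template wording, and the helpers `to_training_record` and `to_jsonl` are assumptions for illustration. They are not the exact format used in the Predibase notebook.

```python
import json

# Hypothetical instruction template; the wording in the actual notebook may differ.
PROMPT_TEMPLATE = (
    "Summarize the following dialogue in a few sentences.\n\n"
    "### Dialogue:\n{dialogue}\n\n"
    "### Summary:\n"
)


def to_training_record(dialogue: str, summary: str) -> dict:
    """Build one prompt/completion pair (the field names are an assumption)."""
    return {
        "prompt": PROMPT_TEMPLATE.format(dialogue=dialogue.strip()),
        "completion": summary.strip(),
    }


def to_jsonl(pairs) -> str:
    """Serialize (dialogue, summary) pairs as JSON Lines, one record per line."""
    # json.dumps escapes embedded newlines, so each record stays on one line.
    return "\n".join(json.dumps(to_training_record(d, s)) for d, s in pairs)


if __name__ == "__main__":
    examples = [
        ("A: Did you book the meeting room?\nB: Yes, for Friday at 3.",
         "B booked the meeting room for Friday at 3."),
    ]
    print(to_jsonl(examples))
```

Keeping the template in one constant means the same wording can be reused at inference time, so the prompts the model sees when serving match the ones it was trained on.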

Fine-tune and Serve Your Own Small Language Model for Text Summarization

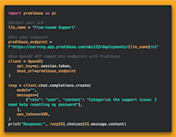

Easily and efficiently fine-tune the open-source Meta-Llama-3.1-8B-Instruct model to generate high-quality summaries of lengthy conversations. Then serve and prompt your fine-tuned dialogue summarizer cost-effectively on endpoints built on top of the open-source LoRAX serving framework.
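For readers running LoRAX themselves, the sketch below shows what a single summarization request to a LoRAX server's `/generate` route might look like, with the fine-tuned LoRA adapter selected per request through `adapter_id`. The server address `http://127.0.0.1:8080`, the adapter name `my-org/dialogue-summarizer`, and the helpers `build_generate_payload` and `summarize` are assumptions for illustration. Predibase serverless endpoints use their own URLs and authentication, which are not shown here.

```python
import json
import urllib.request

# Assumed address of a self-hosted LoRAX server (not a Predibase endpoint).
LORAX_URL = "http://127.0.0.1:8080/generate"
# Hypothetical name of the fine-tuned summarization adapter.
ADAPTER_ID = "my-org/dialogue-summarizer"


def build_generate_payload(prompt: str, adapter_id: str, max_new_tokens: int = 128) -> dict:
    """Request body for LoRAX's /generate route; adapter_id picks the LoRA adapter."""
    return {
        "inputs": prompt,
        "parameters": {"adapter_id": adapter_id, "max_new_tokens": max_new_tokens},
    }


def summarize(prompt: str, url: str = LORAX_URL, adapter_id: str = ADAPTER_ID) -> str:
    """POST the prompt to a running LoRAX server and return the generated text."""
    body = json.dumps(build_generate_payload(prompt, adapter_id)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.loads(resp.read())["generated_text"]
```

Because the adapter is named in each request rather than baked into the deployment, one base-model server can host many fine-tuned adapters side by side. That sharing is what makes LoRAX-style serving cost-effective.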

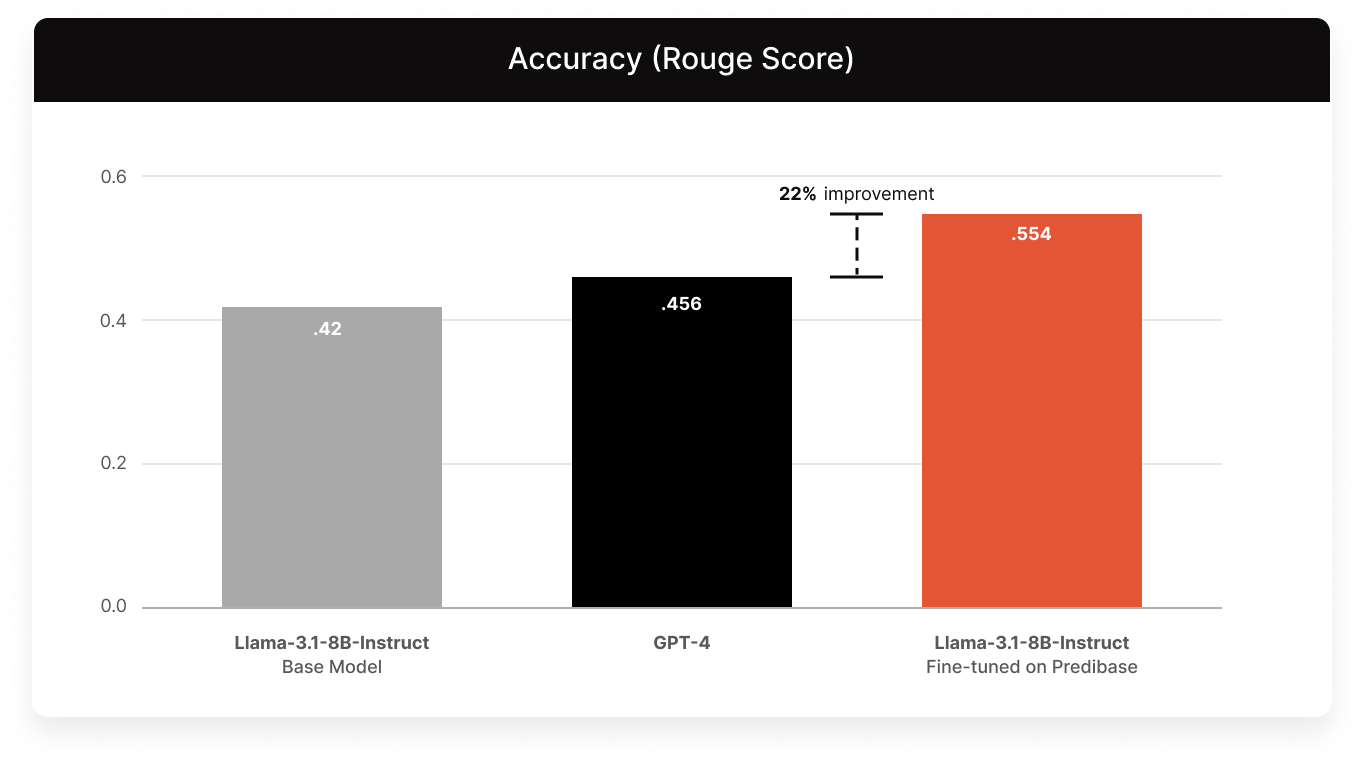

Performance Evaluation: Predibase vs. GPT-4

On this summarization task, a small language model fine-tuned on Predibase outperforms GPT-4. The accompanying diagram compares the ROUGE-1 score of our fine-tuned SLM (Llama-3.1-8B-Instruct) with the base model and with GPT-4. The fine-tuned SLM shows a 22% improvement over GPT-4.
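ROUGE-1 scores a generated summary by its unigram (single-word) overlap with a reference summary. The sketch below computes ROUGE-1 precision, recall, and F1 from clipped word counts. It is a simplified stand-in, not the scorer behind the reported numbers: it lowercases, keeps alphanumeric runs, and applies no stemming. Standard packages such as `rouge-score` tokenize somewhat differently, so their scores can differ slightly.

```python
import re
from collections import Counter


def _tokens(text: str) -> list[str]:
    # Simplified tokenizer: lowercase and keep alphanumeric runs (no stemming).
    return re.findall(r"[a-z0-9]+", text.lower())


def rouge1(reference: str, candidate: str) -> dict:
    """ROUGE-1 precision, recall, and F1 from clipped unigram overlap."""
    ref, cand = Counter(_tokens(reference)), Counter(_tokens(candidate))
    # Counter intersection keeps min(count in ref, count in cand) for each word.
    overlap = sum((ref & cand).values())
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# 5 of the 6 words overlap, so precision, recall, and F1 are all about 0.833.
print(rouge1("the cat sat on the mat", "the cat lay on the mat"))
```

Recall rewards covering the reference's content and precision penalizes padding, so F1 is the usual single number reported when comparing models.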

Resources to Get Started

Download the Notebook

Download our free notebook to fine-tune and deploy your own summarization model using conversation data.

Visit our Blog

Check out the Predibase blog for our latest thought leadership and tutorials on all things GenAI and LLMs.

Try Out Code Generation

Visit our SQL Copilot solution page to try out fine-tuning for code generation.