LLM Fine-tuning Use Case

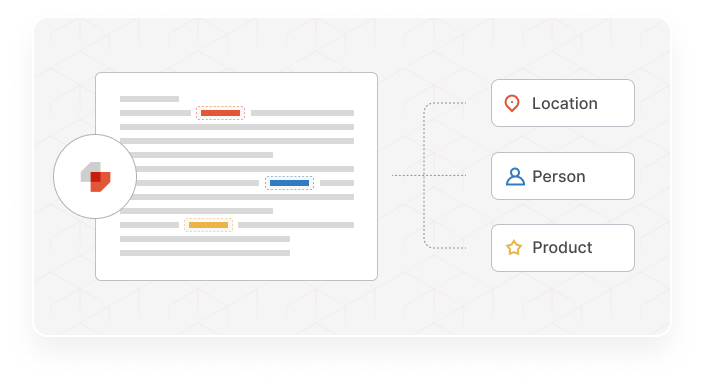

Structured Information Extraction

Extracting structured information from unstructured text has applications across industries, such as pulling patient information from medical reports or financial data from quarterly call transcripts. However, data extraction can be a manual, time-consuming endeavor. Learn how to fine-tune open-source LLMs to automatically generate structured outputs for downstream use.

The Predibase Solution

Generate structured outputs from unstructured text

- Efficiently fine-tune open-source LLMs like Llama-2 with built-in best-practice optimizations like LoRA and quantization

- Instantly deploy and prompt your fine-tuned LLM on a serverless endpoint

- Automate the generation of structured outputs like JSON

Fine-tune and serve your own LLM for Information Extraction

Easily and efficiently fine-tune Llama-2 with built-in optimizations such as quantization and LoRA to generate structured JSON outputs. Instantly serve and prompt your fine-tuned LLM with cost-efficient serverless endpoints built on top of open-source LoRAX. Read the full tutorial.
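
To make the idea of structured JSON outputs concrete, here is a minimal sketch of two pieces that sit around a fine-tuned model: building a prompt/completion training record, and validating the JSON the model returns. It uses only the Python standard library and does not call Predibase or LoRAX. The field names, the prompt wording, and the helpers `build_prompt`, `make_training_record`, and `parse_output` are all hypothetical, chosen for illustration only.

```python
import json

# Hypothetical schema for illustration: fields to extract from a medical report.
REQUIRED_FIELDS = {"patient_name": str, "age": int, "diagnosis": str}


def build_prompt(text: str) -> str:
    """Wrap raw text in an instruction asking for JSON with the schema's fields."""
    fields = ", ".join(REQUIRED_FIELDS)
    return (
        "Extract the following fields from the report and answer with JSON only.\n"
        f"Fields: {fields}\n\nReport:\n{text}\n\nJSON:"
    )


def make_training_record(text: str, labels: dict) -> dict:
    """Pair a prompt with its target JSON string, one row of a fine-tuning dataset."""
    return {
        "prompt": build_prompt(text),
        "completion": json.dumps(labels, sort_keys=True),
    }


def parse_output(raw: str):
    """Return the parsed dict if it matches the schema, otherwise None."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    for name, expected_type in REQUIRED_FIELDS.items():
        value = data.get(name)
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            return None
    return data
```

Validating every response this way lets downstream systems reject malformed generations instead of silently ingesting them, which matters most before the model has been fine-tuned to follow the schema reliably.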

Resources to Get Started

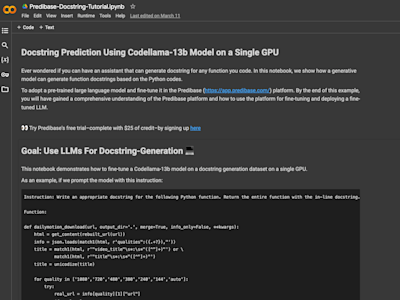

Read the Tutorial

Check out our step-by-step tutorial to learn how you can fine-tune an LLM to extract structured outputs from unstructured text.

Download the Guidebook

Download our definitive guide to fine-tuning for practical advice and best practices on tackling the four biggest challenges of fine-tuning.

Visit the Resource Center

Visit the Predibase resource center to see our collection of ebooks and webinars on topics from multimodal ML to computer vision.