LLM Fine-tuning Use Case

Customer Service Automation

A customer service call can cost an organization upwards of $40. Automating customer support processes can reduce overhead by millions of dollars. Learn how to fine-tune open-source LLMs to automatically classify support issues and generate a customer response.

The Predibase Solution

Streamline customer support operations with LLMs

- Efficiently fine-tune open-source LLMs like Llama 2 on customer support transcripts

- Instantly deploy and prompt your fine-tuned LLM on serverless endpoints

- Automate issue identification and generate draft responses for agents to send
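
To make the workflow above concrete, here is a minimal sketch of how labeled support transcripts might be turned into prompt/completion pairs for fine-tuning. The field names (`transcript`, `issue`, `response`), the prompt wording, and the sample data are all illustrative assumptions, not a required schema or the format used in the tutorial.

```python
import json

# Hypothetical prompt template; the wording is an assumption for illustration.
PROMPT_TEMPLATE = (
    "You are a customer support assistant. Read the transcript below, "
    "name the issue category, and draft a reply.\n\n"
    "Transcript: {transcript}\n\nAnswer: "
)


def to_training_record(example):
    """Turn one labeled transcript into a prompt/completion pair."""
    prompt = PROMPT_TEMPLATE.format(transcript=example["transcript"].strip())
    # The completion holds both targets: the issue label and the reply text.
    completion = json.dumps(
        {"issue": example["issue"], "response": example["response"]}
    )
    return {"prompt": prompt, "completion": completion}


# Made-up sample data, only to show the shape of the records.
examples = [
    {
        "transcript": "I was charged twice for my subscription this month. ",
        "issue": "billing",
        "response": "Sorry about the duplicate charge. We have issued a refund.",
    },
    {
        "transcript": "The app logs me out every time I open it.",
        "issue": "technical",
        "response": "Thanks for reporting this. Please update to the latest version.",
    },
]

records = [to_training_record(e) for e in examples]
```

Putting the issue label and the reply in a single JSON completion lets one fine-tuned model handle both classification and response generation, and keeps the output easy to parse downstream.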

Fine-tune and serve your own LLM for Customer Support

Efficiently fine-tune any open-source LLM with built-in optimizations like quantization, LoRA, and memory-efficient distributed training, combined with right-sized GPU engines. Instantly serve and prompt your fine-tuned LLMs with cost-efficient serverless endpoints built on top of open-source LoRAX. Read the full tutorial.
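
As a rough illustration of why LoRA makes fine-tuning cheaper, the sketch below compares the number of trainable parameters in a full weight matrix with the number in a low-rank adapter for that matrix. LoRA freezes the original `d_out x d_in` weight and trains two small matrices of shape `d_out x r` and `r x d_in`. The hidden size of 4096 matches Llama-2-7B; the rank of 8 is an assumed example value, not a recommended setting.

```python
def full_params(d_out, d_in):
    """Trainable parameters when updating the full weight matrix."""
    return d_out * d_in


def lora_params(d_out, d_in, rank):
    """Trainable parameters for a LoRA adapter: B (d_out x r) plus A (r x d_in)."""
    return rank * (d_out + d_in)


hidden = 4096  # hidden size of Llama-2-7B
rank = 8       # assumed LoRA rank, chosen only for illustration

full = full_params(hidden, hidden)
lora = lora_params(hidden, hidden, rank)
ratio = lora / full

print(f"full: {full:,}  lora: {lora:,}  ratio: {ratio:.4%}")
```

For a single 4096 x 4096 projection, the adapter trains 65,536 parameters instead of 16,777,216, which is about 0.39% of the original. The adapters are also small enough that many of them can share a single base model at serving time.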

Resources to Get Started

Watch the Webinar

Learn how to easily and efficiently fine-tune Zephyr-7B to accurately predict the Task Type for customer support requests.

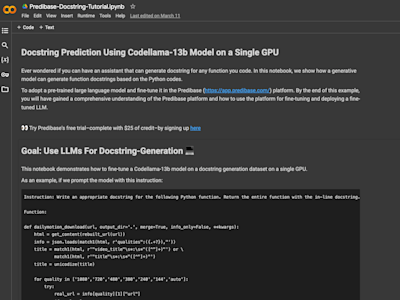

Read the Tutorial

Check out our step-by-step tutorial to learn how you can fine-tune an LLM for customer support - notebook and data included.

Read the Guidebook

Download our definitive guide to fine-tuning to get practical advice and best practices for tackling the four biggest challenges of fine-tuning.