Google recently released Gemma, a family of state-of-the-art open LLMs licensed free of charge for research and commercial use. Gemma models share technical and infrastructure components with Google’s Gemini models, which helps the 2B and 7B parameter versions achieve best-in-class performance for their sizes compared to other open models. In fact, Gemma surpasses significantly larger models on key benchmarks.

Gemma models come in two variants, base and instruct, each available in 2B and 7B sizes. In particular, Gemma-7B scores an impressive 64.3 on MMLU, placing it among the highest-performing open models currently available.

Gemma models are well-suited for a variety of text generation tasks, including question answering, summarization, and reasoning. Furthermore, Gemma can be customized for many practical use cases driven by text prompts. This gives developers a viable, cost-effective alternative to commercial LLMs.

If you want to improve the performance of Gemma for your use case, you will first want to fine-tune the model on your task-specific data (we believe the future is “fine-tuned”; please read about our research on the topic in this post: Specialized AI).

For teams new to fine-tuning, the process can be complex. Developers face numerous roadblocks, such as complicated APIs and the challenge of implementing the various optimization techniques needed to train models quickly, reliably, and cost-effectively. To help you avoid the dreaded out-of-memory (OOM) error, we have made it easy to fine-tune the “instruct” variant of Gemma-7B (“Gemma-7B-Instruct”) for free on commodity hardware using Ludwig, a powerful open-source framework for highly optimized model training through a declarative, YAML-based interface. Ludwig provides a number of optimizations out of the box, such as automatic batch size tuning, gradient checkpointing, parameter-efficient fine-tuning (PEFT), and 4-bit quantization (QLoRA), and has a thriving community with over 10,000 stars on GitHub.
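To see why out-of-memory errors are so common with models of this size, a rough estimate of the weight footprint helps. The sketch below is a back-of-the-envelope calculation, not a measurement: it assumes a nominal 7 billion parameters (the real count differs) and a 16 GB GPU such as the T4, and it ignores activations, gradients, and optimizer state, which add to the total.

```python
# Back-of-the-envelope estimate of the memory needed just to hold model weights.
# Assumptions (not from the article): a nominal 7e9 parameters and a 16 GB GPU
# such as the NVIDIA T4; real parameter counts and runtime overheads differ.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "4-bit": 0.5}
NOMINAL_PARAMS = 7e9
GPU_MEMORY_GB = 16.0


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in BYTES_PER_PARAM:
    size = weight_memory_gb(NOMINAL_PARAMS, precision)
    share = size / GPU_MEMORY_GB
    print(f"{precision:>5}: {size:4.1f} GB of weights ({share:.0%} of the GPU)")
```

Under these assumptions, half-precision weights alone take about 14 GB, which leaves very little of a 16 GB card for everything else, while 4-bit weights take about 3.5 GB.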

Let's get started!

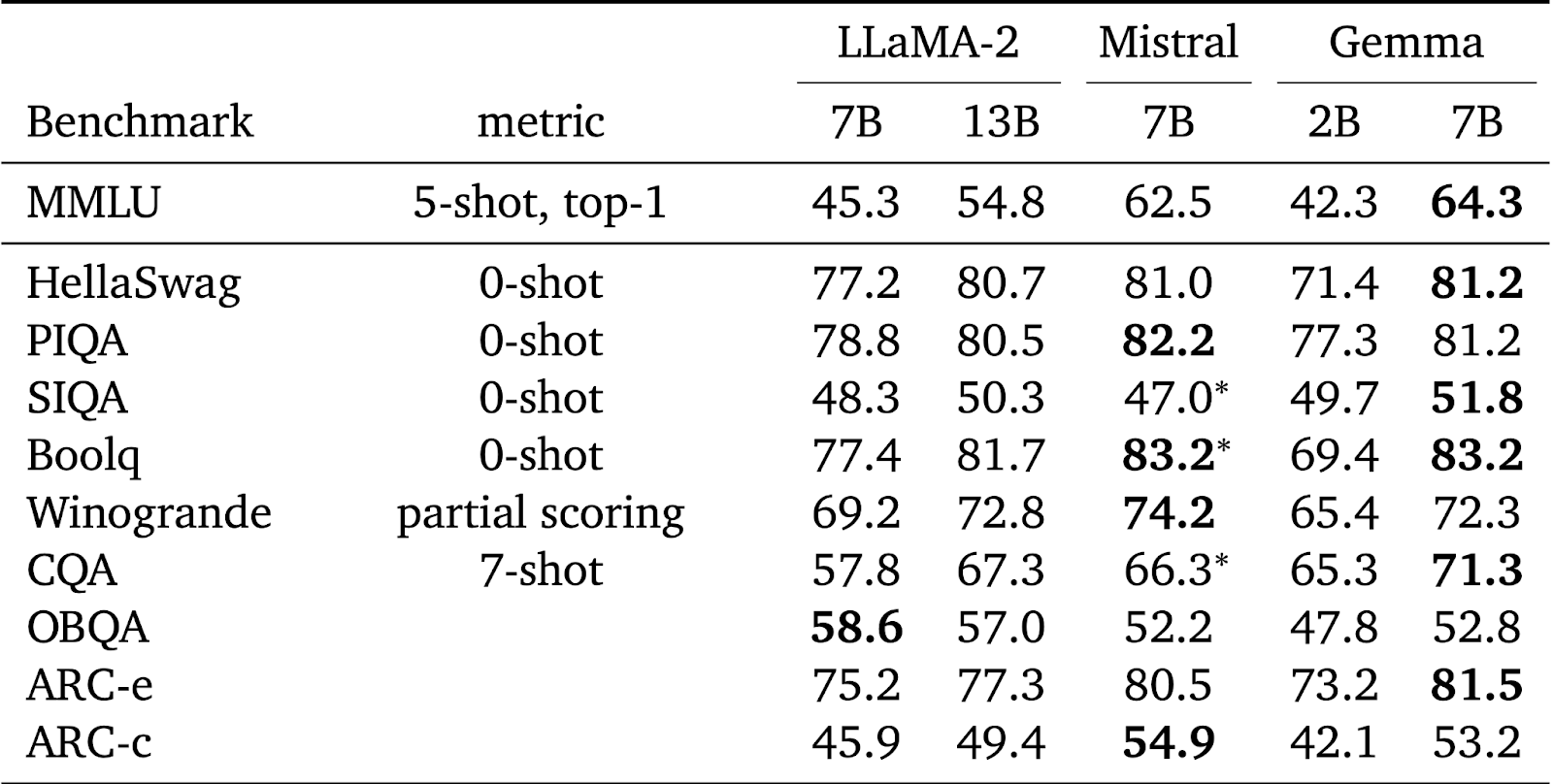

Gemma-7B Performance Benchmarks

As mentioned earlier, Gemma-7B has shown strong results on a number of benchmarks, even when compared with significantly larger (e.g., 70B parameter) models:

Google's Gemma Benchmarks: https://storage.googleapis.com/deepmind-media/gemma/gemma-report.pdf

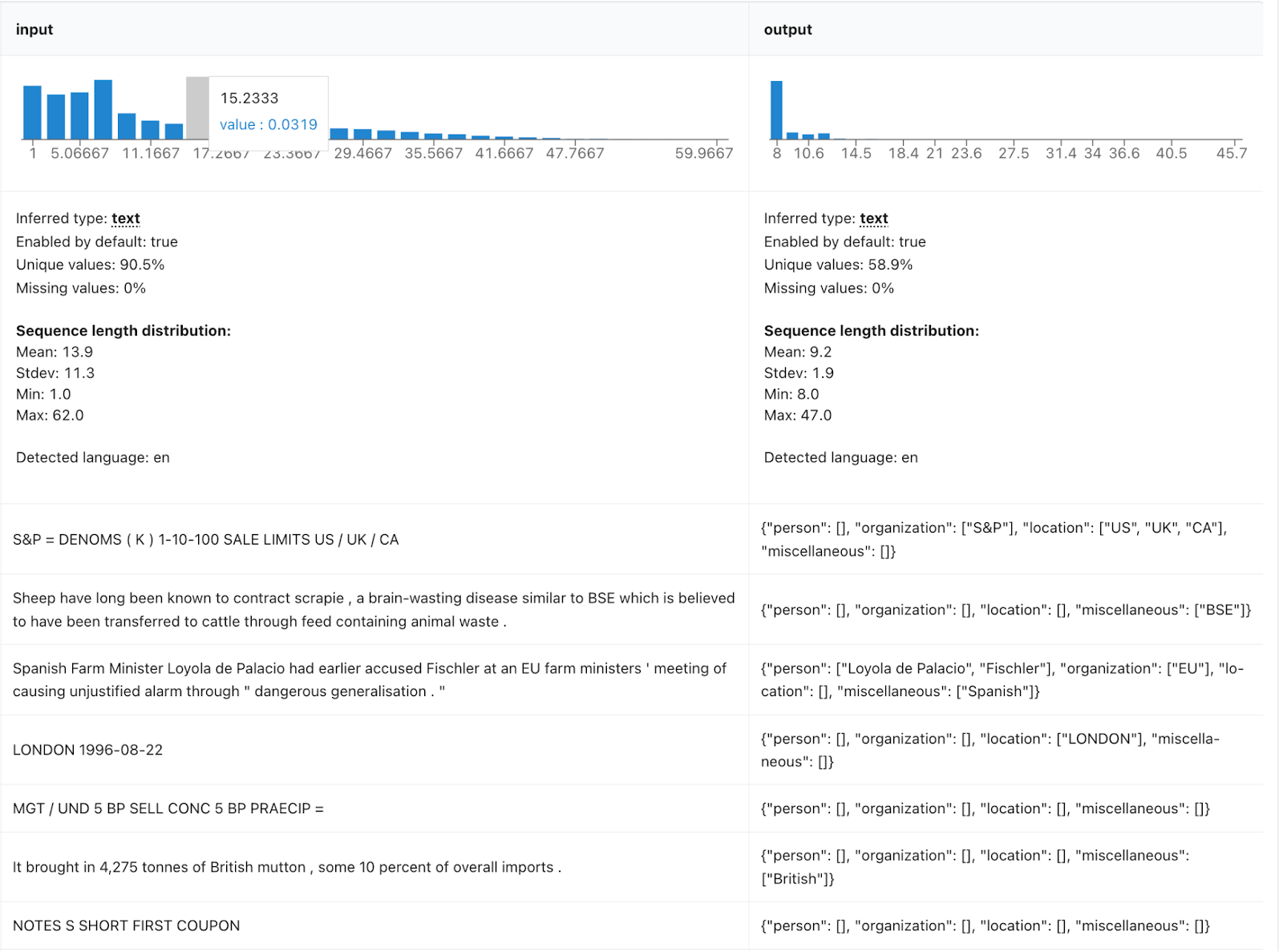

Our Fine-Tuning Dataset

For this fine-tuning example, we will use the CoNLL-2003 dataset, which focuses on named entity recognition (we use the English portion, since Gemma was trained primarily on English text):

A screenshot of the CoNLL-2003 dataset that we used to fine-tune Gemma-7B-Instruct.
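CoNLL-2003 annotates each token with a tag such as `B-ORG` or `O`, whereas the prompt used later in this post asks for a JSON payload grouped by category. The following is a minimal sketch of one way to convert between the two. The helper name `bio_to_payload` and the tag-to-category mapping are illustrative assumptions, not necessarily how the dataset for this post was prepared.

```python
import json

# Assumed mapping from CoNLL-2003 tag suffixes to the category names in the prompt.
CATEGORIES = {
    "PER": "person",
    "ORG": "organization",
    "LOC": "location",
    "MISC": "miscellaneous",
}


def bio_to_payload(tokens, tags):
    """Group tagged tokens into a {category: [entity, ...]} dictionary."""
    payload = {name: [] for name in CATEGORIES.values()}
    current_tokens, current_type = [], None

    def flush():
        # Store the entity collected so far (if any) and reset the buffer.
        nonlocal current_tokens, current_type
        if current_tokens:
            payload[CATEGORIES[current_type]].append(" ".join(current_tokens))
        current_tokens, current_type = [], None

    for token, tag in zip(tokens, tags):
        if tag == "O":
            flush()
            continue
        prefix, entity_type = tag.split("-", 1)
        # A "B-" tag or a change of entity type starts a new entity.
        if prefix == "B" or entity_type != current_type:
            flush()
            current_type = entity_type
        current_tokens.append(token)
    flush()
    return payload


tokens = "EU rejects German call to boycott British lamb .".split()
tags = ["B-ORG", "O", "B-MISC", "O", "O", "O", "B-MISC", "O", "O"]
print(json.dumps(bio_to_payload(tokens, tags)))
```

For this sentence, the result matches the example payload shown in the prompt template: one organization ("EU") and two miscellaneous entities ("German" and "British").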

How to Fine-Tune Gemma-7B with Ludwig

To fine-tune a 7B parameter model economically, we will combine 4-bit quantization, adapter-based fine-tuning, and gradient checkpointing to reduce the memory overhead as much as possible. This lets us fine-tune Gemma-7B-Instruct on a commodity GPU (e.g., the T4 in a free Google Colab instance). Because Ludwig is a declarative framework, all you need to do to fine-tune an LLM is provide a simple configuration. Here is the configuration we will use for fine-tuning Gemma-7B-Instruct:

    model_type: llm
    base_model: google/gemma-7b-it

    input_features:
      - name: prompt
        type: text
        preprocessing:
          max_sequence_length: 137

    output_features:
      - name: output
        type: text
        preprocessing:
          max_sequence_length: null

    prompt:
      template: |-
        Your task is a Named Entity Recognition (NER) task. Predict the category of
        each entity, then place the entity into the list associated with the
        category in an output JSON payload. Below is an example:

        Input: EU rejects German call to boycott British lamb . Output: {{"person":
        [], "organization": ["EU"], "location": [], "miscellaneous": ["German",
        "British"]}}

        Now, complete the task.

        Input: {input} Output:

    preprocessing:
      split:
        type: fixed
        column: split
      global_max_sequence_length: 170

    adapter:
      type: lora
      target_modules:
        - q_proj
        - v_proj

    quantization:
      bits: 4

    generation:
      max_new_tokens: 64

    trainer:
      type: finetune
      optimizer:
        type: paged_adam
      batch_size: 1
      eval_steps: 100
      train_steps: 50000
      learning_rate: 0.0002
      eval_batch_size: 1
      steps_per_checkpoint: 1000
      learning_rate_scheduler:
        decay: cosine
        warmup_fraction: 0.03
      gradient_accumulation_steps: 16
      enable_gradient_checkpointing: true

Sample Ludwig code for fine-tuning Gemma-7B-Instruct
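The doubled braces in the prompt template are worth a note. The sketch below assumes the template is filled in with Python-style format substitution, which the `{{` and `}}` escapes suggest: `{input}` is replaced with the value from the dataset's `input` column, and the doubled braces become literal JSON braces. The template here is a shortened stand-in, and the example row is hypothetical.

```python
# Shortened stand-in for the prompt template in the configuration above.
TEMPLATE = (
    "Input: EU rejects German call to boycott British lamb . "
    'Output: {{"person": [], "organization": ["EU"], "location": [], '
    '"miscellaneous": ["German", "British"]}}\n'
    "Now, complete the task.\n"
    "Input: {input} Output:"
)


def render_prompt(template: str, row: dict) -> str:
    """Fill column placeholders such as {input}; '{{' and '}}' become literal braces."""
    return template.format(**row)


# Hypothetical dataset row; only the "input" column is referenced by the template.
row = {"input": "Peter Blackburn flew to Brussels .", "split": 0}
print(render_prompt(TEMPLATE, row))
```

Without the doubled braces, a format-style renderer would try to treat the JSON example as a placeholder, so the escaping keeps the few-shot example intact.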

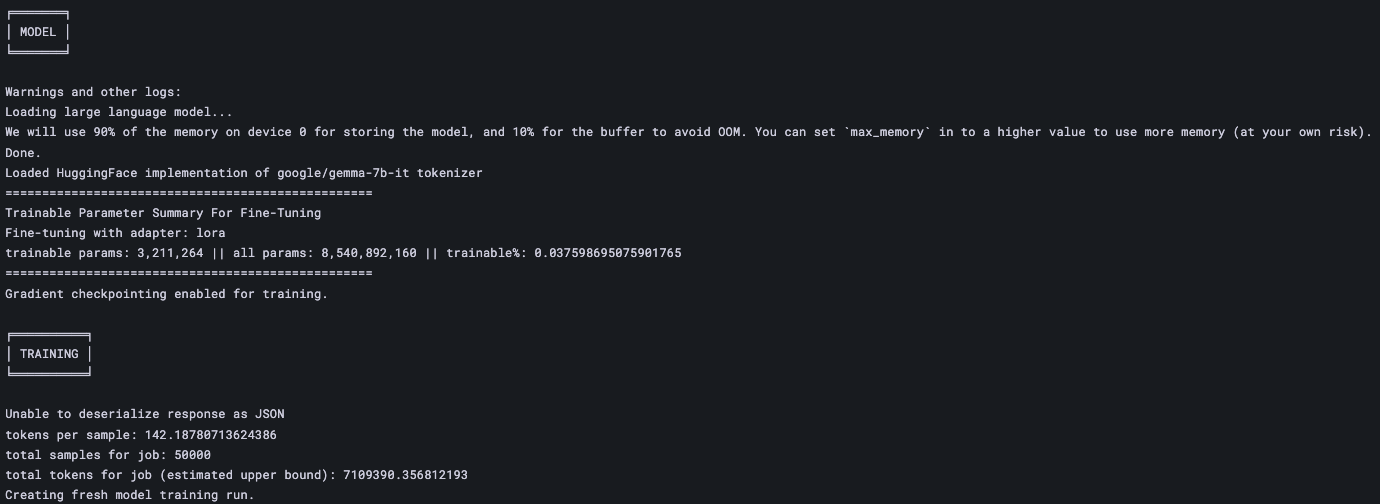
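One way to kick off training with this configuration is through Ludwig's Python API. The sketch below assumes the configuration has been saved to a file and that the dataset is a tabular file with `input`, `output`, and `split` columns. The function name `fine_tune` and the file names in the usage note are hypothetical.

```python
import logging


def fine_tune(config_path: str, dataset_path: str):
    """Train a Ludwig model from a YAML config file and a dataset file."""
    # Imported lazily so this snippet can be loaded without Ludwig installed.
    from ludwig.api import LudwigModel

    # LudwigModel accepts either a config dictionary or a path to a YAML file.
    model = LudwigModel(config=config_path, logging_level=logging.INFO)

    # train() runs preprocessing and training, then returns the training results.
    results = model.train(dataset=dataset_path)
    return model, results
```

A call might look like `fine_tune("gemma_ner.yaml", "conll2003_ner.csv")`. The Ludwig command-line interface offers an equivalent in `ludwig train --config gemma_ner.yaml --dataset conll2003_ner.csv`. Because the Gemma weights on Hugging Face are gated, you will likely also need to accept the model license and provide an access token before the base model can be downloaded.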

After you kick off your training job, you should see a screen that looks like this:

Screenshot of our Ludwig fine-tuning job for Gemma-7B-Instruct

Congratulations, you’re now fine-tuning Gemma-7B-Instruct!

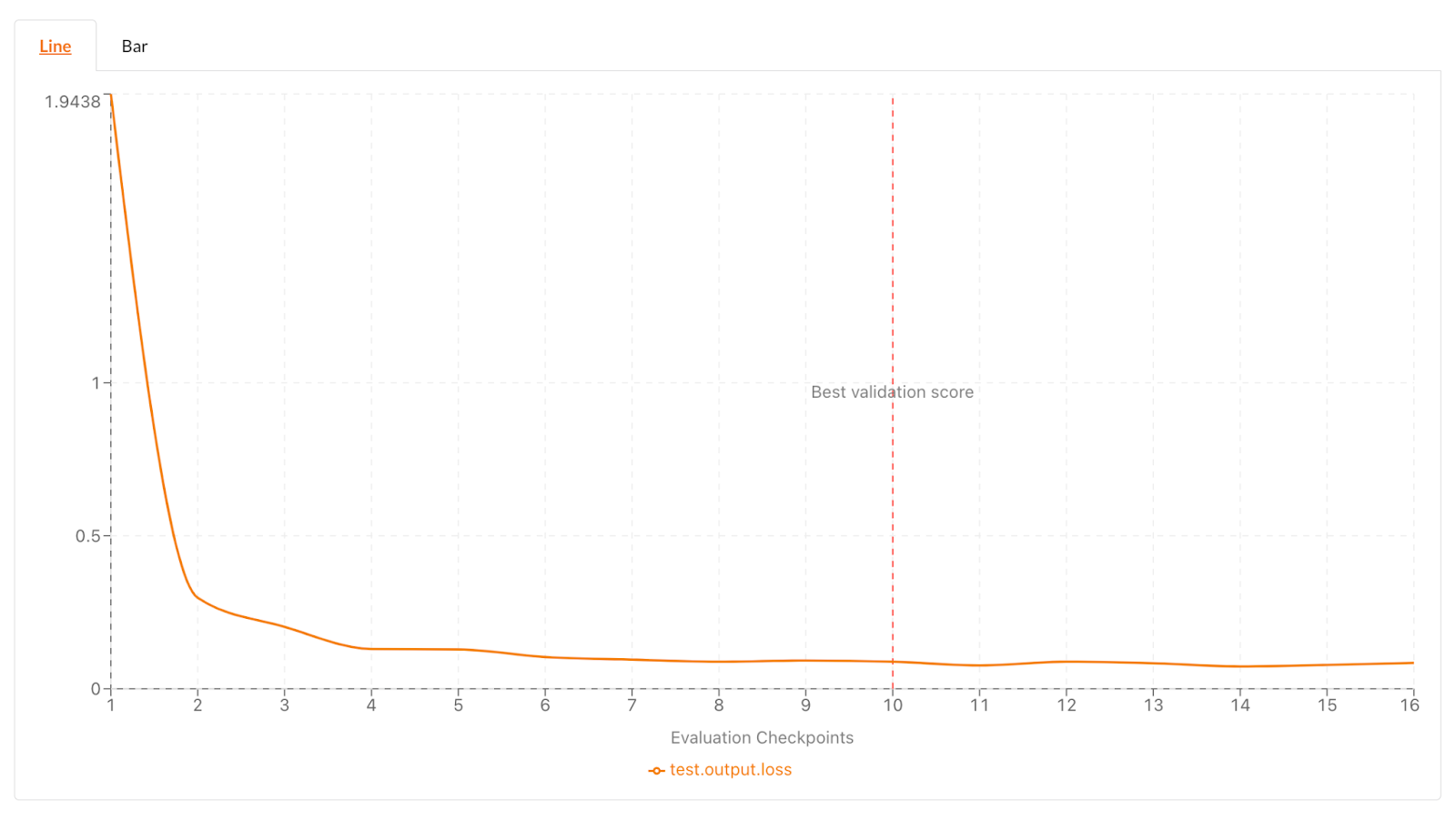

Here is the loss curve from a sample training run for reference:

Loss curve from our Gemma-7B-Instruct training run

Fine-Tune Gemma-7B-Instruct for Your Use Case

In this short tutorial, we showed you how to easily, reliably, and efficiently fine-tune Gemma-7B-Instruct on readily available hardware using open-source Ludwig. Try it out and share your results with our Ludwig community on Discord.

If you are interested in fine-tuning and serving LLMs on scalable managed AI infrastructure in the cloud or your VPC using your private data, sign up for a free trial of Predibase (and get $25 of free credits!). Predibase supports any open-source LLM including Mixtral, Llama-2, and Zephyr on different hardware configurations all the way from T4s to A100s. For serving fine-tuned models, you gain massive cost savings by working with Predibase, because it packs many fine-tuned models into a single deployment through LoRAX, instead of spinning up a dedicated deployment for every model.

Happy fine-tuning!