Introducing Predibase: the enterprise declarative machine learning platform

A high-performance, low-code approach to machine learning for data practitioners and engineers

For the last year, we’ve quietly been building Predibase, the first enterprise declarative machine learning platform. United by a common belief that ML shouldn’t be so hard to implement in the enterprise, the founding team came together from Uber AI, Google, and Apple, where we built internal and open-source platforms that expanded access to ML and reduced complexity through a new declarative approach. Now, with backing from our investors (Greylock, Factory and others), we’re bringing Declarative ML and its benefits to more users. Today we’re excited to share our vision for the future of machine learning.

Predibase is focused on individuals and organizations who have tried operationalizing machine learning, but found themselves re-inventing the wheel each step of the way. Our declarative approach allows users to focus on the “what” of their ML tasks while leaving our system to figure out the “how”. In this post, we’ll share more about our journey, the value we bring to both large Fortune 500 enterprises and high-growth startups, and what’s coming up next.

Where things go wrong today

Building ML solutions in the enterprise is too slow and too expensive, and too few people can do it. It doesn’t have to be that way.

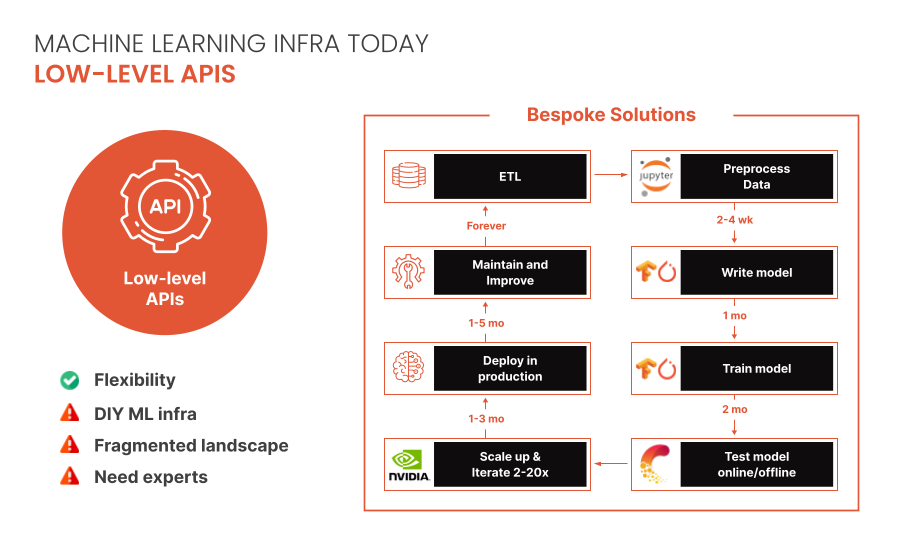

Having built ML systems at organizations large and small, we are painfully familiar with the process of delivering ML solutions. Building an ML solution often requires writing low-level ML code kludged together with developer tools. After months of work, the result is typically a bespoke solution handed over to other engineers, one that is often hard to maintain in the long term and creates technical debt.

We see this as the COBOL era of machine learning, and are proud to be building towards the SQL moment of machine learning.

This is a familiar pain for most data and ML teams that we’ve spoken to. But leaders of data science organizations are frustrated both by having to deal with it and by the several no-code and low-code platforms that have claimed to solve it. These platforms promise simplified model building, but don’t strike the right balance between ease of use and control for sustained usage by engineers and data scientists. And the unfortunate reality is that when teams use these products, they often struggle to build anything more than a prototype.

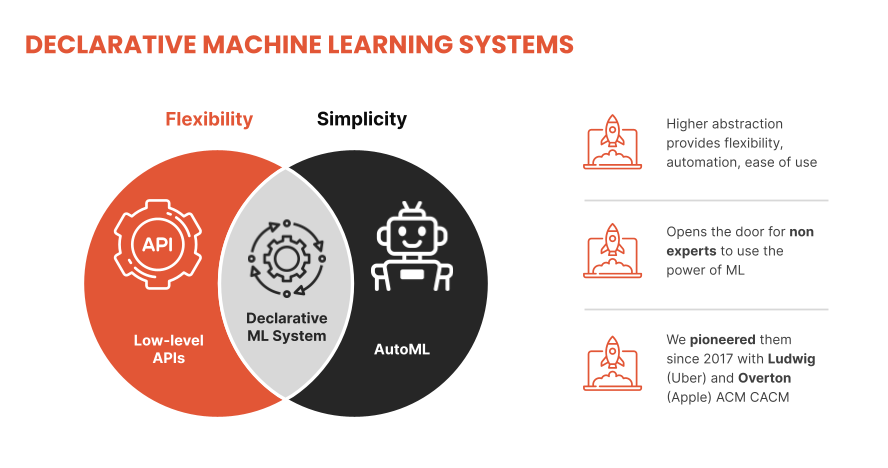

In our view, the key is to find the right abstraction: one that provides an easy out-of-the-box experience while supporting increasingly complex use cases and allowing users to iterate on and improve their solutions.

Once a satisfactory model has been obtained, it should be ready to move into production without additional engineering effort. But from our team’s experiences on Uber’s Michelangelo machine learning platform and Vertex AI, we’ve seen firsthand how even sophisticated ML organizations struggle to get models from research into production.

The tools that are built for scale (Spark, Airflow, Kubeflow) are not the same tools that are built for experimentation. The path of least resistance in most data science teams becomes downloading some subset of the data to a local laptop and training a model using some amalgamation of Python tools like Jupyter, Pandas, and PyTorch. Such models are commonly thrown over the wall to ML engineers tasked with getting them into production, but the process ends up being a complete rewrite of the data scientist’s workflow.

We devised the new idea of declarative ML systems to address these issues and applied it at Uber and Apple, where it increased productivity and reduced costs. We then made it available as open source, and we are now bringing it to the broader enterprise market.

Declarative ML Systems: LEGO for Machine Learning

The basic idea behind declarative ML systems is to let users specify entire model pipelines as configurations and be intentional about the parts they care about while automating the rest. Just as infrastructure-as-code simplified IT, these configurations allow users to focus on the “what” rather than the “how” and have the potential to dramatically increase access while lowering time-to-value.

We pioneered declarative ML systems independently, with Ludwig at Uber and Overton at Apple. Ludwig served many different applications in production at Uber, including customer support automation, fraud detection and product recommendation, while Overton processed billions of queries across multiple applications at Apple. Both frameworks made ML more accessible across stakeholders, especially engineers, and massively accelerated the pace of projects.

Over the last year, we’ve doubled down on our open-source efforts in Ludwig.

Ludwig makes it easy to define deep learning pipelines with a flexible and straightforward data-driven configuration system, suitable for a wide variety of tasks. Depending on the data types in their schema, users can compose and train state-of-the-art model pipelines on multiple modalities at once.

Writing a configuration file for Ludwig is easy, and provides users with ML best practices out-of-the-box, without sacrificing control. Users can choose which parts of the pipeline to swap out, including choosing among state-of-the-art model architectures and training parameters, deciding how to preprocess data and running a hyperparameter search, all via simple config changes. This declarative approach increases the speed of development, makes it easy to improve model quality through rapid iteration, and makes it effortless to reproduce results without the need to write any complex code.
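As a minimal sketch of what such a configuration can look like, the snippet below expresses one as a plain Python dictionary and swaps a single piece of it. The top-level `input_features` and `output_features` keys follow Ludwig’s publicly documented schema. The column names (`review_text`, `sentiment`), the encoder choice and the `with_encoder` helper are hypothetical and exist only for illustration, and the exact encoder syntax has varied across Ludwig versions, so treat those details as assumptions rather than a reference.

```python
import copy

# Hypothetical config: the column names and feature types are illustrative only.
base_config = {
    "input_features": [
        {"name": "review_text", "type": "text"},
    ],
    "output_features": [
        {"name": "sentiment", "type": "category"},
    ],
}


def with_encoder(config, feature_name, encoder):
    """Return a copy of `config` with one input feature's encoder swapped."""
    new_config = copy.deepcopy(config)
    for feature in new_config["input_features"]:
        if feature["name"] == feature_name:
            feature["encoder"] = encoder
            return new_config
    raise KeyError(f"no input feature named {feature_name!r}")


# Swapping one piece of the pipeline is a single config change.
# The nested {"type": ...} form is an assumption; older Ludwig releases
# accepted a plain string in this position.
rnn_config = with_encoder(base_config, "review_text", {"type": "rnn"})
```

With Ludwig installed, a dictionary like this would typically be passed to `LudwigModel(config)` from `ludwig.api` and trained with `model.train(dataset=...)`. That step is left out here so the sketch runs without dependencies.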

One of our open source users referred to composing Ludwig configurations as “LEGO for deep learning”. We couldn’t say it better ourselves.

But as any ML team knows, training a deep learning model alone isn’t the only hard part — building the infrastructure to operationalize the model from data to deployment is often even more complex. That’s where Predibase comes in.

Predibase — Bringing declarative ML to the enterprise

We started Predibase to bring the benefits of declarative ML systems to market with an enterprise-grade platform. There are three key things users do in Predibase:

- Connect data: users can easily and securely connect their structured and unstructured data, wherever it is stored on the cloud data stack.

- Declaratively build models: users can provide model pipeline configurations and train models efficiently on scalable distributed infrastructure, as easily as on a single machine.

- Operationalize models: users can deploy model pipelines at the click of a button with high efficiency and query them immediately.

Predibase’s vision is to bring all the stakeholders of data & AI organizations together in one place, making collaboration seamless between data scientists working on models, data engineers working on deployments and product engineers using the models. Let’s take a look at the features we’ve added on top of our open-source foundations that make it possible.

Integrated platform, from data-to-deployment

Predibase provides the fastest path from data to deployment without cutting any corners along the way. Connect directly to your data sources, both structured data warehouses (e.g., Snowflake, BigQuery, Redshift) and unstructured data lakes (e.g., S3, GCS, Azure Storage). Any model trained in Predibase can be deployed to production with zero code changes, and configured to automatically retrain as new data comes in. Because both experimentation and productionization go through the same unified declarative configuration, models can continue to be updated and improved even after being deployed to production.

Cutting-edge infra made painless

Predibase features a true cloud-native, serverless ML infrastructure layer built on top of Horovod, Ray, and Kubernetes. We provide the ability to autoscale workloads across multi-node and multi-GPU systems in a way that is cost-effective and tailored to the model and dataset. Together, these capabilities combine highly parallel data processing, distributed training, and hyperparameter optimization into a single workload, and support both high-throughput batch prediction and low-latency real-time prediction via REST.

A new way to do iterative modeling

The declarative abstraction that Predibase adopts makes it very easy for users to modify model pipelines by just editing their configurations. Predibase monitors performance and quality as models are trained and supports users in their model iteration workflows by keeping track of lineage between models and the data they are trained on. Defining models as configs allows us to show differences between model versions over time in a concise way, making it easy to iterate and improve them. This is where our unique alternative to AutoML comes in: instead of running expensive experiments, Predibase suggests to the user the best subsequent configurations to train depending on the explorations they have already conducted, creating a virtuous cycle of improvement.
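To illustrate why configs make version comparison concise, here is a small sketch of diffing two model configurations. It is not Predibase’s implementation; the `flatten` and `diff_configs` helpers and the `trainer` keys in the example are hypothetical, chosen only to show the idea that two model versions can be compared as data.

```python
def flatten(config, prefix=""):
    """Flatten nested dicts into {"a.b.c": value} pairs."""
    flat = {}
    for key, value in config.items():
        path = f"{prefix}.{key}" if prefix else str(key)
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def diff_configs(old, new):
    """Map each changed path to an (old_value, new_value) pair.

    A path that is absent on one side is reported with None on that side.
    """
    old_flat, new_flat = flatten(old), flatten(new)
    changes = {}
    for path in sorted(old_flat.keys() | new_flat.keys()):
        before = old_flat.get(path)
        after = new_flat.get(path)
        if before != after:
            changes[path] = (before, after)
    return changes


# Two hypothetical versions of the same model config.
v1 = {"trainer": {"epochs": 10, "learning_rate": 0.001}}
v2 = {"trainer": {"epochs": 10, "learning_rate": 0.0005, "batch_size": 128}}

for path, (before, after) in diff_configs(v1, v2).items():
    print(f"{path}: {before} -> {after}")
```

Because only the changed paths are reported, the unchanged `epochs` setting never appears in the output, which keeps the comparison short even for large configs.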

Supporting multiple personas with PQL

With the rise of the modern data stack, the number of data professionals comfortable with SQL has also grown. So, alongside our Python SDK and UI, we’re also introducing PQL — Predictive Query Language — as an interface that brings machine learning closer to the data. Using PQL, users can use Predibase for connecting data, training models and running predictive queries, all through a SQL-like syntax they are already familiar with. We see a future where users run and share predictive queries just as commonly as they share analytical queries today.

Our plans

Predibase is currently available by invitation only as we scale our platform, but we’ve been lucky to work with multiple customers ranging from the Fortune 500 to start-ups and across industries including banking, healthcare and high-tech. If you are interested in trying Predibase at your organization, please request a demo and we will be more than happy to show you our platform.

Over the coming months, expect to hear more frequently from us as we release more details about our platform and how you can use it or get involved. In parallel, we’ll also double down on our open source projects, in particular Ludwig, where you can expect to see some exciting new upgrades soon. Join us on Slack if you’d like to follow us in the community and watch out for the latest updates on the Predibase blog!

About us

Founders

- Piero Molino, CEO: Piero is the Chief Executive Officer, previously an ML researcher and one of the founding members of the Uber AI organization, where he authored Ludwig, an open source declarative deep learning framework that is at the core of Predibase.

- Travis Addair, CTO: Travis is the Chief Technology Officer, building on experience leading the deep learning training team on Uber’s Michelangelo platform, where he was the co-author of Horovod, an open source distributed deep learning training framework.

- Devvret Rishi, CPO: Dev is the Chief Product Officer, bringing an academic background in ML and prior experience as a PM on Firebase, Google Assistant and Google Cloud AI Platform, where he was also the first PM for Kaggle, the world’s largest ML community.

- Chris Ré, co-founder: Professor Chris Ré is a co-founder, who leads the Hazy machine learning research group at Stanford and previously built Overton while at Apple, one of the first declarative ML systems used in industry.

Team

We’re proud of the team behind the Predibase platform, a mix of machine learning researchers, engineers and product designers from leading tech companies like Amazon, Google, Apple, Uber and more. Our team has worked on machine learning systems from research to production, and we are excited to bring our new approach to a broader audience. We’re hiring strong candidates in engineering and go-to-market, so please reach out to us if you are interested!