Last month, we announced LoRA Exchange (LoRAX), a framework that makes it possible to serve hundreds of fine-tuned LLMs at the cost of one GPU with minimal degradation in throughput and latency. Today, we’re excited to release LoRAX to the open-source community under the permissive, commercially friendly Apache 2.0 license.

At Predibase, we believe that the future of generative AI is smaller, faster, and cheaper fine-tuned open source LLMs. With LoRAX open-sourced, we want to make it possible to work towards this future together, alongside the broader ML community.

What is LoRAX?

LoRAX (LoRA eXchange) allows users to pack 100s of fine-tuned models onto a single GPU, dramatically reducing the cost of serving. LoRAX is open-source, free to use commercially, and production-ready, with pre-built Docker images and Helm charts available for immediate download and use.

How LoRAX Works

LoRAX is a new approach to LLM serving infrastructure, designed specifically for serving many fine-tuned models at once on a shared set of GPU resources. Unlike conventional dedicated LLM deployments, LoRAX is built around three novel components:

- Dynamic Adapter Loading, allowing each set of fine-tuned LoRA weights to be loaded from storage just-in-time as requests come in at runtime, without blocking concurrent requests.

- Tiered Weight Caching, to support fast exchanging of LoRA adapters between requests, and offloading of adapter weights to CPU and disk to avoid out-of-memory errors.

- Continuous Multi-Adapter Batching, a fair scheduling policy for optimizing aggregate throughput of the system that extends the popular continuous batching strategy to work across multiple sets of LoRA adapters in parallel.
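The first two components can be made more concrete with a small model of just-in-time adapter loading backed by a tiered LRU cache. The Python below is a minimal sketch under stated assumptions, not LoRAX's implementation. The class name, the load_fn callback, and the slot counts are hypothetical, and plain dictionaries stand in for GPU and CPU memory. It also ignores concurrency and the batching scheduler entirely.

```python
from collections import OrderedDict
from typing import Callable, List

Weights = List[float]  # hypothetical stand-in for a LoRA adapter's tensors


class TieredAdapterCache:
    """Hypothetical two-tier LRU cache for LoRA adapters (illustrative, not LoRAX's code).

    The "gpu" and "cpu" tiers are ordinary ordered dicts; "storage" is whatever
    load_fn reads from (local disk, a model hub, ...).
    """

    def __init__(self, load_fn: Callable[[str], Weights], gpu_slots: int, cpu_slots: int):
        self.load_fn = load_fn        # fetches adapter weights from storage on demand
        self.gpu_slots = gpu_slots    # max adapters resident in the "GPU" tier
        self.cpu_slots = cpu_slots    # max adapters kept in the "CPU" tier after offloading
        self.gpu: "OrderedDict[str, Weights]" = OrderedDict()
        self.cpu: "OrderedDict[str, Weights]" = OrderedDict()
        self.storage_loads = 0        # how many times we had to go back to storage

    def get(self, adapter_id: str) -> Weights:
        """Return adapter weights, loading them just-in-time if no tier holds them."""
        if adapter_id in self.gpu:
            self.gpu.move_to_end(adapter_id)      # GPU hit: mark as most recently used
            return self.gpu[adapter_id]
        if adapter_id in self.cpu:
            weights = self.cpu.pop(adapter_id)    # CPU hit: promote without touching storage
        else:
            weights = self.load_fn(adapter_id)    # miss in both tiers: load from storage
            self.storage_loads += 1
        self._admit_to_gpu(adapter_id, weights)
        return weights

    def _admit_to_gpu(self, adapter_id: str, weights: Weights) -> None:
        self.gpu[adapter_id] = weights
        if len(self.gpu) > self.gpu_slots:
            # Offload the least recently used adapter to CPU instead of exhausting GPU memory.
            old_id, old_weights = self.gpu.popitem(last=False)
            self.cpu[old_id] = old_weights
            if len(self.cpu) > self.cpu_slots:
                # Drop the coldest CPU entry; it can be reloaded from storage later.
                self.cpu.popitem(last=False)


# Usage: room for 2 adapters in the "GPU" tier and 1 in the "CPU" tier.
cache = TieredAdapterCache(load_fn=lambda name: [float(len(name))], gpu_slots=2, cpu_slots=1)
for adapter in ["a", "b", "c", "a", "d", "b"]:
    cache.get(adapter)
print(list(cache.gpu), list(cache.cpu), cache.storage_loads)  # ['d', 'b'] ['a'] 5
```

In this run, the request for "a" on the fourth step is served from the CPU tier without going back to storage. Only adapters that have fallen out of both tiers are reloaded.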

For more details, see the original LoRAX blog post here!

Why Open-Source LoRAX?

At Predibase, we strongly believe that the future of generative AI will ultimately rest upon smaller, faster, and cheaper open-source LLMs fine-tuned for specific tasks. In order for us to get there, we must significantly reduce the cost of serving fine-tuned models.

Open-source will play a key role in democratizing AI in the coming years. Empowering organizations to build and deploy their own AI models will prevent AI from concentrating in the hands of a few large, well-resourced tech companies.

Our Open-Source Philosophy

Our team has a proven track record of building and maintaining popular open-source machine learning infrastructure frameworks, with founders from the core developer teams of both Ludwig and Horovod. In this section, we detail the three pillars of our philosophy for building a vibrant community around the LoRAX project.

Pillar #1: Built for scale.

LoRAX is first and foremost a production-ready LLM serving framework: we want to make it as frictionless as possible for users to go from model development to deployment. That’s why we’re launching with battle-tested code, as well as pre-built Docker images designed for drop-in use with your existing Kubernetes cluster. Upcoming releases will continue to emphasize building for LLM serving at scale.

Pillar #2: Where research meets production.

Oftentimes, repositories open-sourced as artifacts of ML publications exist to share novel but isolated concepts with the broader ML community. The LoRAX project is intended to be a place to bring the best ideas from research into a single unified framework, creating a streamlined path for cutting-edge research to make it into production systems.

On day 1, we are launching with native support for Flash Attention 2, Llama 2 and Mistral architectures, and, most recently, the SGMV CUDA kernel to bring significant performance improvements to multi-adapter batching. There’s plenty more to come; in the meantime, please feel free to submit a feature request here!

Pillar #3: Commercially viable. Always.

Ultimately, LoRAX is meant to serve as a foundational piece of infrastructure for engineering teams seeking to productionize LLMs at scale. Under the permissive, commercially friendly Apache 2.0 license, developers can rest assured that they can use LoRAX to empower their businesses with AI, whether via consumer applications or through enterprise platforms like Predibase.

LoRAX is Ready for Production

Run in a Docker container

LoRAX ships pre-built Docker images that include optimized CUDA kernels for fast GPU accelerated inference, including Flash Attention v2, Paged Attention, and SGMV.

LoRAX can be launched serving a Llama or Mistral base model using a single command:

```shell
docker run --rm --runtime=nvidia \
  -e PORT="8080" \
  -p 8080:8080 \
  ghcr.io/predibase/lorax:latest \
  --model-id mistralai/Mistral-7B-Instruct-v0.1
```

LoRAX supports a REST API compatible with HuggingFace’s text-generation-inference (TGI) for text prompting:

```shell
curl -X POST http://localhost:8080/generate \
  -H "Content-Type: application/json" \
  -d '{"inputs": "Natalia sold clips to 48 of her friends in April, and then she sold half as many clips in May. How many clips did Natalia sell altogether in April and May?"}'
```

An individual fine-tuned LoRA adapter can be used in the request with a single additional parameter, adapter_id:

```shell
curl -X POST http://localhost:8080/generate \
  -H "Content-Type: application/json" \
  -d '{"inputs": "Natalia sold clips to 48 of her friends in April, and then she sold half as many clips in May. How many clips did Natalia sell altogether in April and May?", "parameters": {"adapter_id": "vineetsharma/qlora-adapter-Mistral-7B-Instruct-v0.1-gsm8k"}}'
```

Helm and Kubernetes

We’ve open-sourced parts of our production Kubernetes deployment stack in LoRAX, including Helm charts that make it easy to start using LoRAX in production with high availability and load balancing.

To spin up a LoRAX deployment with Helm, you only need to be connected to a Kubernetes cluster through kubectl. We provide a default values.yaml file that can be used to deploy a Mistral 7B model to your Kubernetes cluster, ready for use with fine-tuned adapters, with a single command:

```shell
helm install mistral-7b-release lorax/charts/lorax
```

The helm install command initiates a Kubernetes Deployment and Service, schedules a pod for each LLM replica in the Deployment, and configures the necessary networking for load balancing. Upon successful installation, you can monitor these deployed resources using the following commands:

```shell
kubectl get deployment
kubectl get pods
kubectl get services
```

The default values.yaml configuration deploys a single replica of the Mistral 7B model. You can tailor configuration parameters to deploy any Llama or Mistral model by creating a new values file from the template and updating variables. Once a new values file is created, you can run the following command to deploy your LLM with LoRAX:

```shell
helm install -f your-values-file.yaml your-model-release lorax/charts/lorax
```

If you want to tear down the deployment to save costs, you can deprovision it by running:

```shell
helm uninstall your-model-release
```

LoRAX Serving Costs and Performance Results

LoRAX was built to give engineers a cost-effective path to serving 100s of fine-tuned LLMs with minimal degradation to throughput and latency. In our experiments, we demonstrate this capability by benchmarking the serving performance of Llama 2 7B on a single NVIDIA A10G GPU, with queries spread across anywhere from 1 to 128 different adapters.

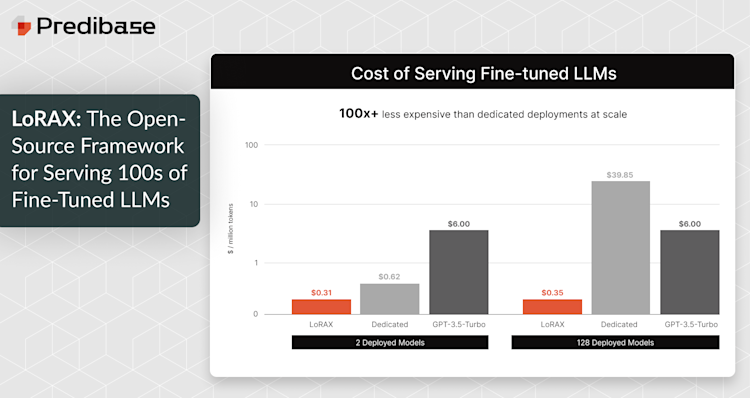

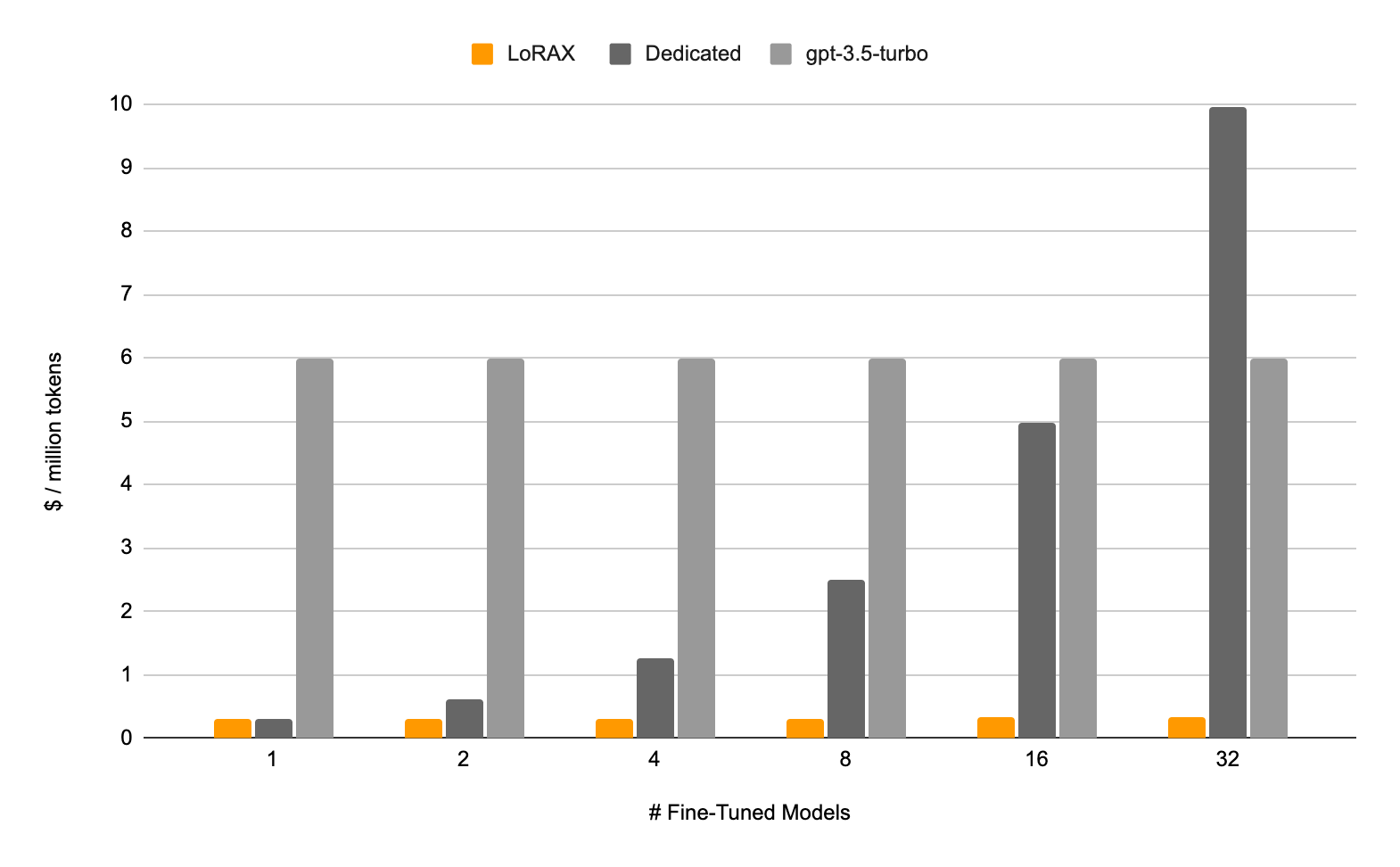

Figure 1: A cost comparison of processing 1M tokens evenly distributed across N fine-tuned models. Processing 1M tokens spread evenly across 32 different fine-tuned models takes just about as much time as processing the same number of tokens for 1 fine-tuned model due to the near-optimal multi-adapter batching throughput associated with LoRAX.

First, we compare the cost of processing 1M tokens evenly distributed across N fine-tuned models (Figure 1). For gpt-3.5-turbo, the cost is the per-token price of fine-tuned GPT-3.5-Turbo models. For LoRAX and dedicated deployments, the cost is computed from the GPU-hours used to host N fine-tuned models while processing 1M/N tokens each. LoRAX's cost stays nearly constant across all experiments thanks to its highly performant multi-adapter batching: because requests for different adapters can be processed in the same batch, throughput remains near-optimal.
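Every LoRAX experiment here runs on a single GPU. Its cost per 1M tokens is therefore just GPU-hours multiplied by an hourly price, and it stays flat as long as aggregate throughput stays flat as N grows. The sketch below shows only that arithmetic. The hourly price and the throughput values are hypothetical placeholders, not the measured benchmark results. It covers only the LoRAX side, because the post does not spell out how the dedicated baseline is provisioned.

```python
def gpu_hour_cost(total_tokens: float, tokens_per_second: float,
                  num_gpus: int, usd_per_gpu_hour: float) -> float:
    """Dollar cost of processing total_tokens at a given aggregate throughput,
    billed per GPU-hour across num_gpus GPUs."""
    gpu_seconds = total_tokens / tokens_per_second * num_gpus
    return gpu_seconds / 3600.0 * usd_per_gpu_hour


# Placeholder inputs for illustration only (NOT the measured benchmark numbers):
USD_PER_GPU_HOUR = 1.0                      # hypothetical hourly price of one GPU
lorax_throughput = {1: 1000.0, 32: 950.0}   # hypothetical aggregate tokens/s vs. number of adapters

for n, tps in lorax_throughput.items():
    # One shared GPU serves all n adapters, each receiving 1M / n tokens,
    # so only the aggregate throughput matters, not how tokens are split.
    cost = gpu_hour_cost(1_000_000, tps, num_gpus=1, usd_per_gpu_hour=USD_PER_GPU_HOUR)
    print(f"N={n}: ${cost:.4f} per 1M tokens")
```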

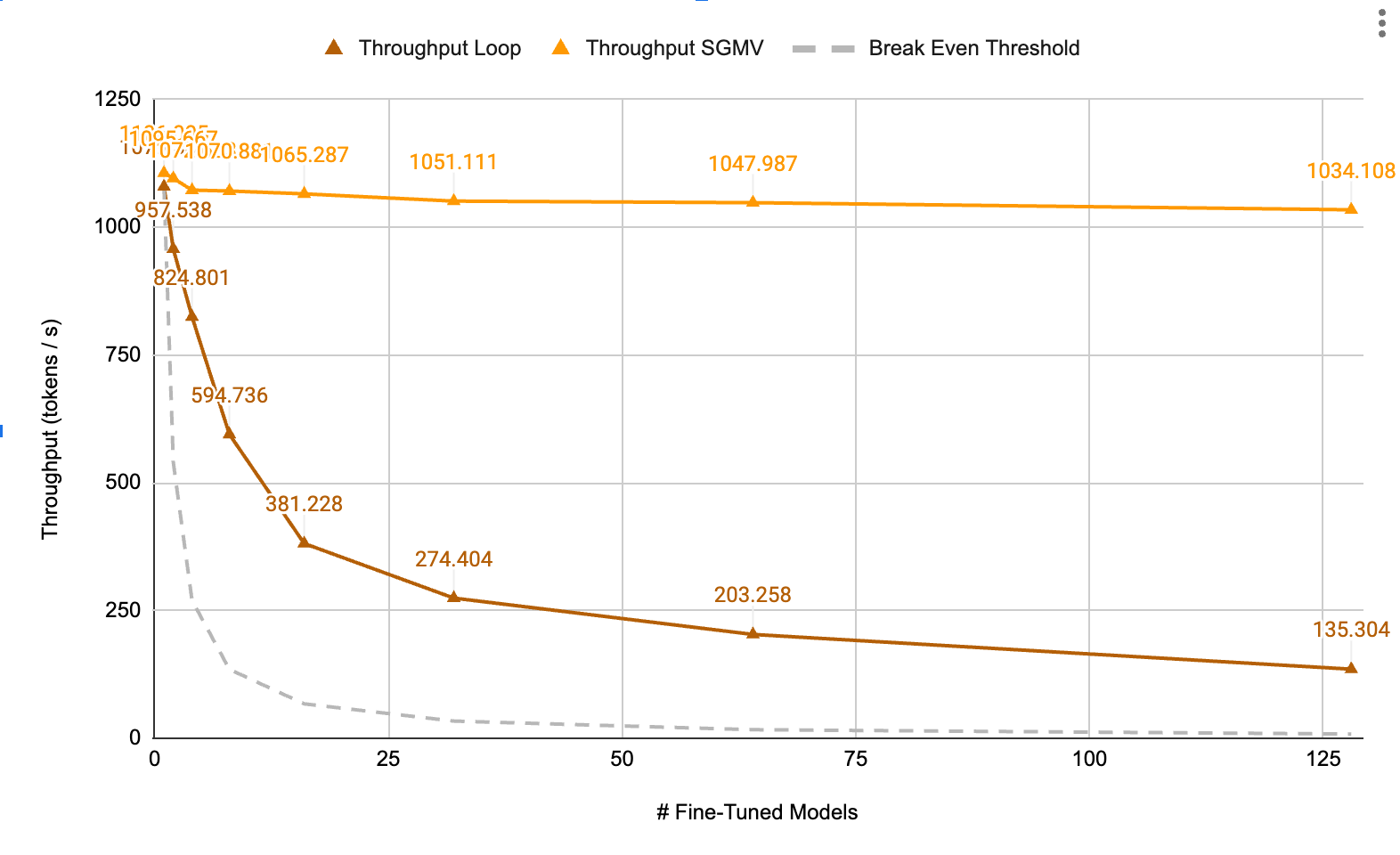

Figure 2: A throughput (tokens/s) comparison between the two multi-adapter batching implementations baked into the LoRAX forward pass, Loop and SGMV, and the Break Even Threshold, which is the minimum throughput required to justify using a multi-adapter solution like LoRAX vs. simply serving each model in dedicated compute.

Then, we compare throughput (tokens/s) between the two multi-adapter batching implementations built into the LoRAX forward pass, Loop and SGMV (Figure 2). Loop is a naive mask-based implementation, and SGMV (Segmented Gather Matrix-Vector multiplication) is the CUDA kernel implemented in Punica. We fall back on the Loop implementation when the LoRA ranks of the adapters within a batch differ. Both implementations deliver throughput above the Break Even Threshold, which is the throughput required to justify deploying with LoRAX rather than buying a new dedicated instance for each fine-tuned model.
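The difference between the two strategies can be illustrated in plain Python. This is a minimal sketch under stated assumptions, not LoRAX's or Punica's code:

- The function names are hypothetical.
- Each request's LoRA delta is computed as x @ A @ B with toy matrices.
- In pure Python both paths are sequential loops.

What the segmented version does show is the data layout SGMV-style kernels rely on. Requests are grouped into contiguous per-adapter segments over weights that share a single rank. This is one plausible reading of why mixed-rank batches fall back to Loop.

```python
from typing import Dict, List, Tuple

Matrix = List[List[float]]
Adapters = Dict[str, Tuple[Matrix, Matrix]]  # adapter name -> (A: d x r, B: r x out)


def matmul(x: Matrix, w: Matrix) -> Matrix:
    """Plain-Python matrix product: (n x k) @ (k x m) -> (n x m)."""
    cols = list(zip(*w))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in x]


def lora_delta_loop(x: Matrix, owners: List[str], adapters: Adapters, out_dim: int) -> Matrix:
    """Loop-style: one pass per adapter, masking the batch down to that adapter's rows.

    Each adapter is handled on its own, so ranks may differ between adapters.
    """
    delta = [[0.0] * out_dim for _ in x]
    for name, (a, b) in adapters.items():
        rows = [i for i, owner in enumerate(owners) if owner == name]  # the mask
        if rows:
            y = matmul(matmul([x[i] for i in rows], a), b)  # x_rows @ A @ B
            for i, y_row in zip(rows, y):
                delta[i] = y_row
    return delta


def lora_delta_segmented(x: Matrix, owners: List[str], adapters: Adapters, out_dim: int) -> Matrix:
    """SGMV-style layout: sort rows so each adapter owns one contiguous segment,
    then apply each segment's adapter. A real kernel would cover all segments in
    one launch over stacked weights, hence the shared-rank requirement here."""
    ranks = {len(a[0]) for a, _ in adapters.values()}
    if len(ranks) != 1:
        raise ValueError("segmented path assumes one shared LoRA rank; use the loop path")
    order = sorted(range(len(x)), key=lambda i: owners[i])  # group rows by adapter
    delta = [[0.0] * out_dim for _ in x]
    start = 0
    while start < len(order):
        end = start
        while end < len(order) and owners[order[end]] == owners[order[start]]:
            end += 1
        segment = order[start:end]                 # one contiguous per-adapter segment
        a, b = adapters[owners[segment[0]]]
        y = matmul(matmul([x[i] for i in segment], a), b)
        for i, y_row in zip(segment, y):
            delta[i] = y_row                       # scatter back to original batch order
        start = end
    return delta


# Usage: a batch of 4 requests interleaving two rank-1 adapters (toy values).
x = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
owners = ["b", "a", "b", "a"]
adapters = {
    "a": ([[1.0], [0.0]], [[1.0, 1.0]]),
    "b": ([[0.0], [1.0]], [[2.0, 0.0]]),
}
loop_out = lora_delta_loop(x, owners, adapters, out_dim=2)
seg_out = lora_delta_segmented(x, owners, adapters, out_dim=2)
print(loop_out == seg_out, loop_out)  # True [[4.0, 0.0], [3.0, 3.0], [12.0, 0.0], [7.0, 7.0]]
```

Both paths produce identical per-request deltas. The design point is where the work happens. On a GPU, the segmented layout lets a single kernel process every adapter in the batch. The loop issues separate small multiplications per adapter but tolerates mixed ranks.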

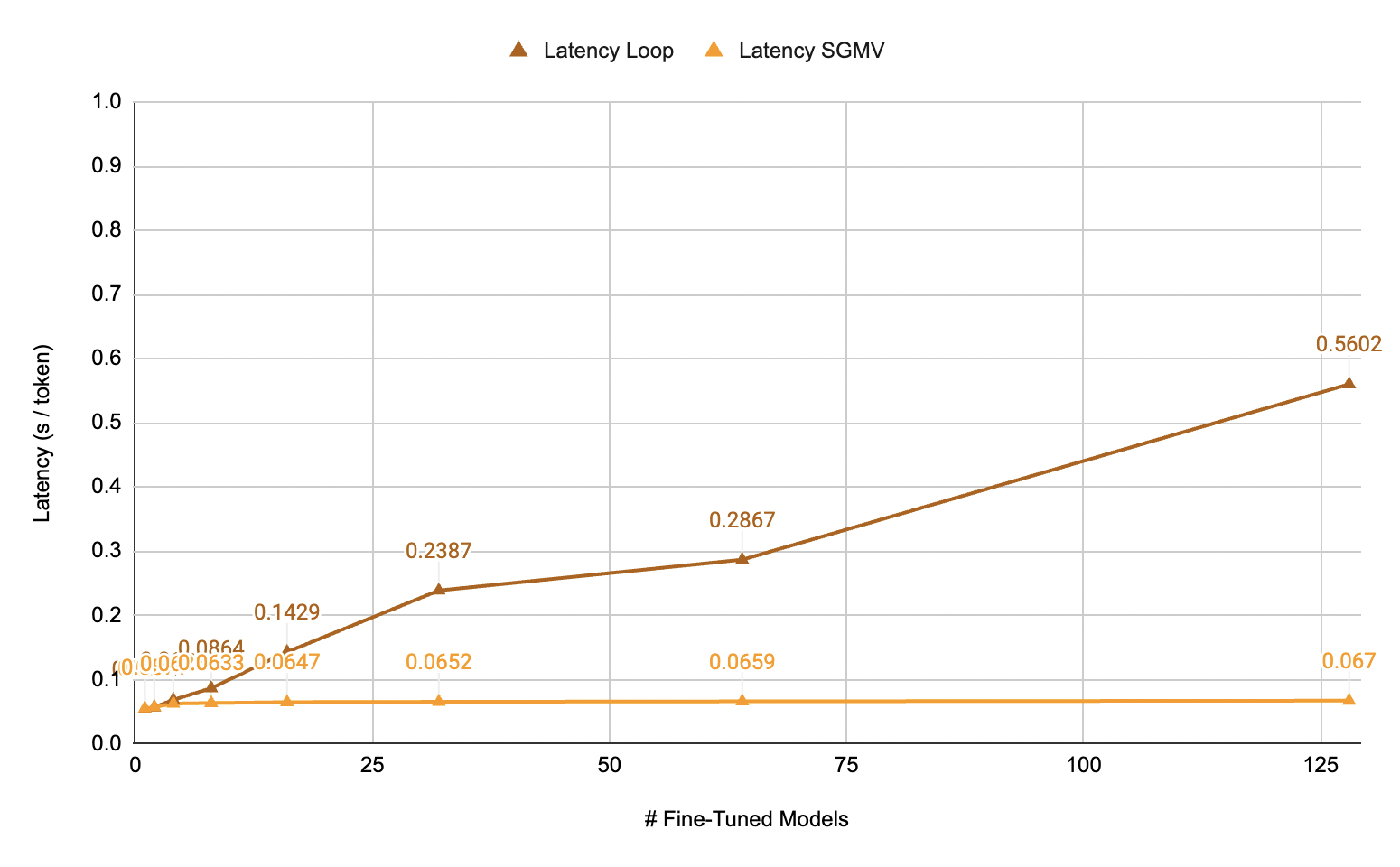

Figure 3: A latency (s/token) comparison between the two multi-adapter batching implementations baked into the LoRAX forward pass, Loop and SGMV.

Finally, we compare latency (s/token) between the two multi-adapter batching implementations baked into the LoRAX forward pass, Loop and SGMV (Figure 3). SGMV sees an increase in latency of only 20% even when fielding requests from 128 different fine-tuned models.

LoRAX + Reinforcement Fine-Tuning (RFT)

When paired with Predibase RFT, LoRAX becomes even more powerful. RFT lets you fine-tune models with just a handful of labeled examples, so no large dataset is needed. LoRAX then makes it possible to deploy those fine-tuned models at scale, immediately.

Join the Community

Ultimately, the success of OSS projects hinges on the vibrancy of their respective communities, and LoRAX is no different. In the coming months, we’ll be finalizing docs and tutorials, as well as queueing up a sequence of community events. In the meantime, check out our GitHub and Discord!

If you want to get started training and deploying OSS LLMs using the best of Ludwig, Horovod, and LoRAX, check out Predibase, the developer platform for productionizing open-source AI. Sign up for our 2-week free trial here, where you can fine-tune and serve a Llama 2 7B model for free today.

FAQs

What is LoRAX in machine learning?

LoRAX is an open-source framework developed by Predibase for serving and managing hundreds of fine-tuned large language models (LLMs). It uses LoRA (Low-Rank Adaptation) adapters to efficiently handle inference across multiple models.

How to download LoRAX?

You can get LoRAX from the official GitHub repository at https://github.com/predibase/lorax or run the pre-built Docker images described above; the Python client (lorax-client) can be installed via pip. Full installation instructions are available in the README.

What does LoRAX do?

LoRAX makes it easy to serve multiple fine-tuned LLMs with low latency. It enables fast, scalable model deployment using LoRA adapters, helping ML teams manage model versions, endpoints, and configurations with minimal compute overhead.

Is LoRAX related to LoRA ML?

Yes, LoRAX is built specifically to support LoRA-based fine-tuning workflows. It allows enterprises to run hundreds of LoRA adapters over a base LLM with efficient resource use.

Can LoRAX be used for inference at scale?

Absolutely. LoRAX was designed to scale inference workloads across many fine-tuned models. It integrates with popular LLMs and provides tools for rapid deployment and version control.