Ludwig v0.7 is here, bringing a data-centric and declarative interface to fine-tuning state-of-the-art computer vision (image) and NLP (text) models in just a few lines of YAML.

Key highlights from the latest v0.7 release we’ll cover in this announcement include:

- Pretrained TorchVision Models for state-of-the-art performance on computer vision tasks.

- Image Augmentation at scale with Ray AIR to overlap augmentation with model training.

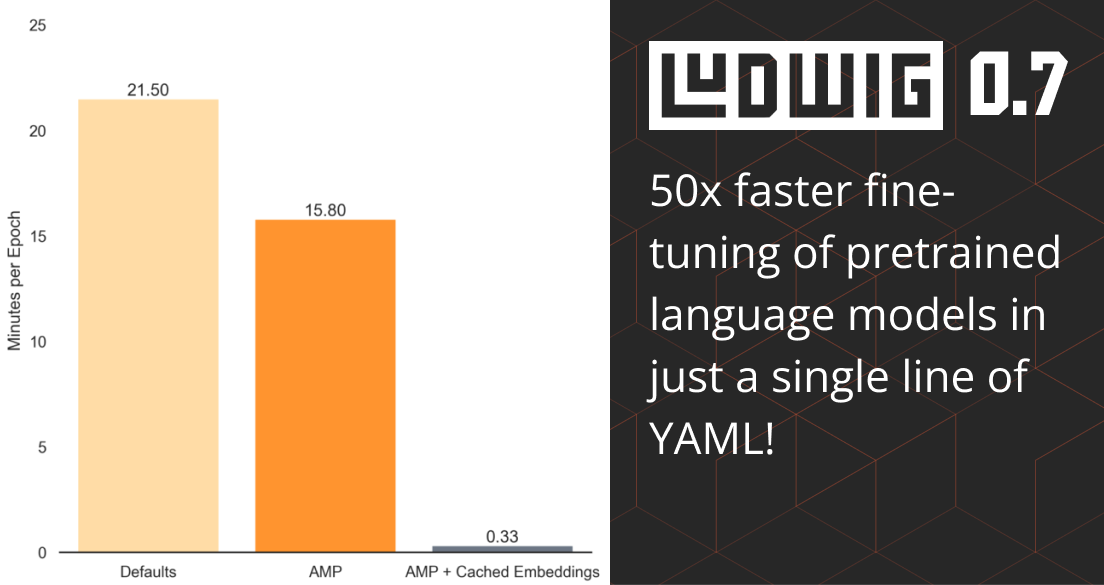

- 50x Faster Fine-Tuning of large language models with mixed precision and embedding caching.

- New Distributed Training Strategies for data and model parallelism.

See the Ludwig v0.7 release notes for complete details, and be sure to download or upgrade Ludwig today to take advantage of these exciting new features:

```shell
pip install -U ludwig
```

As a refresher, here’s all you have to do to fine-tune a pretrained BERT model to your dataset and task in Ludwig:

```yaml
input_features:
  - name: review
    type: text
    encoder:
      type: bert
      trainable: true
output_features:
  - name: label
    type: category
```

Fine-tuning BERT in Ludwig.

This minimal config is only the beginning, however. There are many new options available in the Ludwig configuration in v0.7 and we’re excited to show you some of them in the sections that follow.

Pretrained TorchVision Models

In v0.6, we introduced ViT as the first pretrained image model in Ludwig, alongside model architectures such as MLP Mixer and ResNet. Now, in v0.7, we’ve added 20 additional pretrained TorchVision models as image encoders, including AlexNet, EfficientNet, MobileNet v3, and GoogLeNet. You can switch between any of these pretrained models by changing a single parameter in the encoder section of the feature config:

```yaml
name: image_feature
type: image
encoder:
  type: resnet
  use_pretrained: true
  trainable: false
```

Select from 20+ pretrained TorchVision models with a single parameter.

The pretrained TorchVision models in Ludwig have been trained on the 1000-class ImageNet dataset, allowing users to quickly fine-tune a downstream model on their own image features and targets while harnessing the background knowledge learned from ImageNet. In practice, this kind of transfer learning often dramatically improves model performance on a range of computer vision tasks.

Comparing accuracy in Ludwig with and without pretrained weights on the Kaggle Dogs vs Cats dataset.

The figure above compares a randomly initialized ResNet encoder (available in Ludwig v0.6) against the same model with weights pretrained on ImageNet. Even using the pretrained model without fine-tuning its weights (trainable=false), we observed a 20 percentage-point increase in accuracy on the held-out test set after 20 epochs of training (95% accuracy with pretrained weights vs. 75% without). After only 2 epochs of training, the pretrained run was already at around 90% accuracy.

As with Ludwig’s text encoders, a single trainable parameter lets you choose between updating the weights of the pretrained model (trainable=true) and keeping those weights fixed while training a series of dense layers on top (trainable=false).
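To make the difference concrete, here is a toy sketch of one gradient step with and without trainable. It assumes a hypothetical model with a single "pretrained encoder" weight feeding a single task-head weight; it is an illustration of freezing, not Ludwig's training code. With trainable=false the encoder weight receives no update and only the head moves.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToyModel:
    """Hypothetical model: one 'pretrained encoder' weight feeding one task-head weight."""
    encoder_w: float  # stands in for the pretrained encoder's weights
    head_w: float     # stands in for the dense layers trained on top


def sgd_step(model: ToyModel, x: float, y: float, lr: float, trainable: bool) -> ToyModel:
    """One SGD step on the loss 0.5 * (head_w * encoder_w * x - y) ** 2."""
    hidden = model.encoder_w * x          # encoder output
    err = model.head_w * hidden - y       # dLoss/dPrediction
    grad_head = err * hidden
    grad_encoder = err * model.head_w * x
    new_head = model.head_w - lr * grad_head
    # trainable=False freezes the encoder: its gradient is simply not applied.
    new_encoder = model.encoder_w - lr * grad_encoder if trainable else model.encoder_w
    return ToyModel(encoder_w=new_encoder, head_w=new_head)


start = ToyModel(encoder_w=0.5, head_w=1.0)
frozen = sgd_step(start, x=2.0, y=3.0, lr=0.1, trainable=False)
tuned = sgd_step(start, x=2.0, y=3.0, lr=0.1, trainable=True)
print(frozen)  # encoder_w stays 0.5; only head_w changes
print(tuned)   # both weights change
```

Freezing also means the encoder's output for a given input never changes during training, which is what makes the embedding caching described later possible.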

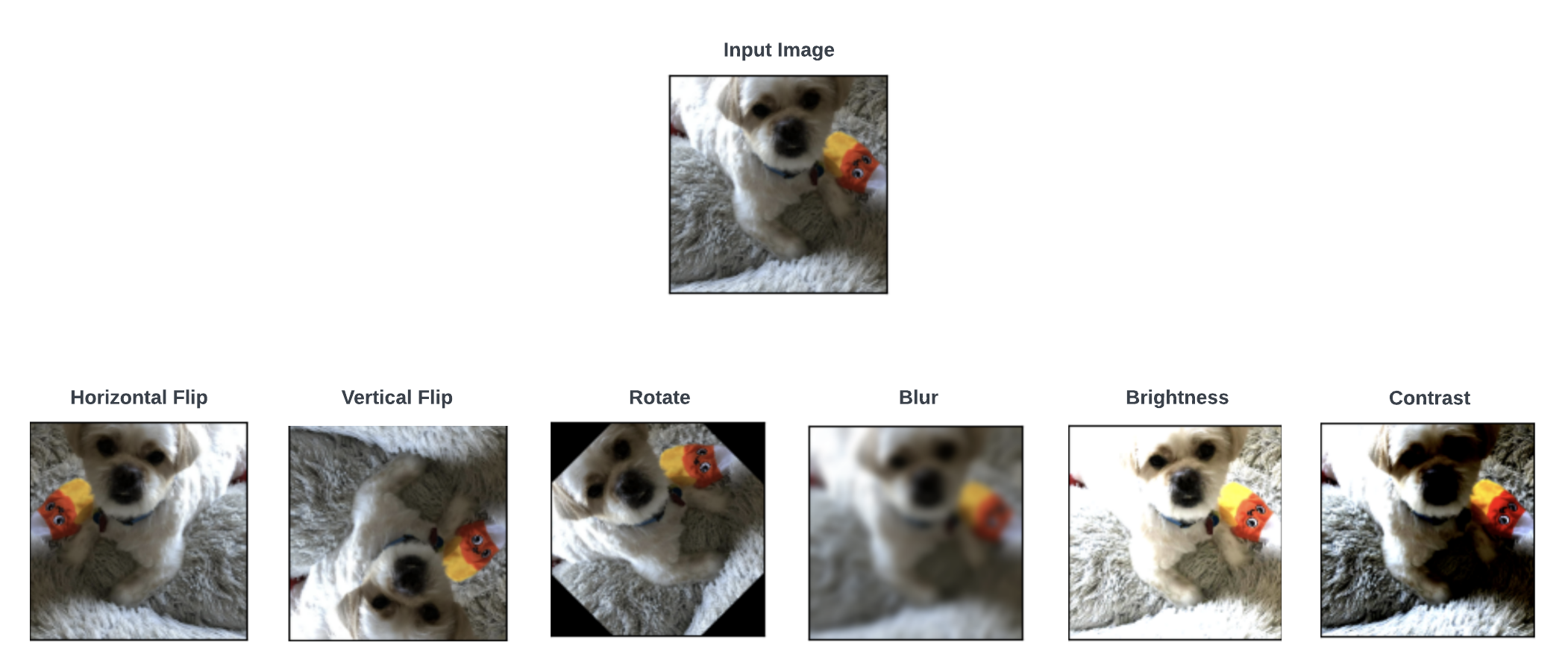

Image Augmentation

Ludwig v0.7 also introduces image augmentation, artificially increasing the size of the training dataset by applying a randomized set of transformations to each batch of images during training.

When using the Ray backend, Ludwig will leverage the streaming data loading provided by Ray Datasets to offload the image augmentation to idle CPU workers while training takes place in the foreground on GPU. This overlap of augmentation with training means that with a well-provisioned Ray cluster, you’ll be able to run full image augmentation in Ludwig while maintaining full GPU utilization during training, all with zero code or config changes required.
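Ray Datasets does this across the workers of a cluster. As a single-process illustration of the same producer/consumer idea, the sketch below augments batches on a background thread that feeds a bounded queue while the main loop consumes earlier batches. It uses only the Python standard library and is not Ray's API; `augment` is a hypothetical placeholder transform.

```python
import queue
import random
import threading
from typing import Iterator, List

Batch = List[List[float]]
_SENTINEL = object()


def augment(batch: Batch, rng: random.Random) -> Batch:
    """Hypothetical augmentation: randomly reverse each row (a 1-D 'horizontal flip')."""
    return [row[::-1] if rng.random() < 0.5 else list(row) for row in batch]


def prefetching_loader(batches: List[Batch], seed: int = 0, depth: int = 4) -> Iterator[Batch]:
    """Augment batches on a background thread while the caller consumes earlier ones."""
    # Bounded queue: the producer can run at most `depth` batches ahead of training.
    q: "queue.Queue[object]" = queue.Queue(maxsize=depth)

    def producer() -> None:
        rng = random.Random(seed)
        for b in batches:
            q.put(augment(b, rng))
        q.put(_SENTINEL)  # signal the end of the epoch

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = q.get()
        if item is _SENTINEL:
            return
        yield item  # the training step runs while the producer keeps augmenting


def train_one_epoch(batches: List[Batch]) -> int:
    steps = 0
    for batch in prefetching_loader(batches):
        # A real trainer would run a forward/backward pass on the GPU here.
        steps += 1
    return steps


batches = [[[0.1, 0.2, 0.3]] for _ in range(8)]
print(train_one_epoch(batches))  # 8
```

The bounded queue is the key design choice: it lets augmentation overlap with training without letting the producer buffer an unbounded number of augmented batches in memory.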

Image augmentation can be turned on for any image input feature by setting the augmentation parameter in the config:

```yaml
name: image_feature
type: image
encoder:
  type: densenet
augmentation: true
```

Turn on image augmentation with the augmentation parameter in the Ludwig config.

Or configure each step of the augmentation pipeline by hand:

```yaml
name: image_feature
type: image
encoder:
  type: densenet
augmentation:
  - type: random_horizontal_flip
  - type: random_rotate
    degree: 15
  - type: random_contrast
    min: 0.5
    max: 2.0
```

Customize your image augmentation pipeline with full control over steps and parameters.
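Ludwig applies these steps to image tensors internally. The hypothetical pure-Python sketch below works on a small grayscale image stored as nested lists, to show how an ordered list of `type` entries with per-step parameters maps to a sequence of randomized transforms. The contrast formula follows torchvision's `adjust_contrast` convention (blend with the mean intensity) and the rotation uses nearest-neighbour sampling with zero fill; neither is necessarily what Ludwig does.

```python
import math
import random
from typing import Any, Callable, Dict, List

Image = List[List[float]]  # grayscale image: rows of pixel intensities in [0, 1]
Params = Dict[str, Any]


def random_horizontal_flip(img: Image, rng: random.Random, params: Params) -> Image:
    # Mirror left-right with probability 0.5.
    return [row[::-1] for row in img] if rng.random() < 0.5 else [row[:] for row in img]


def random_rotate(img: Image, rng: random.Random, params: Params) -> Image:
    # Rotate by a random angle in [-degree, degree] about the centre.
    angle = math.radians(rng.uniform(-params["degree"], params["degree"]))
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    out = [[0.0] * w for _ in range(h)]  # pixels mapped from outside the image stay 0.0
    for y in range(h):
        for x in range(w):
            # Inverse-map each output pixel to its nearest source pixel.
            sx = cos_a * (x - cx) + sin_a * (y - cy) + cx
            sy = -sin_a * (x - cx) + cos_a * (y - cy) + cy
            ix, iy = round(sx), round(sy)
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = img[iy][ix]
    return out


def random_contrast(img: Image, rng: random.Random, params: Params) -> Image:
    # Blend with the mean intensity using a random factor in [min, max], then clip to [0, 1].
    factor = rng.uniform(params["min"], params["max"])
    mean = sum(map(sum, img)) / (len(img) * len(img[0]))
    return [[min(1.0, max(0.0, factor * p + (1 - factor) * mean)) for p in row] for row in img]


AUGMENTATIONS: Dict[str, Callable[[Image, random.Random, Params], Image]] = {
    "random_horizontal_flip": random_horizontal_flip,
    "random_rotate": random_rotate,
    "random_contrast": random_contrast,
}


def apply_pipeline(img: Image, steps: List[Params], rng: random.Random) -> Image:
    # Apply each configured step in order, mirroring the list under `augmentation:`.
    for step in steps:
        img = AUGMENTATIONS[step["type"]](img, rng, step)
    return img


steps = [
    {"type": "random_horizontal_flip"},
    {"type": "random_rotate", "degree": 15},
    {"type": "random_contrast", "min": 0.5, "max": 2.0},
]
image = [[0.0, 0.5, 1.0], [0.2, 0.4, 0.6], [1.0, 0.5, 0.0]]
augmented = apply_pipeline(image, steps, random.Random(42))
```

Because every step draws from the random generator, each pass over the same image produces a different variant, which is what makes augmentation behave like a larger training set.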

For the complete list of options, see the Image Augmentation documentation.

50x Faster Fine-Tuning

As mentioned above, Ludwig currently supports two variations on fine-tuning, configured via the trainable encoder parameter:

- Modifying the weights of the pretrained encoder to adapt them to the downstream task (trainable=true).

- Keeping the pretrained encoder weights fixed and training a stack of dense layers that sit downstream as the combiner and decoder modules (trainable=false). This variant is sometimes called transfer learning to distinguish it from full fine-tuning.

Both of these options were available to users in Ludwig v0.6, but were slow to train without an expensive multi-GPU setup to take advantage of distributed training. In v0.7, we’ve introduced a number of significant performance improvements that collectively improve training throughput by 2x when trainable=true and by over 50x when trainable=false:

- Automatic mixed precision (AMP) training, which is available whether trainable is true or false.

- Cached encoder embeddings, which are only available when trainable=false.

- Approximate training set evaluation (evaluate_training_set=false), which reports training set metrics at the end of each epoch as a running aggregation of the metrics computed during training, rather than making a separate pass over the training set. Though this makes training metrics appear “noisy” in the early epochs, it generally results in a 33% speedup in training time, so we’ve made it the default behavior in v0.7.

- Automatic batch size tuning (batch_size=auto), now the default, which lets Ludwig choose the batch size that maximizes training throughput. Because this can result in training with very large batch sizes, we’ve also introduced ghost batch normalization for most of our encoders, combiners, and decoders, allowing you to train with very large batch sizes without degrading model convergence.

Training speed measured as “minutes per epoch” on the Yelp Reviews dataset. Note: you may need to squint to see the per epoch training time of AMP + Cached Embeddings!
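Ghost batch normalization, mentioned in the list above, computes normalization statistics over small virtual batches carved out of each large batch, so the statistics keep roughly the noise level of small-batch training. A minimal pure-Python sketch for a single feature follows. It omits the learnable scale and shift and the running statistics used at inference time, and the `virtual_batch_size` name is an assumption for illustration rather than a documented Ludwig parameter.

```python
import math
from typing import List


def batch_norm(values: List[float], eps: float = 1e-5) -> List[float]:
    """Standard batch norm for one feature: subtract the batch mean, divide by the batch std."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + eps) for v in values]


def ghost_batch_norm(values: List[float], virtual_batch_size: int, eps: float = 1e-5) -> List[float]:
    """Split a large batch into smaller ghost batches and normalize each one independently."""
    out: List[float] = []
    for start in range(0, len(values), virtual_batch_size):
        out.extend(batch_norm(values[start:start + virtual_batch_size], eps))
    return out


batch = [1.0, 2.0, 3.0, 4.0, 10.0, 20.0, 30.0, 40.0]
print(batch_norm(batch))           # one set of statistics for all 8 values
print(ghost_batch_norm(batch, 4))  # separate statistics for each group of 4
```

However large the auto-tuned batch gets, each normalization still sees only `virtual_batch_size` examples.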

The Ludwig AutoML API has also been updated to suggest optimized fine-tuning parameters for text datasets. Here’s an example of a typical AutoML-suggested config for fine-tuning that takes advantage of these optimizations:

```yaml
defaults:
  text:
    preprocessing:
      cache_encoder_embeddings: true
    encoder:
      type: bert
      trainable: false
combiner:
  type: concat
  norm: ghost
trainer:
  batch_size: auto
  learning_rate: 0.00002
  learning_rate_scheduler:
    warmup_fraction: 0.2
    decay: linear
  optimizer:
    type: adamw
  use_mixed_precision: true
  evaluate_training_set: false
```

Example of an AutoML config that includes new optimizations for fine-tuning text models.

Check out our new Fine-Tuning Guide in the Ludwig documentation for more details.

Mixed Precision Training

Automatic Mixed Precision (AMP) is a method for speeding up training by running operations in float16 where it is numerically safe to do so, while keeping float32 elsewhere. See PyTorch’s blog for more details on the technique.

Mixed precision training does not always converge to the same model quality as float32 training, but its effect on large pretrained models like BERT is well understood, and as such we recommend enabling it for any fine-tuning task. In our tests, it typically gave a 2x to 2.5x speedup in training time.
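The convergence caveat comes from float16's limited range and precision. The sketch below uses Python's built-in half-precision `struct` format (`"e"`) to show two hazards, small weight updates being rounded away and tiny gradients underflowing to zero, plus loss scaling as the remedy for underflow. It is not Ludwig's or PyTorch's implementation: in real AMP, scaling the loss scales every gradient through backpropagation (PyTorch's `GradScaler` does this dynamically), whereas here the gradient is scaled directly for brevity.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision (float16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


# Hazard 1: small updates are rounded away. Near 1.0, float16 values are spaced
# 2**-10 (about 0.001) apart, which is why AMP keeps float32 master weights.
print(to_fp16(1.0 + 1e-4))  # 1.0

# Hazard 2: tiny gradients underflow. Anything below about 3e-8 rounds to zero in float16.
grad = 1e-8
print(to_fp16(grad))  # 0.0

# Remedy: loss scaling. Scale up before the float16 cast, then unscale in higher precision.
scale = 1024.0
recovered = to_fp16(grad * scale) / scale
print(recovered)  # close to 1e-8 instead of 0.0
```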

In the future, we may make mixed precision the default in Ludwig when training on GPU, but for now, you can enable it by setting use_mixed_precision in the trainer section of your config:

```yaml
trainer:
  use_mixed_precision: true
```

Enabling mixed precision for accelerated training.

See the Ludwig Trainer documentation for more details.

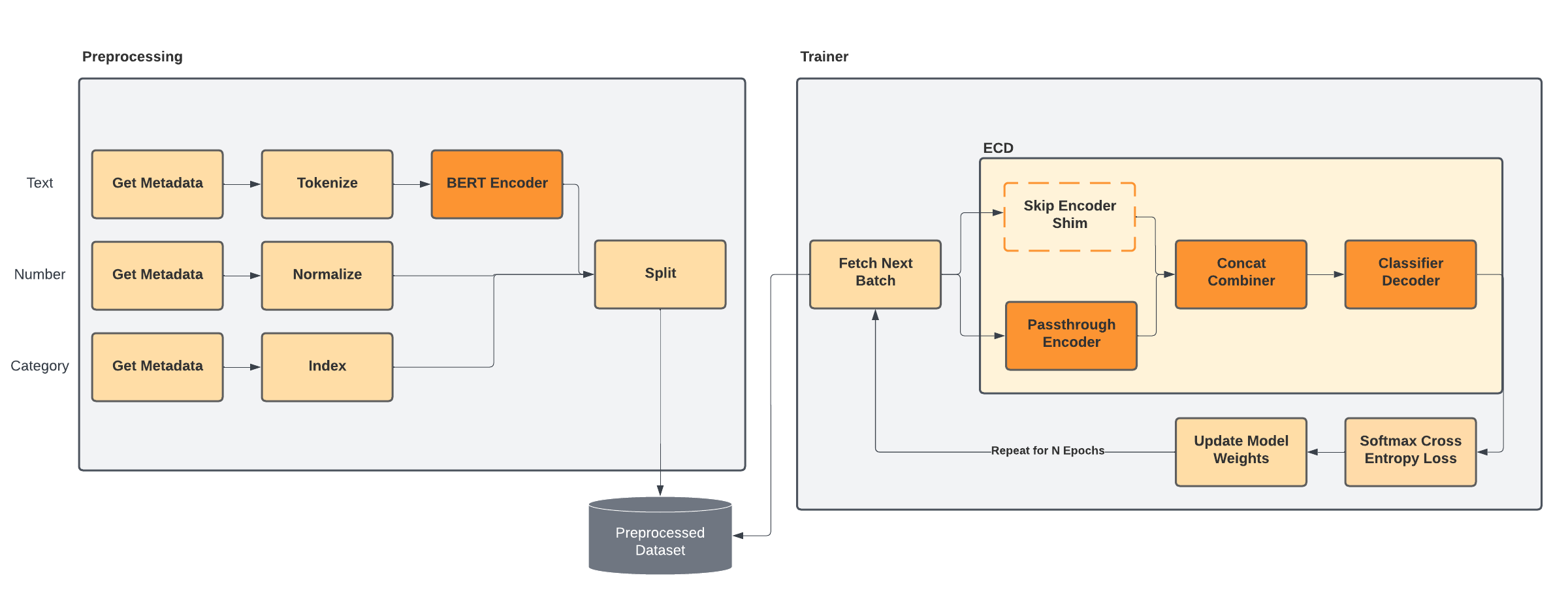

Cached Encoder Embeddings

In Ludwig v0.6, even when trainable=false, the embeddings produced from the tokenized input features were recomputed for every batch in every epoch, even though the frozen encoder’s weights never change. Now in v0.7, you have the option to move this step out of the training loop and into the one-time preprocessing step by setting cache_encoder_embeddings in the feature preprocessing config:

```yaml
name: my_text_feature
type: text
preprocessing:
  cache_encoder_embeddings: true
encoder:
  type: bert
```

Caching encoder embeddings is supported for every pretrained text encoder.

Encoder embedding caching is conceptually simple, but it is tricky to implement in most machine learning frameworks that decouple dataset preprocessing from model training. In Ludwig, we handle both dataset preprocessing and model training in one declarative config, so we are able to move expensive parts of the training process into preprocessing where it makes sense.

When the embeddings are cached in the preprocessed data, we replace the respective encoder in the ECD model at training time with a shim “Skip Encoder” that passes the input tensor data directly into the downstream combiner for concatenation with other input features. At prediction time and when exporting the model to TorchScript, the Skip Encoder is replaced with the original encoder and its pretrained weights.

While embedding caching is useful for a single model training run because it pays the cost of the forward pass on the large pretrained model for only a single epoch, it can be even more valuable in subsequent experiments. Because Ludwig caches preprocessed data and reuses it for new model training experiments (when preprocessing parameters are the same), any subsequent training runs for the same dataset will require no forward passes on the pretrained model whatsoever.
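The two ideas above, a pass-through encoder at training time and a preprocessing cache keyed on the preprocessing parameters, can be sketched in plain Python. Everything here is hypothetical: `FrozenEncoder` is a toy stand-in for a pretrained model that counts its forward passes, and `SkipEncoder`, `preprocess`, and `train` only mirror the roles described in the text, not Ludwig's actual classes.

```python
from typing import Dict, List, Tuple

Embedding = List[float]


class FrozenEncoder:
    """Hypothetical stand-in for a frozen pretrained text encoder; counts its forward passes."""

    def __init__(self) -> None:
        self.forward_passes = 0

    def __call__(self, text: str) -> Embedding:
        self.forward_passes += 1
        # Toy "embedding" (length, vowel count) in place of a real model's output.
        return [float(len(text)), float(sum(ch in "aeiou" for ch in text.lower()))]


class SkipEncoder:
    """Used at training time: passes a cached embedding straight through to the combiner."""

    def __call__(self, embedding: Embedding) -> Embedding:
        return embedding


# Preprocessed data is reused when the dataset and preprocessing parameters match.
_preprocessing_cache: Dict[Tuple, List[Embedding]] = {}


def preprocess(texts: List[str], encoder: FrozenEncoder, params: Dict[str, object]) -> List[Embedding]:
    key = (tuple(texts), tuple(sorted(params.items())))
    if key not in _preprocessing_cache:
        # One forward pass per row, paid once during preprocessing.
        _preprocessing_cache[key] = [encoder(t) for t in texts]
    return _preprocessing_cache[key]


def train(texts: List[str], encoder: FrozenEncoder, params: Dict[str, object], epochs: int) -> int:
    embeddings = preprocess(texts, encoder, params)
    skip = SkipEncoder()
    steps = 0
    for _ in range(epochs):
        for emb in embeddings:
            combiner_input = skip(emb)  # no pretrained-model forward pass inside the loop
            # A real trainer would feed combiner_input to the combiner and decoder here.
            steps += 1
    return steps


encoder = FrozenEncoder()
reviews = ["great movie", "boring plot", "loved it"]
params = {"cache_encoder_embeddings": True}
train(reviews, encoder, params, epochs=10)  # first experiment: 30 training steps
train(reviews, encoder, params, epochs=10)  # second experiment reuses the cached data
print(encoder.forward_passes)  # 3
```

Without caching, the same two runs would have needed 60 forward passes; with it, the count stays at one per row until a preprocessing parameter changes.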

In v0.8, we plan to use this same technique to enable training Gradient-Boosted Machine (GBM) models in Ludwig with rich embeddings from large language models, allowing users to swap between neural networks and tree models on the same preprocessed dataset using pretrained text and image features.

New Distributed Training Strategies

Since the early days of Ludwig, support for Horovod / MPI has been available to enable distributed data parallel training. Since v0.4, Ludwig has taken this integration a step further to make the entire end-to-end process including preprocessing, training, evaluation, prediction, and hyperparameter optimization scalable and distributed by running on top of Ray. Now in v0.7, we’re leveraging the simplified abstractions introduced in the Ray AI Runtime (AIR) to add support for more distributed training strategies beyond Horovod, supporting both data parallelism (DDP) and data + model parallelism (FSDP).

Distributed Data Parallel (DDP)

Distributed Data Parallel (DDP) is PyTorch's native data-parallel library. It works very similarly to Horovod but does not require installing any additional packages. You can switch from Horovod to DDP in Ludwig by setting the strategy parameter to ddp in the trainer section of the backend config:

```yaml
backend:
  trainer:
    strategy: ddp
```

Switch between Horovod and DDP by changing the strategy parameter in the config.

In benchmarks, we found DDP and Horovod to perform nearly identically, so if you're not already using Horovod, DDP is the easiest way to get started with distributed training in Ludwig.
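Both strategies follow the same data-parallel pattern: replicate the model on each worker, give each worker a different shard of the batch, and average the gradients with an all-reduce so every replica applies the same update. A pure-Python sketch of that step for a one-parameter linear model follows; `all_reduce_mean` is a hypothetical stand-in for the collective that DDP or Horovod runs across GPUs. With equal-sized shards, the averaged gradient equals the full-batch gradient, so data-parallel training behaves like single-process training on the combined batch.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y)


def local_gradient(w: float, shard: List[Sample]) -> float:
    """Mean gradient of 0.5 * (w * x - y) ** 2 over one worker's shard."""
    return sum((w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values: List[float]) -> float:
    """Stand-in for the all-reduce collective: every worker ends up with the same average."""
    return sum(values) / len(values)


def ddp_step(w: float, data: List[Sample], num_workers: int, lr: float) -> float:
    # Each worker gets a disjoint shard of the global batch.
    shards = [data[i::num_workers] for i in range(num_workers)]
    grads = [local_gradient(w, shard) for shard in shards]  # computed in parallel in real DDP
    g = all_reduce_mean(grads)  # synchronized gradient, so all replicas stay identical
    return w - lr * g


data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]
w = 0.0
for _ in range(50):
    w = ddp_step(w, data, num_workers=2, lr=0.05)
print(round(w, 2))  # 2.02, the least-squares slope for this data
```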

Fully Sharded Data Parallel (FSDP)

Fully Sharded Data Parallel or FSDP is PyTorch's native data-parallel + model-parallel library for training very large models whose parameters are spread across multiple GPUs.

```yaml
backend:
  trainer:
    strategy: fsdp
```

Enable Fully Sharded Data Parallel for training very large models.

The primary scenario to use FSDP is when the model you're training is too large to fit into a single GPU (e.g., fine-tuning a large language model like BLOOM). When the model is small enough to fit in a single GPU, however, benchmarking has shown it's generally better to use Horovod or DDP.
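What lets FSDP fit such models is that each GPU permanently stores only about 1/N of every layer's parameters (along with the matching gradients and optimizer state), and the full parameters of a layer are all-gathered only for the moment that layer is computed. The pure-Python sketch below shows just that sharding and gathering bookkeeping; the function names are hypothetical and this is not PyTorch's FSDP API.

```python
import math
from typing import List


def shard_parameters(params: List[float], world_size: int) -> List[List[float]]:
    """Split a flat parameter vector into world_size equal shards, one per GPU."""
    shard_len = math.ceil(len(params) / world_size)
    # Pad with zeros so every rank holds the same number of elements.
    padded = params + [0.0] * (shard_len * world_size - len(params))
    return [padded[r * shard_len:(r + 1) * shard_len] for r in range(world_size)]


def all_gather(shards: List[List[float]], numel: int) -> List[float]:
    """Reassemble the full parameters from every rank's shard and drop the padding."""
    full = [p for shard in shards for p in shard]
    return full[:numel]


def sharded_forward(shards: List[List[float]], numel: int, x: List[float]) -> float:
    full = all_gather(shards, numel)  # materialize the full layer only for this computation
    y = sum(w * xi for w, xi in zip(full, x))
    del full                          # ...and free it again right afterwards
    return y


params = [float(i) for i in range(10)]  # a 10-element "layer"
shards = shard_parameters(params, world_size=4)
print([len(s) for s in shards])  # [3, 3, 3, 3]: each rank stores about 1/4 of the layer
```

The trade-off is extra communication for every gather, which is consistent with the benchmarking advice above: if the model already fits on one GPU, DDP or Horovod avoids that overhead.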

Conclusion

This announcement blog only scratches the surface of the new features on offer in Ludwig v0.7. There’s a lot more we didn’t cover that we encourage you to try out including:

- Outlier Removal for number features.

- Hash Splitting for deterministic train-test splits that prevent data leakage.

- Improvements to the Ludwig Dataset Zoo API, including a number of new benchmark datasets.

- API annotations for contributors and Python users.

- Compatibility with Ray 2.3 and the new Ray AI Runtime (AIR).

We’re excited for the community to try fine-tuning large image and text models with Ludwig v0.7. Please reach out to us on GitHub or Slack if you have any questions or would like to get in touch.

In the v0.8 release, we’re looking forward to revisiting GBMs and enabling more seamless experimentation between ECD and GBM models across a range of different data types (not only tabular features!).

We’d like to acknowledge the contributions of:

- Jim Thompson for his amazing work on pretrained image models and image augmentation.

- Arnav Garg and Geoffrey Angus for their work on Ray 2.1, 2.2, and 2.3 compatibility fixes and other fixes and improvements.

- Justin Zhao, Wael Abid, Jeffery Kinnison, Joppe Geluykens, and Shreya Rajpal for various bug fixes and improvements.

- Kabir Brar and Connor McCormick for their work on unifying and completing the Ludwig schema.

- Daniel Treiman for his work on the revamped Dataset Zoo API.

- Clark Zinzow, Amog Kamsetty, and Antoni Baum at Anyscale for their continued collaboration on Ray integration with Ludwig.