Predicting Customer Review Ratings on Multi-Modal Data with Predibase and Ludwig

Watch our recent webinar to see this use case in action: https://go.predibase.com/hands-on-with-multi-modal-machine-learning-lp.

In this blog post, we will show how you can easily train, iterate, and deploy a highly-performant ML model on a multi-modal dataset using Predibase, the low-code declarative ML platform, and open-source Ludwig.

If you want to see this use case in action, make sure to watch the on-demand webinar or request a demo to learn more about the platform. To follow along, you can use our open-source project Ludwig with a Jupyter Notebook here. If you are new to Ludwig, feel free to check out our quick tutorial.

Predicting Customer Reviews with ML

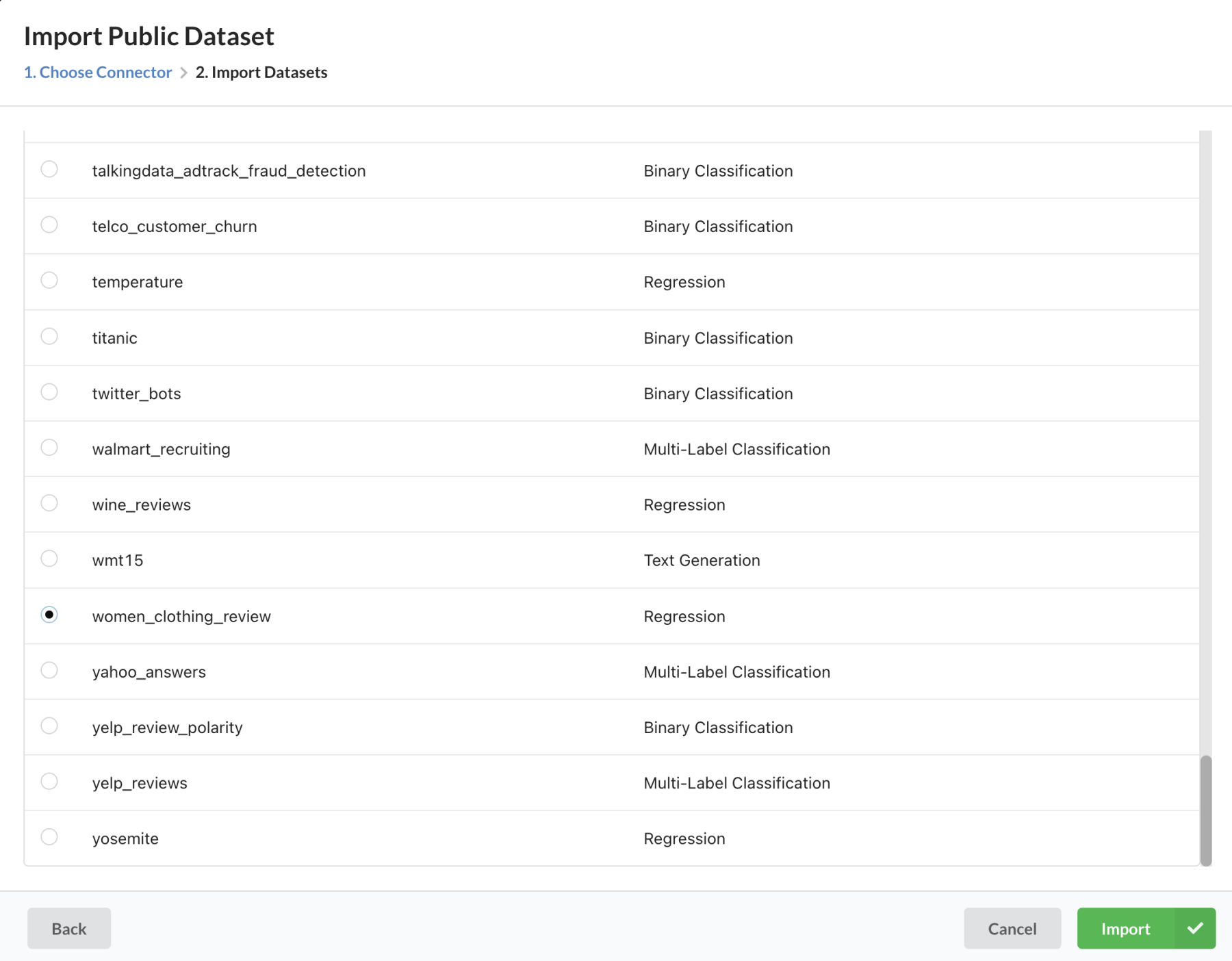

For today’s use case, we’ll take a look at the Women’s E-commerce Clothing Reviews dataset. With a surge in e-commerce shopping in recent years, customer reviews have become an important factor in both understanding customer satisfaction and influencing customer demand. We’ll be using the e-commerce dataset to predict customer ratings from detailed reviews. With this information, organizations can proactively reach out to dissatisfied customers or improve products before negative reviews start to amass. Depending on what you’re interested in predicting, this use case can also be easily adapted into a product recommendation or product sentiment use case directly within the Predibase platform.

The dataset itself contains over 20k rows of real, anonymized reviews for women’s clothing in the e-commerce industry. In addition, this multimodal dataset includes a wide variety of feature types including categorical, numerical, and text features. These features include the age of the reviewer, product department, text description of the reviews, and the number of other users who found this review helpful. This set of wide-ranging features makes this use case a great fit for a platform like Predibase, which allows you to leverage all your structured and unstructured data to train cutting-edge ML models.

The ML task we’ll investigate is predicting the number of stars (from 1 through 5, where 5 indicates the best score) of a given review. Since we will treat this rating as a numerical value, this is a regression task, and we’ll use R2 (the coefficient of determination) as the performance metric.
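For readers who have not worked with R2 before, here is a minimal sketch of how the metric can be computed by hand. The `r2_score` helper and the sample ratings are illustrative assumptions on our part, not part of Ludwig or Predibase, which report this metric for you.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    mean_true = sum(y_true) / len(y_true)
    # Residual sum of squares: how far predictions are from the truth.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: how far the truth is from its own mean.
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R2 is undefined when all true values are identical")
    return 1.0 - ss_res / ss_tot


# Hypothetical star ratings and model outputs, for illustration only.
true_ratings = [5, 4, 1, 3, 5]
predicted_ratings = [4.6, 4.1, 2.0, 3.2, 4.8]
print(f"R2 = {r2_score(true_ratings, predicted_ratings):.4f}")
```

A model that always predicts the mean rating scores 0, and a perfect model scores 1, so higher values are better.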

So without further ado, let’s dive in!

Connecting to Structured and Unstructured Data

Predibase allows you to instantly connect to your cloud data sources, pulling in all your data—including tabular, text, images, audio, and more—wherever it may live. For today’s example, the Women’s Clothing Review dataset is included in the out-of-the-box Ludwig datasets which makes it just a few clicks to pull it into Predibase.

If you’re following along with Ludwig, follow the instructions in the notebook to download the dataset.

Predibase makes it easy to try out a variety of datasets out of the box.

Getting our first ML models trained

Phase 1 - Train Model Baselines

Now that we have our data in Predibase, let’s continue to the exciting part of training models!

One of the benefits of a tool like Predibase is the ability to quickly establish baselines for a given dataset and task. When you are first analyzing a dataset, you may not immediately know if an ML-based approach could be useful, let alone what initial results you can expect to see. Built on top of Ludwig’s data-centric configuration approach, Predibase makes it easy to train your first baseline models. Let’s see this in action by training Neural Networks and Tree-based models.

Default Neural Network Model

Configuration

input_features:
  - name: Clothing ID
    type: number
  - name: Age
    type: number
  - name: Recommended IND
    type: binary
  - name: Positive Feedback Count
    type: number
  - name: Division Name
    type: category
  - name: Department Name
    type: category
  - name: Class Name
    type: category
output_features:
  - name: Rating
    type: number
model_type: ecd

Default Neural Network Configuration

- Training R2: 0.4921

- Test R2: 0.4896

Default Gradient-boosted Tree Model

Configuration

input_features:
  - name: Clothing ID
    type: number
  - name: Age
    type: number
  - name: Recommended IND
    type: binary
  - name: Positive Feedback Count
    type: number
  - name: Division Name
    type: category
  - name: Department Name
    type: category
  - name: Class Name
    type: category
output_features:
  - name: Rating
    type: number
model_type: gbm

Default Gradient-boosted Tree Model Configuration

- Training R2: 0.6619

- Test R2: 0.6141

Before moving on, let’s highlight a few key points.

To get started with Ludwig, all you have to do is specify your input and output features in a configuration file (in this case, YAML) and start training your baselines. Predibase simplifies the modeling process even further, letting you arrive at a trained model with just a few clicks.
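If you prefer Python to YAML, the same two baseline declarations can be sketched as plain dictionaries. The helper names `baseline_config` and `train_baseline` are our own, and the training step assumes Ludwig is installed and that `dataset` is a DataFrame or file path containing the columns listed above. Ludwig is imported lazily, so the configuration part runs on its own.

```python
# Structured columns and their Ludwig feature types, as used in the baselines.
STRUCTURED_FEATURES = [
    ("Clothing ID", "number"),
    ("Age", "number"),
    ("Recommended IND", "binary"),
    ("Positive Feedback Count", "number"),
    ("Division Name", "category"),
    ("Department Name", "category"),
    ("Class Name", "category"),
]


def baseline_config(model_type):
    """Build a baseline config; model_type is 'ecd' (neural network) or 'gbm'."""
    if model_type not in ("ecd", "gbm"):
        raise ValueError(f"unsupported model_type: {model_type!r}")
    return {
        "model_type": model_type,
        "input_features": [
            {"name": name, "type": ftype} for name, ftype in STRUCTURED_FEATURES
        ],
        "output_features": [{"name": "Rating", "type": "number"}],
    }


def train_baseline(config, dataset):
    """Train one baseline with Ludwig (requires `pip install ludwig`)."""
    from ludwig.api import LudwigModel  # imported lazily on purpose

    model = LudwigModel(config)
    train_stats, _, output_dir = model.train(dataset=dataset)
    return model, train_stats, output_dir


configs = {name: baseline_config(name) for name in ("ecd", "gbm")}
print(sorted(configs))
```

The two configurations differ only in `model_type`, which is what makes it cheap to train both baselines side by side.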

In addition, one of the unique advantages you get is the ability to train both deep learning and tree-based models at the same time. This gives you more confidence in the results of your baselines and provides starting points for future iteration. Below, we see that the two models perform differently, which suggests there may be some room for improvement.

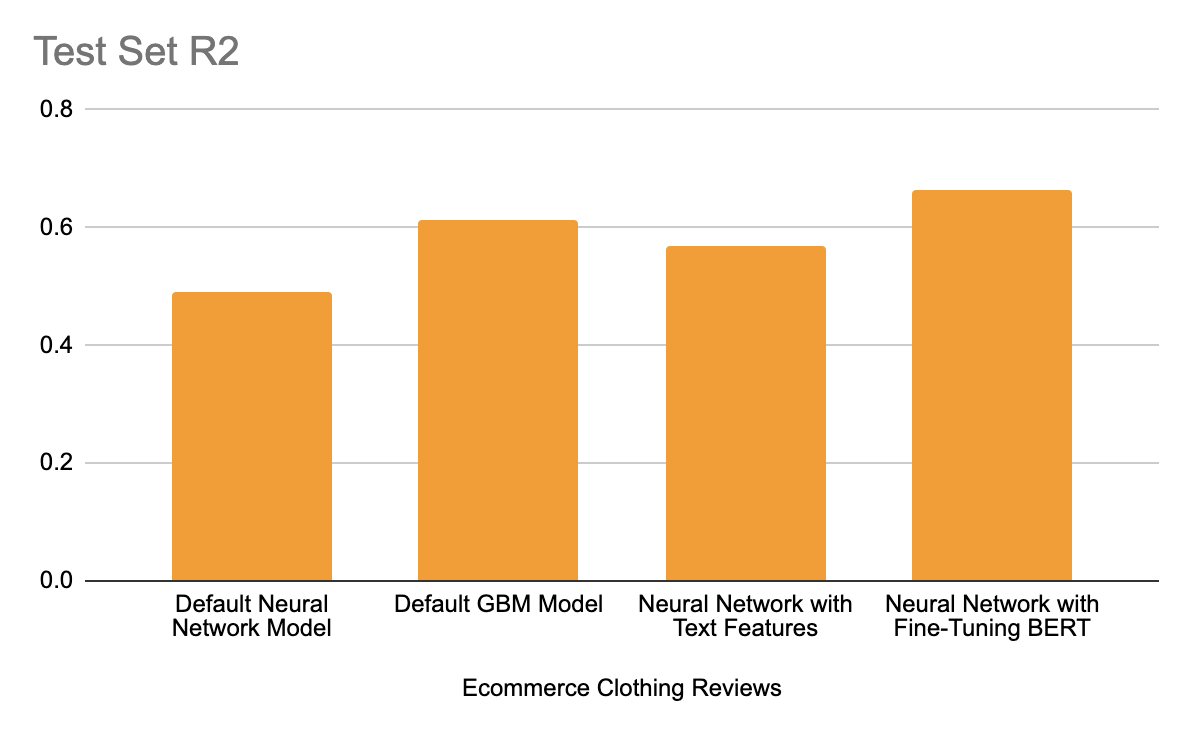

Default Neural Network. R2: 0.4896

Default GBM Model. R2: 0.6141

Iterating Models to Success

With real-world machine learning, it's rarely the case that the first model we train will be our final model. There are typically many iterations of exploration before converging on a model that meets our performance criteria while ensuring the model performs well on different slices of our data. So now that we have a few good baselines, what if we want to improve the model further?

In our experience, this question comes up all too often and other options on the market don’t quite work. If you look at other tools, you’ll likely find two broad types of options:

- Fall back to the DIY approach of building models from scratch, which requires lots of code, or

- Try AutoML solutions hoping they can improve performance, but at the cost of flexibility

We’ve seen companies try both approaches, but in our opinion neither provides a truly efficient way for users of all skill levels to iterate on ML models. With Predibase and declarative ML, you get the best of both worlds through a unique combination of simplicity and flexibility, specifically:

- The simplicity of declaring what you want to change and letting Predibase automatically make the necessary modifications and train the end-to-end pipeline for you

- The flexibility to customize nearly *any* aspect of the model pipeline from how text is preprocessed to the learning rate used during training

This approach of declarative machine learning has been tried and tested by companies like Meta, Apple, and Uber (where Ludwig itself was created). Let’s discover how easy this declarative approach makes it to experiment and iterate!

Phase 2 - Adding Unstructured Text Data

How then should we begin thinking about iterating on our models? There are a variety of different approaches we can try including (but not limited to):

- Using additional preprocessing on the dataset

- Choosing different model types for encoders / combiners / decoders

- Tuning specific hyperparameters related to the training process like batch size and learning rate

In our case, we can try something even simpler to start. You may have noticed that although we used a neural network for one of our baseline models, we actually didn’t use the text features at all!

Given both the signal that could lie within customer review text and how easily Predibase handles unstructured data, let’s go ahead and add those text features and retrain our model. In Predibase, it’s a matter of a few toggles to enable those features, and in Ludwig, it’s only a few lines of configuration, as shown below.

Neural Network using Text features

input_features:
  - name: Clothing ID
    type: number
  - name: Age
    type: number
  - name: Title
    type: text
  - name: Review Text
    type: text
  - name: Recommended IND
    type: binary
  - name: Positive Feedback Count
    type: number
  - name: Division Name
    type: category
  - name: Department Name
    type: category
  - name: Class Name
    type: category
output_features:
  - name: Rating
    type: number
combiner:
  type: tabnet

Neural Network using Text Features Config
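Because the model is just a configuration, moving from the baseline to the text-enabled version can also be scripted. The sketch below uses a hypothetical helper, `add_text_features`, and a shortened stand-in for the baseline config; it copies the config, appends the text columns, and optionally sets the combiner, leaving the original untouched.

```python
import copy


def add_text_features(config, text_columns, combiner_type=None):
    """Return a copy of `config` with the given columns added as text inputs."""
    updated = copy.deepcopy(config)
    existing = {feature["name"] for feature in updated["input_features"]}
    for column in text_columns:
        if column not in existing:  # avoid declaring a feature twice
            updated["input_features"].append({"name": column, "type": "text"})
    if combiner_type is not None:
        updated["combiner"] = {"type": combiner_type}
    return updated


# Shortened baseline, for illustration; the real one lists all seven features.
baseline = {
    "input_features": [
        {"name": "Age", "type": "number"},
        {"name": "Class Name", "type": "category"},
    ],
    "output_features": [{"name": "Rating", "type": "number"}],
}

with_text = add_text_features(
    baseline, ["Title", "Review Text"], combiner_type="tabnet"
)
print([feature["name"] for feature in with_text["input_features"]])
```

Working on a deep copy keeps each model version as its own immutable record, which makes later comparisons straightforward.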

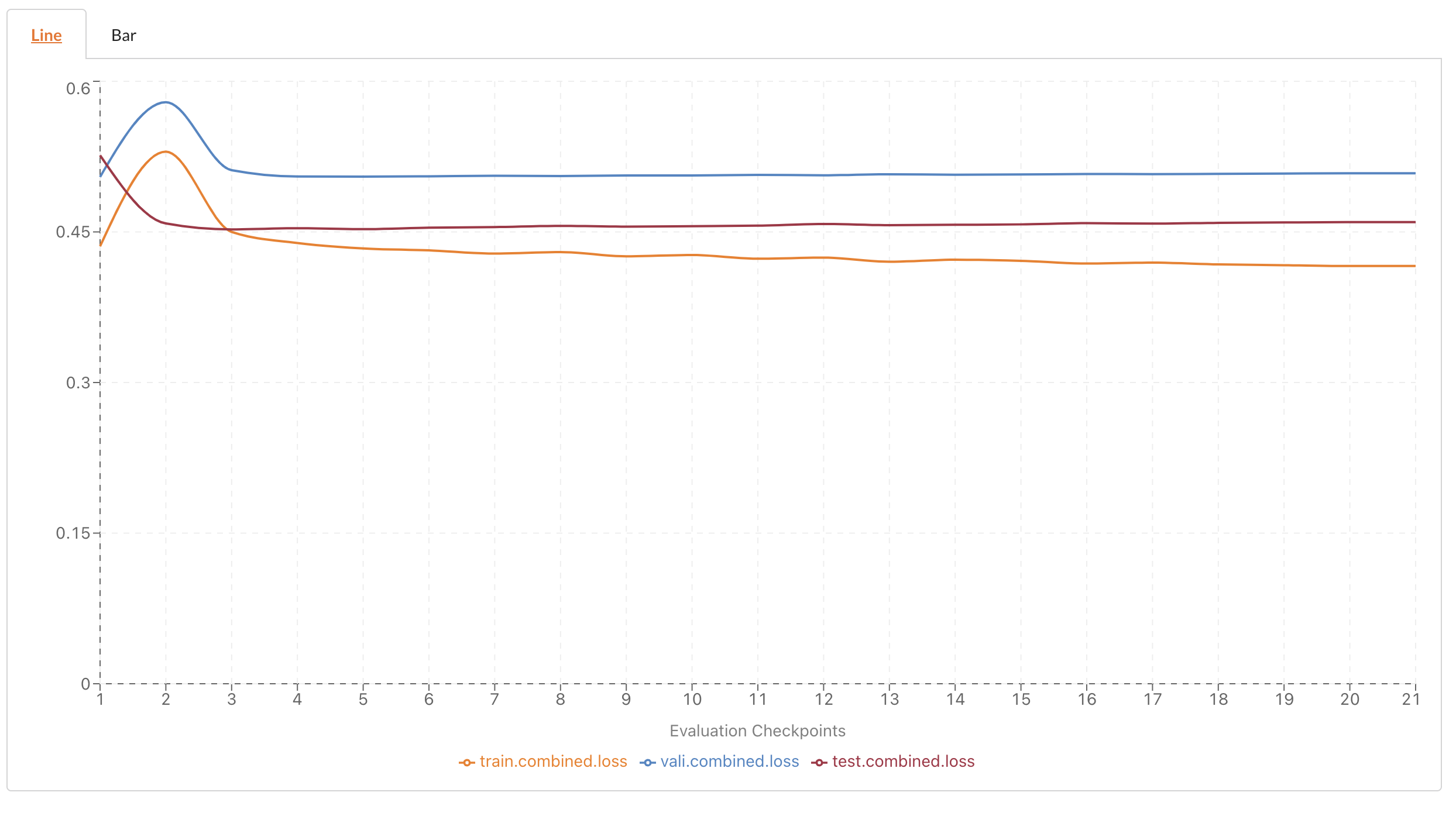

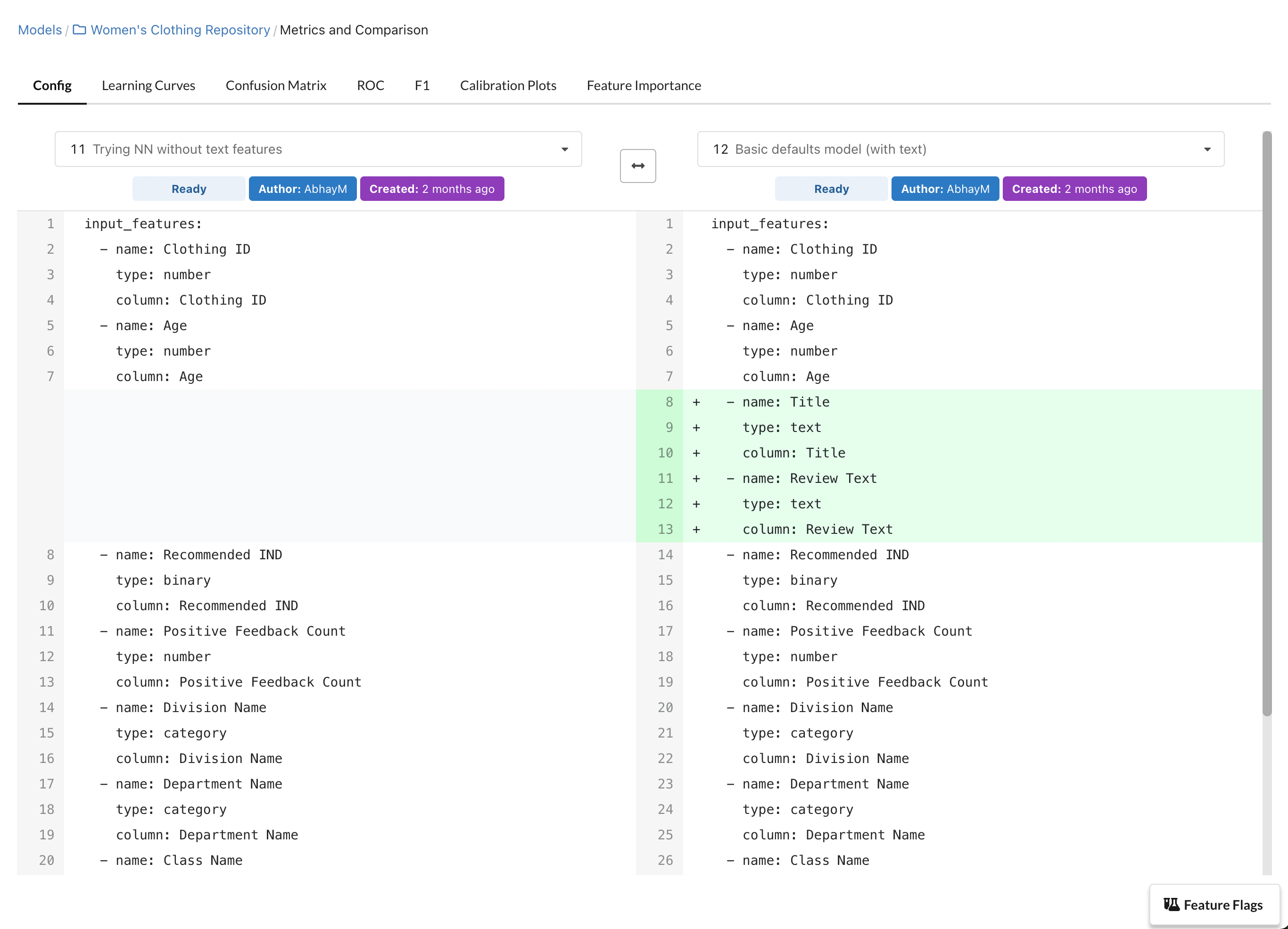

Once we train this new neural network model with the updated text features, we can see the performance metrics and compare how it performed to the previous iteration. Before we do that, however, it’s worth noting one of the many benefits of having models expressed as configuration files: Model Version Diff.

Comparing model parameters in Predibase is as easy as looking at a few lines of code in a config file.

With an entire model pipeline expressed as a single configuration file, we can visually inspect the differences between these two models and verify that we did indeed add the unstructured text fields in the new model versions. Neat!
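Outside the Predibase UI, a similar comparison can be approximated with Python's standard library. This is only a rough sketch of the idea, not how Predibase implements Model Version Diff; the `config_diff` helper and the two shortened configs are assumptions made for the example.

```python
import difflib
import json


def config_diff(old, new, old_name="v1", new_name="v2"):
    """Return unified-diff lines between two configs rendered as sorted JSON."""
    old_lines = json.dumps(old, indent=2, sort_keys=True).splitlines()
    new_lines = json.dumps(new, indent=2, sort_keys=True).splitlines()
    return list(
        difflib.unified_diff(
            old_lines, new_lines, fromfile=old_name, tofile=new_name, lineterm=""
        )
    )


# Shortened model versions, for illustration only.
version_1 = {
    "input_features": [{"name": "Age", "type": "number"}],
    "output_features": [{"name": "Rating", "type": "number"}],
}
version_2 = {
    "input_features": [
        {"name": "Age", "type": "number"},
        {"name": "Review Text", "type": "text"},
    ],
    "output_features": [{"name": "Rating", "type": "number"}],
}

for line in config_diff(version_1, version_2):
    print(line)
```

Sorting keys before diffing keeps the output stable, so only real changes between versions show up as added or removed lines.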

Performance of Neural Network using Text Features

- Training R2: 0.7543

- Test R2: 0.5671

Great, it looks like adding text features helped the neural network achieve a better test R2 score than its baseline (0.5671 versus 0.4896). Now you may be wondering if this should be our final model or if we should continue iterating. To help answer that question, Predibase offers a variety of visualizations for understanding the nuances of how our model performed, including ROC curves, Confusion Matrices, and Feature Importances.

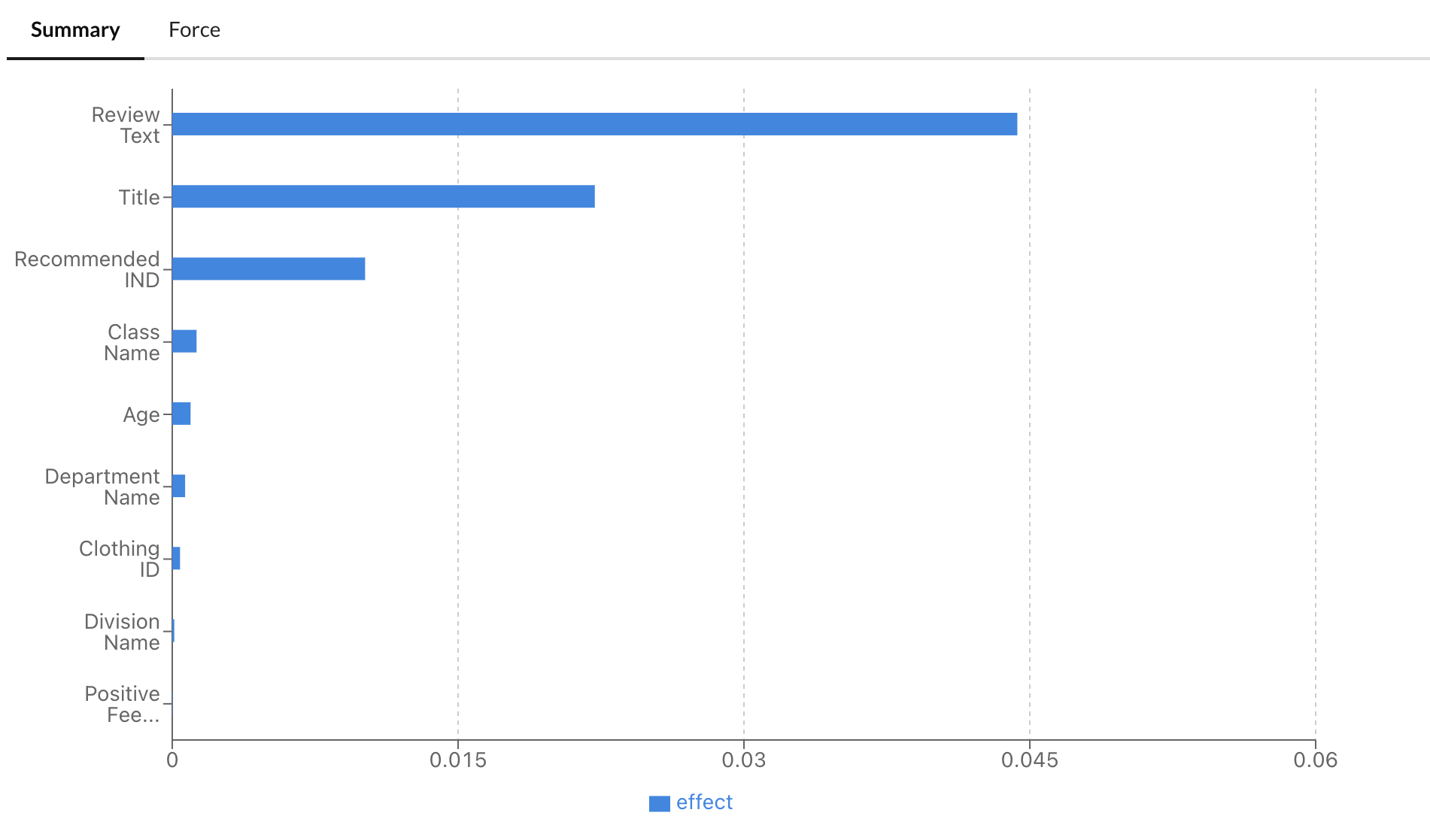

In particular, let’s take a look at the feature importance plots (powered by Integrated Gradients) to see if we can build stronger intuition for how much these text features aided model performance.

Feature Importance for Neural Network with Text Features.

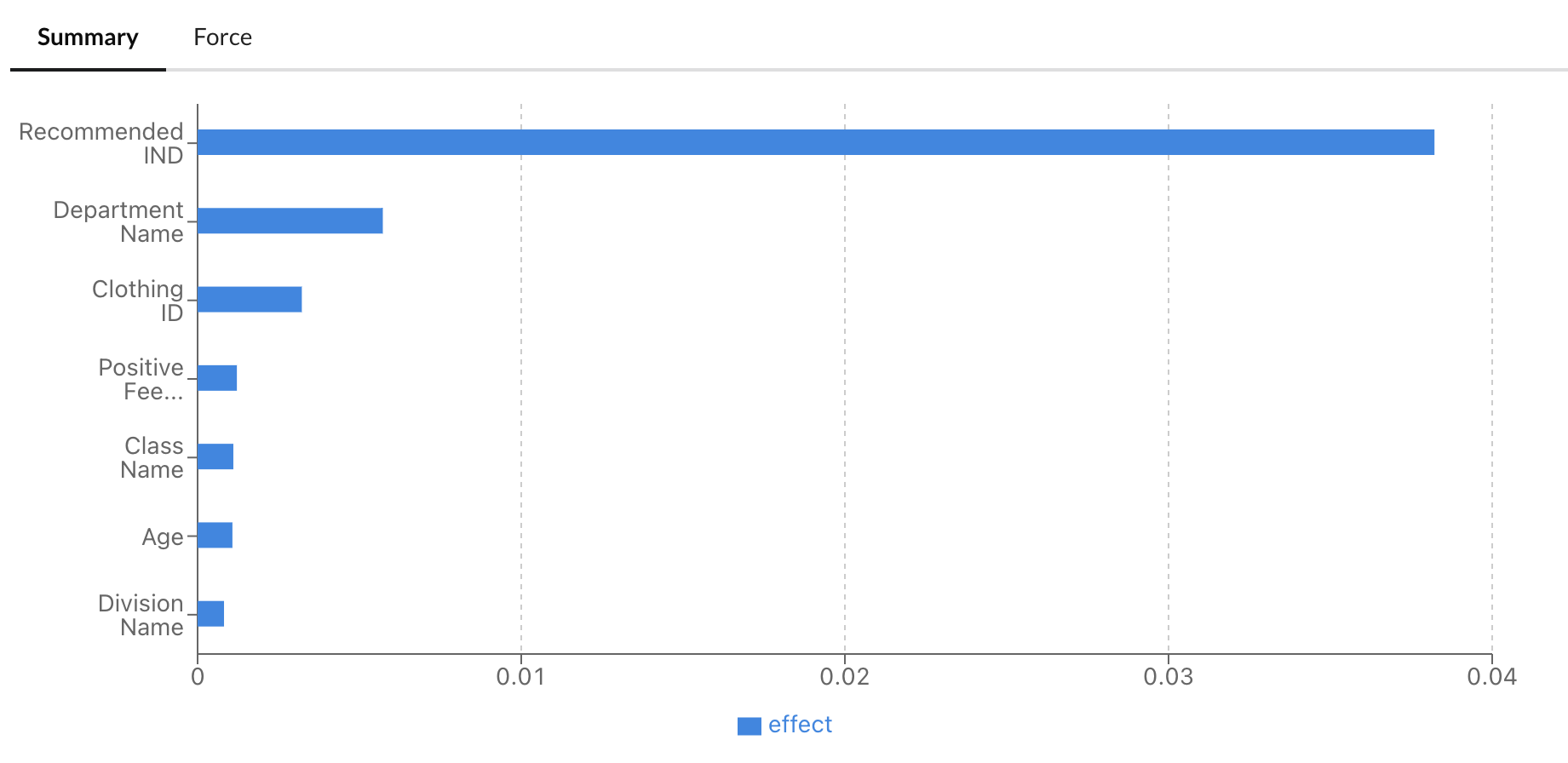

The chart above shows the relative importance values for each of the input features in the model. It looks like those text features (Review Text and Title) were critically important for the model to learn after all! The contrast becomes even starker when compared with the original neural network’s feature importance chart.
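To build some intuition for what Integrated Gradients measures, here is a toy sketch in plain Python. It is not the implementation used by Predibase or Ludwig: the `toy_model` function, the all-zeros baseline, and the finite-difference gradients are all assumptions chosen to keep the example self-contained. The method averages the model's gradient along a straight path from a baseline input to the actual input and scales it by how far each feature moved.

```python
def integrated_gradients(f, x, baseline, steps=200, eps=1e-5):
    """Approximate Integrated Gradients for a scalar function f of a list."""
    attributions = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint rule along the path
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i in range(len(x)):
            up = list(point)
            up[i] += eps
            down = list(point)
            down[i] -= eps
            grad_i = (f(up) - f(down)) / (2 * eps)  # central difference
            attributions[i] += grad_i * (x[i] - baseline[i]) / steps
    return attributions


def toy_model(v):
    """Hypothetical model: feature 0 is linear, feature 1 quadratic, feature 2 unused."""
    return 3.0 * v[0] + 0.5 * v[1] ** 2 + 0.0 * v[2]


example = [1.0, 2.0, 4.0]
zeros = [0.0, 0.0, 0.0]
scores = integrated_gradients(toy_model, example, zeros)
print([round(s, 4) for s in scores])
```

A useful sanity check is that the attributions add up to the difference between the model's output on the input and on the baseline, and that a feature the model ignores receives no credit.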

Feature Importance for Neural Network without Text Features.

Phase 3 - Fine-tuning BERT

Now that we’re using all the features, let’s try something a bit more advanced! Predibase and Ludwig integrate with Hugging Face, putting state-of-the-art models at our fingertips. Because Ludwig uses a compositional model architecture, we can plug Hugging Face models in as encoders at the level of individual input feature types.

In our case, let’s try two things:

- Use a heavyweight encoder like BERT to encode our text features. We’ll start from the pre-trained version of the model and fine-tune it on our dataset.

- Do a bit of feature selection and keep only the two text features (Title and Review Text), as they were the most influential overall in our previous iteration.

Neural Network with fine-tuning BERT

input_features:
  - name: Title
    type: text
  - name: Review Text
    type: text
output_features:
  - name: Rating
    type: number
trainer:
  optimizer:
    type: adamw
  batch_size: auto
  learning_rate: 0.00002
  learning_rate_warmup_epochs: 0
defaults:
  text:
    encoder:
      type: bert
      trainable: true

Neural Network with Fine-Tuning BERT Config

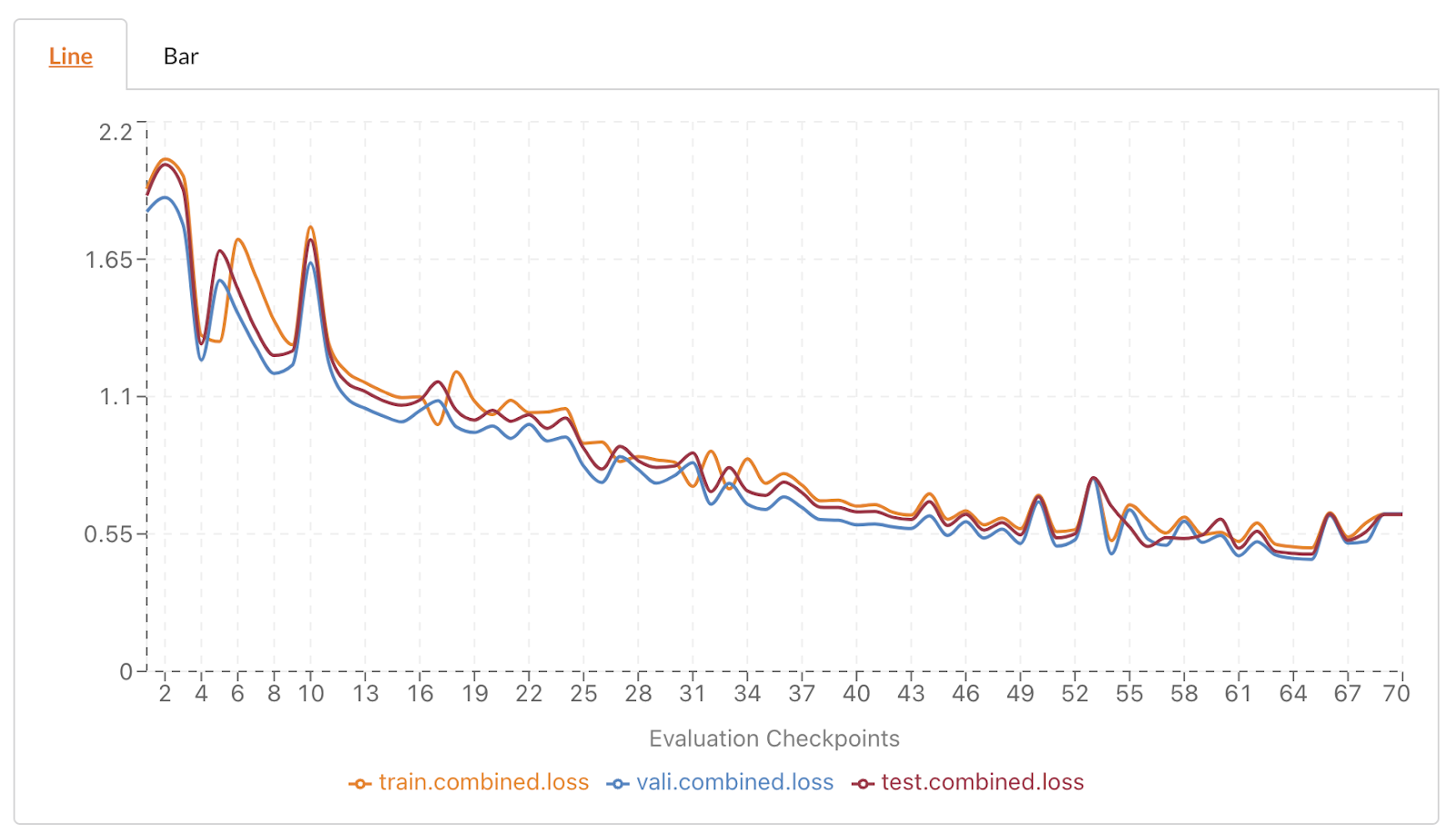

- Training R2: 0.9186

- Test R2: 0.6429
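The configuration above sets the BERT encoder once, through type-level defaults for all text features. As a hedged alternative, the encoder can also be declared on individual features, which is handy if you want different encoders for Title and Review Text. The `set_text_encoder` helper below is our own, and the per-feature `encoder` block assumes a Ludwig version (0.6 or later) that uses this schema.

```python
import copy


def set_text_encoder(config, feature_names, encoder_type="bert", trainable=True):
    """Return a copy of `config` with an encoder set on the named text features."""
    updated = copy.deepcopy(config)
    targets = set(feature_names)
    for feature in updated["input_features"]:
        if feature["name"] in targets:
            if feature["type"] != "text":
                raise ValueError(f"{feature['name']!r} is not a text feature")
            feature["encoder"] = {"type": encoder_type, "trainable": trainable}
    return updated


text_only = {
    "input_features": [
        {"name": "Title", "type": "text"},
        {"name": "Review Text", "type": "text"},
    ],
    "output_features": [{"name": "Rating", "type": "number"}],
}

# Fine-tune BERT on the long review body only; Title keeps its default encoder.
mixed = set_text_encoder(text_only, ["Review Text"])
print(mixed["input_features"])
```

Setting `trainable` to `True` is what turns a frozen pre-trained encoder into one that is fine-tuned on the review data.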

The Ease of Model Iteration with Predibase

Let’s summarize our findings from this iteration journey!

Summary of R2 scores for our customer rating prediction models.

You can see that training a multi-modal model that fine-tuned BERT for our text features along with defaults for our structured features resulted in the best performance with a Test R2 of 0.6636.

And while training baselines was simple enough to get us started, Predibase also allowed us to easily experiment by adding features and swapping in cutting-edge encoders to reach a high-performing model. Today, we experimented with some high-level parameters, but in a future post, we’ll take a look at how we can tweak more advanced parameters as well. With Predibase, this iteration journey can also continue in a more automated fashion by leveraging distributed hyperparameter optimization.
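As a rough sketch of what that could look like in open-source Ludwig, a `hyperopt` section can be added to an existing configuration. The search range, sample count, and the `with_hyperopt` helper are illustrative assumptions on our part, and the exact keys may differ between Ludwig versions, so check the documentation for the release you use.

```python
import copy


def with_hyperopt(config, num_samples=10):
    """Return a copy of `config` with a learning-rate search attached."""
    updated = copy.deepcopy(config)
    updated["hyperopt"] = {
        "goal": "maximize",  # higher R2 is better
        "metric": "r2",
        "output_feature": "Rating",
        "parameters": {
            # Illustrative range around the fine-tuning rate used earlier.
            "trainer.learning_rate": {
                "space": "loguniform",
                "lower": 0.00001,
                "upper": 0.001,
            },
        },
        "search_alg": {"type": "variant_generator"},
        "executor": {"type": "ray", "num_samples": num_samples},
    }
    return updated


base = {
    "input_features": [{"name": "Review Text", "type": "text"}],
    "output_features": [{"name": "Rating", "type": "number"}],
}
search_config = with_hyperopt(base, num_samples=8)
print(sorted(search_config["hyperopt"]["parameters"]))
```

The search is declared in the same file as the model, so each trial is simply another version of the configuration with different values filled in.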

Ready to Deploy Models into Production

Perfect, that final model is the best-performing model we’ve trained so far! Now that we have a model we’re satisfied with, how exactly should we go about operationalizing it? Building a custom, in-house deployment process would be quite time-consuming and complex. Without Predibase, we’d likely have to pass the model over to the ML Engineering team, who would have to figure out the best way to deploy it. Because use cases and performance requirements for inference vary significantly from model to model, we’ve seen many organizations fall into the trap of accumulating insurmountable tech debt while building out a model deployment pipeline.

Recognizing these challenges, Predibase offers performant, hassle-free deployments natively within the platform for both batch and real-time serving through the use of TorchScript and other cutting-edge technologies.

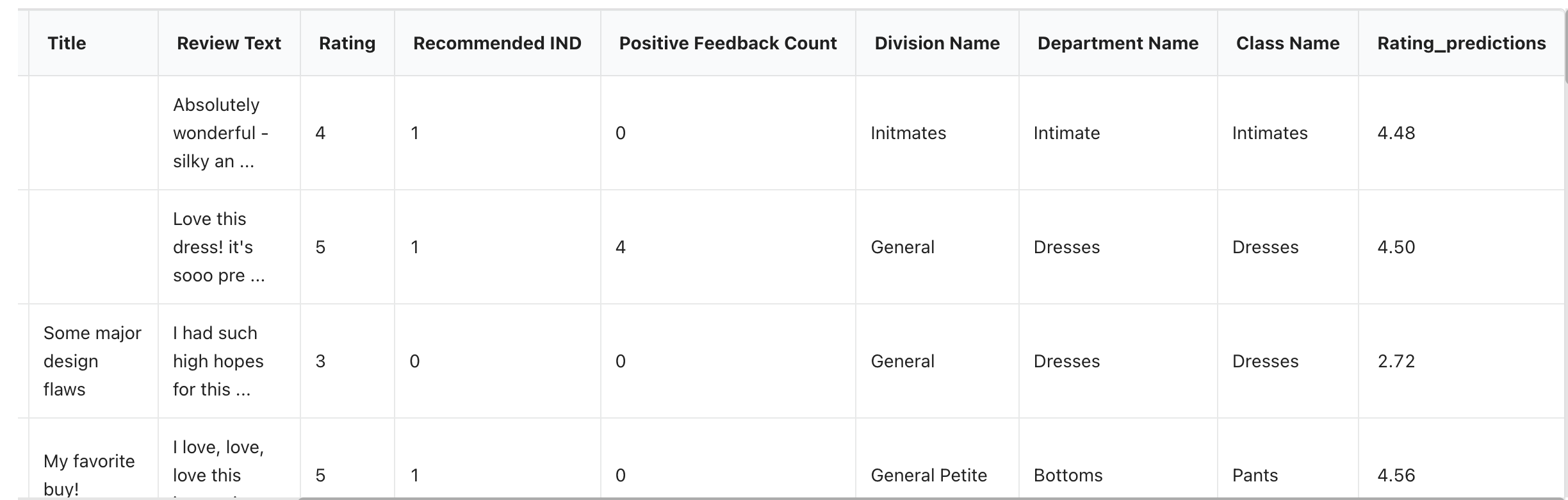

In the case of batch inference, we can use Predibase’s PQL (Predictive Query Language) to get predictions instantly and examine how the Rating_predictions compare to the ground truth Rating.

You can quickly explore rating predictions inline using Predibase’s predictive query language (PQL) and editor

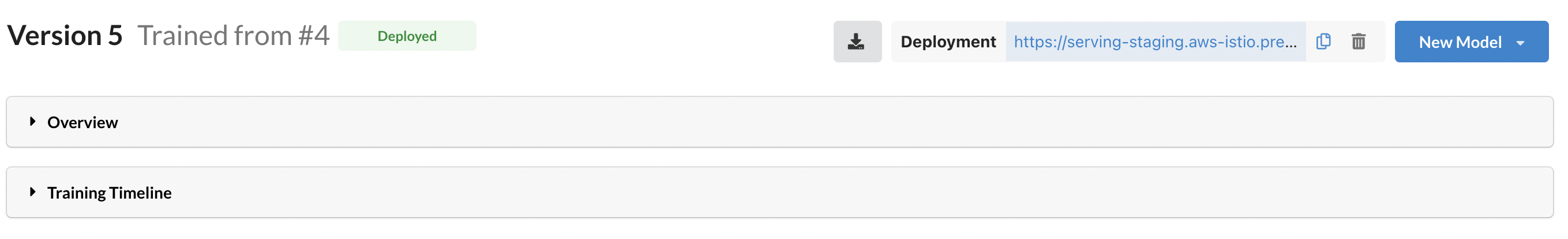

If instead, we want to deploy this to a REST endpoint for real-time inference, we can deploy to a high-throughput, low-latency endpoint that is live in just a few clicks!

You can deploy to a REST endpoint in just one click using Predibase.

Trying Multimodal Datasets on Predibase

In this example, we showed you how to build a review ratings prediction engine in less than 10 minutes using state-of-the-art deep learning techniques and multi-modal data on Predibase. This was possible due to the underlying compositional Encoder-Combiner-Decoder (ECD) model architecture, which makes unstructured data as easy to use as structured data. This is powerful for any e-commerce or retail company looking to better understand customer sentiment and apply ML to its business.

This was also just a quick preview of what’s possible with Predibase, the first platform to take a low-code declarative approach to machine learning. Predibase democratizes the approach of declarative ML used at big tech companies, allowing you to train and deploy deep learning models on multi-modal datasets with ease.

If you’re interested in finding out how declarative ML can be used at your company: