In our Fine-tuning Leaderboard, we showed how small fine-tuned open-source models consistently outperform GPT-4o across various tasks, offering a compelling alternative for organizations seeking cost-effective, high-quality AI solutions. However, a significant challenge remains: acquiring enough data to effectively train these smaller models. To overcome this, we have introduced a synthetic data generation workflow in Predibase. This means that with as few as 10 rows of seed data, you can train a small language model (SLM) like Llama-3.1-8b that beats GPT-4o at a fraction of the cost.

With only 10 rows of real data, our adapter trained on a combination of real and synthetic data outperforms 10-shot GPT-4o in a grounded common sense inference task.

In this tutorial blog, we’ll show you how to create effective synthetic datasets by comparing different techniques for synthetic data generation. The approach we arrive at combines chain-of-thought reasoning, problem decomposition, and a series of proposers, critiquers, and aggregators into a mixture-of-agents pipeline for enhanced synthetic data generation. We will walk through our experimental setup and key findings, emphasizing the importance of data quality, the impact of data quantity, and the cost benefits of fine-tuning an SLM on synthetic data versus K-shot learning and prompt engineering on GPT-4o. Lastly, we will demonstrate how to use this synthetic data generator on the Predibase platform, enabling you to unlock the full potential of these advanced models.

Synthetic Data Generation Methods

There are three main reasons why organizations want to generate synthetic data:

- Insufficient data: A lack of high-quality training data can be a major hurdle when dealing with new use cases, poor data quality, or significant performance gaps in prompting.

- Inaccessibility: Organizational barriers between machine learning teams and data engineering teams, or data access restrictions, can make it difficult to provide training data to the teams responsible for fine-tuning LLMs.

- Privacy: Synthetic data provides a practical solution when real data cannot be used for privacy reasons, for example when it contains personally identifiable information (PII).

In the following sections, we will focus on methods to generate narrow, task-specific data, which is crucial for many organizations.

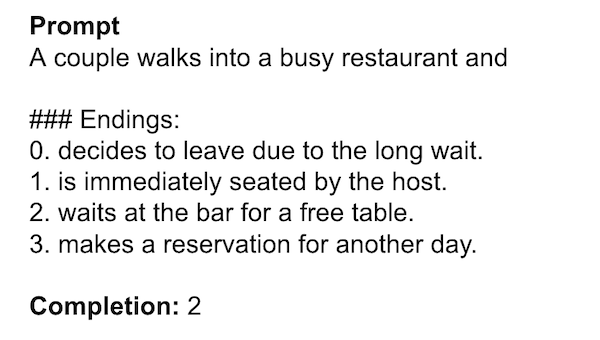

We evaluated these methods using the HellaSwag dataset, a common sense completion task, and CoronaNLP, a Twitter sentiment classification task. These datasets provide objective metrics and real-world relevance, helping us assess the strengths and weaknesses of each synthetic data generation approach.

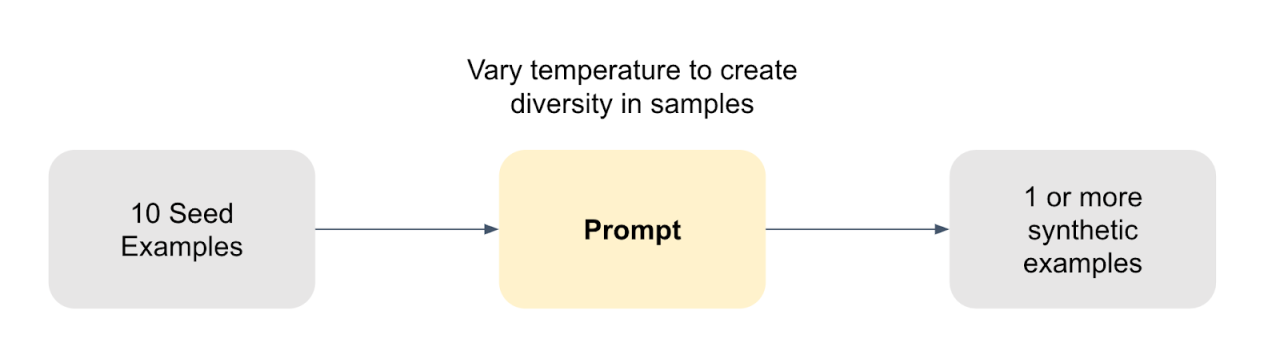

Synthetic Data Generation Method #1: Examples In, Example Out

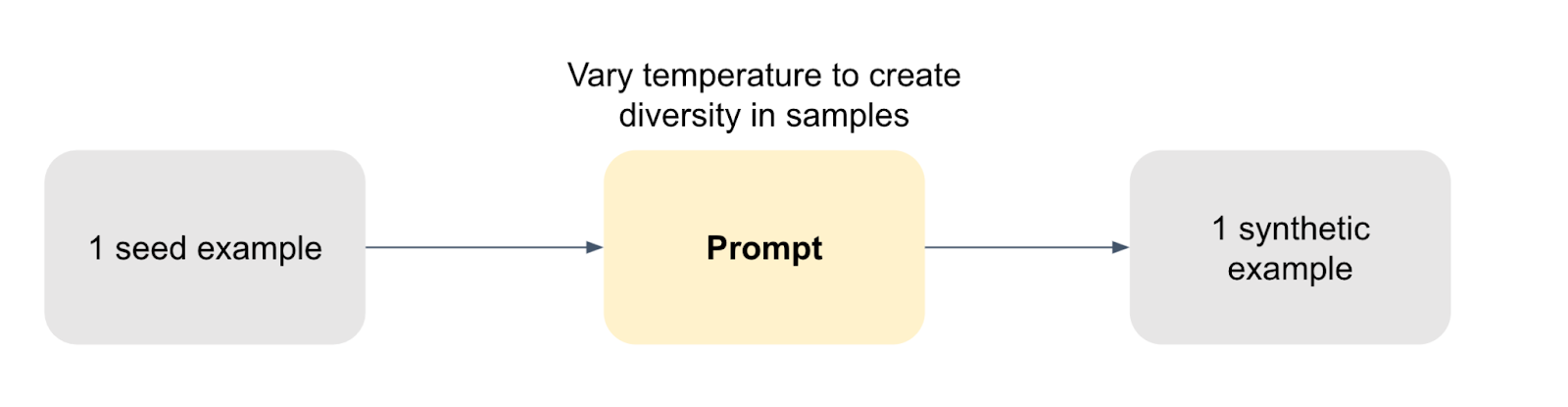

The first synthetic data generation method involves K-shot prompting, where a small number of seed examples, such as 10, are provided with task-specific context. For instance, with the HellaSwag dataset, the task is described, and examples are presented to the model, prompting it to generate one or more new synthetic examples.


Ensuring diversity in datasets is a crucial challenge, as it significantly impacts the effectiveness of fine-tuning. The temperature parameter plays a key role in determining output randomness. Lower temperatures produce more consistent results, while higher temperatures introduce greater variability, which can enhance creativity and diversity. By adjusting the temperature, you can address the issue of limited example diversity and improve the overall performance of your model.
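To make Method #1 concrete, here is a minimal sketch of how a K-shot generation prompt and a spread of sampling temperatures might be prepared. It is not Predibase's implementation: `build_kshot_prompt`, `temperature_schedule`, the JSON example format, and the 0.7 to 1.2 temperature range are all hypothetical choices for illustration. Each temperature would be passed as the `temperature` parameter of a separate teacher-model request.

```python
import json
import random

def build_kshot_prompt(task_description, seed_examples, k=10, n_new=1, rng=None):
    """Hypothetical helper: put up to k seed examples in one prompt and ask for n_new new ones."""
    rng = rng or random.Random(0)  # fixed seed so the chosen shots are reproducible
    shots = rng.sample(seed_examples, min(k, len(seed_examples)))
    lines = [task_description.strip(), "", "Here are some examples:"]
    for i, ex in enumerate(shots, 1):
        lines.append(f"Example {i}: {json.dumps(ex)}")
    lines.append("")
    lines.append(f"Write {n_new} new example(s) in the same JSON format.")
    return "\n".join(lines)

def temperature_schedule(n_requests, low=0.7, high=1.2):
    """Spread temperatures evenly across requests; higher values add variability."""
    if n_requests == 1:
        return [low]
    step = (high - low) / (n_requests - 1)
    return [round(low + i * step, 3) for i in range(n_requests)]
```

As the next paragraph notes, varying the temperature alone does not stop the model from averaging the k examples into one generic sample.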

This method is simple, fast, and cost-effective. However, providing multiple examples to the model at the same time can often lead to homogeneous data samples, even with varied temperatures as shown in the example below.

This generated example lacks details such that the possible endings are ambiguous.

We hypothesize that this is because the LLM internally condenses the examples together into one "average" example for generation, losing lots of nuance and specificity.

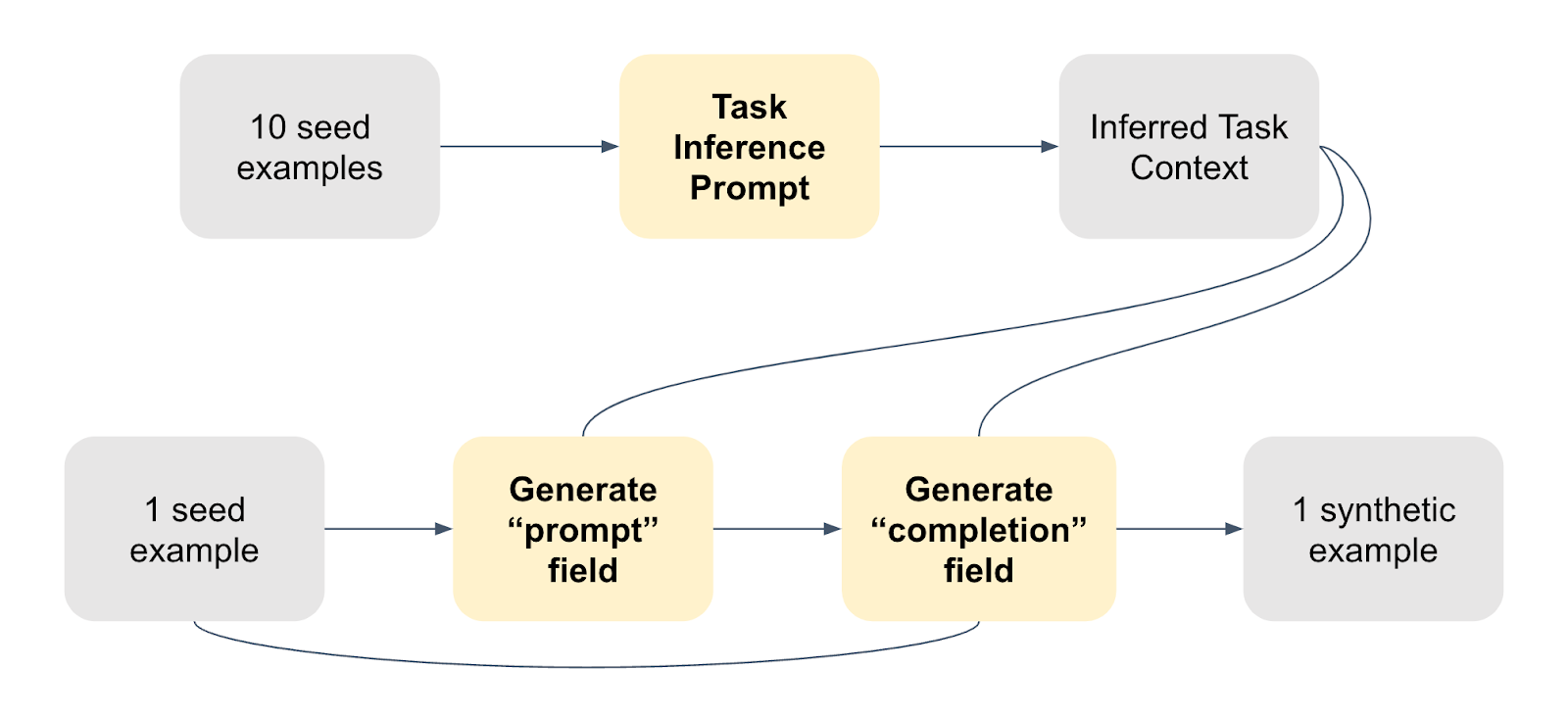

Synthetic Data Generation Method #2: Iterative, Single Sample

To address the issue of generating overly generic examples from multiple inputs, a single seed example method was tested. This approach involved providing one seed example to the model to generate a new example with similar characteristics.

Synthetic Data Generation Method: single seed example

We also used the temperature parameter to enhance the diversity of outputs, resulting in higher specificity and a greater percentage of valid examples. The generated examples were richer and more detailed, avoiding the context averaging seen with multiple examples.
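A minimal sketch of the single-seed loop, assuming a hypothetical `llm(prompt, temperature=...)` callable that wraps your teacher model; the prompt wording and helper names are illustrative, not the exact prompts we used. The key difference from Method #1 is that each request sees exactly one seed.

```python
import json

def build_single_seed_prompt(task_description, seed):
    """Hypothetical prompt: show exactly one seed so its details are not averaged away."""
    return (f"{task_description.strip()}\n\n"
            f"Here is one example:\n{json.dumps(seed)}\n\n"
            "Write one new example with similar characteristics, in the same JSON format.")

def generate_from_each_seed(task_description, seeds, llm, temperatures):
    """Method #2 sketch: one teacher call per (seed, temperature) pair."""
    outputs = []
    for seed in seeds:
        prompt = build_single_seed_prompt(task_description, seed)
        for t in temperatures:
            outputs.append(llm(prompt, temperature=t))  # llm is an assumed wrapper
    return outputs
```

With 10 seeds and, say, 5 temperatures this yields 50 candidate samples, each anchored to a single seed.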

This generated example is very specific and detailed, with an unambiguous completion, as opposed to the more generic ones produced by Method #1.

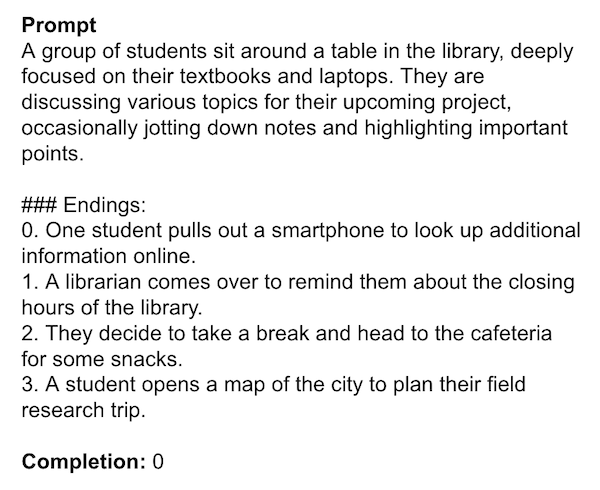

However, this approach sometimes missed general task nuances. For instance, in the example below, the model was not able to grasp the grammatical structure from the single example it was provided with and produced grammatically incorrect endings.

Invalid example generated by Method #2: the prompt ends with a comma, and the proposed endings are incomplete, grammatically incorrect sentences.

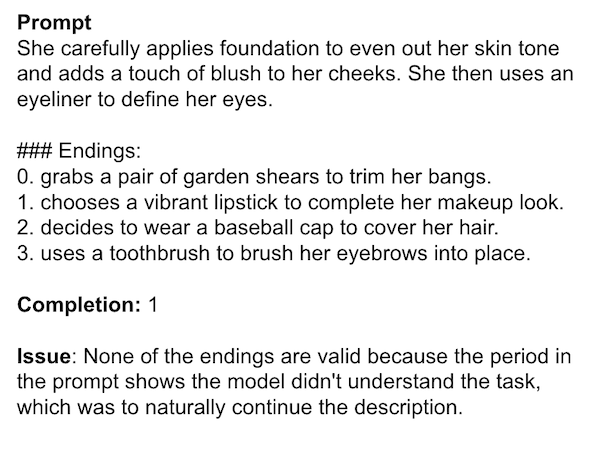

Additionally, the generated dataset tended to favor specific classes, such as "heavily positive" over "positive," losing the uniformity of the original distribution, as shown in the graph below.

The generated dataset is heavily skewed towards two specific classes.
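One way to quantify this kind of skew is to compare the label distribution of the seed set with that of the synthetic set. The sketch below uses total variation distance, which is our choice for illustration rather than the metric behind the graph; the class labels in any usage are hypothetical.

```python
from collections import Counter

def label_distribution(labels):
    """Map each label to its share of the dataset."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: c / total for label, c in counts.items()}

def total_variation_distance(p, q):
    """0.0 means identical distributions; 1.0 means no label in common."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)
```

For example, a seed set spread uniformly over five classes compared with a synthetic set that is 60% one class and 40% another gives a distance of 0.6, a large shift.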

Synthetic Data Generation Method #3: Single Pass

From Method #2, we found that using a single example can produce richer outputs but sacrifices the broader context provided by the K-shot method. Conversely, the K-shot method captures generic context but lacks specificity.

The concepts of chain-of-thought and tree-of-thought reasoning were recently proposed in the LLM field. Both follow the common guideline of decomposing a problem into smaller, manageable parts and then combining the solutions: the model reasons through a process before delivering an answer.

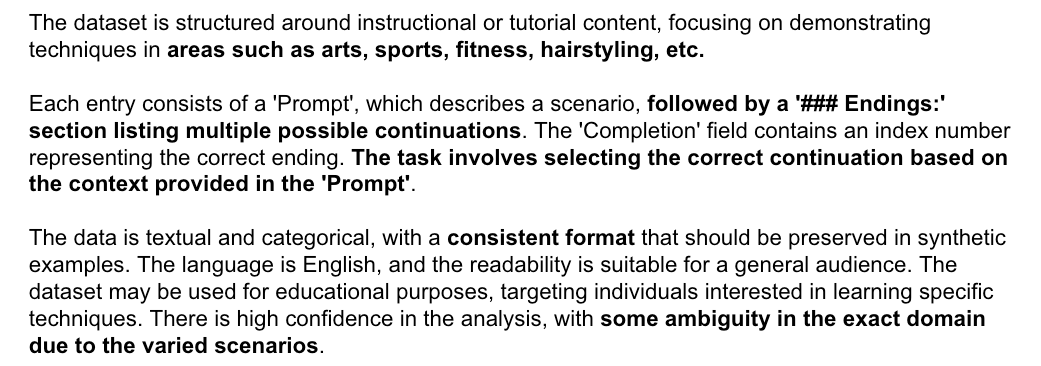

To balance context and specificity, we split the task into two stages: first capturing the task context, then generating prompts and completions. The task context consists of attributes such as domain, structure, tone, format, intended use, and target audience, and it is inferred from multiple examples to capture the generic aspects of the data. The generation stage is itself broken down further: the prompt is generated from the seed example and the inferred task context, and the completion is then produced from the seed example, the synthetic prompt, and the inferred context. This achieves a balance between context and specificity.
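The decomposition above can be sketched as follows. This is a minimal illustration, assuming `llm` is a hypothetical callable that takes a prompt string and returns generated text; the prompt wording is ours, not Predibase's. Note that only the context-inference call sees all the seeds, while each prompt and completion call sees one seed plus the shared context.

```python
from typing import Callable, Dict, List

LLM = Callable[[str], str]  # hypothetical: prompt in, generated text out

CONTEXT_ATTRIBUTES = "domain, structure, tone, format, intended use and target audience"

def infer_context(llm: LLM, seeds: List[dict]) -> str:
    """Stage 1: a single call that sees every seed, to capture the generic task context."""
    examples = "\n".join(f"- {s}" for s in seeds)
    return llm(f"Describe the {CONTEXT_ATTRIBUTES} shared by these examples:\n{examples}")

def single_pass(llm: LLM, seeds: List[dict]) -> List[Dict[str, str]]:
    context = infer_context(llm, seeds)
    rows = []
    for seed in seeds:
        # Stage 2a: the prompt is generated from the seed plus the inferred context.
        prompt = llm(f"Task context:\n{context}\n\nSeed example:\n{seed}\n\n"
                     "Write a new prompt in the same style.")
        # Stage 2b: the completion sees the seed, the synthetic prompt and the context.
        completion = llm(f"Task context:\n{context}\n\nSeed example:\n{seed}\n\n"
                         f"New prompt:\n{prompt}\n\nWrite the completion for the new prompt.")
        rows.append({"prompt": prompt, "completion": completion})
    return rows
```

For n seeds this makes 1 + 2n teacher calls, which is why the single-pass method stays relatively cheap.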

Synthetic data generation: chain of thought, single pass

In the inferred context for the HellaSwag dataset shown below, the model recognized that the task's domain spanned multiple topics, identified the structure of the prompt column, and understood the task's goal. This tells the downstream LLM calls that they need to maintain a consistent format across all generated synthetic examples and acknowledge uncertainty about the exact domain, given the varied scenarios.

Inferred context from the HellaSwag dataset, reflecting the multiple domains associated with the task, correctly identifying the structure of the prompt (a prompt followed by "###" and the possible endings), and identifying the goal of the task: select the correct ending using the context provided in the prompt.

This third method showed improved contextual understanding, greater consistency in sample quality, and a good balance between specificity and diversity. However, the quality of the inferred context depends heavily on the seed examples, and the method still faces dataset distribution shift, though less so than Methods #1 and #2. To address these limitations, we explored a fourth method called "mixture of agents".

Synthetic Data Generation Method #4: Mixture of Agents

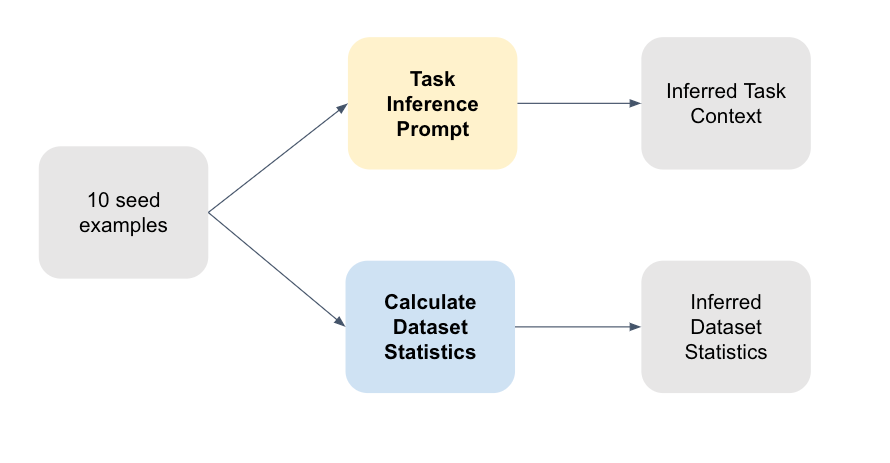

This method builds on the single-pass approach by improving both context inference and generation. Method #3 showed effective context inference, but distribution shift remained a challenge. To address this, dataset statistics such as class imbalance and prompt-completion ratios are calculated and incorporated into the prompts, aligning the model with the dataset.
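Here is a minimal sketch of the kind of statistics that could be computed from the seed rows and rendered into the prompts. The exact statistics Predibase computes are not listed here, so this is an assumption: word counts stand in for token counts, "prompt-completion ratio" is interpreted as a length ratio, and the `prompt`/`completion` column names are hypothetical.

```python
from collections import Counter
from statistics import mean

def dataset_statistics(rows, label_key=None):
    """Summarize seed rows; word counts are a rough proxy for token counts."""
    prompt_lens = [len(r["prompt"].split()) for r in rows]
    completion_lens = [len(r["completion"].split()) for r in rows]
    stats = {
        "n_rows": len(rows),
        "mean_prompt_words": mean(prompt_lens),
        "mean_completion_words": mean(completion_lens),
        "completion_to_prompt_ratio": mean(completion_lens) / mean(prompt_lens),
    }
    if label_key:  # class balance, e.g. label_key="completion" for classification
        counts = Counter(r[label_key] for r in rows)
        stats["label_share"] = {k: v / len(rows) for k, v in counts.items()}
    return stats

def stats_to_prompt(stats):
    """Render the statistics as plain text for inclusion in a generation prompt."""
    return "\n".join(f"{k}: {v}" for k, v in stats.items())
```

Conditioning generation on text like `label_share: {'pos': 0.5, 'neg': 0.5}` gives the teacher model an explicit target distribution instead of leaving it to drift.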

Synthetic Data Generation Method: Mixture of Agents. First, the context and dataset statistics are inferred from the 10 seed examples

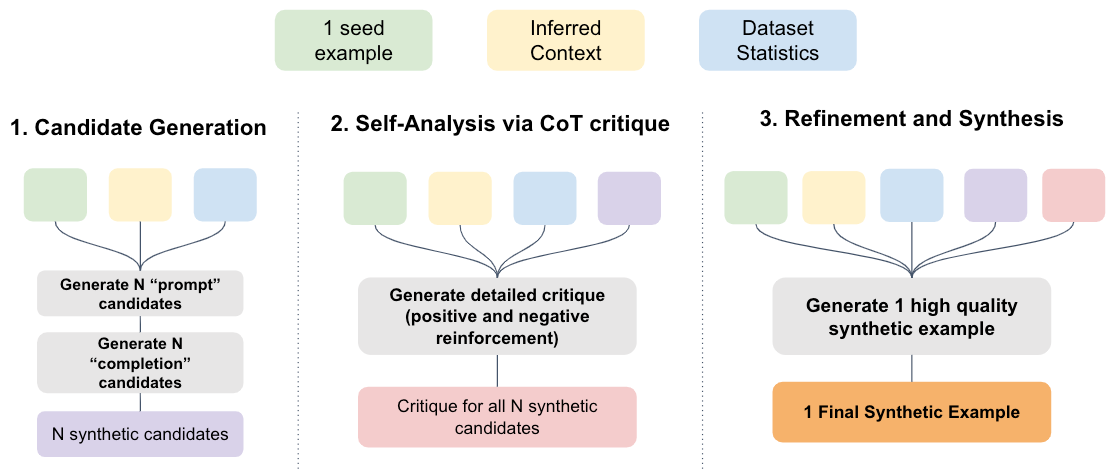

The mixture of agents (MoA) method involves three steps: candidate generation, self-analysis via chain-of-thought reasoning, and synthesis of feedback to generate a refined example. Unlike Method #3, which generates one sample per seed, it produces multiple prompt-completion candidates.

In the second phase, the MoA method employs self-analysis through chain of thought reasoning. This involves critiquing the generated examples by reflecting on the original examples, context, and dataset statistics, using specific criteria for both positive and negative reinforcement.

Finally, all information—seed example, inferred context, dataset statistics, and critiques—is combined to generate a high-quality synthetic example. This refined example will ultimately be used for fine-tuning.

Prompt-completion candidates are generated, critiqued, and synthesized into a single high-quality synthetic example.
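The propose, critique, aggregate loop can be sketched for a single seed as below. This is a minimal illustration, not Predibase's pipeline: `llm` is a hypothetical prompt-to-text callable, the prompt wording is ours, and writing one critique per candidate is our assumption about how the self-analysis step is structured.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt in, generated text out

def mixture_of_agents(llm: LLM, seed: str, context: str, stats: str, n_candidates: int = 3) -> str:
    base = (f"Task context:\n{context}\n\nDataset statistics:\n{stats}\n\n"
            f"Seed example:\n{seed}\n")
    # 1) Proposers: several independent prompt-completion candidates.
    candidates = [llm(base + f"\nPropose new prompt-completion example #{i + 1}.")
                  for i in range(n_candidates)]
    # 2) Critiquers: chain-of-thought review against seed, context and statistics.
    critiques = [llm(base + f"\nCandidate:\n{c}\n\nThink step by step: what does this "
                     "candidate do well, and where does it deviate from the seed, the "
                     "context or the statistics?")
                 for c in candidates]
    # 3) Aggregator: fuse all candidates and critiques into one refined example.
    review = "\n\n".join(f"Candidate:\n{c}\nCritique:\n{k}"
                         for c, k in zip(candidates, critiques))
    return llm(base + f"\n{review}\n\nUsing these candidates and critiques, "
               "write one final, refined example.")
```

Each synthetic example costs 2 × n_candidates + 1 teacher calls under these assumptions, which is the extra expense discussed below.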

The MoA method combines the strengths of the previous approaches: it preserves generic context while capturing specificity, and it reduces distribution shift by conditioning candidate generation on the dataset characteristics. It excels on datasets requiring detailed reasoning, reduced bias, and high accuracy. The method is slower and costlier because it makes multiple LLM calls per example, but it is designed as a one-time process that generates a comprehensive dataset. This upfront investment is worthwhile because the resulting data is used to fine-tune a cost-effective SLM that serves production traffic. By paying the initial cost, significant savings and improved performance can be achieved at scale.

Results: Fine-tuning SLMs with Synthetic Data vs. K-shot prompting GPT-4o

Now that we have introduced our synthetic data generation method, let's delve into the results and address the question: "With a limited number of examples, should you opt for K-shot learning with GPT-4o, or generate a synthetic dataset to fine-tune an open-source model?"

Experiment Setup

| Aspect | Details |
|---|---|
| Experiment Setup | Evaluated a Llama-3 8b model fine-tuned on a 1,000-example synthetic dataset generated using MoA with GPT-4 Turbo, vs. K-shot prompting with GPT-4o using k = 10, 25, 50, and 100. |
| Evaluation Tool | Used our efficient open-source evaluation harness for fast, parallel API querying with retry logic and self-tuning delays. |
| Datasets and Metric | Used CoronaNLP and HellaSwag as real-world tasks with objective metrics, avoiding biased metrics like ROUGE based on prior experience from LoRA Land. |
| Seed Examples | Carefully selected high-quality samples from the dataset. In experiments with randomly selected seed samples, results were inferior to those with a carefully selected seed set. |
| Request Size Assumptions | Assumed 1,500 prompt tokens and 800 completion tokens per request during generation; 1,000 prompt tokens and 50 completion tokens per request during inference. |

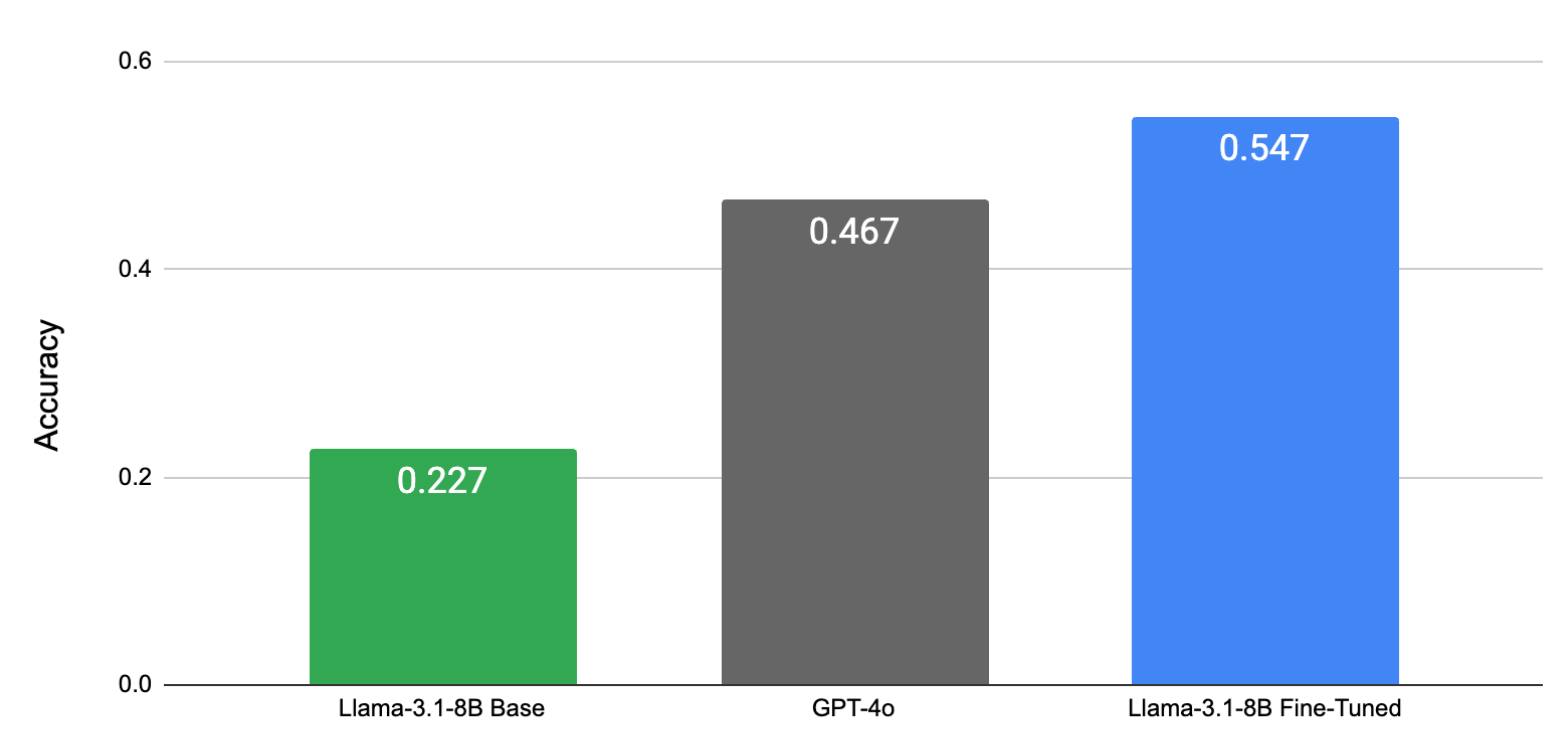

Results

14x cheaper with Predibase synthetic data generation

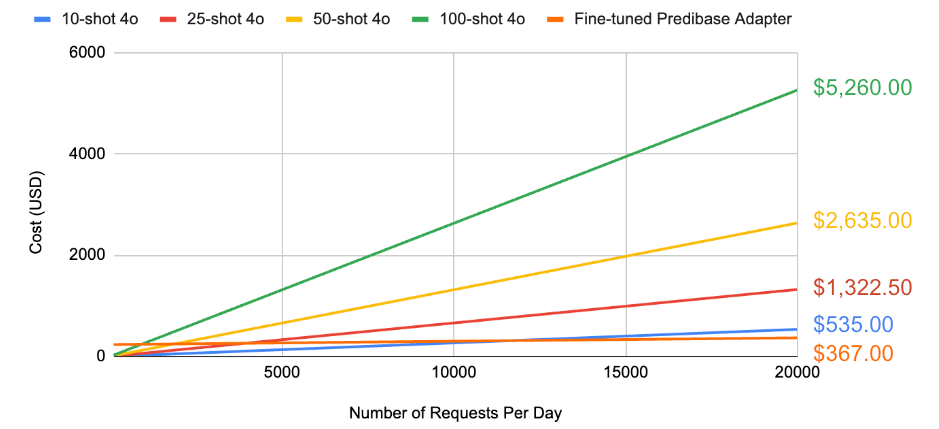

We found that while the cost of a Predibase adapter fine-tuned on synthetic data stays practically constant with respect to the number of requests, the cost of K-shot inference with GPT-4o grows with request volume and can be as much as 14 times higher at 20,000 requests per day!

Cost with Predibase vs. cost of K-shot inference with GPT-4o.
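The shape of this comparison follows from simple arithmetic: every K-shot request re-sends the k examples as prompt tokens, so the per-request bill is paid again at every call, while a fine-tuned deployment is treated here as a roughly flat daily cost. The sketch below computes the ratio between the two. All prices are placeholders to replace with current rates, and `flat_daily_cost` is a hypothetical stand-in for your deployment's daily cost; it does not reproduce the exact figures in the chart.

```python
def per_request_cost(prompt_tokens, completion_tokens, usd_per_m_input, usd_per_m_output):
    """Cost of one API request, with prices quoted per million tokens."""
    return (prompt_tokens * usd_per_m_input + completion_tokens * usd_per_m_output) / 1_000_000

def daily_cost_ratio(requests_per_day, prompt_tokens, completion_tokens,
                     usd_per_m_input, usd_per_m_output, flat_daily_cost):
    """How many times more K-shot inference costs per day than a flat-cost deployment."""
    kshot_daily = requests_per_day * per_request_cost(
        prompt_tokens, completion_tokens, usd_per_m_input, usd_per_m_output)
    return kshot_daily / flat_daily_cost
```

Because the numerator is linear in `requests_per_day` and the denominator is fixed, the ratio keeps growing with traffic, which is why the gap widens at higher request volumes.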

Fine-tuned SLMs on Synthetic data outperform GPT-4o K-shot inference

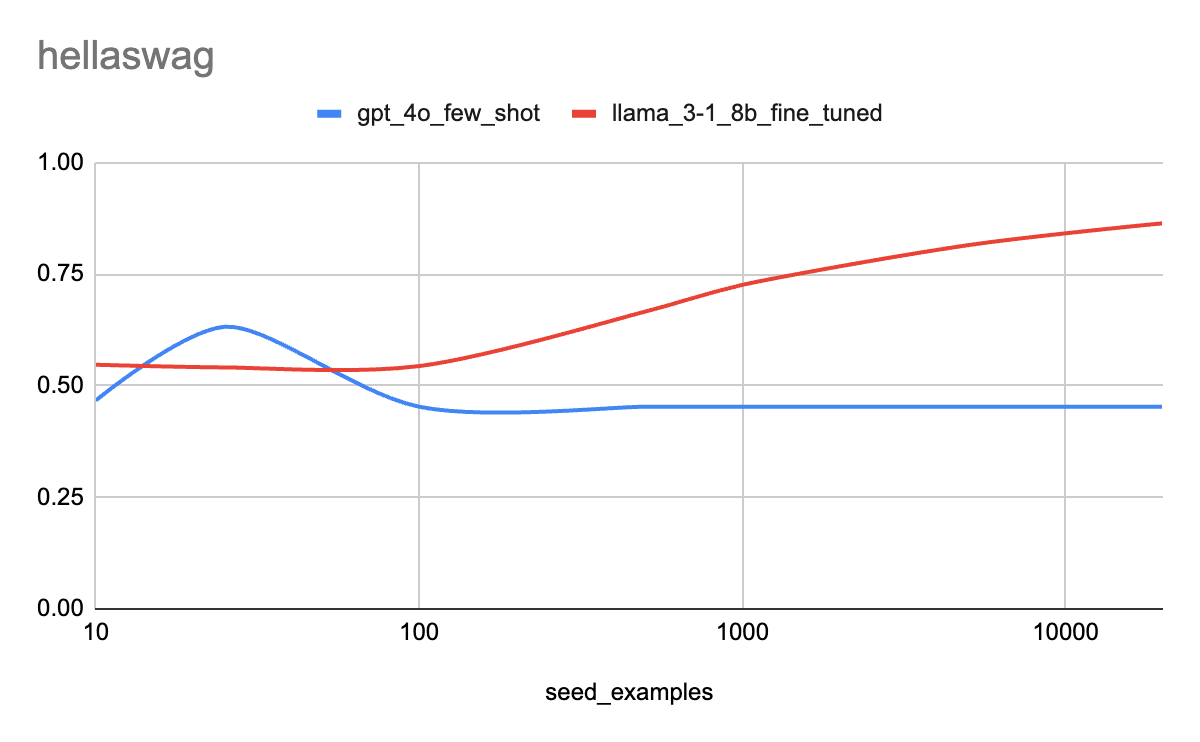

The performance comparison between GPT-4o and fine-tuned models on the HellaSwag dataset reveals that GPT-4o initially excels, but fine-tuning gains the advantage once around 80 ground truth examples are used. Beyond this point, fine-tuning continues to improve, while K-shot prompting levels off or degrades. Fine-tuning becomes especially effective for larger datasets, as K-shot prompting runs into context window limits beyond 1,000 examples.

Performance of GPT-4o vs. Llama-3-8b fine-tuned on synthetic data - HellaSwag.

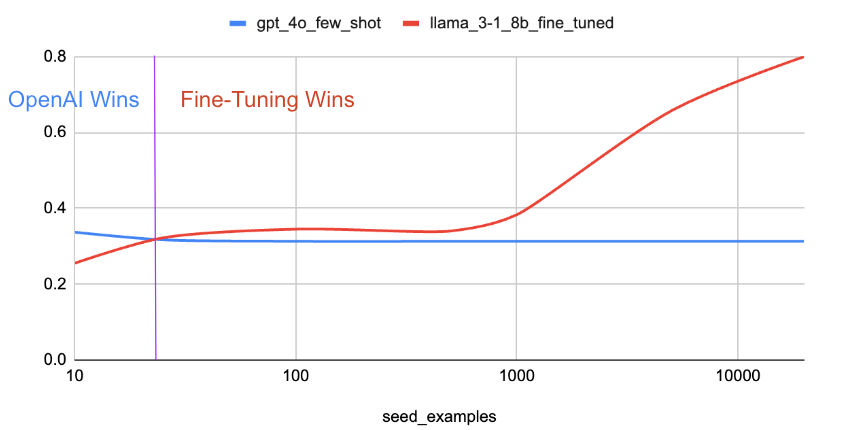

This trend varies with task complexity. For simpler tasks like CoronaNLP, the inflection point where fine-tuning surpasses GPT-4o K-shot prompting occurs even earlier. Fine-tuned models generally perform better on narrower tasks, as demonstrated in previous work, so for complex tasks the largest benefits of fine-tuning come when you have ample data. You can refer to our fine-tuning index to find the best open-source LLM to fine-tune for your task. Even with limited data, fine-tuning still delivers a significant boost, though the improvement may be less pronounced.

Performance of GPT-4o vs Llama-3-8b fine-tuned on synthetic data - Corona_NLP.

Alternative Approach: Gretel.ai Synthetic Data Generation

In a previous blog post, we presented a case study on using synthetic data generated by Gretel Navigator, combined with fine-tuning on Predibase, to obtain high accuracy on a text-to-SQL use case. To address data scarcity for fine-tuning, leveraging third-party products that specialize in synthetic data generation, such as Gretel, can be a viable option. Unlike Predibase’s MoA method, the Gretel system combines AI feedback, agentic workflows, and synthetic data best practices, and it provides end-to-end synthetic data generation tools in one platform, ensuring data quality and privacy. We ran parallel experiments with Gretel synthetic data and fine-tuning on Predibase across various tasks. Evaluating those models with the same evaluation harness reveals the same trend: smaller models fine-tuned on high-quality synthetic data can even exceed models fine-tuned on the original dataset, as well as commercial LLMs such as GPT.

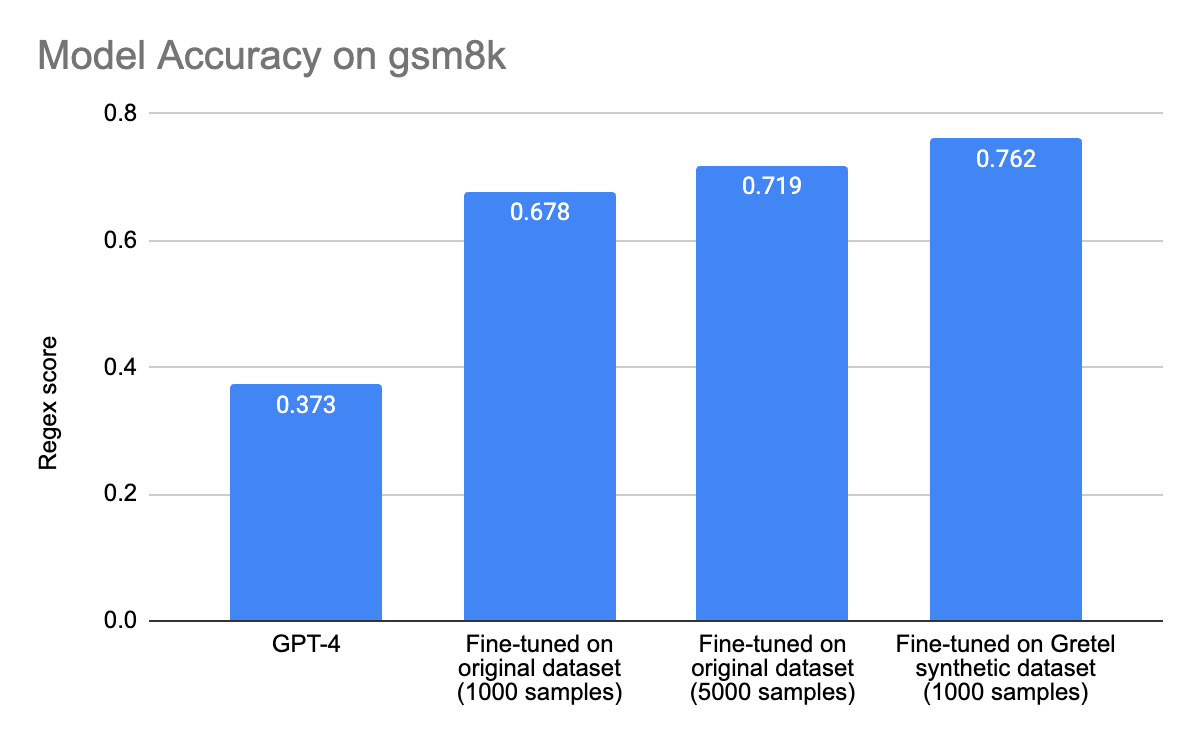

The gsm8k dataset consists of high-quality, linguistically diverse grade school math word problems and supports the task of question answering on basic mathematical problems that require multi-step reasoning. We used the Gretel system to generate 1,000 rows of synthetic data from 25 seed samples, fine-tuned Llama3.1-8b-instruct on both the synthetic data and the real data, and evaluated the models using regex matching. We found that models fine-tuned on Gretel-generated synthetic data outperformed models fine-tuned on the real gsm8k data, and that GPT-4 did not do well on these math problems at all.

Performance of GPT-4 vs Llama-3-8b fine-tuned on real data vs. synthetic data - gsm8k.
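Regex-based scoring for math word problems usually means extracting a final number from the model output and comparing it with the reference answer. The sketch below is one plausible version, not the exact regex used in our evaluation: it relies on the fact that gsm8k reference answers end with `#### <answer>`, and otherwise falls back to the last number in the text.

```python
import re

# Optional minus sign, digits with optional thousands commas, optional decimal part.
_NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")

def extract_final_number(text):
    """Prefer the value after '####'; otherwise take the last number in the text."""
    if "####" in text:
        text = text.split("####")[-1]
    matches = _NUMBER.findall(text)
    if not matches:
        return None
    return float(matches[-1].replace(",", ""))

def exact_match_accuracy(predictions, references):
    """Share of predictions whose extracted number equals the reference's."""
    hits = 0
    for pred, ref in zip(predictions, references):
        p, r = extract_final_number(pred), extract_final_number(ref)
        if p is not None and p == r:
            hits += 1
    return hits / len(references)
```

Comparing parsed floats rather than raw strings means answers like "1,000" and "1000" are scored as equal.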

Tutorial: How to Generate Synthetic Data and Fine-tune a SLM with Predibase

Using the synthetic data generator on Predibase is very easy. First, we’ll show you how to do this in just a few clicks with the Predibase UI. We also have a Synthetic Generation and Fine-tuning Notebook that shows how to do this directly with our SDK in a few lines of code.

Step 1: Prepare your seed dataset for synthetic data generation

To use the data augmentation feature, you need at least 10 rows of sample data uploaded to the platform. This sample data serves as the seed dataset and should be a good representation of what you want your actual data to look like in terms of statistical distribution and content diversity. All synthetic data will be generated based on the context inferred from the seed data.
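Before uploading, a quick local check can catch seed sets that are too small, incomplete, or duplicated. This is a minimal sketch under stated assumptions: the `prompt` and `completion` column names are hypothetical (adjust them to your dataset), and the 10-row minimum is taken from the requirement above. It is not a Predibase validation API.

```python
import csv
import io

REQUIRED_COLUMNS = ("prompt", "completion")  # assumption: adjust to your dataset's columns
MIN_ROWS = 10  # minimum seed size stated above

def validate_seed_csv(text):
    """Return a list of human-readable problems; an empty list means the seed set looks usable."""
    reader = csv.DictReader(io.StringIO(text))
    missing = [c for c in REQUIRED_COLUMNS if c not in (reader.fieldnames or [])]
    if missing:
        return [f"missing column(s): {', '.join(missing)}"]
    rows = list(reader)
    problems = []
    if len(rows) < MIN_ROWS:
        problems.append(f"only {len(rows)} row(s); need at least {MIN_ROWS}")
    empty = sum(1 for r in rows if not all((r.get(c) or "").strip() for c in REQUIRED_COLUMNS))
    if empty:
        problems.append(f"{empty} row(s) with an empty prompt or completion")
    duplicates = len(rows) - len({tuple(r[c] for c in REQUIRED_COLUMNS) for r in rows})
    if duplicates:
        problems.append(f"{duplicates} duplicate row(s)")
    return problems
```

Duplicates matter more than usual here: with only 10 seeds, every repeated row removes diversity that the generator would otherwise build on.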

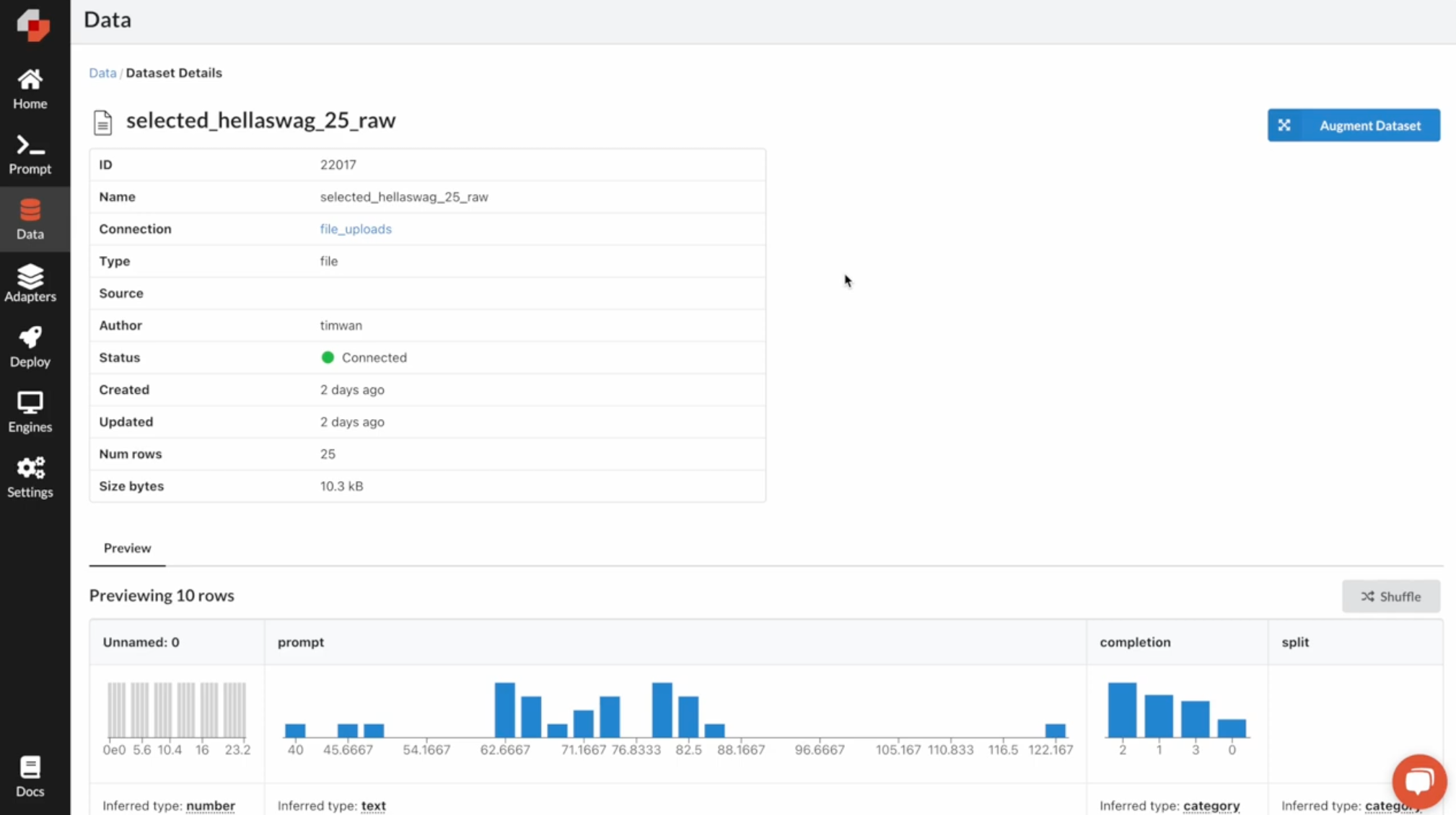

Once the seed data is in the Data tab of the Predibase UI, you will see a preview of the dataset as well as its summary statistics. Simply click “Augment Dataset” to start augmenting your seed dataset.

Prepare data for synthetic data generation. Click "Augment Dataset" button from the data tab.

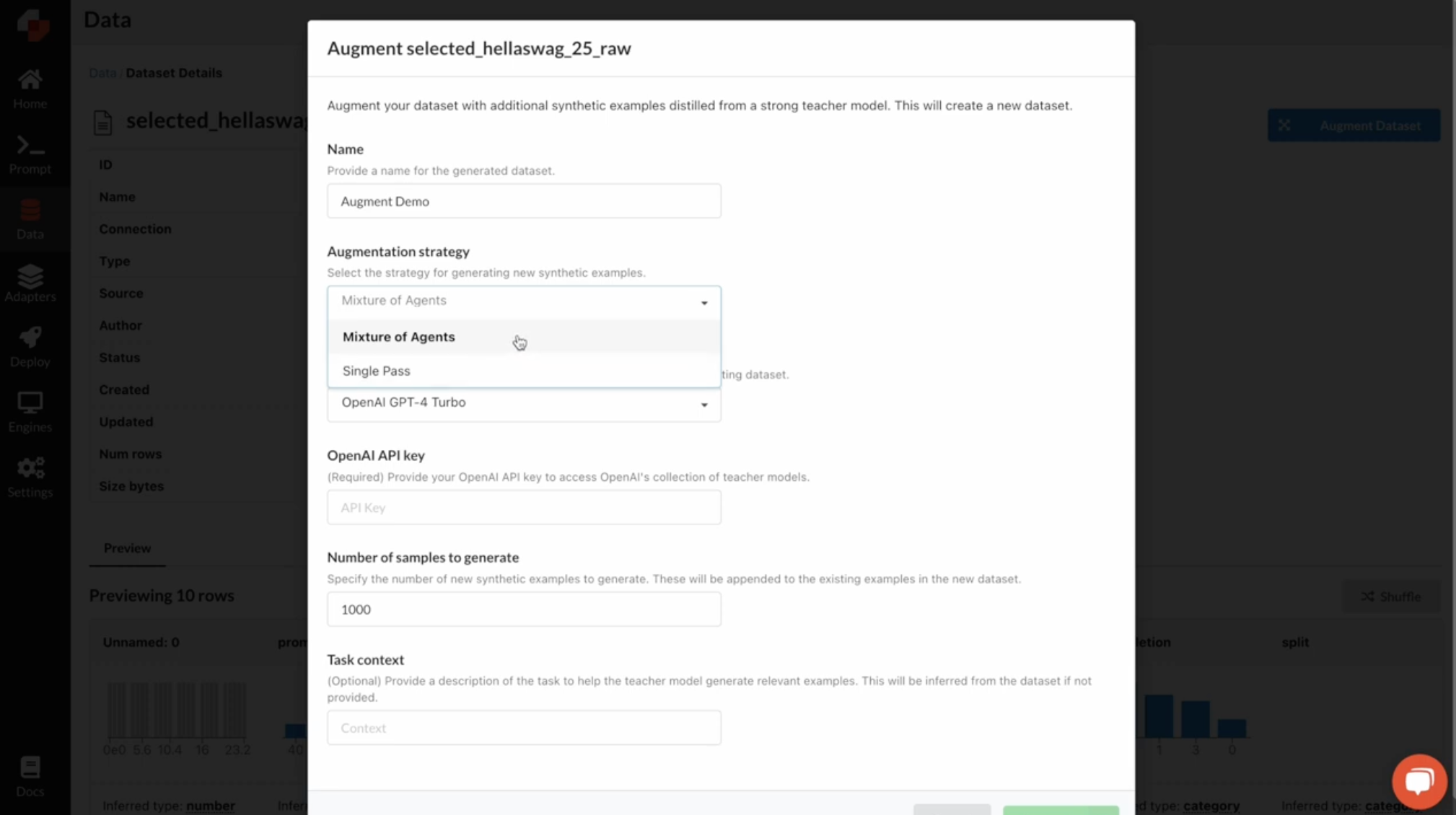

Step 2: Choose the augmentation strategy

Once you click, you can configure how you want your dataset to be augmented. You can select your augmentation strategy in the dropdown menu, whether you want to use the Single Pass or the Mixture of Agents approach.


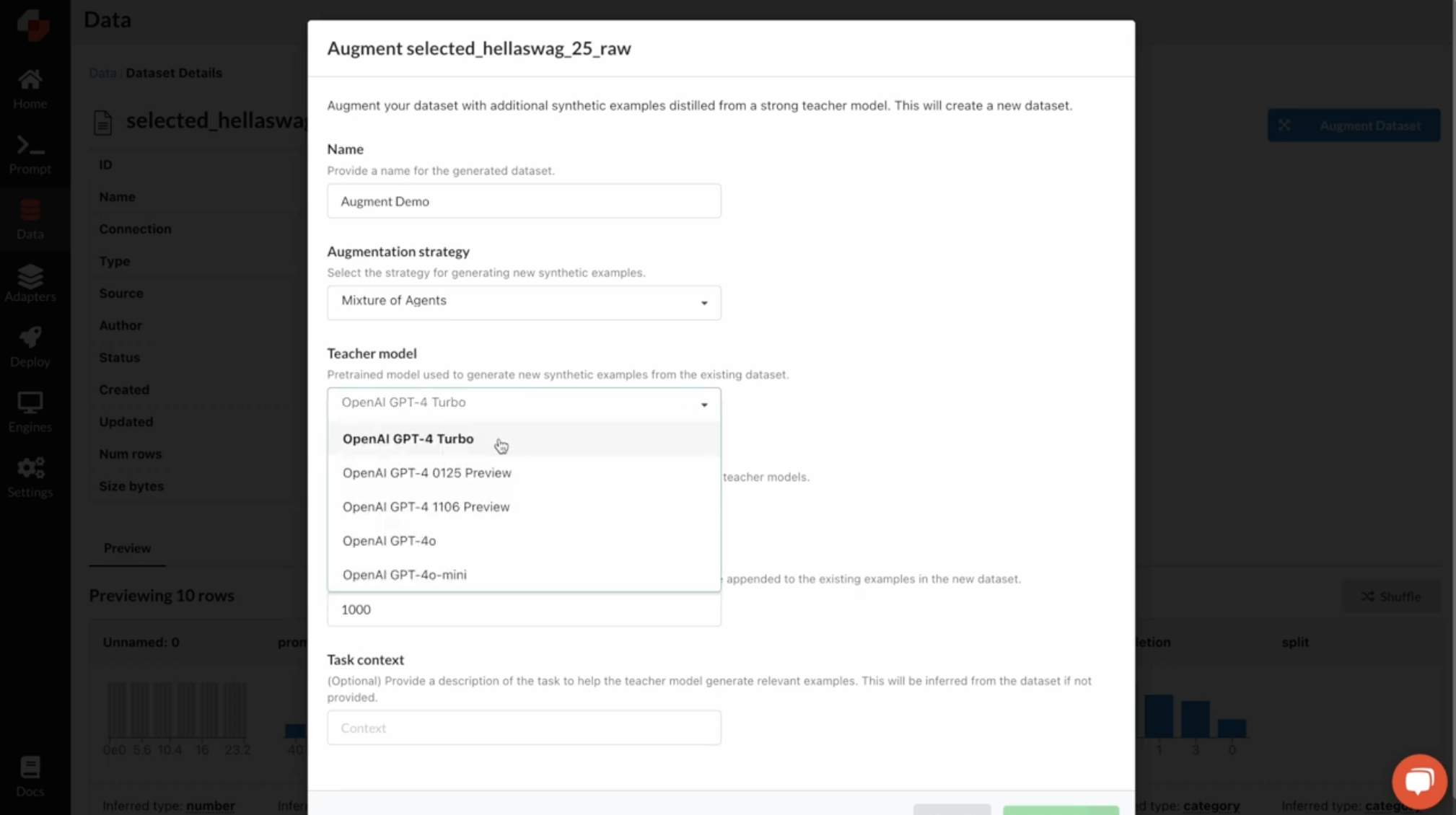

Step 3: Choose the teacher model

We support a list of OpenAI GPT models to generate new synthetic examples, and we’re continuously adding new models to the list as options.

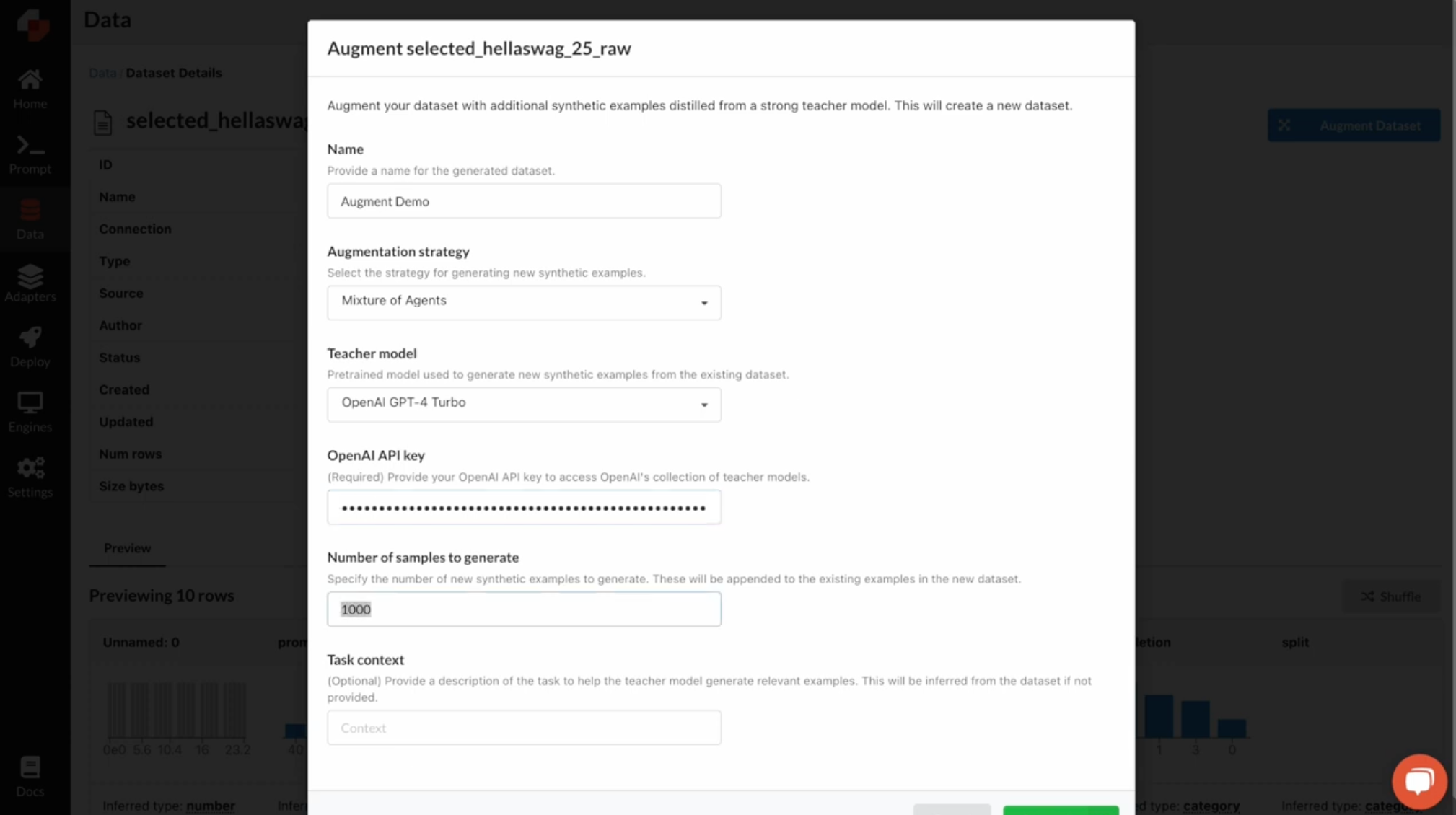

Step 4: Pass in your API key

You’re responsible for paying OpenAI (see https://openai.com/api/pricing/) for the synthetic data examples the teacher models generate. You can estimate the projected cost from the OpenAI pricing information, your augmentation strategy, and the number of examples you want to generate.
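A back-of-the-envelope projection multiplies the number of examples by the teacher calls each strategy makes and by the tokens per call. The sketch below uses the generation request sizes from our experiment table (1,500 prompt and 800 completion tokens) as defaults. The per-strategy call counts are our own assumptions based on the method descriptions (2 calls per example for single pass; proposers, critiques, and one aggregator for MoA), not Predibase's documented numbers. The one-off context-inference call is ignored, and the strategy strings and prices are placeholders.

```python
def calls_per_example(strategy, n_candidates=3):
    """Assumed teacher calls per synthetic example (not Predibase's documented count)."""
    if strategy == "single_pass":
        return 2  # one prompt call + one completion call
    if strategy == "mixture_of_agents":
        return 2 * n_candidates + 1  # proposers + critiques + one aggregator
    raise ValueError(f"unknown strategy: {strategy}")

def projected_augmentation_cost(n_examples, strategy, usd_per_m_input, usd_per_m_output,
                                prompt_tokens=1500, completion_tokens=800, n_candidates=3):
    """Estimated one-time teacher cost in USD, with prices quoted per million tokens."""
    calls = n_examples * calls_per_example(strategy, n_candidates)
    per_call = (prompt_tokens * usd_per_m_input + completion_tokens * usd_per_m_output) / 1_000_000
    return calls * per_call
```

Plug in the current input and output prices for your chosen teacher model; under these assumptions MoA with three candidates costs about 3.5 times as much as single pass for the same number of examples.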

Step 5: Choose the number of samples you wish to generate

We chose 1000 for our experiments. These will be appended to your existing examples in the new dataset.

Step 6: Pass in the task context

This is where you can specify your own details about the task to help the model generate high-quality samples, or you can leave it blank to let Predibase infer the task-specific context from your seed dataset. Our previous experiments showed that adding more specifics and details about the data you want to generate improves synthetic data quality.

Step 7: Inspect your newly generated dataset

Once the augmentation process finishes, you have a new dataset with the name you provided. You can inspect a few examples and check its statistics, then start a training job using this dataset.

Synthetic data generation via Python SDK

As mentioned above, you can also use our notebook tutorial to accomplish this with our Python SDK. The notebook illustrates the end-to-end process of generating synthetic data, fine-tuning a LoRA adapter with a small language model (llama-3.1-8b) using your synthetic dataset, and performing evaluations on the adapter using the HellaSwag dataset.

Key Takeaways: Generating Synthetic Data

Through this journey, we learned several key insights about synthetic data generation:

- Data Quality: The quality of your base data is crucial. Transitioning from arbitrarily selected to carefully chosen samples significantly enhances the quality of synthetically generated data. Even with limited data, prioritize high quality.

- Data Quantity: Quantity is also important; more ground truth samples lead to better performance. Synthetic data generation helps level the playing field.

- Fine-Tuning vs. K-shot Prompting: Ultimately, fine-tuning surpasses GPT-4o K-shot prompting, emphasizing the importance of data quantity.

Next Steps: Train Your Own SLM That Beats GPT-4

Don't let a small amount of data prevent you from fine-tuning a highly accurate SLM. With just 10 examples, our synthetic data generator enables efficient fine-tuning. Explore our intuitive workflow, efficient fine-tuning, and serverless auto-scaling infrastructure with $25 in free credits.