Check out the most recent improvements to Predibase! Whether you’re looking to migrate from OpenAI with our new OpenAI API compatible endpoints, want to start fine-tuning models like Mixtral-8x7B for free, or are curious about some of our recent performance improvements, read on!

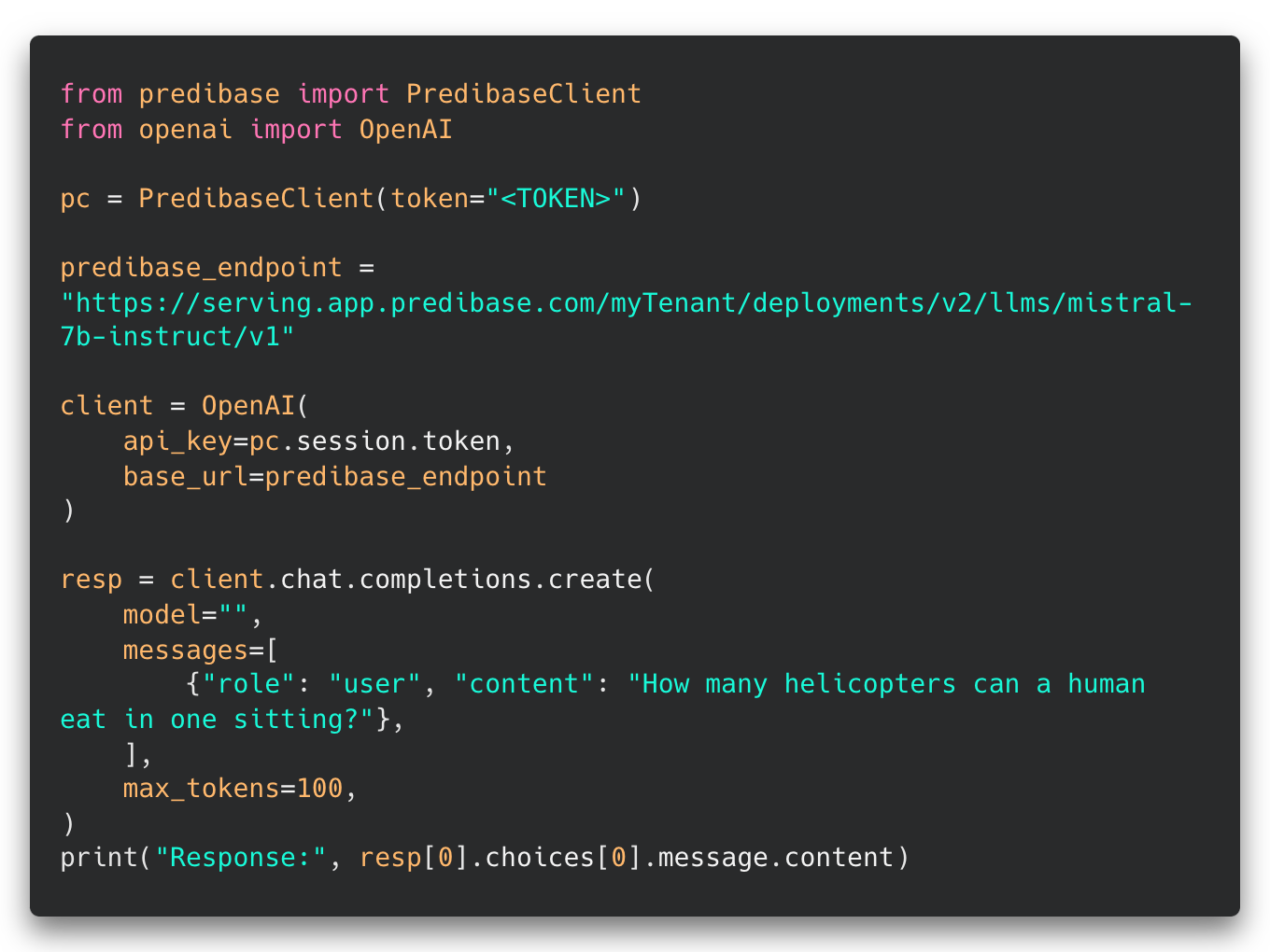

Introducing OpenAI API compatible endpoints

We’ve made migrating from OpenAI to Predibase even easier: with our new OpenAI API compatible endpoints, switching takes only a few changed lines of code. The endpoints are available through the Python SDK or the REST API. Learn more in our docs.
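To give a feel for what "a few lines" might look like, here is a minimal sketch that builds an OpenAI-style chat completions request using only the Python standard library. The base URL, token, and model name are hypothetical placeholders, and the `/chat/completions` route is assumed from the OpenAI API convention rather than confirmed for Predibase, so check our docs for the real values before sending anything.

```python
import json
import urllib.request

# Hypothetical placeholders: take the real base URL, token, and model
# name from the Predibase docs. None of these values are real.
BASE_URL = "https://example.invalid/v1"
API_TOKEN = "YOUR_PREDIBASE_API_TOKEN"


def build_chat_request(base_url, api_token, model, prompt):
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        # Assumed route, following the OpenAI API convention.
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(BASE_URL, API_TOKEN, "mixtral-8x7b", "Hello!")
# To actually send it: urllib.request.urlopen(request)
```

In this sketch, migrating an existing OpenAI integration would come down to swapping the base URL, the token, and the model name, while the request body keeps the same shape.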

Free trials now come with $25 in credits!

We’ve restructured our free trial and are excited to now offer a 30-day trial period that comes with $25 in credits. This makes Predibase the first platform to offer you the ability to fine-tune models such as Llama-2-70b and Mixtral-8x7B on cutting-edge hardware for free! Try it today!

100x Less Latency with Optimized LLM Inference Engine

We’ve significantly revamped our LLM Inference Engine, reducing latency by over 100x when scaling workloads to thousands of concurrent requests! As a result, users will see noticeably faster response times on both serverless endpoints and dedicated deployments. Stay tuned for more detailed updates and benchmarks coming soon.

Token Streaming

By popular demand, we’ve added a new endpoint, /generate_stream, for streaming responses from any model hosted on Predibase.
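As a rough illustration of consuming a streamed response, the sketch below reassembles text from a sequence of event lines. It assumes the endpoint emits server-sent events whose `data:` payload is JSON with a `token.text` field and a `token.special` flag; that format is an assumption on our part here, not something this post specifies, and `iter_stream_tokens` is a hypothetical helper name. The sample lines are made up for the example.

```python
import json


def iter_stream_tokens(lines):
    """Yield token text from an iterable of server-sent-event lines.

    Assumes each event looks like: data:{"token": {"text": "..."}}
    (an assumed format; verify against the actual response).
    """
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        event = json.loads(line[len("data:"):])
        token = event.get("token") or {}
        if token.get("special"):
            continue  # skip special tokens such as end-of-sequence markers
        text = token.get("text")
        if text:
            yield text


# Made-up sample events standing in for a live HTTP response body.
sample = [
    b'data:{"token": {"text": "Hello", "special": false}}\n',
    b"\n",
    b'data:{"token": {"text": " world", "special": false}}\n',
]
streamed = "".join(iter_stream_tokens(sample))
```

With a live connection, the same generator could be fed the response object's line iterator, so each token can be displayed as soon as it arrives instead of waiting for the full completion.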

Enhanced API Token Management

Users can now expire, delete, and view the tokens in use at the account level. This lets customers keep track of their tokens, disable old tokens if they’re ever leaked, and rotate tokens on a regular cadence.

Azure VPC self-serve provisioning workflow

In addition to offering a fully-managed cloud deployment, Predibase can be deployed in your Virtual Private Cloud (VPC) to give you more control over your infrastructure and data. We’ve long offered support for AWS and recently released support for Microsoft Azure. Read more in our documentation about how to quickly deploy Predibase into your Azure cloud environment.