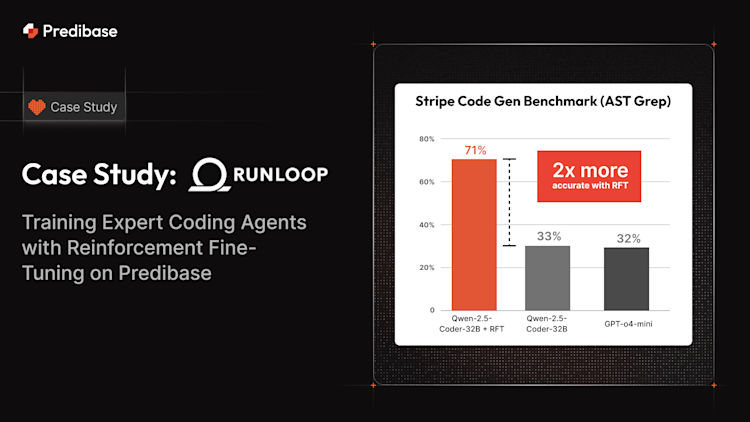

Keeping up with complex software systems is one of the biggest challenges in modern software engineering. LLMs like GPT or Code LLaMA often hallucinate methods, misinterpret APIs, or reference outdated docs, especially in high-stakes use cases like Stripe API integrations. To solve this, we used Reinforcement Fine-Tuning (RFT) to turn a general-purpose code LLM into a domain-specific coding expert. Using just 10 prompts and Runloop’s Devboxes with Predibase’s fine-tuning stack, we achieved a 2x improvement in API call accuracy—transforming Qwen2.5-32B-Coder into a reliable expert coding agent.

Why General-Purpose LLMs Struggle with API Integration

Staying up to date with evolving software systems is one of the biggest challenges developers face today. Domain expertise, especially in nuanced internal codebases or intricate third-party APIs, is incredibly valuable, but hard to scale. The AI software engineer of the future needs to be an expert, not a generalist. That’s why your coding assistant should be reaching out to AI agents trained on your domain-specific codebase or the latest docs for mission-critical APIs. You can’t count on coding help from LLMs that are months behind the latest updates.

Predibase makes it easy to get there. With reinforcement fine-tuning (RFT) and as few as 10 prompts, you can inject expert-level behavior into your AI assistants, fast. In our experiments, we were able to improve Qwen2.5-32B-Coder’s performance by over 2x, from 33% to 71%! Couple that with Runloop’s lightning-fast Devboxes and coding-specific grading functions, and you can turn any general Code Model into a coding domain expert.

To put this to the test, we chose a highly relevant challenge: building an expert coding agent to help us integrate Stripe Billing. If you’ve ever asked ChatGPT to handle a complex API integration, only to get inaccurate calls or code that ignores your libraries, then you understand the struggle. Our own Stripe use case involved metered usage, tiered pricing, trial credits, and more - a perfect storm of complexity. So we asked: could a fine-tuned agent, built with reinforcement learning and only 10 rows of data, deliver the expert support we need?

Case Study: Challenges of Stripe API Integration with LLMs

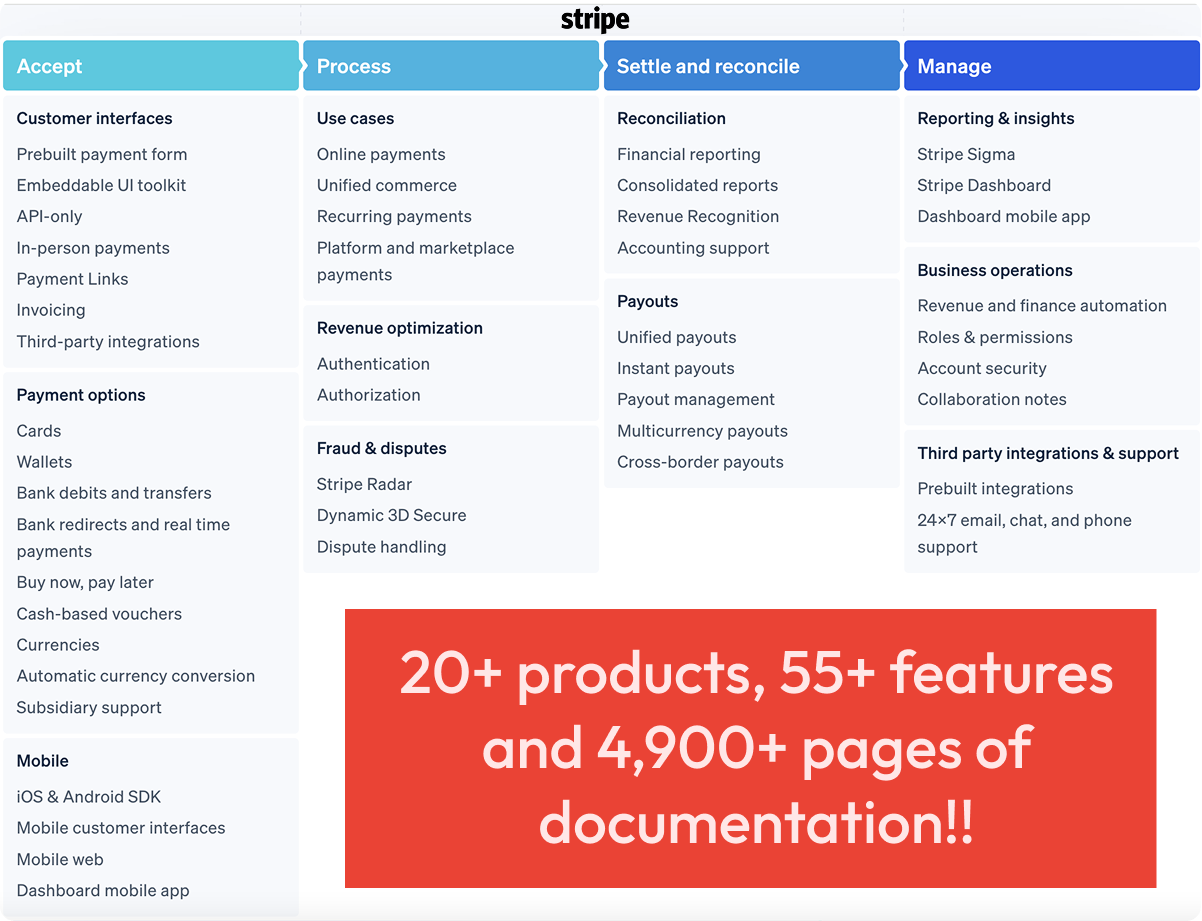

Stripe is arguably the easiest way to add payments to your app/website. But, as soon as you go beyond the simplest of use cases, even your Stripe integration starts becoming pretty complex (Stripe offers 20+ products, 55+ features). You have to think about flat-rate pricing, per-seat pricing, metered billing, subscription schedules, rate cards, etc. We encountered this ourselves when trying to integrate Stripe into our platform so we know the pain!

The Stripe API is complex with 20+ products and 4,900+ pages of documentation

Given how widespread Stripe API integration is, we decided to use it as our use case for showcasing the capabilities of RFT.

Where do vanilla LLMs stand on coding tasks?

We evaluated o3-mini and o4-mini and the results were familiar and frustrating:

- API misuse – e.g., confusing Balance Transactions with Credit Grants.

- Hallucinated methods – the LLMs made calls to methods like .usageEvents, which is a legacy method that doesn’t exist in newer API versions.

- Ignored best practices – idempotency keys to prevent double counting usage? Never mentioned.

- Doc-blindness – even when we linked directly to Stripe docs (integration guides), the model often picked outdated information or failed to follow the doc’s instructions.

What was surprising is that even including Stripe documentation within the prompt did not solve these problems. The missteps above are typical for these types of use cases, and they cost real engineering hours. We needed something more accurate for our task.
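
To make the idempotency point concrete, here is a minimal sketch of deriving a deterministic idempotency key for a usage event, so that a retried request carries the same key as the first attempt. The helper name, the choice of fields, and the key prefix are assumptions for illustration, not part of our actual integration.

```python
import hashlib


def usage_idempotency_key(customer_id: str, event_name: str, timestamp: int, quantity: int) -> str:
    """Derive a stable key from the fields that identify one usage event.

    Hypothetical helper: the field choice and the "usage-" prefix are illustrative.
    """
    raw = f"{customer_id}|{event_name}|{timestamp}|{quantity}"
    digest = hashlib.sha256(raw.encode("utf-8")).hexdigest()
    return f"usage-{digest[:32]}"


# A retry of the same event yields the same key, so the server can deduplicate it.
first_try = usage_idempotency_key("cus_123", "api_call", 1_700_000_000, 5)
retry = usage_idempotency_key("cus_123", "api_call", 1_700_000_000, 5)
# A different event (later timestamp) yields a different key.
other = usage_idempotency_key("cus_123", "api_call", 1_700_000_060, 5)
```

The resulting key would then be sent along with the request; Stripe accepts an idempotency key on POST requests, which is what prevents a retried usage report from being counted twice.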

Challenges in API Integration with LLMs

Integrating APIs is a complex task and one of the many reasons software engineering remains in such high demand. But what exactly makes API integration so challenging, especially when using LLMs? There are four key challenges to overcome when using LLMs for complex coding tasks:

- Translating Business Requirements into API Calls. APIs evolve rapidly, sometimes weekly, making it difficult for AI models to stay current. When trained on outdated data, these models can offer stale suggestions or even hallucinate non-existent endpoints.

- Accurately Composing API Calls. Real-world integrations often require stitching together multiple usage patterns from scattered documentation. LLMs frequently struggle with this synthesis, leading to incorrect or incomplete API calls.

- Embedding API Calls into Real Projects. Even if an API call is technically correct, integrating it into a working project (like a Next.js app) introduces additional complexity. AI-generated code can miss critical contextual details, resulting in broken or impractical implementations that sometimes take longer to debug than writing from scratch.

- Providing Clear Documentation and Explanations. A good integration isn’t just about working code; it’s also about code that’s understandable and maintainable. Off-the-shelf LLMs often produce code that looks correct but fails during execution or lacks clarity, making it hard for developers to trust or inspect.

How Reinforcement Fine-Tuning (RFT) Solves Code Generation Problems

While the overall challenge is significant, there’s one promising aspect that points toward a solution: although generating a correct API integration is difficult, verifying its correctness is relatively easy. It may take an LLM multiple attempts to get all four parts of the solution right, but once a complete solution is produced, it's straightforward to test and confirm whether it works. This is precisely the type of problem that reinforcement learning-based model fine-tuning (RFT) is designed to solve.

Unlike traditional supervised model tuning, RFT does not require human generated labels. Instead, it relies on “graders” to score the output of the model. In our case of Stripe integration, we have two types of graders: format check and code correctness check. The former checks if the model output is formatted for correct parsing while the latter checks that the model response actually solves the task. Let’s dive deeper into how all of this works.

How We Designed a Benchmark for API Code Generation

The first step in reinforcement learning fine-tuning (RFT) is establishing a rigorous benchmark so we can measure performance improvement against a baseline. We needed to evaluate our tuned model systematically, covering the real-world complexities of Stripe integrations. We also needed a scalable method to generate test cases for our benchmark.

We followed the steps below, incorporating human intervention and augmentation at each stage to ensure high-quality results:

Step 1. Generating Realistic Prompts

Our first goal was to develop a set of realistic questions that a software engineer might ask of a Stripe API integrations expert. We wanted to generate a diverse set of real-world questions so we created a series of developer and business personas and prompted GPT-4o to create questions for those users based on Stripe’s official integration guides.
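
As a rough sketch of this step, the persona and guide combinations can be expanded into question-generation prompts before they are sent to the LLM. The personas, guide titles, and template wording below are hypothetical placeholders, not the ones we used.

```python
from itertools import product

# Hypothetical personas and guide titles, for illustration only.
PERSONAS = [
    "backend engineer at a SaaS startup",
    "finance lead defining pricing rules",
]
GUIDES = ["usage-based billing", "subscription trials"]

TEMPLATE = (
    "You are a {persona}. Using the Stripe integration guide on {guide}, "
    "write one realistic question you would ask a Stripe integration expert."
)


def build_generation_prompts(personas: list[str], guides: list[str]) -> list[str]:
    """Return one question-generation prompt per (persona, guide) pair."""
    return [TEMPLATE.format(persona=p, guide=g) for p, g in product(personas, guides)]


generation_prompts = build_generation_prompts(PERSONAS, GUIDES)
```

Each generated prompt would go to the question-writing model, with a human reviewing and editing the resulting questions.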

Step 2. Creating Problems with Varying Levels of Complexity

To simulate real-world usage, we crafted diverse prompts with varying complexity levels based on how many Stripe features were required:

- Simple integrations: straightforward, one- or two-step integrations that followed a single guide (e.g., Stripe Checkout).

- Medium integrations: multi-step integration tasks that require combining knowledge from multiple linked integration guides (e.g., Stripe Terminal).

- Advanced integrations: tasks combining multiple features from across multiple integration guides, sometimes in novel undocumented ways (e.g., coupons + metered billing).

Step 3. Creating Graders

Instead of constantly checking if the model output “looks right” we needed a way to automatically score model outputs. So, we created scoring functions focused on two key areas:

- Response Format Validation: we checked that responses followed a consistent markdown structure with clearly labeled code blocks, explicit file names, and accompanying explanations.

- Correct API usage: we used the AST-grep library to search generated code blocks for specific Stripe API calls and validate that their parameters matched integration guide specifications.
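
A format grader of the first kind can be sketched as a small deterministic function. The layout it checks (a "File:" line with the path in backticks, followed by a fenced block with a language label, plus some prose outside the blocks) is an assumed convention for illustration; our actual format rules may differ.

```python
import re

FENCE = "`" * 3  # built at runtime so this example can itself sit inside a fenced block

# Assumed layout: a "File: `path`" line, then a fenced block with a language label.
BLOCK_RE = re.compile(
    r"^File: `(?P<path>[^`\n]+)`\n+" + FENCE + r"(?P<lang>\w+)\n(?P<code>.*?)\n" + FENCE + r"[ \t]*$",
    re.DOTALL | re.MULTILINE,
)


def format_score(response: str) -> float:
    """Return 1.0 if every fenced block is labeled and explanatory prose remains, else 0.0."""
    blocks = list(BLOCK_RE.finditer(response))
    fence_count = response.count(FENCE)
    # Each well-formed block contributes exactly two fences (open and close).
    all_blocks_labeled = bool(blocks) and fence_count == 2 * len(blocks)
    # Whatever is left after removing the labeled blocks counts as explanation.
    prose = BLOCK_RE.sub("", response).strip()
    return 1.0 if all_blocks_labeled and prose else 0.0
```

Because the score depends only on the response text, the same output always receives the same reward.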

Many more graders are possible, such as linting and type checking, but we wanted to keep our evaluation simple, so we focused on the two graders above. Also, while the AST-grep check is not the ultimate proof of code correctness, it does provide a concrete signal for measuring directional improvement of the model.

One great thing about these graders is that all outputs are deterministically verifiable. Thus, it eliminates ambiguity during evaluation. This is especially critical for RFT, where clear, objective feedback is essential for effective training and avoiding noisy signals.
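
To illustrate what a deterministic API-usage check looks like, the sketch below uses Python's standard `ast` module rather than AST-grep, and only handles Python code. The expected call and required parameter names passed in are examples chosen for illustration, not our benchmark's actual rules.

```python
import ast


def dotted_name(node: ast.AST) -> str:
    """Rebuild a dotted name such as 'stripe.checkout.Session.create' from an AST node."""
    parts = []
    while isinstance(node, ast.Attribute):
        parts.append(node.attr)
        node = node.value
    if isinstance(node, ast.Name):
        parts.append(node.id)
        return ".".join(reversed(parts))
    return ""  # not a plain dotted name (e.g. a call result or subscript)


def api_call_score(code: str, expected_call: str, required_kwargs: set[str]) -> float:
    """Return 1.0 if `code` calls `expected_call` with all `required_kwargs`, else 0.0."""
    try:
        tree = ast.parse(code)
    except SyntaxError:
        return 0.0  # unparseable code cannot contain a valid call
    for node in ast.walk(tree):
        if isinstance(node, ast.Call) and dotted_name(node.func) == expected_call:
            passed = {kw.arg for kw in node.keywords if kw.arg is not None}
            if required_kwargs <= passed:
                return 1.0
    return 0.0
```

A structural check like this rejects a hallucinated method name outright, since no call node with the expected dotted name is found, and it rejects a correct method called without its required parameters.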

RL Fine-Tuning with Predibase

With our comprehensive benchmark in hand, we were ready to launch our RL fine-tuning job. We worked with the Predibase team and leveraged Predibase’s RL fine-tuning platform to train a Qwen2.5-32B-Coder model. RL fine-tuning allowed us to use a small, controlled set of task prompts while still getting detailed feedback through our scorers.

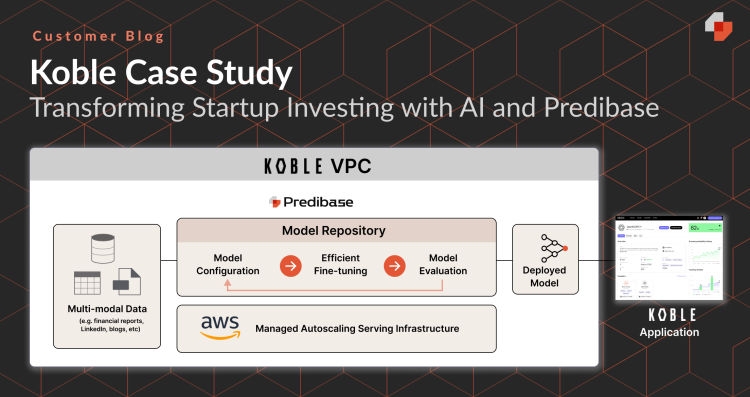

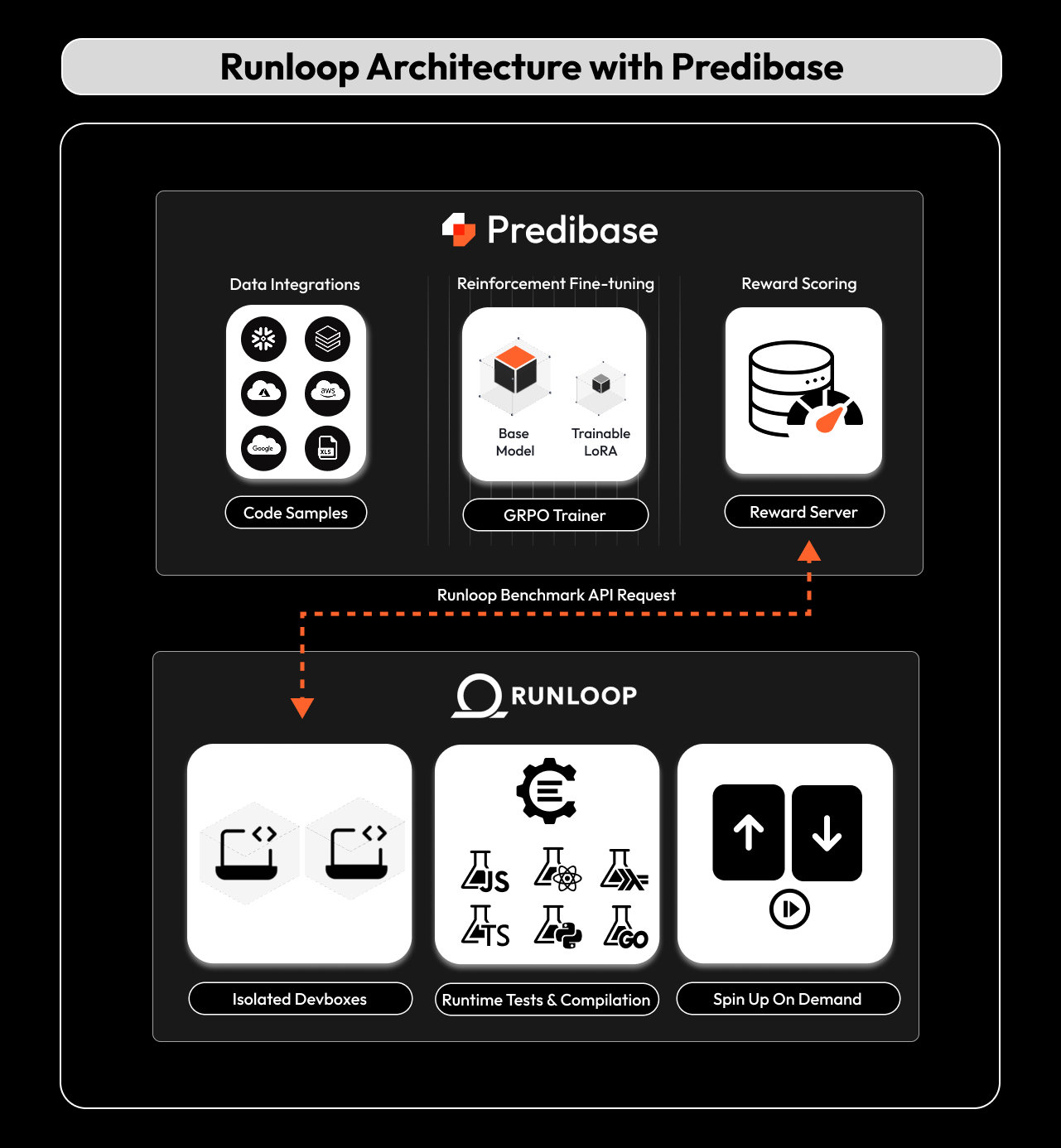

Predibase streamlined the training process, enabling us to rapidly get a training loop up and going with just a few simple API calls. The final training pipeline had Predibase hosting and orchestrating the training loops while using the Runloop Devbox environments to run graders. Devboxes provide an isolated environment so that arbitrarily complex graders can be run without worrying about security. Further, Predibase supports parallel evaluation of graders of model outputs while Runloop supports efficient execution of the graders. Together, they create an efficient system that eliminates bottlenecks in RFT.
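
The parallel grading pattern can be sketched in a few lines. This is not the Predibase or Runloop API: `run_in_sandbox` is a hypothetical stand-in that calls the grader locally, and averaging the grader scores into one reward is an assumption made for the example.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Grader = Callable[[str], float]


def run_in_sandbox(grader: Grader, completion: str) -> float:
    """Hypothetical stand-in for running a grader inside an isolated environment.

    Here it simply calls the grader locally; a real setup would execute it remotely.
    """
    return grader(completion)


def score_batch(completions: list[str], graders: list[Grader], max_workers: int = 8) -> list[float]:
    """Score every completion with every grader concurrently; return the mean per completion."""
    if not graders:
        raise ValueError("at least one grader is required")
    # One job per (completion index, grader) pair so all checks can run in parallel.
    jobs = [(i, g) for i in range(len(completions)) for g in graders]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(lambda job: run_in_sandbox(job[1], completions[job[0]]), jobs))
    totals = [0.0] * len(completions)
    for (i, _), score in zip(jobs, results):
        totals[i] += score
    return [t / len(graders) for t in totals]
```

Since each grader call is independent, the time to score a batch is bounded by the slowest single check rather than the sum of all checks, which matters when every training step produces many completions.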

Runloop and Predibase architecture for creating expert AI coding agents

Predibase completely abstracts away all of the complexity of managing GPUs and the associated memory while doing efficient training. Using Predibase allowed us to scale our training to even larger 24k context windows without dealing with the complexities of multi-GPU training loops, all while keeping costs low.

Once the training was complete, the model was automatically queryable on the Predibase platform and available via API!

Reinforcement Learning Experiments and Findings

With our training pipeline in place, we conducted several experiments, each building on the insights of previous iterations. Here's what we learned:

Bigger Models, Longer Context, Better Results

We first experimented with different model sizes and including/excluding relevant integration guides in the prompt context.

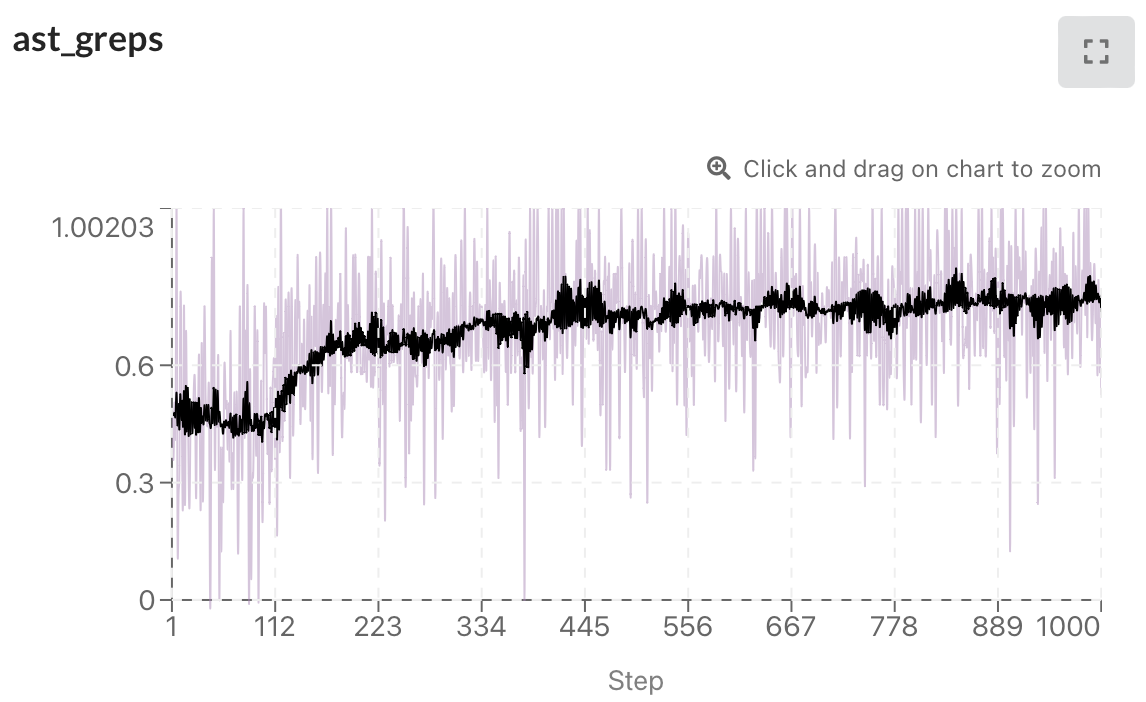

Outcome: Models below 32B struggled with the task even when the context contained relevant docs. However, Qwen2.5-32B-Coder + Integration Guides (20k input tokens context length) showed consistent improvement throughout training. The graph shows the AST-grep check score as training progresses.

AST Grep benchmarks for our coding models

Multiple Graders

We tested using additional graders like linting and type-checking in order to have a more comprehensive evaluation of the model output.

Outcome: This led to unstable training. We suspect the instability was due to the base model's insufficient capability to generate code that consistently passed more rigorous checks, such as linting and runtime tests.

Simplified RFT

To stabilize training, we conducted a second experiment focused exclusively on our simplest, most direct scorers—formatting and AST grep scorers—removing more complex linters and type checks.

Outcome: This adjustment yielded stable and encouraging results. We observed clear progress: the model consistently produced correct API calls and hallucinated significantly less often.

Curriculum-Style RL Fine-Tuning

Next, we attempted a more structured, curriculum-based training approach. We began training with simpler scorers (formatting and AST grep) and eventually introduced harder checks (linters and type checks) as the training progressed by building on previous adapters.

Outcome: We once again experienced instability when incorporating advanced scorers. While there was some improvement, the primary issue seemed to be the base model’s limited proficiency in generating code that fully passed stringent linting and type-checking requirements. This limitation meant the model rarely produced high-quality outputs needed to effectively learn from our reward signals.
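
A curriculum of this kind can be expressed as a schedule that maps the training step to the set of active scorers. The step boundaries and grader names below are hypothetical; we do not state the actual points at which the harder checks were introduced.

```python
# Hypothetical stage boundaries: (first training step of the stage, active grader names).
CURRICULUM = [
    (0, ["format", "ast_grep"]),
    (100, ["format", "ast_grep", "lint"]),
    (200, ["format", "ast_grep", "lint", "type_check"]),
]


def active_graders(step: int) -> list[str]:
    """Return grader names for the latest stage whose start step is <= `step`."""
    if step < 0:
        raise ValueError("step must be non-negative")
    current = CURRICULUM[0][1]
    for start, graders in CURRICULUM:
        if step >= start:
            current = graders
    return current
```

In a setup like ours, each stage would resume from the adapter produced by the previous stage rather than from the base model.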

Future Consideration: We could improve our reward function by refining the scoring system to assign quantifiable penalties based on the severity and type of errors. This would preserve objective, numerical feedback while making it more informative - enabling the model to make directional improvements by learning which types of mistakes matter more. We also believe that enriching the prompt context with explicit instructions for satisfying linting and type-checking requirements could enhance the model’s performance as measured by these reward functions.
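
One possible shape for such a severity-weighted reward is sketched below. The severity levels and penalty weights are hypothetical and would need tuning; this is an idea we have not yet run.

```python
# Hypothetical penalty weights per finding severity; real values would need tuning.
PENALTIES = {"error": 0.25, "warning": 0.10, "style": 0.02}


def graded_reward(findings: list[tuple[str, str]]) -> float:
    """Map (severity, rule_id) findings to a reward in [0, 1].

    Starts at 1.0 and subtracts a penalty per finding, clamped at 0.0.
    Unknown severities are treated as errors.
    """
    total_penalty = sum(PENALTIES.get(severity, PENALTIES["error"]) for severity, _ in findings)
    return max(0.0, 1.0 - total_penalty)
```

Compared with a pass/fail lint check, a graded score still rewards an output that has one style issue more than an output with several type errors, so the model gets a usable signal even when it rarely produces fully clean code.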

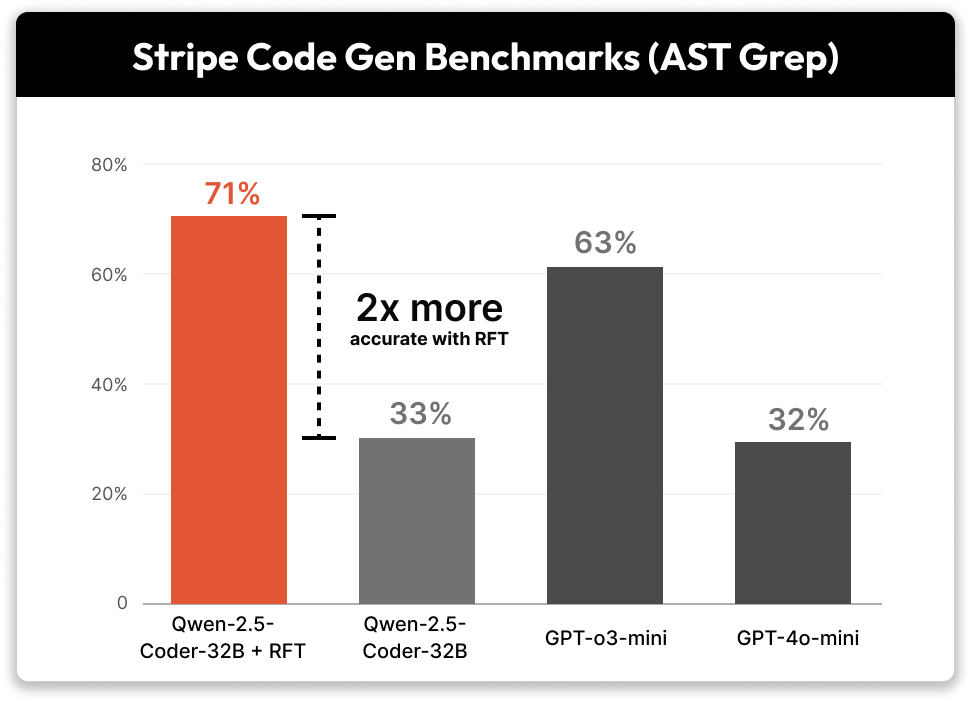

Benchmark Results: 2x Performance Lift with RFT

Our RFT model punched well above its weight. With just ten training examples, our model was within striking distance of the larger foundation models and significantly outperformed several midsized commercial models, such as OpenAI’s o3-mini and o4-mini. On AST Grep benchmarks (correct Stripe SDK/API calls with correct parameters), our model was more than 2x more accurate than the base Qwen coder model and o4-mini.

Our reinforcement fine-tuning improved Qwen2.5-32B-Coder’s performance by over 2x.

As mentioned earlier, AST grep checks are not the ultimate proof of the model’s expertise, but they are a great starting point that shows promising improvements. These results are only the beginning: as we scale model size and context length and add more labeled examples, we expect performance to climb even higher, reaching or exceeding that of the much larger frontier models and bringing us ever closer to that “expert Stripe Integrations Engineer in your pocket.”

For developers, this translates to cleaner, more accurate coding models without the need to build a huge, labeled training set. This technique can be applied to any domain specific coding task where labeled data is scarce, since all it requires is writing scorers and graders which often take the form of test cases. And best of all, by training an open-source model, developers no longer need to share sensitive training data with a commercial model provider, can own their own model IP and host within their own cloud.

Ready to build your own expert AI SWE?

Runloop’s vision is simple: enable developers to easily build their own AI SWE applications to uplevel every aspect of the software development lifecycle. Runloop’s isolated Devboxes help by taking away the pain of deploying your AI SWEs to work on real projects; Devboxes allow your AI agents to safely generate, build, and test code in a fully isolated environment. Runloop’s Hosted Benchmarks build on top of Devboxes by letting users declare specific Devbox environments and scoring functions to measure their progress as they iterate and improve their agents with tuning processes like RL fine-tuning.

By combining Predibase’s user-friendly RL fine-tuning tools with Runloop’s robust Benchmark and Devbox solutions, we’ve begun testing powerful patterns that move us closer to making this vision a reality.

Contact Runloop to learn about their industry-leading AI infrastructure for software engineers, and experiment with Predibase’s inference and training stack with a free trial.

FAQ

Why are LLMs like GPT or Code LLaMA not reliable for complex API integrations?

Vanilla LLMs often hallucinate methods, misuse APIs, or reference outdated docs. Even when given relevant documentation, they struggle with context comprehension and composing end-to-end correct code. That’s why domain-specific fine-tuning is essential.

How does Predibase help with LLM reinforcement fine-tuning?

Predibase provides an end-to-end platform for running RFT jobs efficiently. It abstracts GPU management, orchestrates training loops, supports large context windows, and integrates easily with tools like Runloop Devboxes for secure, isolated evaluation.

Can you build an expert coding model with only a few prompts?

Yes. With the right grading functions and reinforcement learning loop, even a small number of examples (as few as 10) can lead to significant performance improvements—as demonstrated with the Stripe integration use case.

How accurate was the fine-tuned model compared to OpenAI’s o4-mini?

The Predibase-trained model achieved over 2x the AST grep accuracy of o4-mini and the base model, showing strong improvements in correct API call generation with proper parameters.