We believe the future of AI lies in open-source models that are smaller, faster, and fine-tuned on your organization’s proprietary data. In fact, in our experiments, we’ve seen smaller LLMs outperform their larger, more expensive commercial alternatives.

However, larger open-source LLMs are sometimes preferred for certain use cases, and one of the major challenges engineers face when fine-tuning these models is the set of optimizations required to train them quickly and cost-effectively. Llama-2-70B, one of the largest and most popular open-source LLMs available today, has historically been difficult for ML practitioners to train and serve.

We’re excited to share that we’ve made it easier than ever to fine-tune Llama-2-70B for free using Ludwig, the powerful open-source framework that enables highly optimized model training through a declarative, YAML-based interface. Ludwig is used by hundreds of organizations, including Payscale, Navan (formerly TripActions), and Koo, to train custom state-of-the-art LLMs, and has over 10,000 stars on GitHub.

In this blog post, we’ll discuss the optimizations introduced into Ludwig to make fine-tuning Llama-2-70B possible, as well as demonstrate the performance benefits of fine-tuning over OpenAI’s GPT-3.5 and GPT-4 models in a case study involving structured JSON generation.

Fine-tuning Llama-2-70B on a single A100 with Ludwig

We made it possible for anyone to fine-tune Llama-2-70B on a single A100 GPU by layering the following optimizations into Ludwig:

- QLoRA-based fine-tuning: QLoRA keeps the base model frozen in 4-bit precision and trains only small low-rank adapters, enabling cost-effective training of LLMs by drastically reducing the model's memory footprint.

- Gradient accumulation: accumulating gradients over multiple mini-batches before updating the model's weights lets us train with a large effective batch size while holding only a small mini-batch in GPU memory at a time. This is especially important when training LLMs, which tend to be GPU memory-intensive.

- Paged Adam optimizer: optimizer states are offloaded from GPU memory to CPU memory to make room for activations on the GPU, further reducing memory pressure during fine-tuning.
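To make the first two optimizations more concrete, here is a minimal, self-contained Python sketch. It is plain arithmetic, not Ludwig code, and all function names are hypothetical. `weight_memory_gb` is a back-of-envelope estimate of weight storage only (it ignores quantization constants, adapter weights, activations, and optimizer state). The accumulation functions use a toy one-parameter least-squares model to show that summing per-example gradients scaled by 1/16 over 16 micro-batches of size 1 reproduces the mean gradient of one batch of 16, the same effective batch size as `batch_size: 1` with `gradient_accumulation_steps: 16`. The paged optimizer is not modeled here.

```python
import math


def weight_memory_gb(num_params: float, bits: int) -> float:
    """Rough memory for model weights only, in decimal gigabytes.

    Ignores quantization constants, LoRA adapter weights, activations,
    and optimizer state, so a real training run needs more than this.
    """
    return num_params * bits / 8 / 1e9


def example_grad(w: float, x: float, y: float) -> float:
    """Gradient of the squared error (w * x - y) ** 2 with respect to w."""
    return 2.0 * x * (w * x - y)


def full_batch_grad(w: float, batch: list) -> float:
    """Mean gradient over the whole batch, as one large batch would compute it."""
    return sum(example_grad(w, x, y) for x, y in batch) / len(batch)


def accumulated_grad(w: float, batch: list) -> float:
    """Mean gradient built from micro-batches of one example each.

    Each micro-batch gradient is scaled by 1 / accumulation_steps and added
    to a running total; an optimizer would step only once, after the loop.
    """
    accumulation_steps = len(batch)
    total = 0.0
    for x, y in batch:  # one forward/backward pass per micro-batch
        total += example_grad(w, x, y) / accumulation_steps
    return total


if __name__ == "__main__":
    print(f"16-bit weights: {weight_memory_gb(70e9, 16):.0f} GB")
    print(f"4-bit weights:  {weight_memory_gb(70e9, 4):.0f} GB")

    # 16 toy examples: micro-batch size 1, 16 accumulation steps.
    batch = [(float(i), 2.0 * i + 1.0) for i in range(16)]
    assert math.isclose(full_batch_grad(0.5, batch), accumulated_grad(0.5, batch))
```

Running it prints 140 GB for 16-bit weights and 35 GB for 4-bit weights of a 70-billion-parameter model, and the assertion confirms that the accumulated gradient matches the full-batch gradient.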

All of these optimizations are ready to use out of the box in Ludwig. The Ludwig fine-tuning configuration incorporating these optimizations is shown below:

```yaml
model_type: llm
preprocessing:
  split:
    type: fixed
input_features:
  - name: input
    type: text
    preprocessing:
      lowercase: false
      max_sequence_length: 512
output_features:
  - name: output
    type: text
    preprocessing:
      lowercase: false
      max_sequence_length: 512
prompt:
  template: >
    Your task is a Named Entity Recognition (NER) task. Predict the category of
    each entity, then place the entity into the list associated with the
    category in an output JSON payload. Below is an example:

    Input: EU rejects German call to boycott British lamb .

    Output: {{"person": [], "organization": ["EU"], "location": [], "miscellaneous": ["German", "British"]}}

    Now, complete the task.

    Input: {input}

    Output:
adapter:
  type: lora
trainer:
  type: finetune
  epochs: 2
  optimizer:
    type: paged_adam
  batch_size: 1
  learning_rate: 0.0002
  eval_batch_size: 2
  learning_rate_scheduler:
    decay: cosine
    warmup_fraction: 0.03
  gradient_accumulation_steps: 16
base_model: llama-2-70b-chat
quantization:
  bits: 4
```

Case Study: Structured Information Extraction From Natural Language

In the following example, we’ll show you how to fine-tune Llama-2-70B and demonstrate the effectiveness of fine-tuning open-source models over OpenAI’s GPT-3.5 and GPT-4 models on a structured information extraction task. Extracting customer metadata from text is a common enterprise workflow well-suited for LLMs. We simulate this workflow by reframing the classic CoNLLpp Named Entity Recognition (NER) dataset as a JSON generation task. For example, we take inputs like the following:

```
Japan began the defence of their Asian Cup title with a lucky 2-1 win against Syria in a Group C championship match on Friday.
```

Then, we query the LLM to output the following JSON payload:

```
{"person": [], "organization": [], "location": ["Japan", "Syria"], "miscellaneous": ["Asian Cup"]}
```

We fine-tune a 4-bit quantized Llama-2-70B model on the training split of the dataset for 2 epochs using a simple prompt template:

```
Your task is a Named Entity Recognition (NER) task. Predict the category of each entity, then place the entity into the list associated with the category in an output JSON payload.
Below is an example:

Input: EU rejects German call to boycott British lamb .
Output: {{"person": [], "organization": ["EU"], "location": [], "miscellaneous": ["German", "British"]}}

Now, complete the task.

Input: {input}
Output:
```

Two items to note:

- We incorporate a 1-shot example into the prompt to make fine-tuning more sample-efficient, as described by Scao et al. 2021. We limit it to a single shot to gain that sample efficiency while keeping sequence lengths relatively short during fine-tuning.

- Each input string is inserted into the template at the {input} placeholder.
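The substitution step can be sketched in a few lines of Python. This assumes the template follows Python `str.format` conventions, which the doubled `{{ }}` around the example JSON suggest (single braces would otherwise be read as placeholders); Ludwig fills the template itself during preprocessing, so `TEMPLATE` and `render_prompt` here are hypothetical stand-ins for illustration, not Ludwig's API.

```python
# Hypothetical stand-in for Ludwig's own prompt filling, assuming
# str.format semantics: {input} is a placeholder, {{ and }} are literal braces.
TEMPLATE = (
    "Your task is a Named Entity Recognition (NER) task. Predict the category of "
    "each entity, then place the entity into the list associated with the "
    "category in an output JSON payload.\n"
    "Below is an example:\n\n"
    "Input: EU rejects German call to boycott British lamb .\n"
    'Output: {{"person": [], "organization": ["EU"], "location": [], '
    '"miscellaneous": ["German", "British"]}}\n\n'
    "Now, complete the task.\n\n"
    "Input: {input}\n"
    "Output:"
)


def render_prompt(text: str) -> str:
    """Fill the {input} placeholder; doubled braces become literal braces."""
    return TEMPLATE.format(input=text)


if __name__ == "__main__":
    sentence = (
        "Japan began the defence of their Asian Cup title with a lucky 2-1 win "
        "against Syria in a Group C championship match on Friday."
    )
    print(render_prompt(sentence))
```

Because `str.format` only parses the template, braces inside the input sentence itself would be passed through untouched.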

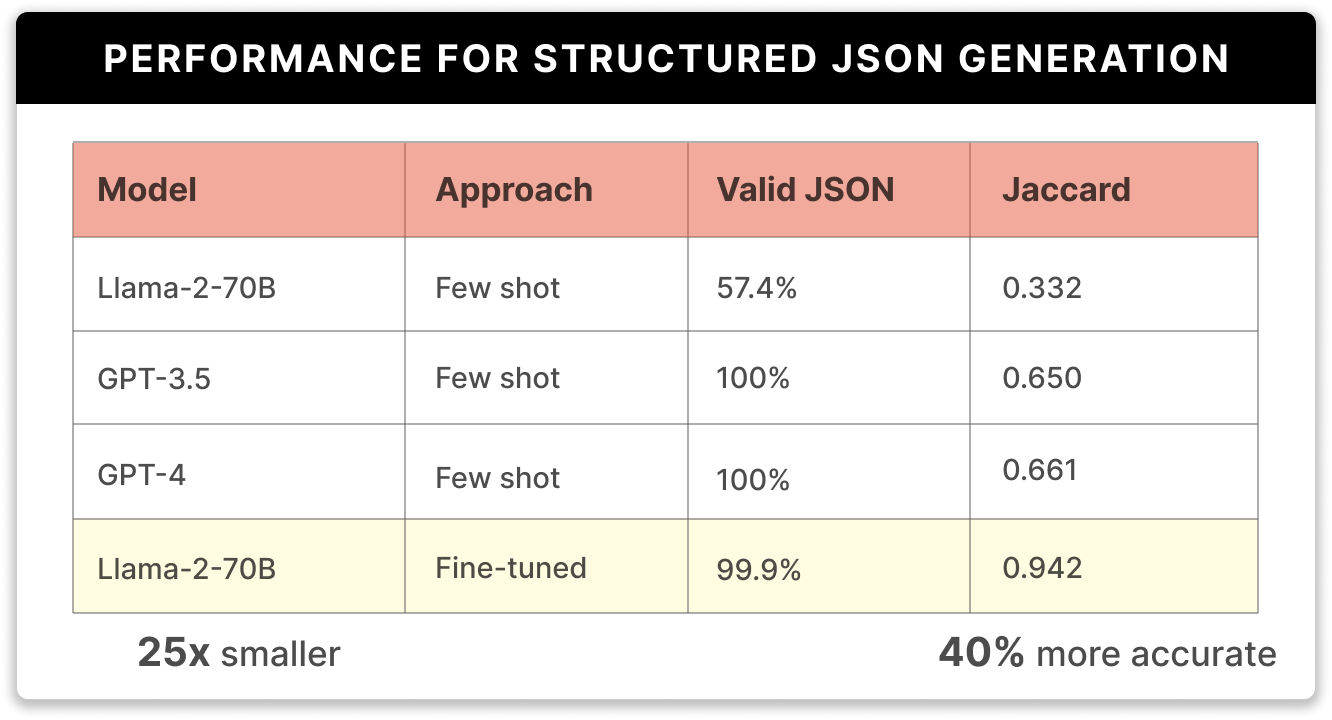

We compare this fine-tuned model’s predictions with the few-shot predictions of off-the-shelf Llama-2-70B, GPT-3.5, and GPT-4. In order to simulate real-world use of these models, we increase the number of examples in the prompt from 1 to 5. This greatly increases prompt length, but improves overall model performance. See the full few-shot prompt in this GitHub gist.

In summary, we evaluate four setups: our fine-tuned Llama-2-70B model and the few-shot predictions of off-the-shelf Llama-2-70B, GPT-3.5, and GPT-4. For each, we measure two values: (1) the percentage of outputs that are valid JSON containing all four expected keys, and (2) the accuracy of the model, measured by Jaccard similarity.
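Both metrics can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the evaluation code used for the results here: it treats an output as valid when it parses as a JSON object containing all four keys, flattens entities into `__category__entity` strings (the scoring format used in this post) before computing Jaccard similarity, and, as our own assumption, scores invalid outputs as 0.0 and two empty entity sets as a perfect match. All function names are hypothetical.

```python
import json

# The four categories used in the JSON payloads.
EXPECTED_KEYS = ("person", "organization", "location", "miscellaneous")


def parse_payload(text):
    """Return the parsed object if it is valid JSON with all four keys, else None."""
    try:
        payload = json.loads(text)
    except json.JSONDecodeError:
        return None
    if not isinstance(payload, dict) or not all(k in payload for k in EXPECTED_KEYS):
        return None
    return payload


def to_prefixed_set(payload):
    """Flatten {"location": ["France"]} into {"__location__France"}.

    The key prefix means an entity only matches if its category matches too.
    """
    return {
        f"__{key}__{entity}"
        for key in EXPECTED_KEYS
        if isinstance(payload.get(key), list)
        for entity in payload[key]
    }


def jaccard(a, b):
    """Size of intersection over size of union; two empty sets score 1.0 (assumption)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def score(prediction_text, target):
    """Return (is_valid, jaccard) for one example; invalid output scores 0.0 (assumption)."""
    pred = parse_payload(prediction_text)
    if pred is None:
        return False, 0.0
    return True, jaccard(to_prefixed_set(pred), to_prefixed_set(target))


def evaluate(predictions, targets):
    """Return (% valid outputs, mean Jaccard) over paired predictions and targets."""
    results = [score(p, t) for p, t in zip(predictions, targets)]
    valid_pct = 100 * sum(ok for ok, _ in results) / len(results)
    mean_jaccard = sum(j for _, j in results) / len(results)
    return valid_pct, mean_jaccard


if __name__ == "__main__":
    target = {"person": [], "organization": [], "location": ["Japan", "Syria"],
              "miscellaneous": ["Asian Cup"]}
    predictions = [
        json.dumps(target),  # perfect prediction
        '{"person": [], "organization": ["Syria"], "location": ["Japan"], '
        '"miscellaneous": ["Asian Cup"]}',  # Syria placed in the wrong category
        "not json",  # invalid output
    ]
    print(evaluate(predictions, [target] * 3))
```

In this toy run, the miscategorized prediction scores 0.5: two of its four distinct prefixed entities are shared with the target, because `__organization__Syria` does not match `__location__Syria`.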

We prepend each entity's JSON key to the entity itself so that scoring rewards both finding the entity and assigning it the correct category. As an example, we compute the Jaccard similarity between prediction and target sets formatted as follows:

```
{"__person__Fischler", "__location__France", "__location__Britain"}
```

The following figure shows the performance of our fine-tuned open-source model vs. few-shot baselines:

Based on this data, we can draw the following conclusions:

- Few-shot Llama-2-70B struggles to produce valid JSON and additionally fails the downstream task of extracting the entities into their appropriate categories.

- Out of the box, GPT-3.5 and GPT-4 show strong performance in generating valid JSON; however, both fall short on the actual information extraction task, with Jaccard scores of only 0.650 and 0.661, respectively.

- Fine-tuning vastly improves Llama-2-70B's performance: it produces nearly perfect JSON and raises the model's Jaccard score by 0.61, from 0.332 to 0.942.

Next Steps: Fine-tune Llama-2-70B for Your Own Use Case

In this short how-to blog, we demonstrated how to easily and efficiently fine-tune Llama-2-70B on a single A100 GPU with open-source Ludwig, producing more accurate results than commercial LLMs like GPT-3.5 and GPT-4.

If you are interested in fine-tuning and serving LLMs on fully managed, cost-effective serverless infrastructure within your cloud, check out Predibase. The Predibase platform offers efficient and configurable fine-tuning and serving of any open-source large language model. Just sign up for a free 2-week trial, then train and serve any model from Hugging Face on your own, with no unnecessary hand-holding. Training and serving jobs can run on a range of hardware configurations, from T4 and A10G up to A100 GPUs, which allows for fine-tuning at different price points and speeds.

For deployment, customers can either spin up a dedicated deployment for a single fine-tuned model or pack many fine-tuned models into one deployment, serving hundreds of them for the cost of a single model without sacrificing throughput or latency. Read more about how we implement dynamic model serving in our LoRAX blog post.

Serve your fine-tuned Llama-2-70B for $0.002/1k tokens. Sign up for a Predibase free trial to get started customizing your own LLMs.

Frequently Asked Questions

What is Llama 2, and how does it differ from previous models?

Llama 2 is an open-source large language model developed by Meta AI, offering improved performance and versatility over its predecessors.

Why should I fine-tune Llama 2 for structured JSON generation?

Fine-tuning Llama 2 enables it to accurately convert unstructured text into structured JSON formats, enhancing data extraction and integration processes.

How can Ludwig assist in fine-tuning Llama 2 models?

Ludwig is an open-source framework that simplifies the fine-tuning of Llama 2 models through a declarative, YAML-based interface, incorporating optimizations like QLoRA-based fine-tuning and gradient accumulation.

What are the hardware requirements for fine-tuning Llama-2-70B?

With Ludwig's optimizations, Llama-2-70B can be fine-tuned on a single A100 GPU, making it more accessible for organizations with limited hardware resources.

Can I use fine-tuned Llama 2 models for real-time data extraction applications?

Yes, fine-tuned Llama 2 models are capable of handling real-time data extraction tasks efficiently, especially when optimized with tools like Ludwig.