Introduction

Ludwig is an open-source declarative machine learning framework that enables you to train and deploy state-of-the-art models for a variety of tasks including tabular, natural language processing, and computer vision by just writing a configuration. If you are new to Ludwig, check out our quick tutorial.

Until now, Ludwig only supported neural network (NN) models, but with the release of Ludwig 0.6, we are excited to add gradient boosted tree (GBM) models as a new model type for tabular data.

One of the key advantages of gradient boosted trees is their speed of training compared to neural network models. They also tend to perform competitively on tabular data and to be more robust against class imbalance than neural networks, making them a reliable and widely used tool among practitioners. By adding gradient boosted trees to Ludwig, we hope to provide you with a unified interface for exploring different model types and for obtaining improved performance, in particular on small tabular data tasks.

We created an interactive tutorial that you can explore here.

Why add GBMs to Ludwig?

Ludwig provides a simple and flexible declarative interface for building and serving the best quality models on a wide variety of tasks.

Ludwig uses a compositional architecture with neural networks as the default for modeling. This is because neural networks are able to handle a wide range of data types and support multi-task learning, making them a versatile choice for many applications.

While neural networks work well with many types of data, they are relatively slow to train and they may not always be the best choice for tabular data, particularly when the dataset is small or when there is class imbalance. Additionally, using multiple frameworks to try different model types can be time-consuming and cumbersome.

How to use GBMs in Ludwig?

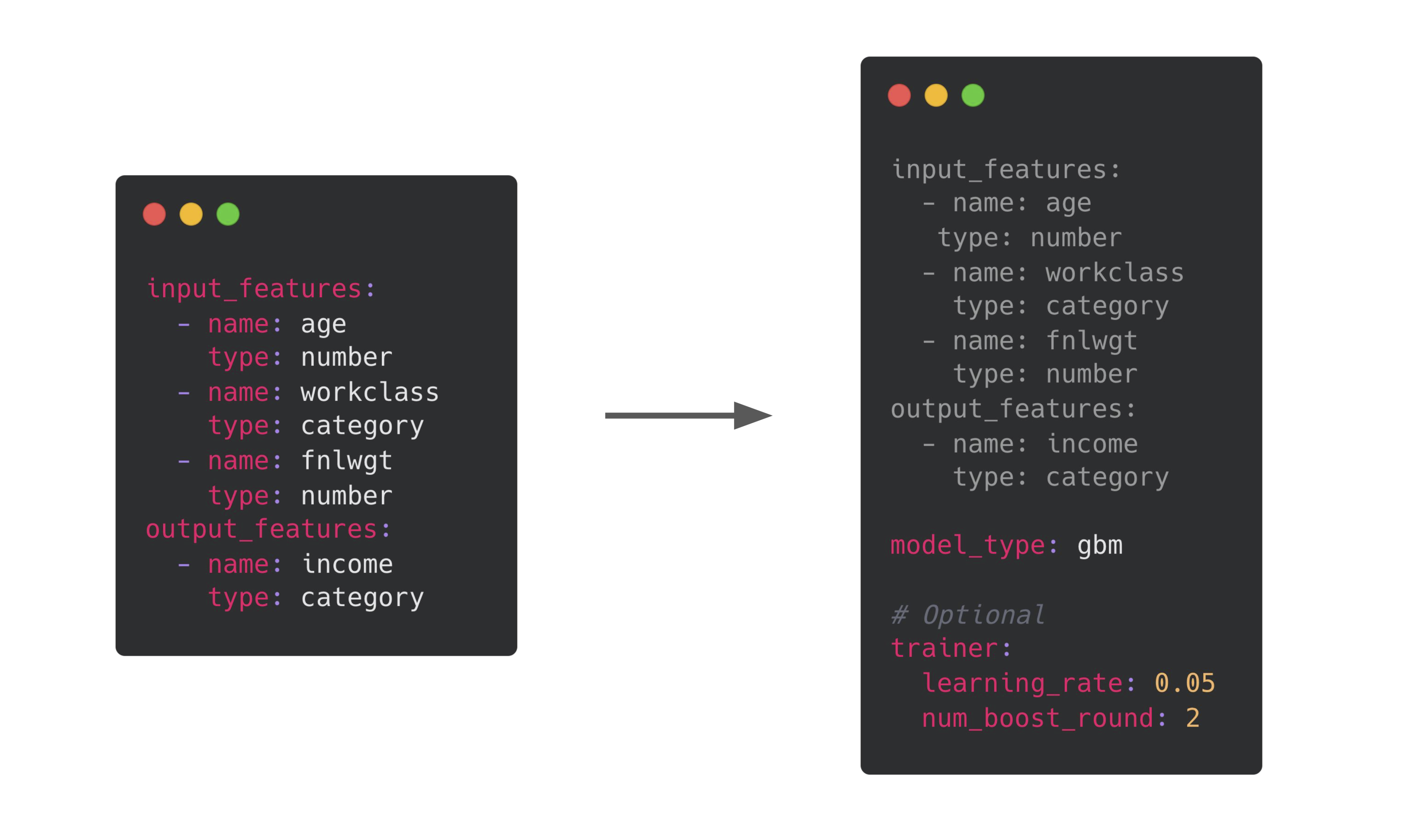

If you have a dataset to predict income from census data, this is a Ludwig configuration that gets you started:

    input_features:
      - name: age
        type: number
      - name: workclass
        type: category
      - name: fnlwgt
        type: number
    output_features:
      - name: income

Ludwig configuration file

This will train a neural network with default architecture and training parameters. If you want to train a GBM model instead, you can specify the model_type in the Ludwig configuration:

    model_type: gbm

This tells Ludwig to train a GBM model instead of the default NN.
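If you drive Ludwig from Python rather than from a YAML file, the same switch can be sketched with a plain dictionary. This is a minimal illustration, not part of the original walkthrough: `with_model_type` is a hypothetical helper, the output feature is left without a type exactly as in the example above, and the commented-out training call assumes Ludwig is installed and a dataset file exists.

```python
import copy

# Same fields as the census example; Ludwig also accepts the
# configuration as a Python dictionary instead of a YAML file.
nn_config = {
    "input_features": [
        {"name": "age", "type": "number"},
        {"name": "workclass", "type": "category"},
        {"name": "fnlwgt", "type": "number"},
    ],
    "output_features": [{"name": "income"}],
}


def with_model_type(config, model_type):
    """Return a copy of config with model_type set (hypothetical helper)."""
    new_config = copy.deepcopy(config)
    new_config["model_type"] = model_type
    return new_config


gbm_config = with_model_type(nn_config, "gbm")
print(gbm_config["model_type"])

# Assumed usage, not executed here because it needs Ludwig and a dataset:
#   from ludwig.api import LudwigModel
#   model = LudwigModel(config=gbm_config)
#   model.train(dataset="census.csv")  # hypothetical file name
```

Copying the configuration before changing it keeps the NN and GBM variants side by side, which is convenient when you want to train both and compare them.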

Under the hood, Ludwig uses LightGBM to train a gradient boosted tree model. LightGBM is a gradient boosting framework that uses tree based learning algorithms and is designed to be scalable and performant.

Like other parameters in Ludwig, LightGBM-specific hyperparameters come with a default value, which you can override if desired. For example, you might include the following in the trainer section of the configuration file:

    trainer:
      learning_rate: 0.1
      num_boost_round: 50
      num_leaves: 255
      feature_fraction: 0.9
      bagging_fraction: 0.8
      bagging_freq: 5
      min_data_in_leaf: 1
      min_sum_hessian_in_leaf: 100

Example trainer section of a Ludwig configuration file

This sets the learning rate, number of boosting rounds, and other hyperparameters for the GBM model. For more information about the available hyperparameters, default values and how to override them, you can refer to the Ludwig documentation.
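Before launching a run, it can help to sanity-check overridden values. The sketch below is illustrative only: `check_trainer` is a hypothetical helper, and the ranges it enforces are assumptions based on the usual LightGBM constraints (sampling fractions in (0, 1], non-negative counts, at least two leaves, a positive learning rate), not checks that Ludwig itself is known to apply in this form.

```python
# Values copied from the example trainer section.
trainer = {
    "learning_rate": 0.1,
    "num_boost_round": 50,
    "num_leaves": 255,
    "feature_fraction": 0.9,
    "bagging_fraction": 0.8,
    "bagging_freq": 5,
    "min_data_in_leaf": 1,
    "min_sum_hessian_in_leaf": 100,
}


def check_trainer(trainer):
    """Return a list of problems found in a GBM trainer section."""
    problems = []
    # Fractions of features or rows sampled per tree must lie in (0, 1].
    for key in ("feature_fraction", "bagging_fraction"):
        value = trainer.get(key)
        if value is not None and not 0.0 < value <= 1.0:
            problems.append(f"{key} must be in (0, 1], got {value}")
    # Counts must be non-negative integers.
    for key in ("num_boost_round", "bagging_freq", "min_data_in_leaf"):
        value = trainer.get(key)
        if value is not None and (not isinstance(value, int) or value < 0):
            problems.append(f"{key} must be a non-negative integer, got {value}")
    # A tree needs at least two leaves to make a split.
    num_leaves = trainer.get("num_leaves")
    if num_leaves is not None and num_leaves < 2:
        problems.append(f"num_leaves must be at least 2, got {num_leaves}")
    # The learning rate scales each tree's contribution and must be positive.
    learning_rate = trainer.get("learning_rate")
    if learning_rate is not None and learning_rate <= 0:
        problems.append(f"learning_rate must be positive, got {learning_rate}")
    return problems


print(check_trainer(trainer))  # [] because every value is in range
```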

Here is a comparison of the configurations:

Default Ludwig config (left) and Ludwig config for training GBM (right)


Hyperparameter tuning

Hyperparameters heavily influence the performance you get out of any model, including GBMs. In Ludwig, you can run hyperparameter optimization (hyperopt) simply by adding a section to the configuration.

For example, you can do a hyperopt sweep over some specific values for learning_rate and max_depth by adding these to the hyperopt parameters section of the Ludwig config:

    hyperopt:
      parameters:
        trainer.learning_rate:
          space: grid_search
          values:
            - 0.001
            - 0.01
            - 0.1
        trainer.max_depth:
          space: grid_search
          values:
            - 5
            - 10
            - 30

Adding hyperopt parameters to a Ludwig config

Adding this section to the config will run a grid search over the Cartesian product of those parameter values (3 × 3 = 9 runs in total). Ludwig also supports random search, Bayesian optimization, and other advanced techniques; check out the documentation for more details.
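To see where the 9 runs come from, the grid can be enumerated by hand. This is only a sketch of the combinatorics using the standard library; `grid_points` is a hypothetical helper, and it says nothing about how Ludwig or Ray Tune schedule the trials internally.

```python
from itertools import product

# The two grid_search parameters from the hyperopt section.
search_space = {
    "trainer.learning_rate": [0.001, 0.01, 0.1],
    "trainer.max_depth": [5, 10, 30],
}


def grid_points(space):
    """Return every combination of values, one dict per trial."""
    names = list(space)
    value_lists = [space[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*value_lists)]


trials = grid_points(search_space)
print(len(trials))  # 9
for trial in trials:
    print(trial)
```

Each additional grid parameter multiplies the number of runs by its number of values, which is why random or Bayesian search becomes attractive for larger search spaces.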

Model quality

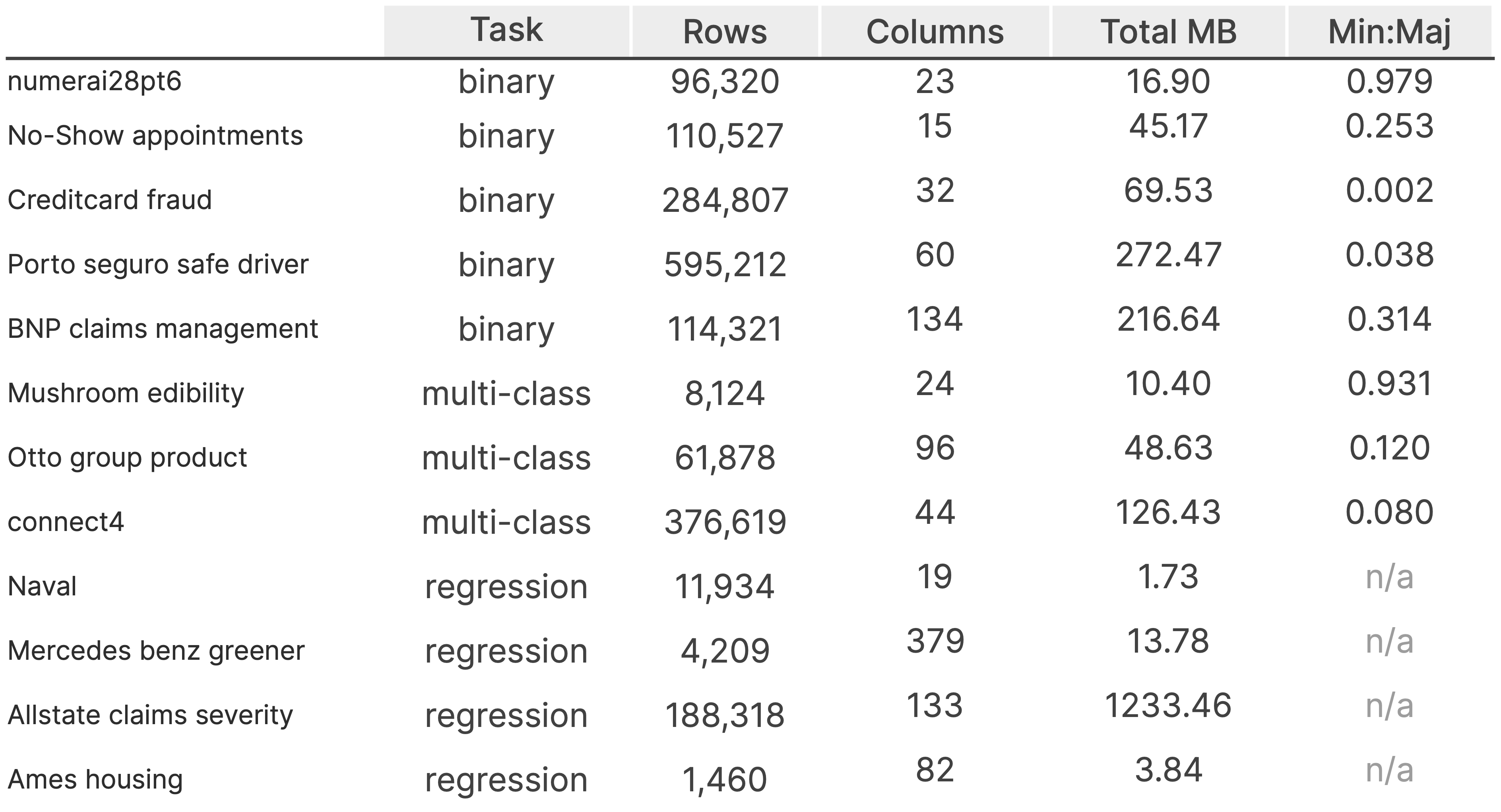

In order to test out the new GBM capability in Ludwig we ran some benchmarks to compare GBMs with the default neural network architecture. We looked at small tabular datasets representing various tasks and characteristics. By using a diverse set of datasets, we were able to evaluate the model's ability to handle different types of data and tasks, as well as its robustness to imbalanced datasets.

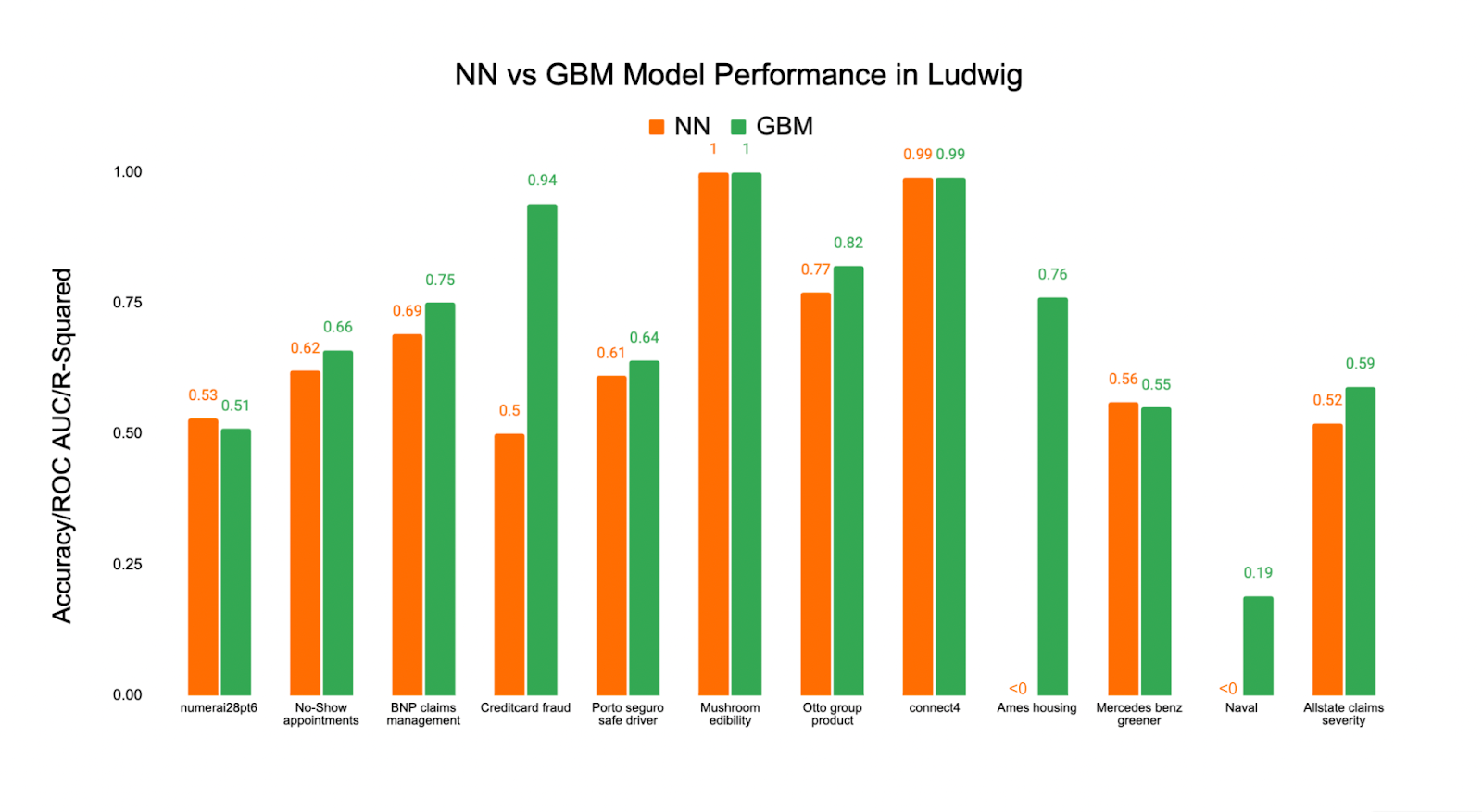

On 8 out of 12 datasets, GBMs outperformed NNs in terms of predictive power. In particular, GBM models perform on par with or better than NNs on 7 of the 8 classification datasets and 3 of the 4 regression datasets. For the datasets where NNs perform better than GBMs, the performance difference is within 2% of ROC AUC (binary classification) and 1% of R-squared (regression).


Out of the 12 datasets, 5 are binary classification, 3 are multi-class classification, and 4 are regression datasets. Of the 8 classification datasets, 2 are balanced, 2 are imbalanced with a minority-to-majority class ratio of less than 0.9, and 4 are highly imbalanced with a minority-to-majority class ratio of less than 0.25. The smallest dataset (naval) is 1.73 MB, while the largest (Allstate claims severity) is 1,233.46 MB. The number of features ranges from 15 (No-show appointments) to 379 (Mercedes-Benz greener).
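The imbalance buckets above can be reproduced for your own data with a few lines of Python. This is a sketch under one assumption: the ratio is taken to be the count of the rarest class divided by the count of the most frequent class. The function names and the sample labels are made up for illustration; only the 0.9 and 0.25 thresholds come from the text.

```python
from collections import Counter


def class_ratio(labels):
    """Count of the rarest class divided by the count of the most common one."""
    counts = Counter(labels)
    return min(counts.values()) / max(counts.values())


def imbalance_bucket(ratio):
    """Map a ratio to the buckets used in the benchmark description."""
    if ratio < 0.25:
        return "highly imbalanced"
    if ratio < 0.9:
        return "imbalanced"
    return "balanced"


# Made-up labels: 9 examples of one class, 1 of the other.
labels = ["<=50K"] * 9 + [">50K"] * 1
ratio = class_ratio(labels)
print(round(ratio, 3), imbalance_bucket(ratio))
```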

On the balanced datasets numerai28pt6 and mushroom edibility, NNs perform on par with or better than GBMs, whereas GBMs outperform NNs on most imbalanced datasets. This provides some evidence for the hypothesis that GBMs are more robust to class imbalance.


Figure 1: We report accuracy for multi-class classification datasets, ROC AUC for binary classification datasets and R-squared for regression datasets.

Note that the results presented here are based on small tabular datasets and may not represent the performance of GBMs and NNs on larger or more complex data. To better understand the relative strengths and weaknesses of both model types, we plan to conduct a larger-scale comparison on a wider range of datasets, including a more in-depth hyperopt.

Faster training

On 7 out of 12 datasets, GBMs trained faster than NNs. The time it took to train an NN on all datasets was 2.5 times longer than the time it took to train a GBM on the same datasets. In particular, the NN took 10 times as long to train on the BNP claims management dataset and over 5 times as long on the Allstate claims severity dataset. Both model types were trained on a single Ray cluster with 1 head node and 1 worker node, both running m5.2xlarge instances on AWS.

Benefits and limitations

The introduction of GBMs in Ludwig makes it a one-stop shop to declaratively train multiple types of models.

A major benefit is that everything Ludwig offers for NNs is also available for GBMs: preprocessing, hyperparameter optimization using Ray Tune, and interoperability with different data sources (pandas, dask, modin), all declared in the same familiar and concise Ludwig config, as well as Ludwig’s out-of-the-box visualization and serving capabilities.

Moreover, because running experiments with different model types only requires modifying a couple of strings in the configuration, it is easy to compare models fairly, experiment with different preprocessing and training schedules, and find the best hyperparameters.

Choosing GBM as the model type in Ludwig imposes some limitations on the available data types and the number of output targets.

GBMs can only be used for binary, categorical, or regression tasks, and the data types for input and output features are limited to binary, categorical, or numerical data. If your task includes other types of data, such as images, audio, or text, you can use the default Ludwig model type, which is neural networks. Ludwig contains many state-of-the-art NN architectures out of the box.

Additionally, GBM models currently only support a single output feature. If you want to do multi-task learning, where the model makes predictions for multiple output targets, you will need to use neural networks instead.
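These two limitations are easy to check before switching a configuration to `model_type: gbm`. The sketch below is a hypothetical pre-flight check, not Ludwig's own validation; it assumes the feature type names `binary`, `category`, and `number` as they appear in Ludwig configs, and the example config is invented for illustration.

```python
# Feature types assumed to be usable with the GBM model type.
GBM_FEATURE_TYPES = {"binary", "category", "number"}


def gbm_problems(config):
    """Return reasons why a config cannot be trained as a GBM (hypothetical check)."""
    problems = []
    outputs = config.get("output_features", [])
    # GBM models support a single output feature only.
    if len(outputs) != 1:
        problems.append(f"expected exactly one output feature, got {len(outputs)}")
    # Every input and output feature must have a supported type.
    for section in ("input_features", "output_features"):
        for feature in config.get(section, []):
            ftype = feature.get("type")
            if ftype not in GBM_FEATURE_TYPES:
                name = feature.get("name")
                problems.append(f"{section}: {name} has unsupported type {ftype!r}")
    return problems


# Invented example: a text input and two outputs, so it fails both checks.
config = {
    "input_features": [
        {"name": "age", "type": "number"},
        {"name": "review", "type": "text"},
    ],
    "output_features": [
        {"name": "income", "type": "binary"},
        {"name": "occupation", "type": "category"},
    ],
}

for problem in gbm_problems(config):
    print(problem)
```

A config that fails this check can still be trained with the default neural network model type, which handles text inputs and multiple outputs.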

Stay in the loop

We hope you are as excited as we are about how easy it is to use GBM models in Ludwig 0.6 and that you take it for a spin! We are also working on benchmarking this capability on a wider range of datasets, so stay tuned. In the meantime, you can download Ludwig and join our Slack community to connect with Ludwig builders. Happy modeling!

If you are interested in adopting Ludwig in the enterprise, check out Predibase, the declarative ML platform that connects with your data, manages the training, iteration and deployment of your models, and makes them available for querying, reducing time to value of machine learning projects. In Predibase, you can switch between a NN and a GBM with the click of a button.

Don’t want to miss future updates? Join the Ludwig email list.