With over $500M in daily trade volume, Paradigm is one of the world’s largest institutional liquidity networks for cryptocurrencies. Their mission is simple: bring on-demand liquidity for traders, anytime and anywhere without compromises.

Paradigm is on a mission to provide on-demand liquidity for traders with their unified markets platform.

As a leading platform for cryptocurrency options trading, Paradigm faced an operational challenge familiar to anyone who has experienced notification and alert fatigue: traders were receiving too much irrelevant content and had difficulty separating signal from noise. Before adopting Predibase’s solution, market makers on the platform received RFQs (Requests for Quote) in chronological order, without personalized ranking or notifications. As a result, market makers missed highly relevant RFQs, especially when they were away from the platform.

The absence of personalized ranking and the lack of RFQ notifications directly impacted Paradigm's primary objective: increasing trading volume on their platform by improving the response rate of relevant market makers to RFQs.

Solving the trader’s dilemma with personalized recommendations on Snowflake

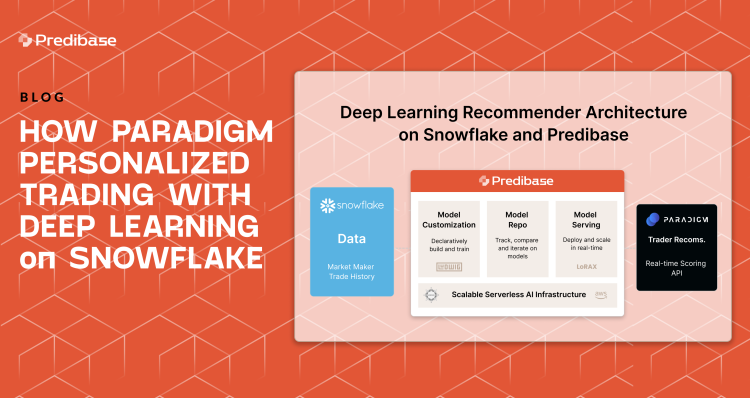

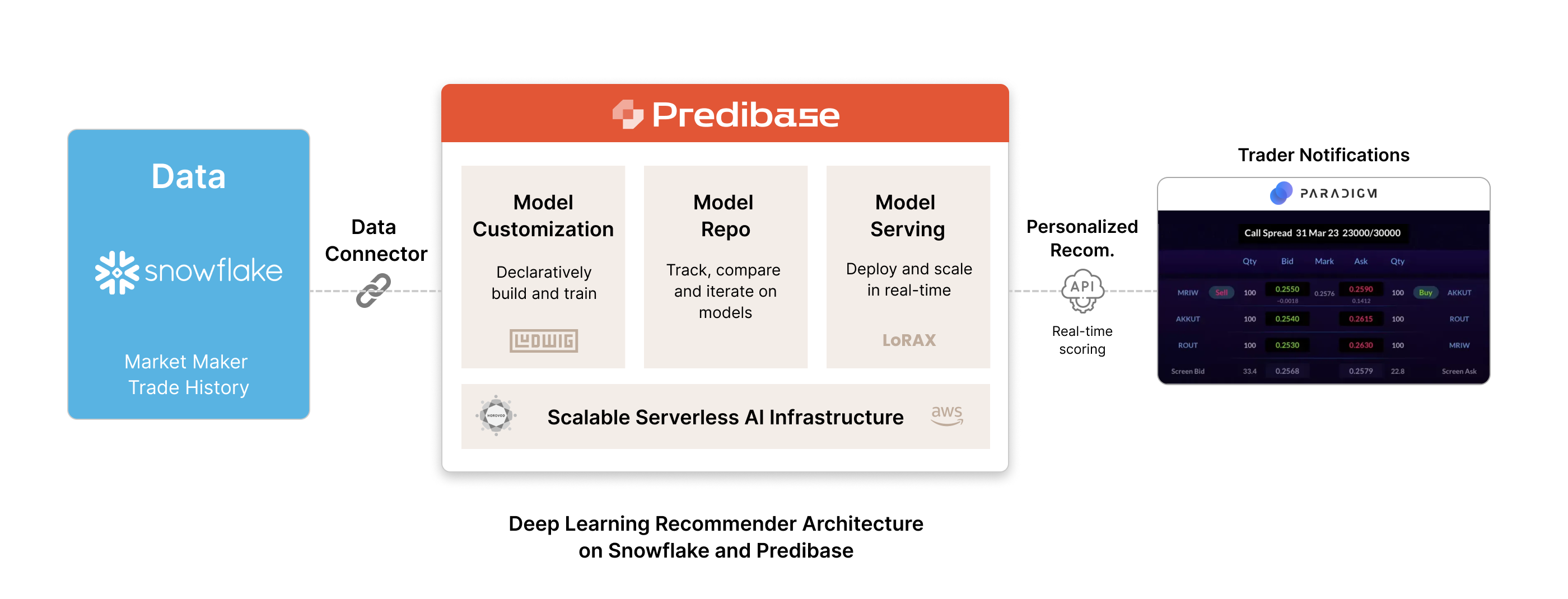

In response to Paradigm's challenge, Predibase collaborated closely with the Paradigm engineering team, leveraging Predibase’s seamless integration with Snowflake to develop a cutting-edge deep learning-based personalization system. Using Predibase’s unique combination of a declarative ML interface with managed model training infrastructure on AWS, Paradigm was able to rapidly build and maintain predictive models that are capable of scoring maker-trade combinations based on historical trading data from their Snowflake Data Cloud.

Paradigm’s high performance production environment connects with Predibase via its built-in model serving API. As new RFQs come in, Paradigm's systems access individualized relevance scores for market makers from Predibase's low-latency gRPC endpoint. This allows Paradigm to send real-time personalized notifications to the most relevant market makers, ensuring that they never miss out on crucial RFQs.
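The notification step described above can be sketched roughly as follows. This is a minimal illustration rather than Paradigm's production code: `score_fn` is a hypothetical stand-in for a call to the model-serving endpoint, and the `threshold` and `top_k` values are assumptions chosen for the example.

```python
from typing import Callable, Dict, List, Tuple


def select_makers_to_notify(
    rfq: Dict[str, object],
    makers: List[str],
    score_fn: Callable[[Dict[str, object], str], float],
    threshold: float = 0.5,
    top_k: int = 3,
) -> List[Tuple[str, float]]:
    """Score every maker for one RFQ and keep the most relevant ones."""
    scored = [(maker, score_fn(rfq, maker)) for maker in makers]
    # Drop makers whose relevance score falls below the threshold.
    relevant = [pair for pair in scored if pair[1] >= threshold]
    # Highest score first, then cap the number of notifications.
    relevant.sort(key=lambda pair: pair[1], reverse=True)
    return relevant[:top_k]


def fake_score(rfq: Dict[str, object], maker: str) -> float:
    # Hypothetical fixed scores standing in for the model's predictions.
    return {"maker_a": 0.91, "maker_b": 0.35, "maker_c": 0.72}.get(maker, 0.0)


rfq = {"CURRENCY": "BTC", "QUANTITY": 250, "STRATEGY": "Vertical spread"}
to_notify = select_makers_to_notify(rfq, ["maker_a", "maker_b", "maker_c"], fake_score)
print(to_notify)
```

Filtering by a threshold before taking the top few keeps low-relevance notifications from going out when an RFQ has no strong matches, which is the alert-fatigue problem the system is meant to address.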

How Paradigm built a recommendation system with Predibase and Snowflake

What’s an RFQ?

A market maker is a financial institution that provides liquidity in the market by continuously quoting prices at which it is willing to buy and sell securities. A Request for Quote (RFQ; see the Paradigm docs) is a process in which market participants request quotes from potential market makers for specific transactions, such as bespoke crypto option trades. On Paradigm, market makers respond to RFQs with quotes and play a pivotal role in facilitating these trades by providing liquidity.

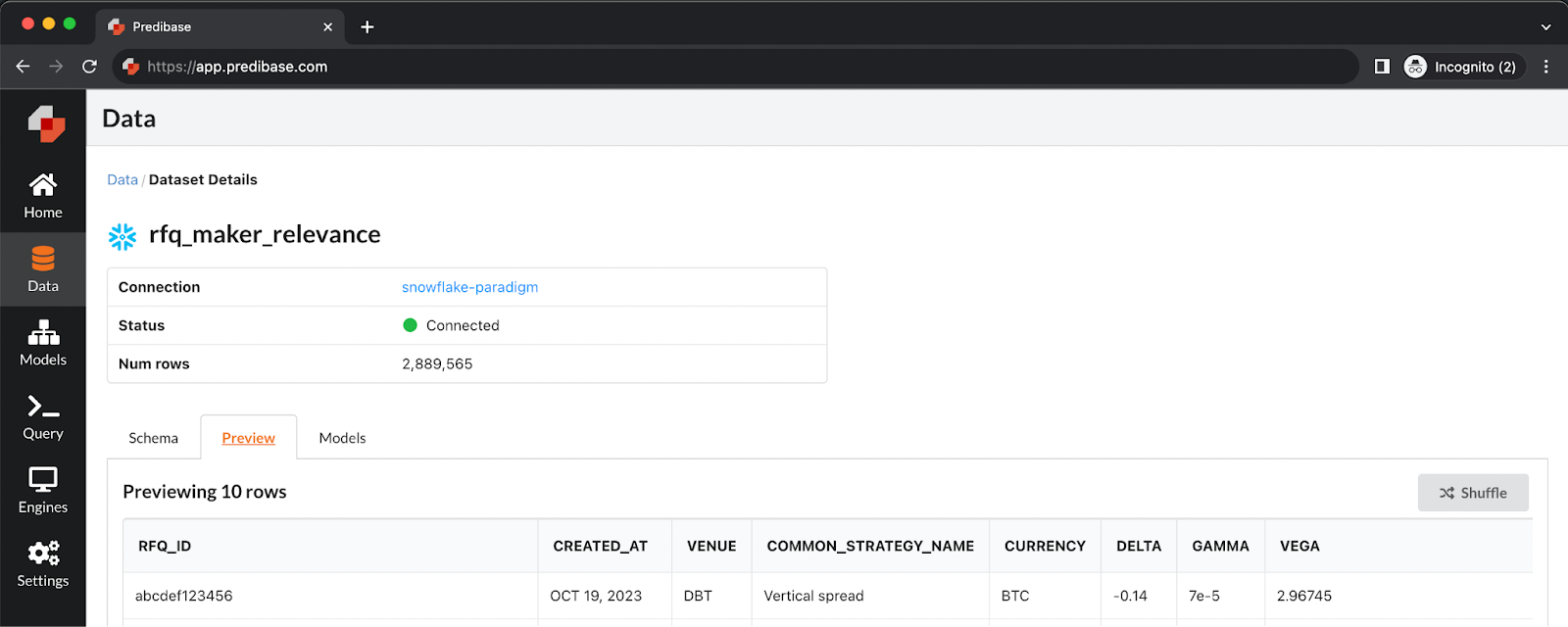

Connecting Predibase to Snowflake to build predictive models

Paradigm wanted to use historical market maker and RFQ data stored in Snowflake to create a model that predicts the likelihood that a market maker will quote and trade a given derivative. Paradigm has millions of records in Snowflake that can be used to train their ML model, and connecting to their Snowflake warehouse took only a couple of seconds using Predibase’s easy-to-use data connections interface and out-of-the-box integration with Snowflake.

The Snowflake dataset connected inside Predibase.

To build the model, Paradigm leveraged a number of input features about the trade like currency, quantity, venue, strategy and Greeks, as well as features about the user (market maker) like a unique identifier and activity score.

| CURRENCY | QUANTITY | VENUE | STRATEGY | DELTA | GAMMA | VEGA | THETA | RHO | MAKER | ACTIVITY SCORE | QUOTED | TRADED |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| BTC | 250 | DBT | Vertical spread | -0.14 | 7e-5 | 2.96745 | -21.664 | -0.4 | fegdhi6789 | high | false | false |

Choosing the right deep learning approach for Paradigm

Using millions of these trade-maker pairs and the corresponding “did quote” and “did trade” labels, Paradigm trained a deep learning recommender system on Predibase with a couple of clicks and a few lines of code. Predibase has built-in support for recommender systems through Ludwig’s Comparator combiner, a specialized trainable module that compares the embeddings of two entities, here trade and maker, to determine the likelihood of an interaction (quote or trade). This architecture, which matches entity and user embeddings to score relevance, has also been used in other leading recommender systems, such as the UberEats personalization system.
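To make the idea of comparing two entity embeddings more concrete, here is a simplified sketch in plain Python. It is not Ludwig's implementation: the embeddings and weights are random placeholders (in a trained model they would be learned), the function names are hypothetical, and the comparison features shown (dot product, elementwise product, absolute difference) are only one plausible choice.

```python
import math
import random
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def compare_embeddings(trade: Vector, maker: Vector) -> Vector:
    """Build comparison features from two embeddings of the same size."""
    elementwise = [x * y for x, y in zip(trade, maker)]
    abs_diff = [abs(x - y) for x, y in zip(trade, maker)]
    return [dot(trade, maker)] + elementwise + abs_diff


def interaction_probability(features: Vector, weights: Vector, bias: float) -> float:
    """Logistic output layer: maps comparison features to a probability."""
    return 1.0 / (1.0 + math.exp(-(dot(features, weights) + bias)))


random.seed(0)
dim = 4
trade_emb = [random.uniform(-1, 1) for _ in range(dim)]  # placeholder trade embedding
maker_emb = [random.uniform(-1, 1) for _ in range(dim)]  # placeholder maker embedding
features = compare_embeddings(trade_emb, maker_emb)
weights = [random.uniform(-1, 1) for _ in features]  # untrained, random weights
p_quote = interaction_probability(features, weights, bias=0.0)
print(len(features), round(p_quote, 3))
```

In a setup like the one described, one such output would be produced per label, so the same trade-maker comparison can feed both the "did quote" and "did trade" predictions.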

Building the end-to-end recommender model with a few lines of YAML

Predibase’s declarative approach to model building makes it easy for any engineer to do the work of an ML engineer. End-to-end deep learning pipelines, from preprocessing through training to post-processing, can be built using just a handful of lines of YAML configuration. The configuration specifies the inputs to your model, the output(s) you’d like to predict, and any training settings you’d like to control, such as learning rate or batch size. Predibase handles all the boilerplate code behind the scenes, so you can declaratively customize what you want and let the system automate the rest.

For example, the configuration below defines the model pipeline for the comparator combiner architecture described above, which Paradigm implemented for their custom recommender model. Their configuration also sets a dropout parameter to help prevent overfitting during training.

Example model configuration:

    input_features:
      - name: CURRENCY
        type: category
      - name: QUANTITY
        type: number
      - name: VENUE
        type: category
      - name: STRATEGY
        type: category
      - name: DELTA
        type: number
      - name: GAMMA
        type: number
      - name: VEGA
        type: number
      - name: THETA
        type: number
      - name: RHO
        type: number
      - name: MAKER
        type: category
      - name: MAKER_ACTIVITY_SCORE
        type: category
    output_features:
      - name: QUOTED
        type: binary
      - name: TRADED
        type: binary
        dependencies:
          - QUOTED
    preprocessing:
      split:
        type: fixed
    combiner:
      type: comparator
      dropout: 0.1
      entity_1:
        - CURRENCY
        - QUANTITY
        - VENUE
        - STRATEGY
        - DELTA
        - GAMMA
        - VEGA
        - THETA
        - RHO
      entity_2:
        - MAKER
        - MAKER_ACTIVITY_SCORE

Paradigm sample YAML
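Because the configuration is plain data, it can be checked for internal consistency before a training job is submitted. The sketch below mirrors the configuration above as a Python dictionary and runs a few sanity checks. The checks and the `find_config_problems` helper are assumptions made for illustration; they are not part of Predibase or Ludwig, which perform their own validation.

```python
from typing import Dict, List

INPUTS = {
    "CURRENCY": "category", "QUANTITY": "number", "VENUE": "category",
    "STRATEGY": "category", "DELTA": "number", "GAMMA": "number",
    "VEGA": "number", "THETA": "number", "RHO": "number",
    "MAKER": "category", "MAKER_ACTIVITY_SCORE": "category",
}

config = {
    "input_features": [{"name": n, "type": t} for n, t in INPUTS.items()],
    "output_features": [
        {"name": "QUOTED", "type": "binary"},
        {"name": "TRADED", "type": "binary", "dependencies": ["QUOTED"]},
    ],
    "preprocessing": {"split": {"type": "fixed"}},
    "combiner": {
        "type": "comparator",
        "dropout": 0.1,
        "entity_1": ["CURRENCY", "QUANTITY", "VENUE", "STRATEGY",
                     "DELTA", "GAMMA", "VEGA", "THETA", "RHO"],
        "entity_2": ["MAKER", "MAKER_ACTIVITY_SCORE"],
    },
}


def find_config_problems(cfg: Dict) -> List[str]:
    """Return a list of human-readable inconsistencies (empty if none)."""
    problems = []
    inputs = {f["name"] for f in cfg["input_features"]}
    outputs = {f["name"] for f in cfg["output_features"]}
    entity_1 = set(cfg["combiner"]["entity_1"])
    entity_2 = set(cfg["combiner"]["entity_2"])

    for name in sorted((entity_1 | entity_2) - inputs):
        problems.append(f"entity feature {name} is not an input feature")
    for name in sorted(entity_1 & entity_2):
        problems.append(f"{name} appears in both entities")
    for name in sorted(inputs - (entity_1 | entity_2)):
        problems.append(f"input feature {name} is not assigned to an entity")
    for feature in cfg["output_features"]:
        for dep in feature.get("dependencies", []):
            if dep not in outputs or dep == feature["name"]:
                problems.append(f"{feature['name']} depends on unknown output {dep}")
    return problems


problems = find_config_problems(config)
print(problems or "config looks consistent")
```

Here `entity_1` groups the trade features and `entity_2` groups the maker features, and `TRADED` depends on `QUOTED`, which matches the intuition that a maker must quote before a trade can happen.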

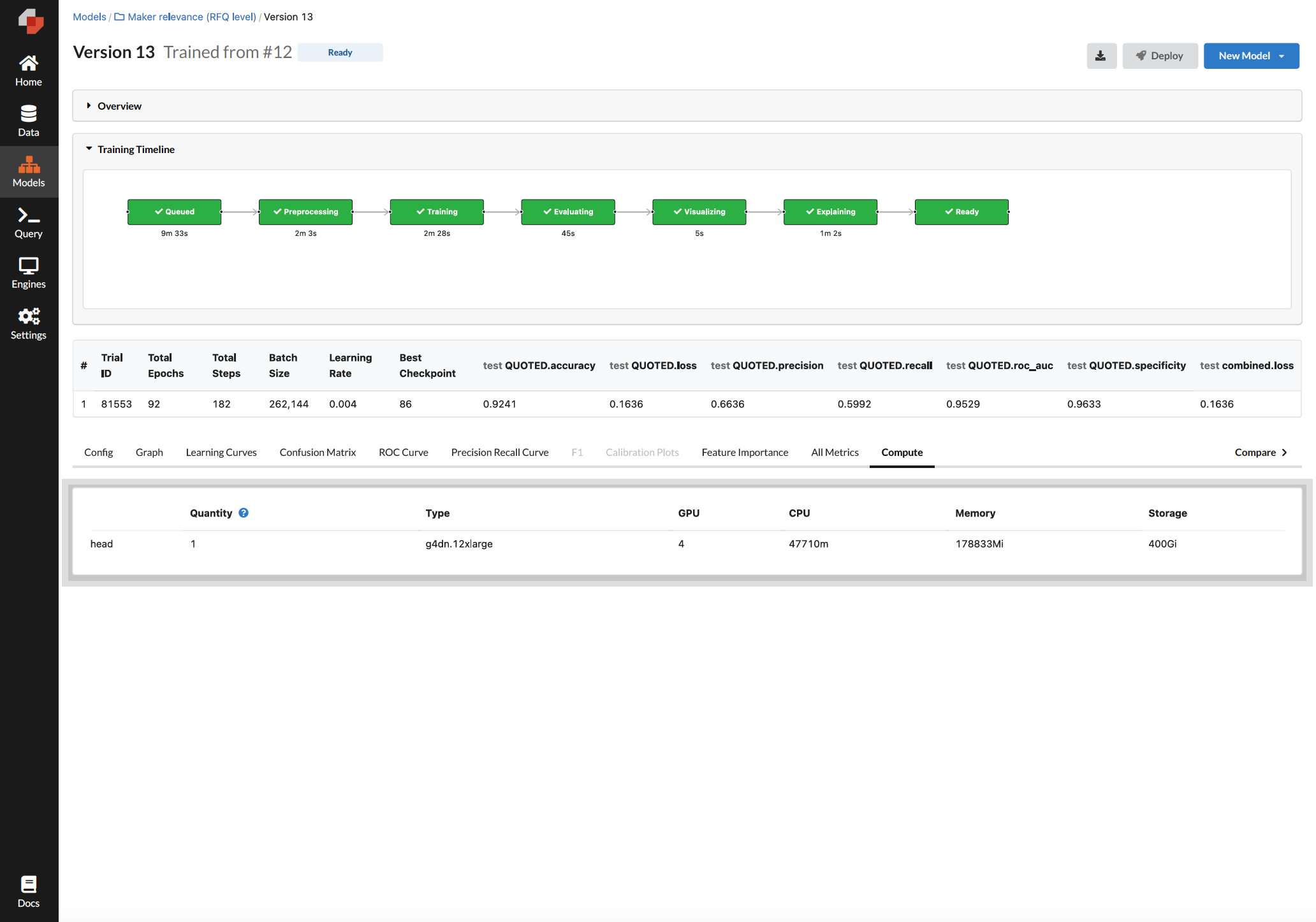

Once you kick off a model training job inside Predibase, you get a dashboard covering the entire process and all available metrics. Predibase also right-sizes the compute for your task (both GPUs and CPUs) based on the dataset you’ve connected and the model architecture you’ve chosen. This made it easy for a single engineer at Paradigm to train their model in minutes, without spending hours or days building custom infrastructure for distributed training.

Monitoring Paradigm's model training in the Predibase platform.

Results from Paradigm’s model training

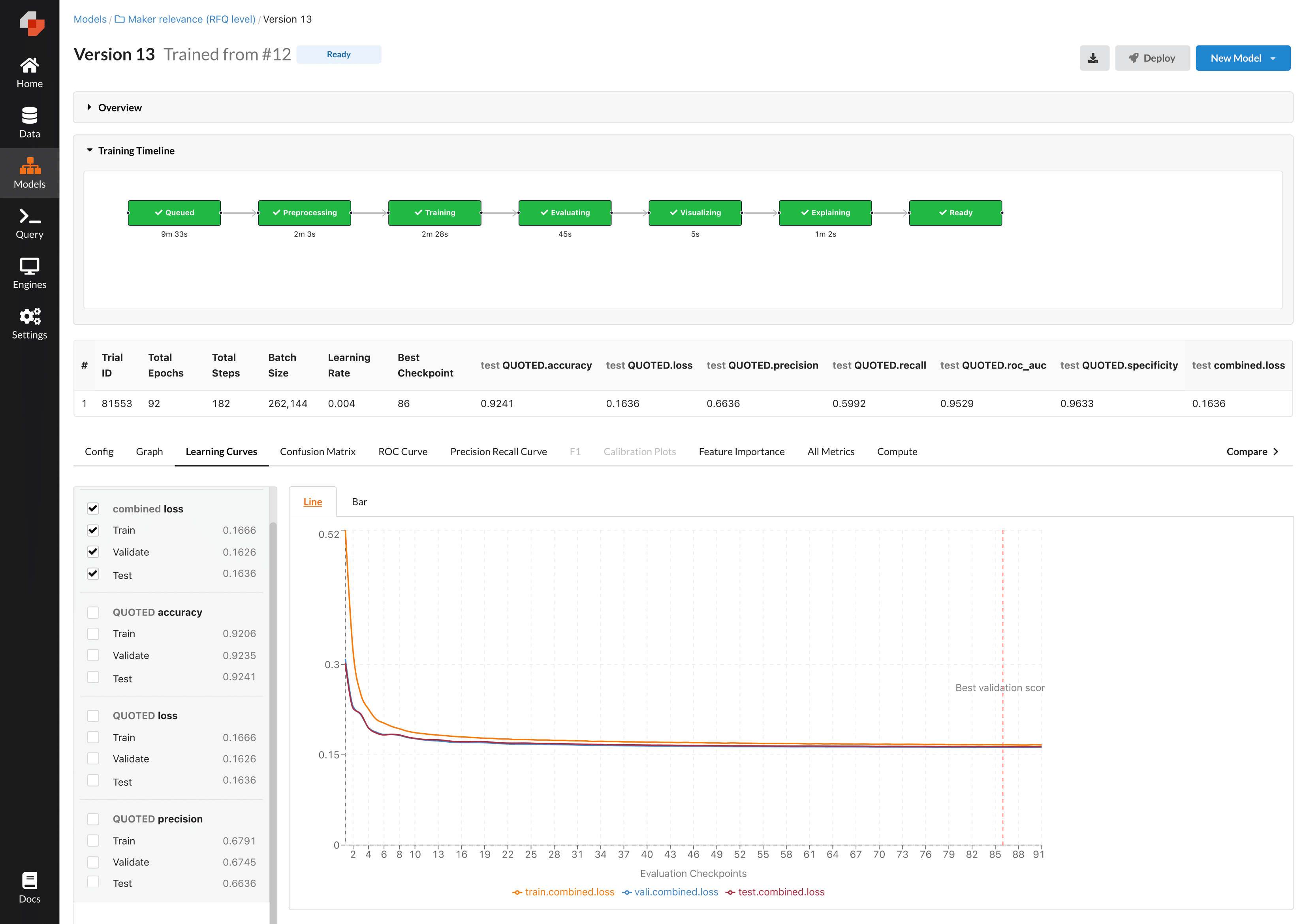

Once the model was trained, Paradigm was able to quickly review key performance metrics for their experiments using a series of dashboards automatically generated by Predibase. The dashboards include aggregate metrics such as loss, accuracy, precision/recall, F1 score, and more. Predibase also handles splitting the dataset into training, validation, and test sets and reports metrics for each split.
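For readers less familiar with these metrics, the sketch below shows how accuracy, precision, recall, and F1 are computed for a binary label such as "did quote". The labels and predictions are made-up values for illustration, not Paradigm's data, and the helper name is hypothetical.

```python
from typing import Dict, List


def binary_metrics(y_true: List[bool], y_pred: List[bool]) -> Dict[str, float]:
    """Compute accuracy, precision, recall, and F1 for binary predictions."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t and p for t, p in pairs)            # predicted quote, did quote
    fp = sum((not t) and p for t, p in pairs)      # predicted quote, did not
    fn = sum(t and (not p) for t, p in pairs)      # missed a real quote
    tn = sum((not t) and (not p) for t, p in pairs)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up labels: did the maker actually quote, and did the model predict it?
y_true = [True, True, False, False, True, False, False, False]
y_pred = [True, False, False, False, True, True, False, False]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

In this toy example, accuracy is 0.75 while precision, recall, and F1 are each 2/3. When most trade-maker pairs are negatives, accuracy alone can look strong, so precision and recall are worth reading alongside it.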

In Paradigm’s case, the model operated at over 92% accuracy on the test set, and started to converge to an optimal set of results after just a couple dozen epochs of training. After training, Paradigm computed metrics on a held-out test set by using Predibase’s native batch prediction for offline evaluation of Snowflake data. Once Paradigm validated that the model was performing at an acceptable level, they were able to use Predibase’s deployment capabilities to seamlessly integrate it into their application.

Evaluating Paradigm's model performance in the Predibase platform.

Prepping Paradigm’s recommender for production

With Predibase’s managed infrastructure for model serving, Paradigm can deploy models with a few lines of YAML. Once in production, Paradigm can query a hosted endpoint for streaming inference and send personalized notifications to their market makers in real time. Whether they’re away from the keyboard or simply not actively monitoring Paradigm, traders won’t miss another relevant opportunity. The production model is kept up to date by automatically retraining on Predibase as new labeled data arrives in Snowflake. Additionally, Predibase’s SDK makes it easy to set up custom retraining policies based on triggers such as model drift.
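As an illustration of what a drift-based retraining trigger might look like, the sketch below compares the distribution of recent model scores against a reference window using the population stability index (PSI). This does not use the Predibase SDK, whose retraining API is not shown here; the function names, the five-bin histogram, and the 0.2 threshold (a common rule of thumb) are all assumptions.

```python
import math
from typing import List, Sequence


def histogram(scores: Sequence[float], bins: int) -> List[float]:
    """Share of scores falling in each equal-width bin over [0, 1]."""
    counts = [0] * bins
    for s in scores:
        index = min(int(s * bins), bins - 1)  # a score of 1.0 goes in the last bin
        counts[index] += 1
    return [c / len(scores) for c in counts]


def population_stability_index(
    reference: Sequence[float], recent: Sequence[float], bins: int = 5, eps: float = 1e-6
) -> float:
    """PSI between two score samples; 0 means identical binned distributions."""
    ref = histogram(reference, bins)
    new = histogram(recent, bins)
    # eps avoids log(0) when a bin is empty in one of the samples.
    return sum((n - r) * math.log((n + eps) / (r + eps)) for r, n in zip(ref, new))


def should_retrain(
    reference: Sequence[float], recent: Sequence[float], threshold: float = 0.2
) -> bool:
    return population_stability_index(reference, recent) > threshold


reference_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
shifted_scores = [0.8, 0.85, 0.9, 0.95, 0.9, 0.88, 0.92, 0.97, 0.99]
print(should_retrain(reference_scores, reference_scores))
print(should_retrain(reference_scores, shifted_scores))
```

A check like this could run on a schedule over recent predictions, with a retraining job started only when the score distribution has moved noticeably from the one seen at training time.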

Conclusion and future of ML at Paradigm

Paradigm's deep learning recommender architecture built on Predibase and Snowflake.

By adopting Predibase, Paradigm was able to build a deep learning-based recommendation system for their traders on top of their existing Snowflake Data Cloud. Market makers can now be notified of the most relevant RFQs, improving the trader experience and growing platform engagement.

Anand Gomes, CEO of Paradigm, expressed satisfaction with the outcome: "The time it takes to build production models on top of Snowflake has been reduced from months to minutes and at a fraction of the cost. With Predibase, we’ve built powerful in-platform intelligence that helps our customers gain an edge."

Beyond RFQ personalization, Paradigm is exploring additional use cases for Predibase, including intelligent order book seeding and LLM (large language model) fine-tuning, demonstrating the versatility of the platform.