With the recent rise of open-source vision-language models (VLMs), machine learning enthusiasts have begun exploring new use cases for them. Instruction-tuned models such as Llama-3.2-11B-Vision-Instruct deliver impressive results out of the box, but our prior work with LLMs highlights the potential of fine-tuning to further improve model quality.

What are VLMs?

VLMs are a fascinating type of multimodal model that combines text and image processing to understand and generate outputs based on both. In simple terms, they take images and text as input and produce text as output, making them powerful tools for tasks such as image captioning, document understanding, and visual question answering.

One of the most exciting aspects of large VLMs is their strong zero-shot capabilities. They’re able to generalize well to new situations without needing additional training. They can even capture detailed spatial relationships within images. This means they can identify and locate objects and answer questions about the locations of entities within the visual content.

With a broad set of potential uses, VLMs have unlocked new possibilities in areas such as chatting about images, recognizing elements in pictures by following instructions, and producing in-depth descriptions of visual content.

The fine-tuning opportunity and challenge

Fine-tuning can be very effective, but comes with its own set of challenges. Most engineers and data scientists attempting to fine-tune models quickly realize that productionizing open-source models is harder than it seems. Even with LLMs, we saw three primary challenges that teams struggle with:

- Complex Tooling: staying abreast of the latest fine-tuning techniques from research (e.g. Turbo LoRA), learning how to implement them, and then stitching together various open-source tools and frameworks is cumbersome for any individual.

- Unreliable Fine-Tuning: high-end GPUs (like A100s) are in short supply. As a result, most teams are left renting commodity GPUs that they struggle to use effectively. This leads to frequent out-of-memory errors, among other failures, that bring training to a halt.

- Costly Model Serving: building a production system that meets SLAs and scales efficiently for a growing set of fine-tuned task-specific models requires deep expertise in building serving infra, which frankly is out of reach for most engineering teams.

With VLMs, even more difficulties arise:

- Inconsistent Expected Inputs: Once the raw text and images are processed into tokenized model inputs, those inputs must contain every field the model expects. For example, fine-tuning Llama-3.2-11B-Vision-Instruct requires a properly formatted cross-attention mask to work as expected.

- Lack of Standardized Model Structure: The number and types of layers differ from model to model, which makes VLM serving a particularly complex problem. Without standardization, each VLM requires a custom serving solution.
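To make the point about expected inputs concrete, here is a minimal sketch of a pre-flight check on a processed batch. The key names are what we understand the Hugging Face processor for Llama-3.2-Vision to return; treat that list, and the helper name `missing_model_inputs`, as assumptions for illustration rather than part of any Predibase API.

```python
# Hypothetical pre-flight check: confirm a processed batch carries every
# input the model expects before it reaches the training loop.

# Assumed key names for Llama-3.2-Vision inputs; verify against your processor.
REQUIRED_KEYS = (
    "input_ids",
    "attention_mask",
    "pixel_values",
    "aspect_ratio_ids",
    "aspect_ratio_mask",
    "cross_attention_mask",
)


def missing_model_inputs(batch, required=REQUIRED_KEYS):
    """Return the required keys that are absent (or None) in `batch`."""
    return [key for key in required if batch.get(key) is None]


# Example: a text-only batch is missing every image-related input.
text_only = {"input_ids": [[1, 2, 3]], "attention_mask": [[1, 1, 1]]}
print(missing_model_inputs(text_only))
```

A check like this fails fast with a readable message instead of surfacing later as a shape or attention error deep inside the model.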

With Predibase, training and deploying your VLM has never been easier. We take care of all these complex, low-level details so you don’t have to. Our data preprocessing pipeline ensures your data is perfectly formatted for fine-tuning, and LoRAX handles serving seamlessly. Plus, Predibase is the only platform that offers instruction-based fine-tuning for VLMs, setting us apart from the common completion-based approaches. We've designed our fine-tuning framework to be fast, effective, and user-friendly, so you can focus on building powerful models without the hassle.

Results of Fine-Tuning

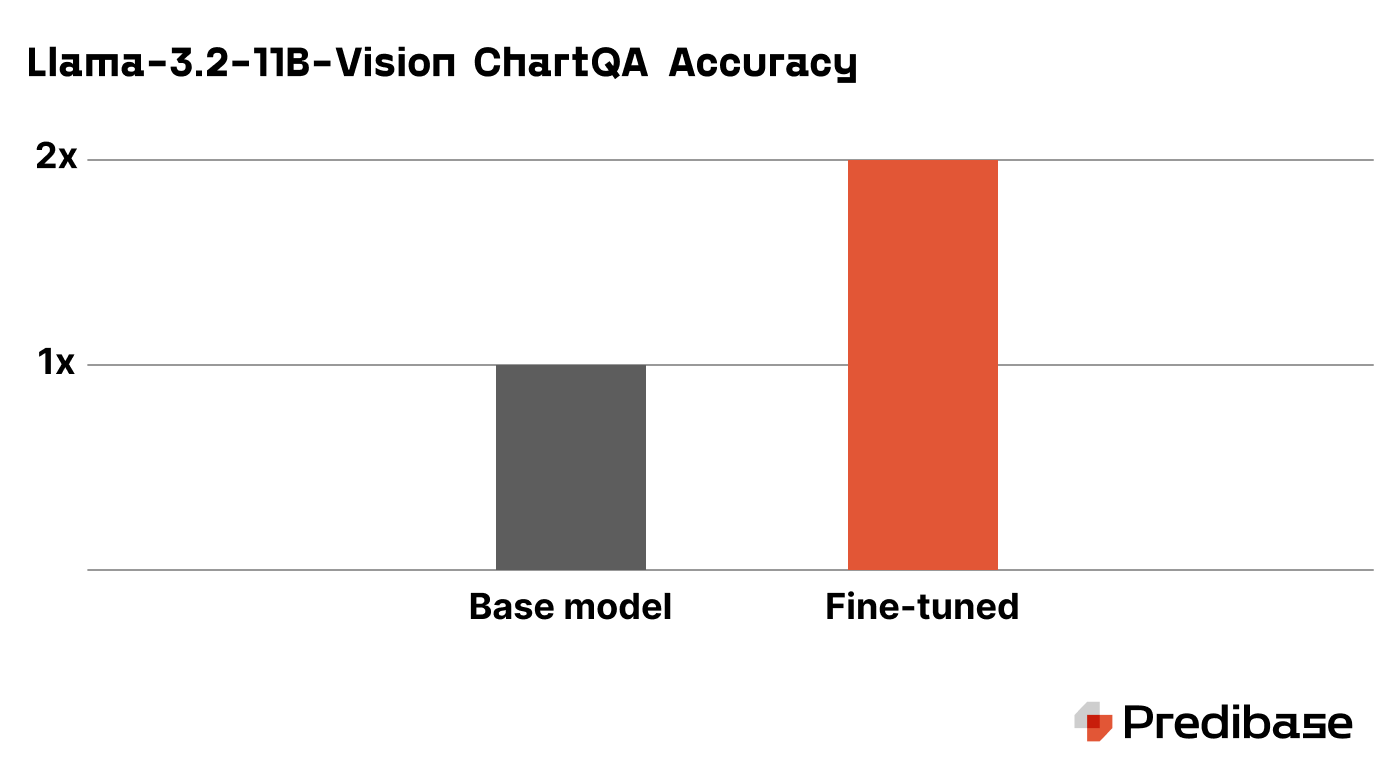

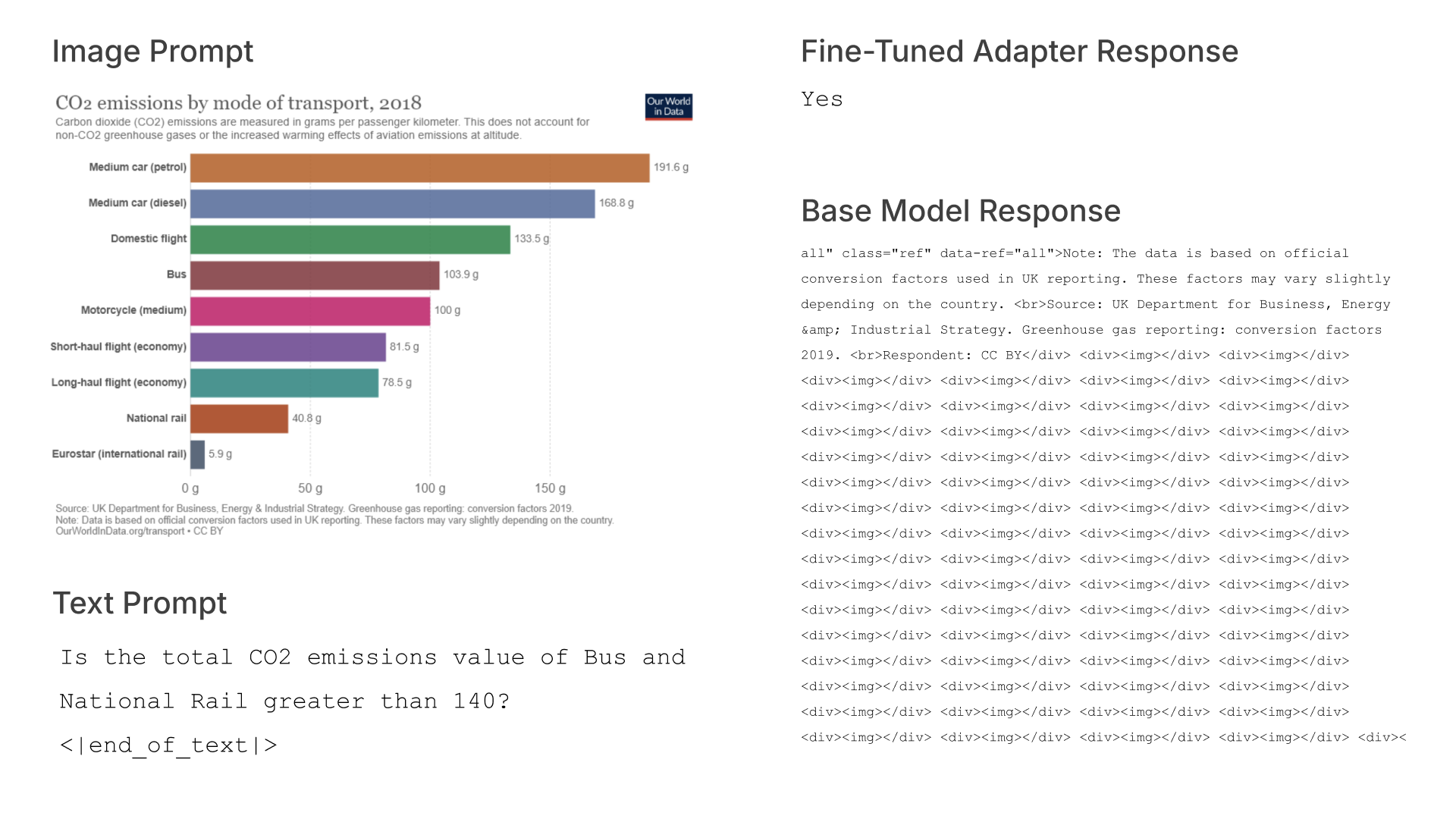

We fine-tuned a Llama-3.2-11B-Vision adapter on just 300 rows of the ChartQA dataset and got a 2x improvement on accuracy:

Here’s a side-by-side comparison of the performance:

After fine-tuning, our adapter ends up being far more concise and accurate than the base model.
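If you want to run a comparison like this on your own adapter, the sketch below shows one way to score predictions. It uses the relaxed-match convention commonly applied to ChartQA (numeric answers within 5% of the target count as correct, everything else needs an exact match). Whether this is the exact metric behind the numbers above is an assumption, and `relaxed_match` and `accuracy` are illustrative names.

```python
def _to_float(text):
    """Parse a numeric answer such as '12.5' or '40%'; return None if not numeric."""
    cleaned = text.strip().rstrip("%").replace(",", "")
    try:
        return float(cleaned)
    except ValueError:
        return None


def relaxed_match(prediction, target, tolerance=0.05):
    """Numeric answers match within `tolerance`; others need a case-insensitive exact match."""
    pred_num, target_num = _to_float(prediction), _to_float(target)
    if pred_num is not None and target_num is not None:
        if target_num == 0:
            return pred_num == 0
        return abs(pred_num - target_num) / abs(target_num) <= tolerance
    return prediction.strip().lower() == target.strip().lower()


def accuracy(predictions, targets):
    """Fraction of predictions that relaxed-match their targets."""
    pairs = list(zip(predictions, targets))
    return sum(relaxed_match(p, t) for p, t in pairs) / len(pairs)


# Two of these three made-up predictions match their targets.
print(accuracy(["41", "Blue", "7"], ["40", "blue", "10"]))
```

Scoring the base model and the adapter with the same function on the same held-out rows keeps the comparison fair.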

Fine-tuning VLMs the easy way with Predibase

Predibase makes fine-tuning easy for any engineer and solves the challenges that teams frequently face by:

- Replacing the need for fine-tuning code with a declarative approach: Instead of having to learn the ins and outs of fine-tuning a model with code, we allow users to specify model configurations that establish how training will take place. This makes it easy to iterate on your fine-tuning journey, try different parameter-efficient strategies, and experiment with different datasets or parameters, all with just a few clicks of your mouse.

- Dynamically serving 100s of fine-tuned models for the price of one: Predibase’s scalable serving infra automatically scales up and down to meet the demands of any production environment. On top of that, we implement a novel serving architecture called LoRA Exchange (LoRAX). This approach allows your team to serve many fine-tuned LLMs together for over 100x cost reduction versus dedicated deployments. Fine-tuned models can be loaded and queried in seconds.
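Part of why many adapters can share one deployment is that a LoRA adapter is tiny compared with the base model. As a rough illustration, a rank-r adapter on a weight matrix of shape d_out × d_in adds r · (d_in + d_out) parameters. The layer shape below is a made-up round number chosen for the arithmetic, not a real Llama-3.2-11B-Vision dimension.

```python
def lora_param_count(d_in, d_out, rank):
    """Parameters added by one LoRA pair: A is (rank x d_in), B is (d_out x rank)."""
    return rank * (d_in + d_out)


# Hypothetical square projection layer, chosen only for illustration.
d_in, d_out, rank = 4096, 4096, 16

full = d_in * d_out
adapter = lora_param_count(d_in, d_out, rank)

print(f"full matrix: {full:,} params")
print(f"LoRA update: {adapter:,} params ({adapter / full:.2%} of full)")
```

Because each adapter is this small, swapping adapters in and out on top of a single copy of the base weights is much cheaper than running one dedicated deployment per fine-tuned model.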

Two Easy Steps to Fine-Tune Llama-3.2-11B-Vision in Predibase

1. Format and connect your dataset

First, make sure that your data is formatted and connected properly. We’re going to be using https://huggingface.co/datasets/lmms-lab/ChartQA for our dataset in this demo.

Let’s start with isolating the prompt, completion, and image from this dataset:

    def process_chartqa(row):
        # Keep only the fields needed for fine-tuning
        prompt = row["question"]
        completion = row["answer"]
        image = row["image"]
        return {"prompt": prompt, "completion": completion, "images": image}

Then, we’ll need to convert the images to bytes. The function provided is a general-purpose function that can be used on a large number of datasets:

    def to_bytes(image_url):
        # Unwrap common containers: a list/array of images, or a dict holding raw bytes
        if isinstance(image_url, (list, np.ndarray)):
            image_url = image_url[0]
        if isinstance(image_url, dict):
            image_url = image_url["bytes"]
        # Already raw bytes: nothing to convert
        if isinstance(image_url, bytes):
            return image_url
        if isinstance(image_url, str):
            # Download the image and normalize it to RGB
            image = Image.open(requests.get(image_url, stream=True).raw)
            image = image.convert("RGB")
        else:
            # Otherwise assume a PIL image was passed in directly
            image = image_url
        # Serialize the image as PNG bytes
        img_byte_array = io.BytesIO()
        image.save(img_byte_array, format="PNG")
        return img_byte_array.getvalue()

Finally, we will format the text to accommodate the image:

    def format_row(row):
        prompt = row["prompt"]
        # Prepend the processor's image token so the model knows where the image goes
        messages = [
            {
                "role": "user",
                "content": [
                    {
                        "type": "text",
                        "text": processor.image_token + prompt,
                    },
                ],
            }
        ]
        try:
            formatted_prompt = processor.apply_chat_template(
                messages, tokenize=False, add_generation_prompt=False
            )
        except Exception:
            # Fall back to a plain prompt if the chat template cannot be applied
            formatted_prompt = processor.image_token + prompt + processor.tokenizer.eos_token

        completion = row["completion"]
        image_bytes = to_bytes(row["images"])
        return {"prompt": formatted_prompt, "completion": completion, "images": image_bytes}

With these three functions defined, your code for downloading and formatting your dataset should look something like this:

    import io

    import datasets
    import numpy as np
    import pandas as pd
    import requests
    from PIL import Image
    from transformers import AutoProcessor

    # process_chartqa, to_bytes, and format_row are the functions defined above

    processor = AutoProcessor.from_pretrained("meta-llama/Llama-3.2-11B-Vision-Instruct")

    # Take the first 300 rows of the ChartQA test split
    dataset = datasets.load_dataset("lmms-lab/ChartQA", split="test").select(range(300))
    dataset = dataset.to_pandas()

    print("Processing dataset...")
    dataset: pd.DataFrame = dataset.apply(process_chartqa, axis=1, result_type="expand")

    print("Formatting rows...")
    dataset: pd.DataFrame = dataset.apply(format_row, axis=1, result_type="expand")

    dataset.to_csv("vlm_dataset.csv")

Because VLM datasets can be large, we highly recommend that you first upload this data to S3 before connecting it to Predibase via the UI.
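One detail worth knowing about the CSV step: image bytes written to a CSV cell are stored as the text of a Python bytes literal, so they have to be parsed back into real bytes when the file is read. The sketch below demonstrates that round trip using only the standard library. The tiny payload stands in for real PNG bytes, an in-memory buffer stands in for `vlm_dataset.csv`, and we are assuming that pandas’ `to_csv` stringifies bytes cells the same way the `csv` module does.

```python
import ast
import csv
import io

# Stand-in for real image bytes (just the 8-byte PNG signature).
image_bytes = b"\x89PNG\r\n\x1a\n"

# Write one row to an in-memory CSV; non-string cells are converted with str().
buffer = io.StringIO(newline="")
writer = csv.writer(buffer)
writer.writerow(["prompt", "completion", "images"])
writer.writerow(["What is shown?", "A bar chart", image_bytes])

# Read it back: the image cell is now text that looks like a bytes literal.
buffer.seek(0)
rows = list(csv.DictReader(buffer))
cell = rows[0]["images"]
print(type(cell).__name__, cell)

# ast.literal_eval safely turns that text back into the original bytes.
restored = ast.literal_eval(cell)
print(restored == image_bytes)
```

`ast.literal_eval` only evaluates Python literals, which makes it a safer choice than `eval` for parsing data read from a file.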

2. Launch the training job

After your dataset has been uploaded to Predibase, you can simply fine-tune on it using the following code:

    from predibase import Predibase

    pb = Predibase(api_token="<PREDIBASE API TOKEN>")

    pb_dataset = "vlm_dataset"  # This is the name of your VLM dataset in Predibase

    config = {
        "base_model": "meta-llama/Llama-3.2-11B-Vision-Instruct",
        "epochs": 3,
        "rank": 16,
        "learning_rate": 0.0002,
        "target_modules": ["q_proj", "v_proj", "k_proj", "o_proj", "up_proj", "down_proj", "gate_proj", "fc1", "fc2"],
    }

    adapter = pb.finetuning.jobs.create(
        config=config,
        dataset=pb_dataset,
        repo="predibase_vlm",
        description="I fine-tuned a VLM!",
    )

Running Inference on Your Fine-Tuned VLM

Inference on your VLM in Predibase is nearly identical to inference on an LLM. The only major difference lies in how we format our inputs. Note how we add the image into the text input below.

First, you can use the “to_bytes” function from above to convert an image from a URL/numpy array/etc. to a byte string:

    def to_bytes(image_url):
        # Unwrap common containers: a list/array of images, or a dict holding raw bytes
        if isinstance(image_url, (list, np.ndarray)):
            image_url = image_url[0]
        if isinstance(image_url, dict):
            image_url = image_url["bytes"]
        # Already raw bytes: nothing to convert
        if isinstance(image_url, bytes):
            return image_url
        if isinstance(image_url, str):
            # Download the image and normalize it to RGB
            image = Image.open(requests.get(image_url, stream=True).raw)
            image = image.convert("RGB")
        else:
            # Otherwise assume a PIL image was passed in directly
            image = image_url
        # Serialize the image as PNG bytes
        img_byte_array = io.BytesIO()
        image.save(img_byte_array, format="PNG")
        return img_byte_array.getvalue()

Then, you can add it to your prompt by converting it to a base64-encoded string and querying your model as shown below:

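    import ast
    import base64

    from predibase import Predibase

    pb = Predibase(api_token="<PREDIBASE API TOKEN>")

    # `image` is the byte string from above. If it was read back from the CSV,
    # it is the text of a bytes literal, so parse it into real bytes first.
    if isinstance(image, str):
        image = ast.literal_eval(image)

    encoded_byte_string = base64.b64encode(image).decode()

    # Embed the image in the prompt as a base64 data URI (assumed markdown image
    # syntax; confirm the exact format in the Predibase docs)
    prompt = f"![](data:image/png;base64,{encoded_byte_string}) What is this an image of?"

    lorax_client = pb.deployments.client("llama-3-2-11b-vision-instruct")

    # Replace "my-repo/1" with your own adapter repo name and version
    print(lorax_client.generate(prompt, adapter_id="my-repo/1", max_new_tokens=100).generated_text)

Start Your Customized Llama-3.2-Vision-Instruct with Predibase’s Free Trial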

As you can see, Predibase makes it very easy to go from fine-tuning datasets to serving fine-tuned models using just a few commands in the Predibase SDK!

If you’re interested in fine-tuning and serving specialized LLMs, be sure to check out Predibase and sign up for a 30-day free trial to get started fine-tuning for free. And make sure to join the community on Discord.