Authors: Anne Holler, Avanika Narayan, Justin Zhao, Shreya Rajpal, Daniel Treiman, Devvret Rishi, Travis Addair, Piero Molino

Ludwig v0.4.1 introduces experimental AutoML with auto config generation integrated with hyperopt on Ray Tune

INTRODUCTION

Ludwig is an open-source declarative deep learning (DL) framework that enables individuals from a variety of backgrounds to train and deploy state-of-the-art tabular, natural language processing, and computer vision models. Ludwig serves as a toolkit for end-to-end machine learning, through which users can experiment with different model hyperparameters using Ray Tune, scale up to large out-of-memory datasets and multi-node clusters using Horovod and Ray, and serve a model in production using MLflow. The model architecture, training loop, hyperparameter search range, and backend infrastructure are specified in a YAML file using Ludwig’s declarative interface, which eliminates the need to write code. By abstracting away the barriers to training and deploying DL models, Ludwig makes developing DL models a simple iterative process.

Currently Ludwig requires that users write a model configuration from scratch: specifying input and output features and types, choosing a model architecture, and describing the training loop as well as the hyperparameter search strategy. Hence, users need to have some familiarity with the attributes of various deep learning models and the effects of training parameters (e.g., learning rate) and model parameters (e.g., model size) on model performance. This knowledge can take significant time to acquire and is typically domain-specific.

To reduce this learning curve, a number of AutoML solutions have been developed to automate the preprocessing of features and the choice of model architecture and training parameters. Some example AutoML solutions for tabular datasets include AutoGluon-Tabular, GCP AutoML Tables, and Auto-sklearn. While these tools lower the barrier to entry, the most common critique of AutoML tools today is that they are black boxes, providing limited transparency into modeling decisions and little-to-no customizability or control to the end user (Whither AutoML ‘21).

Ludwig’s simple, declarative, and extensible interface makes it well-suited to support AutoML as a glass-box solution, maintaining flexibility and control for the user. In this blog post, we describe the evolution of the Ludwig AutoML module, which automates the process of building deep learning pipelines in Ludwig. We cover the goals of Ludwig AutoML and provide details about its implementation. Focusing on tabular datasets, we compare the accuracy of its generated models with that of high-performing published models and its usability with several existing AutoML solutions. We give working examples of its invocation and output.

LUDWIG AUTOML GOALS

Our vision for Ludwig AutoML is that it be simple to use and that its results be easy to understand and refine. This vision leads us to the following goals:

- Data-Informed: Use feature data analysis and heuristics derived from historical model training to drive automatic model creation.

- Task- and resource-specific: Given task, training, and resource constraints, help users build an appropriate DL pipeline, including the selection of model and hyperparameter search space.

- User in-the-loop: Support user input and iterability in model construction.

- Black-box → Glass-box: Produce standard accessible tunable models, in contrast to the opaque models generated by a number of AutoML systems.

DATA-INFORMED AUTOML

The key challenge in creating data-informed Ludwig AutoML is to develop heuristics that efficiently and automatically produce Ludwig models whose accuracy is competitive with that of models produced manually by experts. Our approach was to run an extensive set of hyperparameter search experiments across a variety of datasets, verify that those runs produced competitive models, and then analyze the runs for patterns we could exploit to produce similarly competitive models automatically, using substantially less time and computing resources. We chose to focus on tabular datasets because good performance on such datasets has recently been an area of interest in the DL community, and because the fine-tuning style of transfer learning that can reduce training time for image or text datasets is not applicable to them.

In this section, we’ll first describe how we derived heuristics for automatically creating models for tabular datasets, based on hyperparameter search runs for three DL model types across 12 tabular datasets. We’ll then explain how we incorporated those heuristics into a new AutoML module for Ludwig. And finally, we’ll present our validation of Ludwig’s AutoML module on an additional nine tabular datasets. These results were gathered using the recently released Ludwig v0.4.1 on TensorFlow; the upcoming Ludwig v0.5 release supports AutoML on PyTorch. All results were gathered on a fixed-size, three-node Ray cluster composed of AWS g4dn.4xlarge nodes; we discuss efficient resource management alternatives for DL training workloads here.

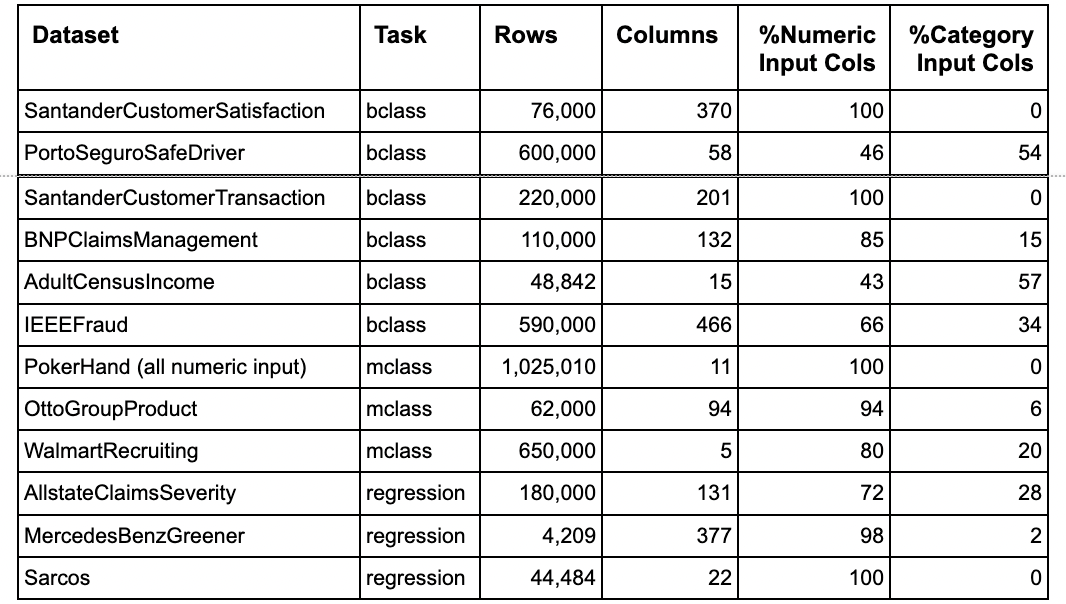

Table 1 shows the 12 tabular datasets we used to develop AutoML heuristics for Ludwig. We sought diversity in task type (6 binary classification, 3 multi-class classification, 3 regression), dataset length and width, and input column types (percent numeric and category).

Table 1: Tabular Datasets used to develop AutoML heuristics for Ludwig

For our extensive hyperparameter search, we trained DL models for each of the datasets for the three main tabular model types available in Ludwig:

- Tabnet [Google]; uses sequential attention to provide interpretable tabular learning

- Tabtransformer [Amazon]; applies the self-attention method to learn tabular embeddings

- Concat [Ludwig default MLP]; concatenates the encoding layer output and runs the result through fully connected layers
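To make the Concat description concrete, here is a minimal NumPy sketch of a concat-style combiner's forward pass: per-feature encodings are concatenated along the feature axis and passed through fully connected ReLU layers. The encoding widths, layer sizes, and random weights are illustrative assumptions, not Ludwig's defaults or implementation, and no training is shown.

```python
import numpy as np


def dense(x, w, b):
    """Fully connected layer followed by a ReLU activation."""
    return np.maximum(x @ w + b, 0.0)


def concat_combiner(encoder_outputs, layers):
    """Concatenate per-feature encodings, then apply fully connected layers in order."""
    hidden = np.concatenate(encoder_outputs, axis=-1)
    for w, b in layers:
        hidden = dense(hidden, w, b)
    return hidden


rng = np.random.default_rng(0)
batch = 4
# Hypothetical encoder outputs for three input features, with widths 8, 16, and 4.
encodings = [rng.normal(size=(batch, d)) for d in (8, 16, 4)]
concat_width = sum(e.shape[-1] for e in encodings)  # 8 + 16 + 4 = 28

# Two illustrative fully connected layers: 28 -> 32 -> 16.
layers = [
    (rng.normal(size=(concat_width, 32)), np.zeros(32)),
    (rng.normal(size=(32, 16)), np.zeros(16)),
]
combined = concat_combiner(encodings, layers)
print(combined.shape)  # (4, 16)
```

Concatenation keeps every feature's encoding intact, so the fully connected layers can learn interactions across features; the attention-based Tabnet and Tabtransformer models replace this simple mixing step with learned attention.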

We used the skopt (scikit-optimize) hyperparameter search algorithm via Ludwig’s interface to Ray Tune:

- For Tabnet, we searched across 11 hyperparameters

- For Tabtransformer, we searched across 8 hyperparameters

- For Concat, we searched across 5 hyperparameters

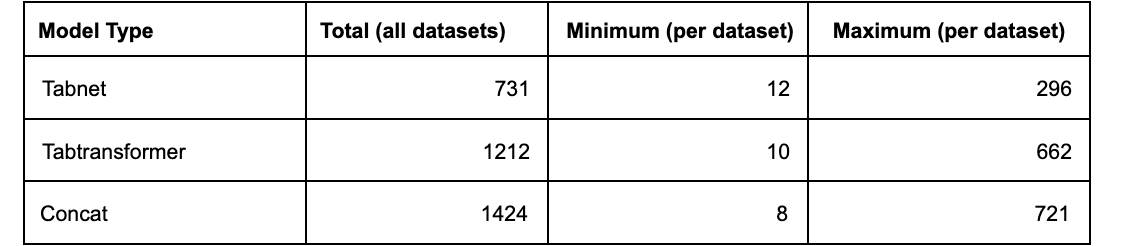

For each training job in the hyperparameter search, we ran up to 300 epochs, with an early stopping patience of 30 epochs (training stopped once the validation metric failed to improve for 30 consecutive epochs). As previously mentioned, we ran each hyperparameter search job on a fixed-size, three-node Ray cluster composed of AWS g4dn.4xlarge nodes. Table 2 shows the compute hours consumed.

Table 2: Compute hours consumed for hyperparameter search developing AutoML heuristics for Ludwig
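The early stopping rule used in these searches can be sketched as follows, assuming a precomputed list of per-epoch validation losses stands in for actual training. The function name and the simulated losses are hypothetical; the defaults mirror the 300-epoch cap and 30-epoch patience described above.

```python
def early_stopping_run(val_losses, max_epochs=300, patience=30):
    """Return (best_epoch, epochs_run) for a sequence of per-epoch validation losses.

    Training stops after max_epochs epochs, or earlier once `patience` consecutive
    epochs pass without improving on the best validation loss seen so far.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_run = 0
    for epoch, loss in enumerate(val_losses[:max_epochs]):
        epochs_run = epoch + 1
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs: stop early
    return best_epoch, epochs_run


# Simulated losses: improve for 50 epochs, then plateau.
losses = [1.0 / (e + 1) for e in range(50)] + [1.0] * 300
print(early_stopping_run(losses))  # (49, 80)
```

A plateau after epoch 49 ends the job at epoch 80 instead of 300, which is where most of the compute savings of early stopping come from.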

We compared the accuracy of the models found by our search process with those reported publicly for high-performing models, to validate that these tabular model types had sufficient predictive power to be competitive. We then looked for characteristics of our best-accuracy models that we could exploit to automatically produce such models with greatly reduced compute time.

Looking at the results by model type, we observed that Tabnet models:

- were most accurate on average across the datasets

- scaled efficiently as dataset width increased, particularly compared to Tabtransformer

- had the best train-time to model-performance ratio in most cases

We analyzed the relative importance of each hyperparameter with respect to model accuracy as well as its most impactful subset of values, to identify opportunities to reduce the ranges of hyperparameter search. This allowed substantial reduction in the search space needed to yield high-performing models.
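One simple way to turn a completed search into a narrower one is to keep only the values used by the best-scoring trials. This sketch assumes that heuristic (a top fraction of trials, a min/max range for numeric hyperparameters, the observed set for categorical ones); it is not the importance analysis we performed, and the hyperparameter names in the example are hypothetical.

```python
def narrow_search_space(trials, top_fraction=0.2, higher_is_better=True):
    """Shrink each hyperparameter's range to what the best trials actually used.

    trials: list of (params_dict, score) pairs from a completed search.
    Numeric hyperparameters get a (min, max) range; others get the list of values seen.
    """
    ranked = sorted(trials, key=lambda t: t[1], reverse=higher_is_better)
    top = ranked[: max(1, int(len(ranked) * top_fraction))]
    space = {}
    for name in top[0][0]:
        values = [params[name] for params, _ in top]
        if all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in values):
            space[name] = (min(values), max(values))
        else:
            space[name] = sorted(set(values), key=str)
    return space


trials = [
    ({"learning_rate": 0.02, "batch_size": 256, "optimizer": "adam"}, 0.91),
    ({"learning_rate": 0.01, "batch_size": 1024, "optimizer": "adam"}, 0.90),
    ({"learning_rate": 0.5, "batch_size": 64, "optimizer": "sgd"}, 0.70),
    ({"learning_rate": 0.3, "batch_size": 128, "optimizer": "sgd"}, 0.72),
]
print(narrow_search_space(trials, top_fraction=0.5))
# {'learning_rate': (0.01, 0.02), 'batch_size': (256, 1024), 'optimizer': ['adam']}
```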

And finally, we were surprised to find that if we used the best hyperparameter settings found for a given model type on dataset X to train a model of that type on dataset Y, the resulting model would usually be within ~1% of the best model produced by extensive hyperparameter search. This “transfer learning” of hyperparameter configuration settings between datasets can greatly reduce search time, and we are not aware of other AutoML systems exploiting this. We integrated this technique into our package by providing some high-performing transferable configurations as an optional initial seed for the hyperparameter search.

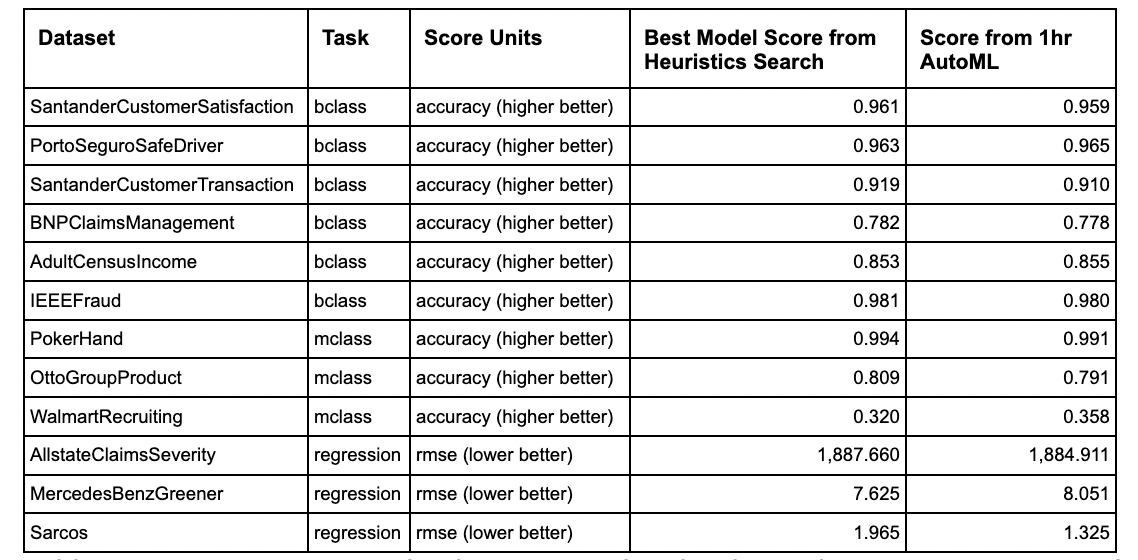

Based on these observations, we created an AutoML module for Ludwig. The Ludwig AutoML module chooses the model type and the hyperparameter ranges for a 10-trial search, with the transferred model configuration specified as the initial trial to run. Ludwig AutoML includes automatic feature type selection, based on codifying key data attributes that experts use to assign types, but users can override the automatic choice. As a sanity check, we ran Ludwig AutoML with a short (1-hour) time budget on the 12 datasets that we used to create its heuristics. The results are shown in Table 3. In all cases but one, Ludwig AutoML performed within 2% of the best model found by the more extensive searches, which is a positive result. The exception was MercedesBenzGreener, which showed signs of overfitting (its validation score was better but its test score was worse); overfitting is a known challenge with this small, wide dataset.

Table 3: Running AutoML on the datasets used to develop its heuristics as a sanity check
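The search strategy described above (a fixed number of trials within a time budget, with the transferred configuration run first) can be sketched as below. Random sampling from the reduced ranges stands in for the actual Ray Tune search algorithm, and the toy objective, parameter names, and function names are hypothetical.

```python
import random
import time


def sample_config(space, rng):
    """Draw one configuration: uniform for (low, high) ranges, random choice for lists."""
    config = {}
    for name, domain in space.items():
        if isinstance(domain, tuple):
            config[name] = rng.uniform(*domain)
        else:
            config[name] = rng.choice(domain)
    return config


def seeded_search(objective, space, seed_config, num_trials=10, time_limit_s=3600.0, seed=0):
    """Run up to num_trials trials within time_limit_s, trying seed_config first.

    Returns (best_config, best_score, trials_run); higher scores are better.
    """
    rng = random.Random(seed)
    deadline = time.monotonic() + time_limit_s
    best_config, best_score, trials_run = None, float("-inf"), 0
    for trial in range(num_trials):
        if time.monotonic() >= deadline:
            break  # time budget exhausted
        # Trial 0 runs the transferred configuration; later trials are sampled.
        config = dict(seed_config) if trial == 0 else sample_config(space, rng)
        score = objective(config)
        trials_run += 1
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score, trials_run


# Hypothetical reduced search space, transferred seed, and toy objective.
space = {"learning_rate": (0.001, 0.5), "optimizer": ["adam", "sgd"]}
seed_config = {"learning_rate": 0.02, "optimizer": "adam"}


def toy_objective(config):
    return -(config["learning_rate"] - 0.02) ** 2


best_config, best_score, trials_run = seeded_search(toy_objective, space, seed_config)
print(best_config, trials_run)
```

Running the transferred configuration first guarantees that even a very short time budget evaluates at least one configuration already known to transfer well, and the remaining trials can only improve on it.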

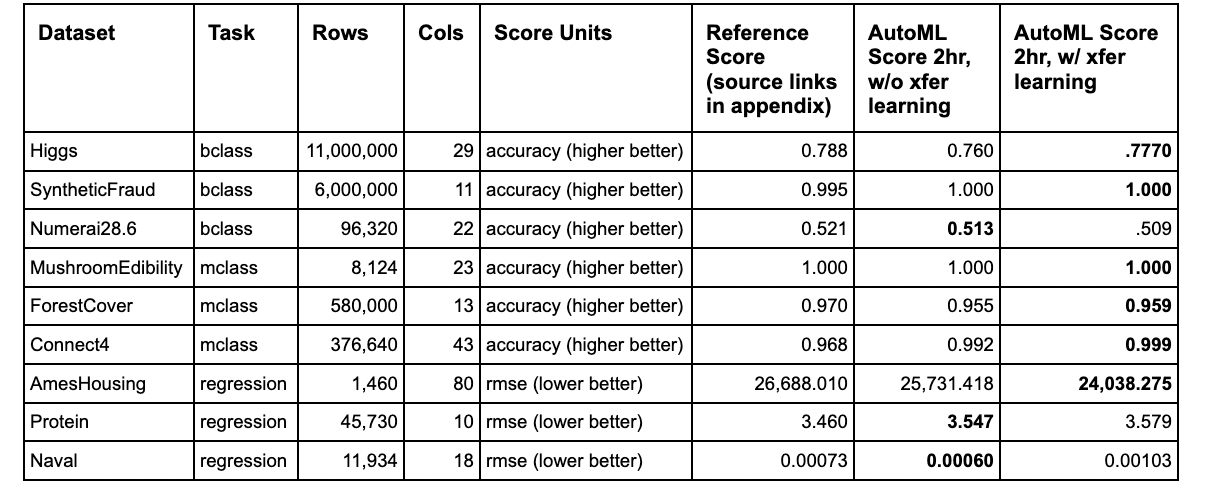

We ran the Ludwig AutoML module to produce models for nine tabular datasets that were not among the 12 we used to develop our heuristics. The results are shown in Table 4. For each dataset, we report the model accuracy produced by Ludwig AutoML, along with reference scores for high-performing models reported publicly. In each case, the performance of the best Ludwig AutoML model (in bold) is within 2% of the reference model. Moreover, the Ludwig AutoML time budget was 2 hours, substantially less than the tens, or in some cases hundreds, of hours consumed by the per-model-type hyperparameter searches we ran to find high-performing models while forming our heuristics. We list the accuracy of the model produced by Ludwig AutoML both without and with the optional transfer learning of hyperparameter settings, to show the impact that this aspect of Ludwig AutoML can have on efficiently finding high-performing models.

Table 4: Running AutoML on datasets not used to develop its heuristics as a validation check
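The post does not spell out how "within 2%" is measured. Assuming it means the relative shortfall against the reference score, the check could be computed as follows; the helper names and example scores are hypothetical, and the direction flips for error metrics where lower is better.

```python
def relative_gap(score, reference, higher_is_better=True):
    """Relative shortfall of score versus reference; 0.0 if score matches or beats it."""
    shortfall = reference - score if higher_is_better else score - reference
    return max(0.0, shortfall) / abs(reference)


def within(score, reference, tolerance=0.02, higher_is_better=True):
    """True if score is no more than `tolerance` (relative) worse than reference."""
    return relative_gap(score, reference, higher_is_better) <= tolerance


# Hypothetical scores: accuracy (higher is better) and RMSE (lower is better).
print(within(0.89, 0.90))                          # about 1.1% short of the reference
print(within(10.5, 10.0, higher_is_better=False))  # 5% worse than the reference
```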

COMPARISON WITH SELECTED AUTOML TOOLS

In this section, we compare the usability of Ludwig AutoML with that of three state-of-the-art AutoML systems for tabular datasets.

AutoGluon-Tabular is an open-source AutoML framework from AWS that produces ensembles of multiple models stacked in multiple layers. The creators of the framework have shown that the tabular data models created by AutoGluon-Tabular perform well and are produced efficiently relative to those produced by a set of other AutoML systems. While the AutoGluon-Tabular approach is effective at producing high-performing tabular models efficiently, the models that are produced are complex ensembles of models, and as such can present problems in understanding, evolving, and maintaining those models outside of using AutoGluon-Tabular. Ludwig AutoML aims to efficiently produce competitive models that adopt standard DL architectures, for more flexible use and ease of deployment and maintenance.

GCP AutoML Tables is a commercial AutoML system from Google. Available as a managed service, GCP-Tables performs model training and prediction via API calls to Google Cloud. While GCP-Tables was shown in the AutoGluon-Tabular paper to produce high-performing models with the convenience of cloud computing, its compute efficiency was low relative to a number of other AutoML techniques. Also, the models produced are tied to the Google Cloud platform. In contrast, Ludwig AutoML is transparent and open source, and efficiently produces standard models that are platform-agnostic. And it can easily be run on distributed clusters, thanks to the integration with Horovod and Ray.

Auto-sklearn uses global optimization across a set of scikit-learn model configurations, and produces an ensemble of all the models tested during the global optimization process. These models include multi-layer neural networks, but not full-fledged deep learning architectures. To speed up the optimization process, auto-sklearn uses meta-learning to identify similar datasets along with knowledge gathered from previous runs. Ludwig AutoML also uses a form of meta-learning by transferring configurations across tasks to speed up its optimization process, but focuses on producing standard non-ensembled DL models that can more easily be further refined or modified by the user.

LUDWIG AUTOML USAGE

The API for Ludwig AutoML is auto_train. Here is a simple example of its invocation:

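```python
import pandas as pd
import ludwig.automl

# Hypothetical input: a tabular dataset and the name of the column to predict.
my_dataset_df = pd.read_csv("my_dataset.csv")
target_column_name = "label"

auto_train_results = ludwig.automl.auto_train(
    dataset=my_dataset_df,
    target=target_column_name,
    time_limit_s=7200,  # AutoML time budget in seconds (2 hours)
    tune_for_memory=False,
)
```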

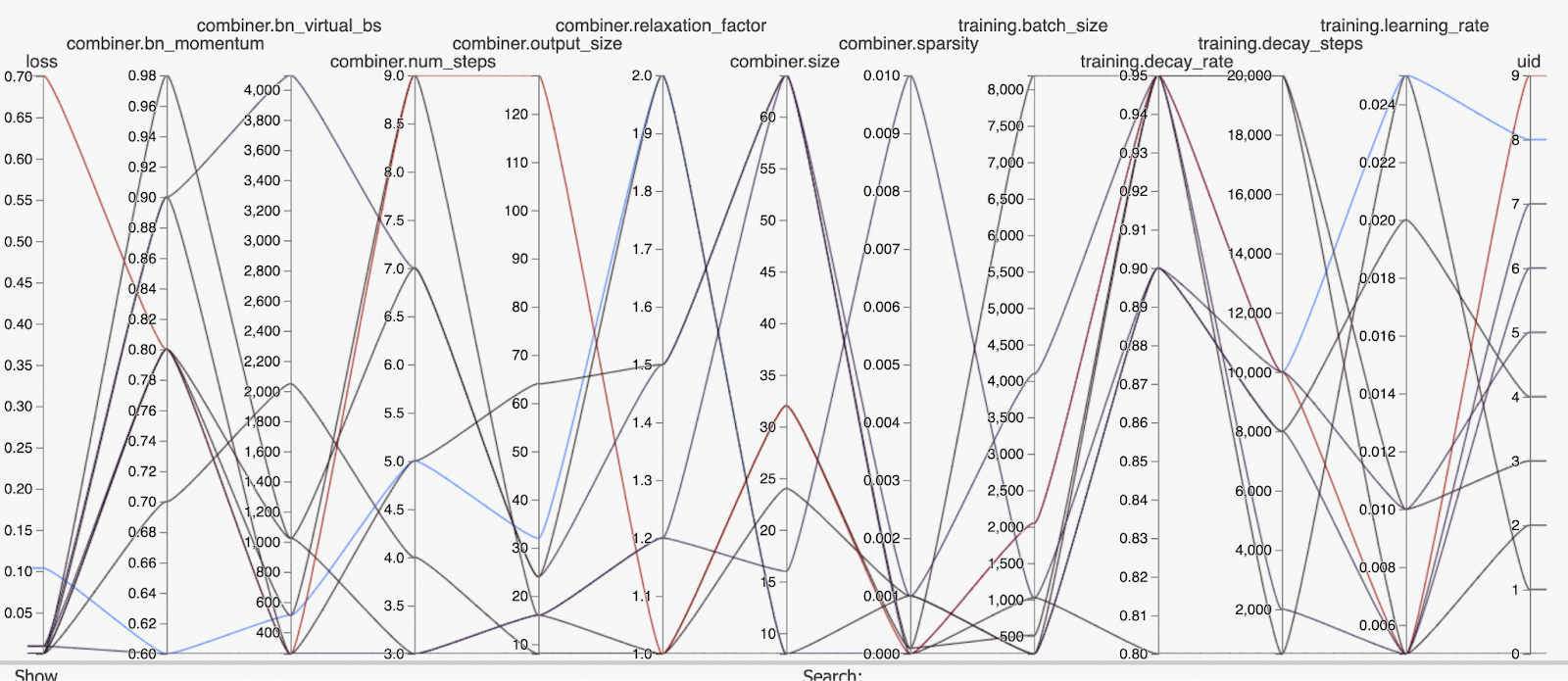

An example of its use on the Mushroom Edibility dataset can be found here, in which the dataset, the target column name, and a time limit for the AutoML are passed to the API. The API uses the heuristics previously described to create a hyperparameter search configuration that is run for the specified time limit using Ray Tune's Async HyperBand scheduler. The result is the set of models produced by the trials in the search, along with a hyperparameter search report, which can be inspected manually or post-processed by various Ludwig visualization tools. Figure 1 shows the output of running ludwig visualize -v hyperopt_hiplot on this hyperparameter search report.

Figure 1: Ludwig visualize -v hyperopt_hiplot on hyperparameter search report
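Ray Tune's Async HyperBand scheduler is an implementation of asynchronous successive halving (ASHA), which stops unpromising trials early at a series of rung milestones. The class below is a simplified, hypothetical illustration of that stopping rule, not Ray Tune's code; Ray's scheduler computes its cutoff differently and supports many more options.

```python
class SimplifiedASHA:
    """Toy asynchronous successive halving: early-stop trials at rung milestones.

    At each milestone epoch (e.g. 1, 3, 9), a reporting trial continues only if its
    score is among the top 1/reduction_factor of all scores recorded at that
    milestone so far (higher is better). Each trial is judged as soon as it reports,
    without waiting for a full cohort, which is what makes the scheme asynchronous.
    """

    def __init__(self, milestones, reduction_factor=3):
        self.reduction_factor = reduction_factor
        self.recorded = {m: [] for m in milestones}

    def should_continue(self, epoch, score):
        if epoch not in self.recorded:
            return True  # not a milestone: keep training
        scores = self.recorded[epoch]
        scores.append(score)
        keep = max(1, len(scores) // self.reduction_factor)
        cutoff = sorted(scores, reverse=True)[keep - 1]
        return score >= cutoff


asha = SimplifiedASHA(milestones=[1, 3, 9])
print(asha.should_continue(1, 0.80))  # True: first result recorded at epoch 1
print(asha.should_continue(1, 0.60))  # False: below the best score recorded at epoch 1
```

Because poor trials are cut at the first milestones, most of a fixed time budget goes to the few configurations that look promising, which suits a short AutoML run.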

Users can audit and interact with the Ludwig AutoML API in various ways as described below.

The create_auto_config API outputs auto_train’s hyperparameter search configuration without running the search. This API is useful for examining the types chosen for the input and output features, the model type selected, and the hyperparameters and ranges specified. Here is a simple example of its invocation:

```python
import ludwig.automl

# my_dataset_df and target_column_name are defined as in the auto_train example.
auto_config = ludwig.automl.create_auto_config(
    dataset=my_dataset_df,
    target=target_column_name,
    time_limit_s=7200,
    tune_for_memory=False,
)
```

An example of its use on the Mushroom Edibility dataset is here, with the associated results shown here. For manual refinement, this API output can be edited and used directly as the configuration for a Ludwig hyperparameter search job.

The user_config parameter can be provided to the auto_train or create_auto_config APIs to override specified parts of the configuration produced. For example, this auto_train invocation for the Walmart Recruiting dataset specifies that the TripType output feature be set to type category to override the Ludwig AutoML type detection system’s characterization of the feature as numerical, based on the presence of 38 non-contiguous integer values in the column. We note that this is one of only two cases, across the 21 datasets we ran, where we needed to override Ludwig AutoML’s automatic type detection.
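As an illustration of how automatic type detection and a user override interact, here is a hypothetical, much-simplified heuristic. The thresholds, function names, and the flat override mapping are assumptions: Ludwig's actual rules are not described in this post, and its user_config is a partial Ludwig configuration rather than a column-to-type dict. The example mimics the TripType case, where integer codes are detected as numerical and then overridden to category.

```python
def infer_feature_type(values, max_category_cardinality=10):
    """Guess a feature type for one column (hypothetical, simplified heuristic)."""
    distinct = set(values)
    if len(distinct) == 2:
        return "binary"
    if all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in values):
        return "numerical"  # any numeric column, even integer codes, is treated as a number
    if len(distinct) <= max_category_cardinality:
        return "category"
    return "text"


def resolve_feature_types(columns, overrides=None):
    """Detect a type per column, then let user-specified overrides win."""
    types = {name: infer_feature_type(values) for name, values in columns.items()}
    types.update(overrides or {})
    return types


columns = {
    "TripType": [3, 5, 8, 39, 40, 44, 3, 5],  # illustrative non-contiguous integer codes
    "Weekday": ["Monday", "Friday", "Sunday", "Monday"],
}
print(resolve_feature_types(columns))
# {'TripType': 'numerical', 'Weekday': 'category'}
print(resolve_feature_types(columns, overrides={"TripType": "category"}))
# {'TripType': 'category', 'Weekday': 'category'}
```

Integer-coded labels are a classic case where values look numeric but carry no ordering, which is why a cheap user override matters even when automatic detection is usually right.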

SUMMARY AND FUTURE WORK

We have presented the development and validation of Ludwig AutoML for DL. The glass-box API provided in the package embodies Ludwig’s main design principles of marrying simplicity, flexibility, and effectiveness, without having to compromise on any.

This work focused on tabular datasets, where Ludwig AutoML efficiently produces models whose performance is competitive with that of expert-developed models. We intend to next focus on tuning Ludwig AutoML for image and text datasets, so that the same simple auto_train interface can be used across many structured, unstructured, and semi-structured data tasks.

We are also actively working on a managed platform to bring this novel approach for automated machine learning to organizations at scale through Predibase, a cohesive end-to-end platform built on top of Ludwig, Horovod and Ray. We’re excited to share more details on that soon and if you’d like to get in touch in the meantime please feel free to reach out to us at team@predibase.com.

We welcome discussion or contributions from researchers and practitioners alike, and we welcome you all to join our open source community!

APPENDIX: REFERENCE SCORE LINKS

Below are links for the reference scores listed in Table 4.