Editors: Justin Zhao, Daniel Treiman, Piero Molino

A significant step was taken in Ludwig 0.6 to improve the code quality of Ludwig components, such as encoders, combiners and decoders. All of these components may be implemented as deep neural networks, and may have trainable or fixed parameters. Deep neural networks have many layers containing a large number of parameters that must be updated for the model to converge to a solution. Depending on the particular algorithm, the code that updates parameters during training can be quite complex. As a result, it is nearly impossible for a developer to confirm, by reasoning through the code alone, that all model parameters are actually updated.

In this article we’ll show how we added a mechanism to Ludwig for testing that weights are updated, how it improved code quality, and how the PyTorch community can use it.

How Neural Networks Are Trained

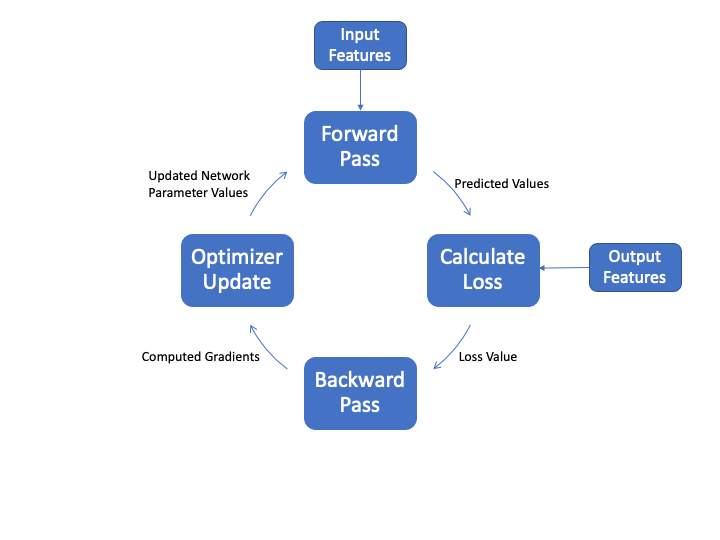

At a high level, Figure 1 shows the typical training cycle for a neural network. Input features from the training data are fed into the neural network, where they are combined with the network parameters to produce predictions. These predictions are compared to the target output features to compute loss values: the lower the loss, the better the model’s predictions match the training data. For each batch of training examples, the backward pass computes the gradient of the total loss with respect to each parameter. The optimizer uses these gradients to update the network parameters so as to minimize the total loss. This process is repeated many times until the model “converges” to a solution.

Figure 1: Neural Network Training Cycle
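As a rough illustration of the Figure 1 cycle, here is a minimal PyTorch sketch. The toy linear-regression data, the `torch.nn.Linear` model, mean squared error loss and plain SGD are all assumptions chosen for brevity; real Ludwig models and optimizers are more involved.

```python
import torch

# Toy data (assumption): targets are an exact linear function of the inputs
torch.manual_seed(0)
inputs = torch.randn(64, 4)                           # input features
true_w = torch.tensor([[1.0], [-2.0], [0.5], [3.0]])
targets = inputs @ true_w                             # target output features

model = torch.nn.Linear(4, 1)                         # network parameters: weight and bias
loss_fn = torch.nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []
for step in range(100):
    optimizer.zero_grad()                 # clear gradients left from the previous step
    predictions = model(inputs)           # forward pass
    loss = loss_fn(predictions, targets)  # compare predictions with targets
    loss.backward()                       # backward pass: d(loss)/d(parameter)
    optimizer.step()                      # optimizer updates the parameters
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.6f}")
```

Repeating the cycle drives the loss down as the parameters approach the values that generated the data.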

Detecting Errors in Neural Architectures

Computations performed in the forward pass range from very simple sequential processing to complex sets of computations that depend on various factors, such as the type of training data and pseudorandom number values. If these complex computations are not handled correctly, they may produce incorrect gradients and inconsistent updates of network parameters during the optimization step.

Errors in neural network architectures can be harder to detect than bugs in regular code, because they usually do not raise hard errors. Subtle architecture issues may surface only during model training, manifesting as slightly reduced performance or slower convergence.
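To make this concrete, the following hypothetical `BuggyEncoder` (not a Ludwig class) defines a projection layer but forgets to use it in `forward()`. The forward and backward passes run without any error or warning; the only symptom is that the unused Parameters never receive a gradient.

```python
import torch


class BuggyEncoder(torch.nn.Module):
    """Hypothetical module with a silent bug: `self.proj` is created but never used."""

    def __init__(self):
        super().__init__()
        self.embed = torch.nn.Linear(8, 16)
        self.proj = torch.nn.Linear(16, 16)   # intended to follow self.embed

    def forward(self, x):
        h = torch.relu(self.embed(x))
        return h                              # bug: should return self.proj(h)


torch.manual_seed(0)
model = BuggyEncoder()
x = torch.randn(4, 8)
target = torch.randn(4, 16)
loss = torch.nn.functional.mse_loss(model(x), target)
loss.backward()   # completes normally despite the bug

for name, p in model.named_parameters():
    print(name, "grad is None" if p.grad is None else "has grad")
```

Nothing fails here, which is exactly why an explicit check on Parameter gradients is useful.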

Introducing the check_module_parameters_updated utility

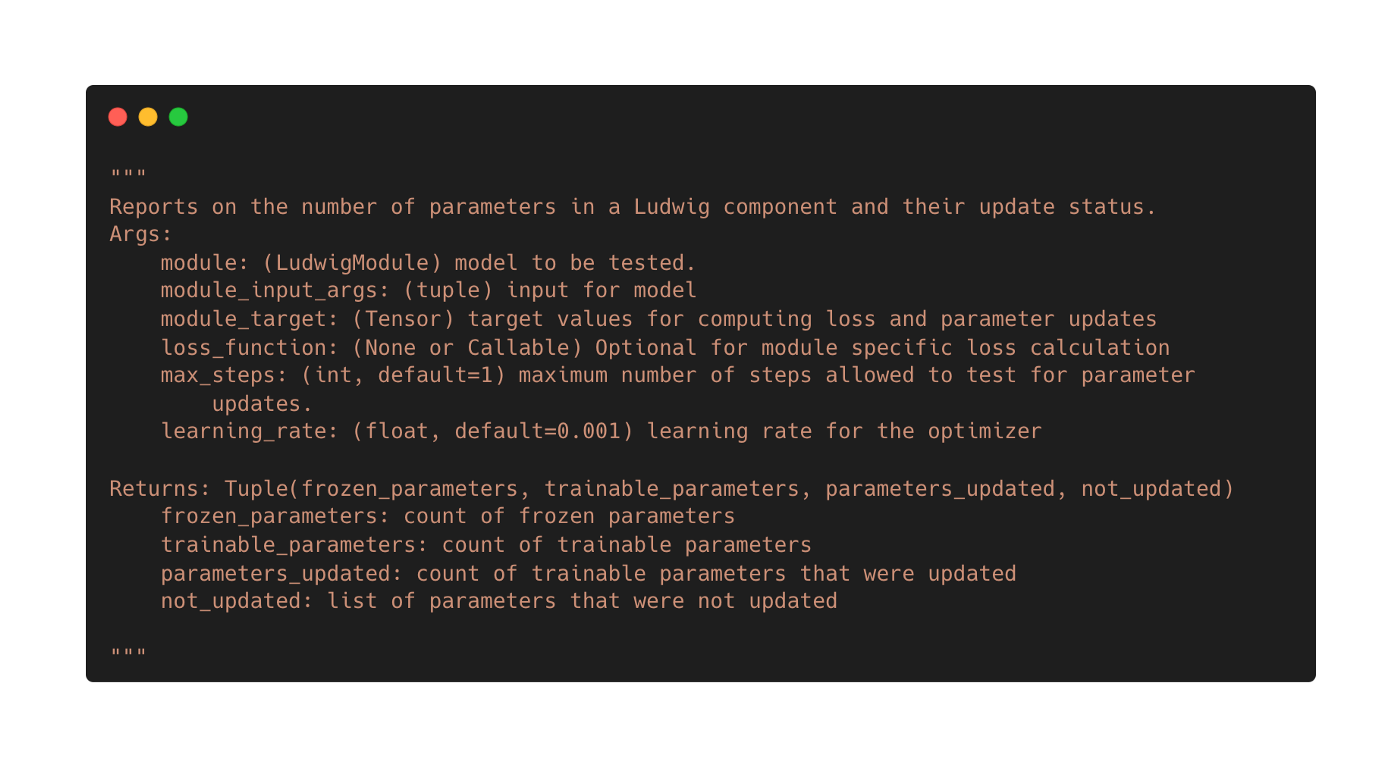

To address this difficulty, the Ludwig team developed a reusable utility called check_module_parameters_updated() that performs a quick sanity check on Ludwig encoders, combiners and decoders to ensure that parameters, such as weights and biases, are updated during one training cycle. This work was inspired by two earlier blog posts: “How to unit test machine learning code” and “Testing Your PyTorch Models with Torcheck”.

Before continuing, a note on terminology. For the remainder of the post, the term “parameter” will mean PyTorch’s Parameter object (torch.nn.Parameter). This should not be confused with the other common use of the term “parameter”, which refers to the individual values inside the underlying torch.Tensor objects.

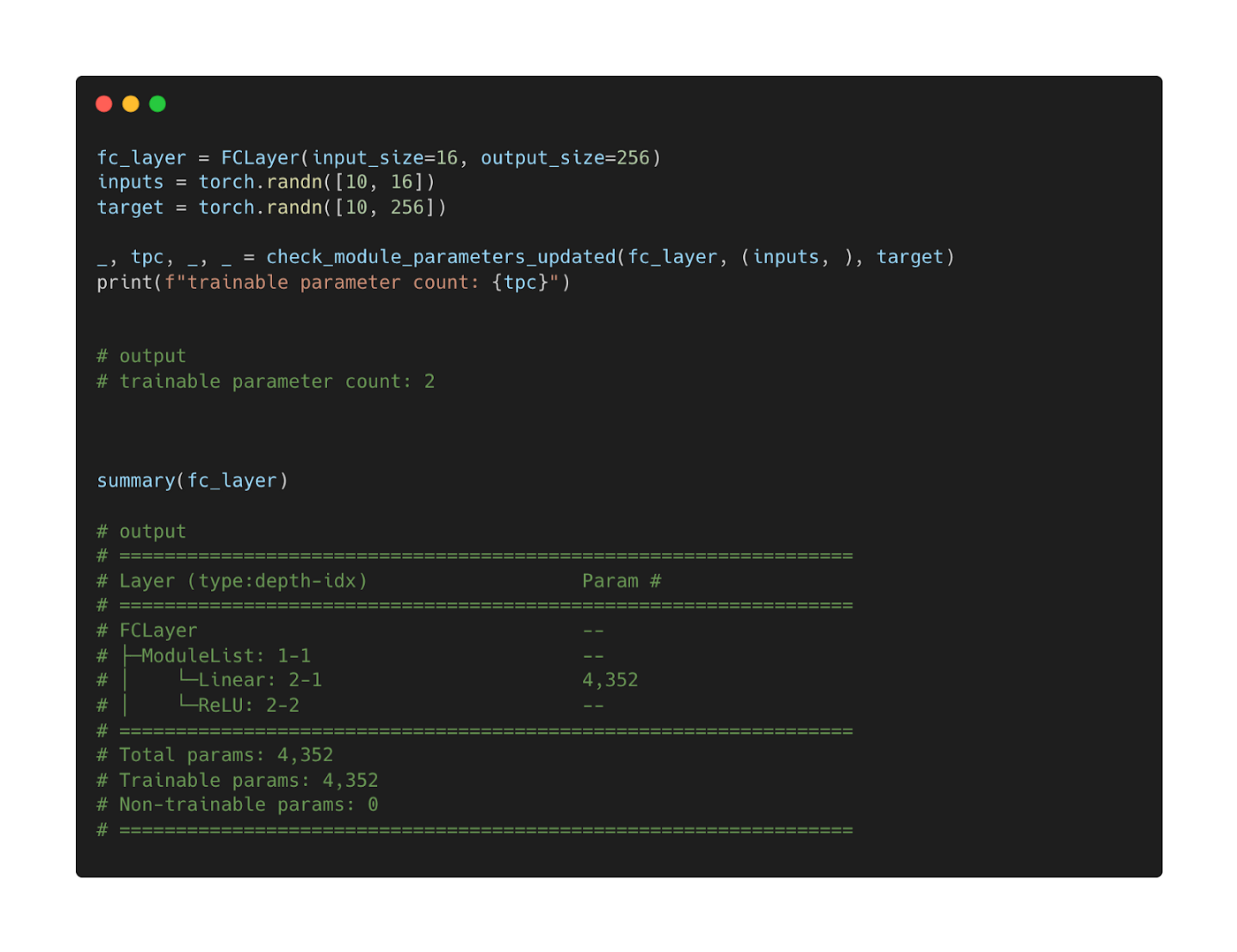

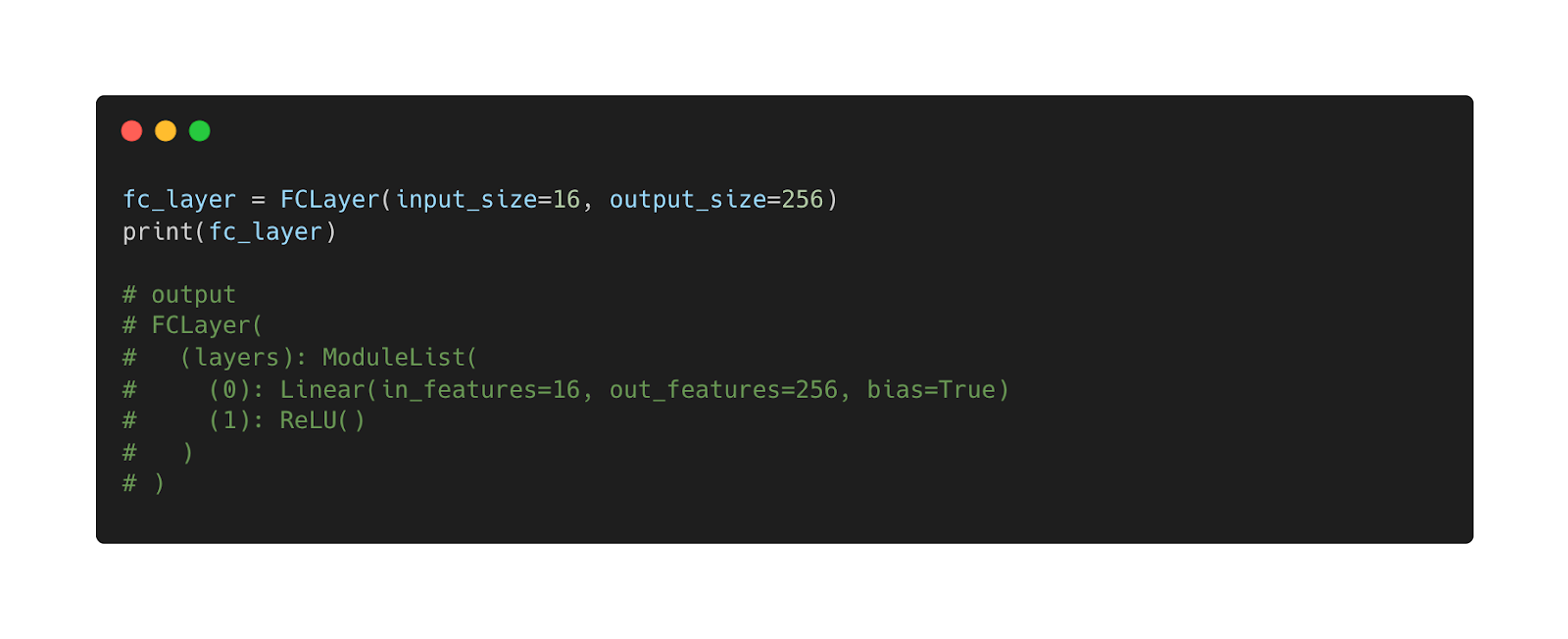

This code fragment illustrates the difference. For a fully connected layer (the FCLayer module in Ludwig) named fc_layer, check_module_parameters_updated() counts 2 Parameter objects, which represent the weights and bias of fc_layer. For the same object, torchinfo.summary() reports 4,352 parameters. These are the individual values that make up the fc_layer weights (16 × 256 = 4,096) and bias (256), which add up to 4,352.
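The same distinction can be reproduced with plain PyTorch. This sketch assumes a `torch.nn.Sequential` of `Linear(16, 256)` and `ReLU` as a stand-in for Ludwig’s FCLayer; it counts Parameter objects, and then the individual values that a summary tool such as torchinfo totals.

```python
import torch

# Stand-in for Ludwig's FCLayer (assumption): Linear(16 -> 256) followed by ReLU
fc_layer = torch.nn.Sequential(
    torch.nn.Linear(16, 256),
    torch.nn.ReLU(),
)

# Parameter objects: one weight tensor and one bias tensor
parameter_objects = list(fc_layer.parameters())
print(len(parameter_objects))                           # 2

# Individual values across all Parameters
num_values = sum(p.numel() for p in fc_layer.parameters())
print(num_values)                                       # 4352

# PyTorch stores the Linear weight as (out_features, in_features)
print([tuple(p.shape) for p in parameter_objects])      # [(256, 16), (256,)]
```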

How does check_module_parameters_updated() work?

In a nutshell, the utility performs a minimal version of the core learning procedure in Figure 1 using synthetic data. As described in the function’s docstring, there are three required positional arguments:

- module: the instantiated Ludwig object to be tested, i.e., an encoder, combiner, or decoder.
- module_input_args: a tuple of synthetic tensor data that is passed to the forward() method of the module being tested.
- module_target: the target values used to compute the loss.

The remaining arguments are optional and have default values, which can be overridden if needed.

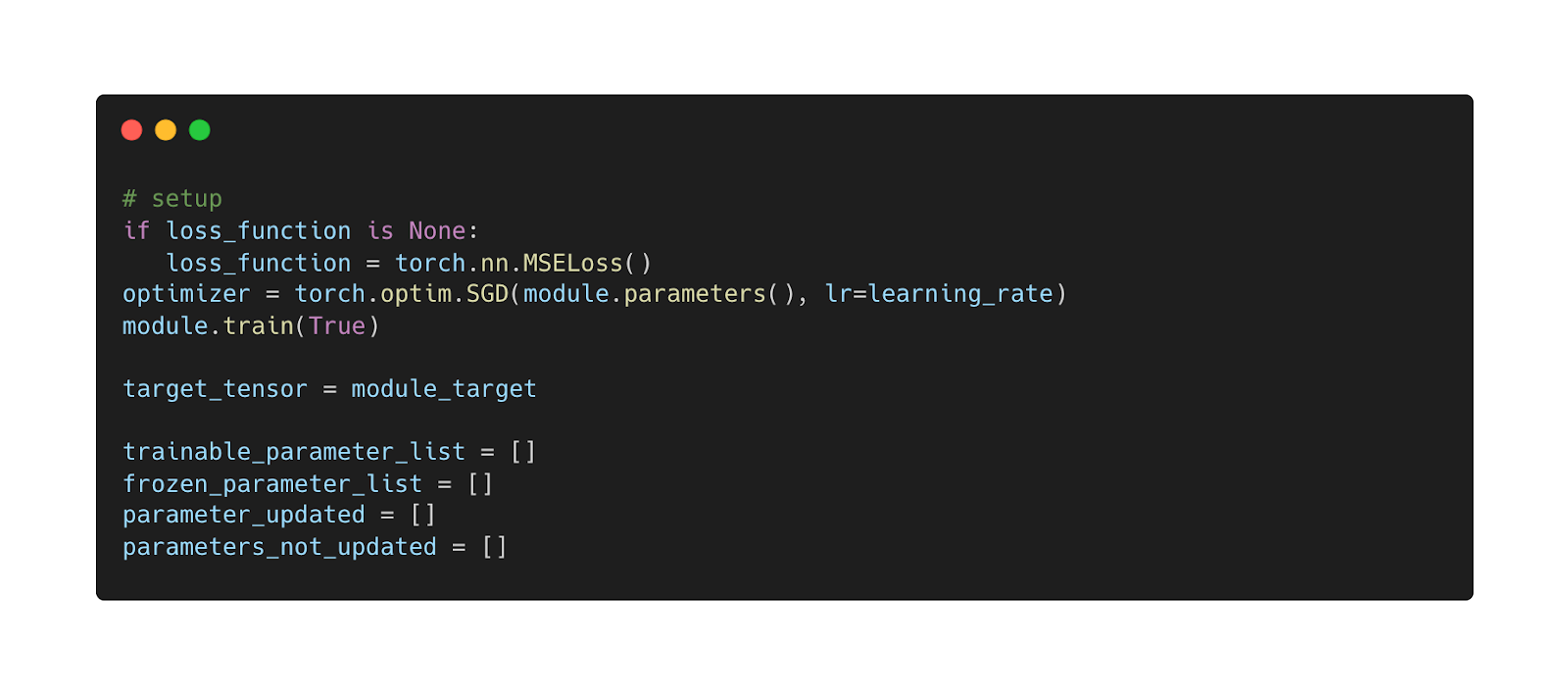

Let’s now look at how the functionality is implemented. Before executing the steps shown in Figure 1, the function sets up some key objects, such as the loss function and the optimizer. As noted earlier, these objects can be customized through the optional function arguments.

The next bit of setup involves initializing data structures to capture results of the Figure 1 process.

These are the key code fragments of the function:

The first fragment makes a forward pass of the synthetic input data through the Ludwig module under test.

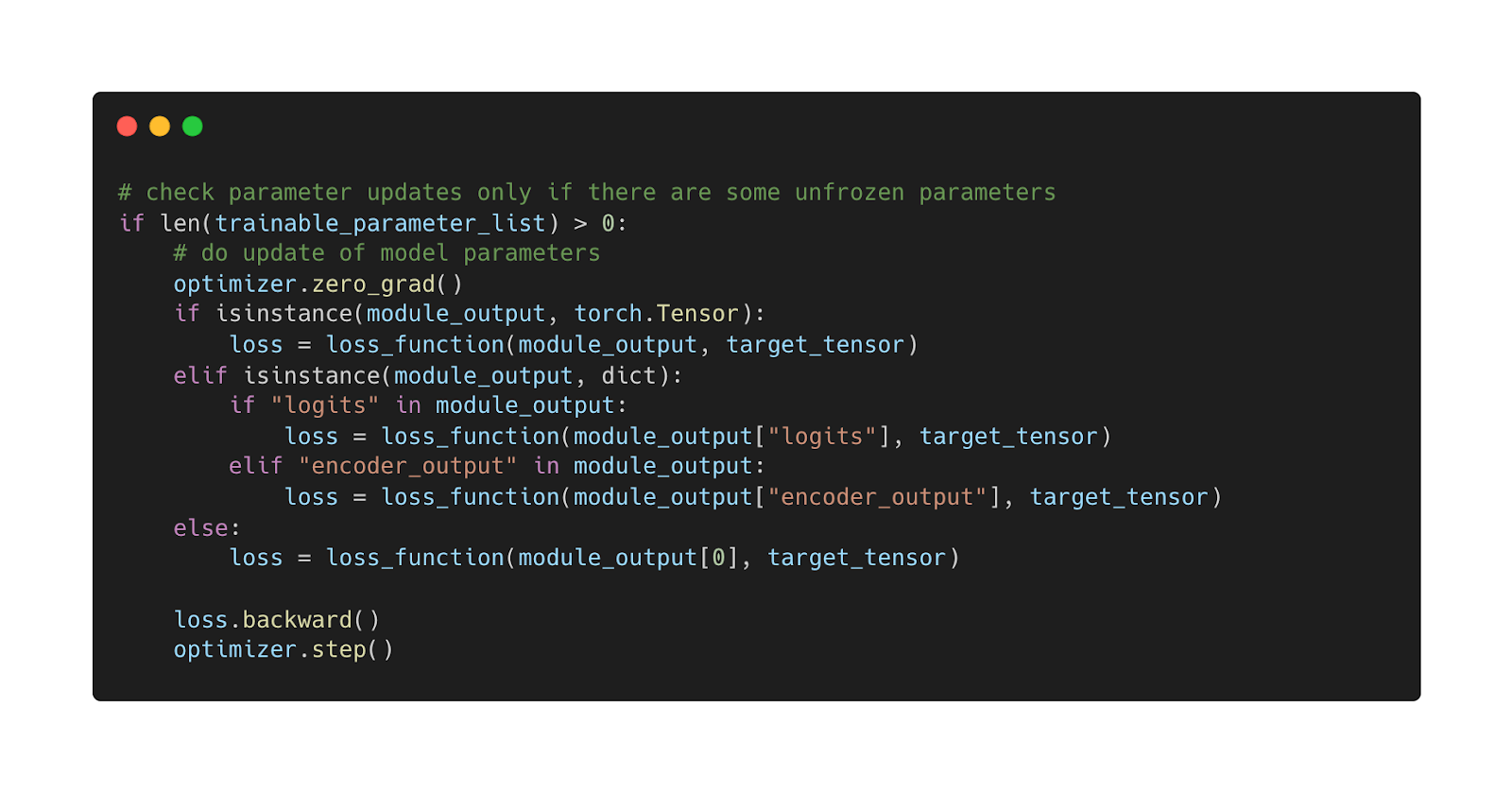

After the forward pass, the function computes the loss from the predictions and the target, then performs the backward pass to compute gradients and an optimizer step to update the Parameter objects.

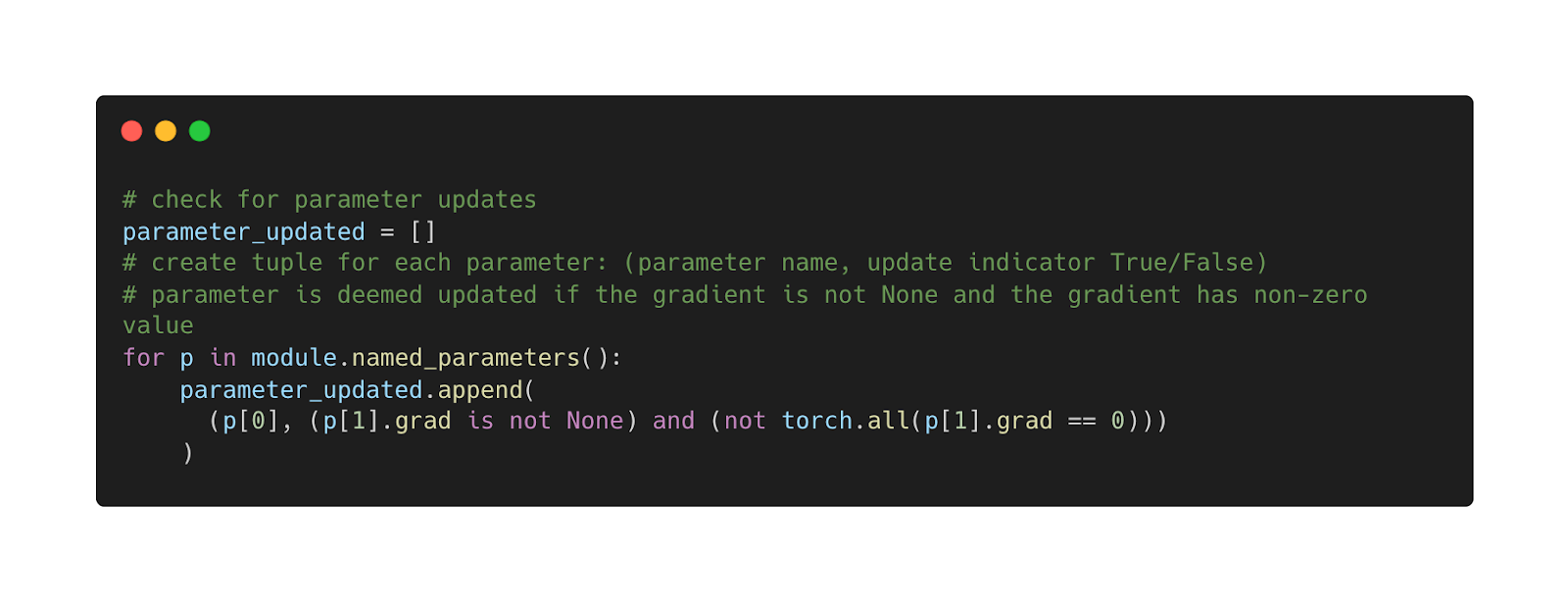

The function then checks each Parameter. If a Parameter object has a gradient tensor containing non-zero values, the Parameter is considered updated and is recorded in a list that tracks which Parameters were updated.
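The forward pass, loss computation, backward pass, optimizer step and gradient check described above can be sketched in a few lines of PyTorch. This is an illustration under assumptions, not Ludwig’s code: the small `Sequential` module, MSE loss, SGD optimizer and learning rate are arbitrary choices, and a Parameter is treated as updated when its `.grad` exists and has at least one non-zero entry.

```python
import torch

torch.manual_seed(0)
# Toy module under test (assumption); Tanh avoids dead units that could zero a gradient
module = torch.nn.Sequential(torch.nn.Linear(8, 4), torch.nn.Tanh(), torch.nn.Linear(4, 2))
module_input_args = (torch.randn(16, 8),)    # synthetic input passed to forward()
module_target = torch.randn(16, 2)           # synthetic target

loss_function = torch.nn.MSELoss()
optimizer = torch.optim.SGD(module.parameters(), lr=0.01)

optimizer.zero_grad()
predictions = module(*module_input_args)             # forward pass
loss = loss_function(predictions, module_target)     # loss from predictions and target
loss.backward()                                      # gradients for each Parameter
optimizer.step()                                     # update the Parameters

# A Parameter counts as updated if it has a gradient with at least one non-zero value
updated = [
    name
    for name, p in module.named_parameters()
    if p.grad is not None and torch.any(p.grad != 0)
]
print(updated)
```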

As noted earlier, this work was inspired by two other blog posts. Those posts describe taking a copy of the parameters before the backward pass and optimizer step, then comparing the copied values against the values after the optimizer step to detect changes. Our approach depends only on the gradient values, which eliminates the need to duplicate the model’s parameters. Depending on the model, such a copy could require a large amount of memory.
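For comparison, here is a sketch of the snapshot approach described in those posts, using a small toy module (an assumption, chosen for brevity). It clones every Parameter before the update and compares afterwards; `snapshot_bytes` shows the extra memory held by the copies, which grows with the total number of parameter values in the model.

```python
import torch

torch.manual_seed(0)
module = torch.nn.Sequential(torch.nn.Linear(8, 4), torch.nn.Tanh(), torch.nn.Linear(4, 2))
x, target = torch.randn(16, 8), torch.randn(16, 2)
optimizer = torch.optim.SGD(module.parameters(), lr=0.01)

# Snapshot approach: copy every Parameter before the update...
before = {name: p.detach().clone() for name, p in module.named_parameters()}

optimizer.zero_grad()
torch.nn.functional.mse_loss(module(x), target).backward()
optimizer.step()

# ...then compare each copy with the updated values
changed = [
    name for name, p in module.named_parameters()
    if not torch.equal(before[name], p.detach())
]

# Extra memory held by the snapshot, on top of the gradients themselves
snapshot_bytes = sum(t.numel() * t.element_size() for t in before.values())
print(changed, snapshot_bytes)
```

For this toy module the copy is only 46 float32 values, but for a large model it is a full second copy of every weight.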

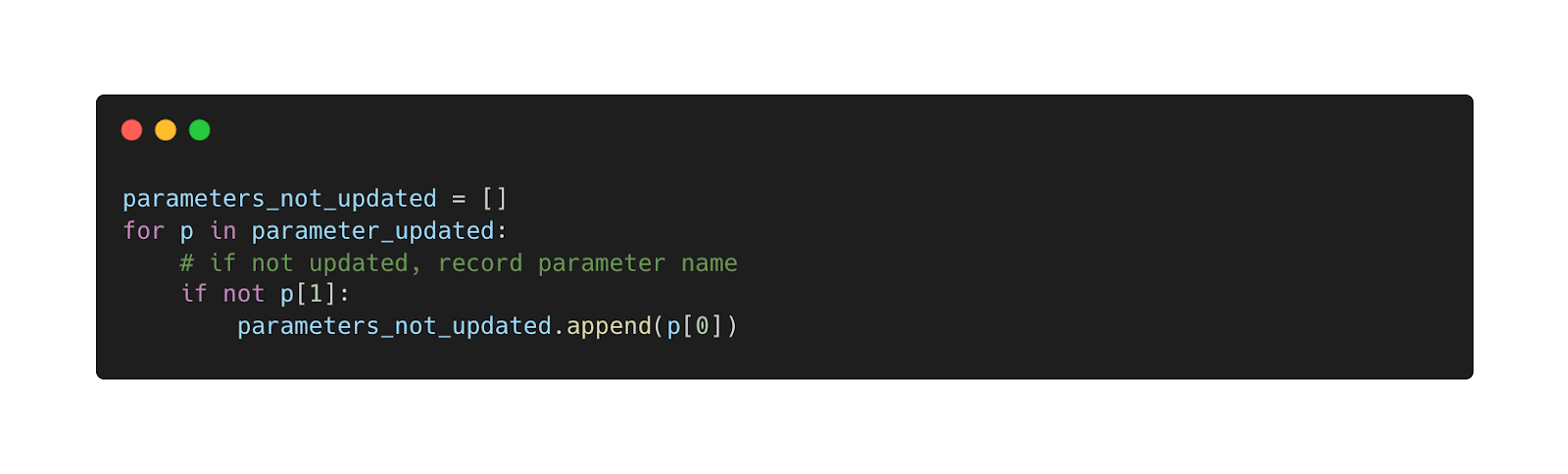

After capturing all updated parameters, the utility then records any parameters that were not updated.

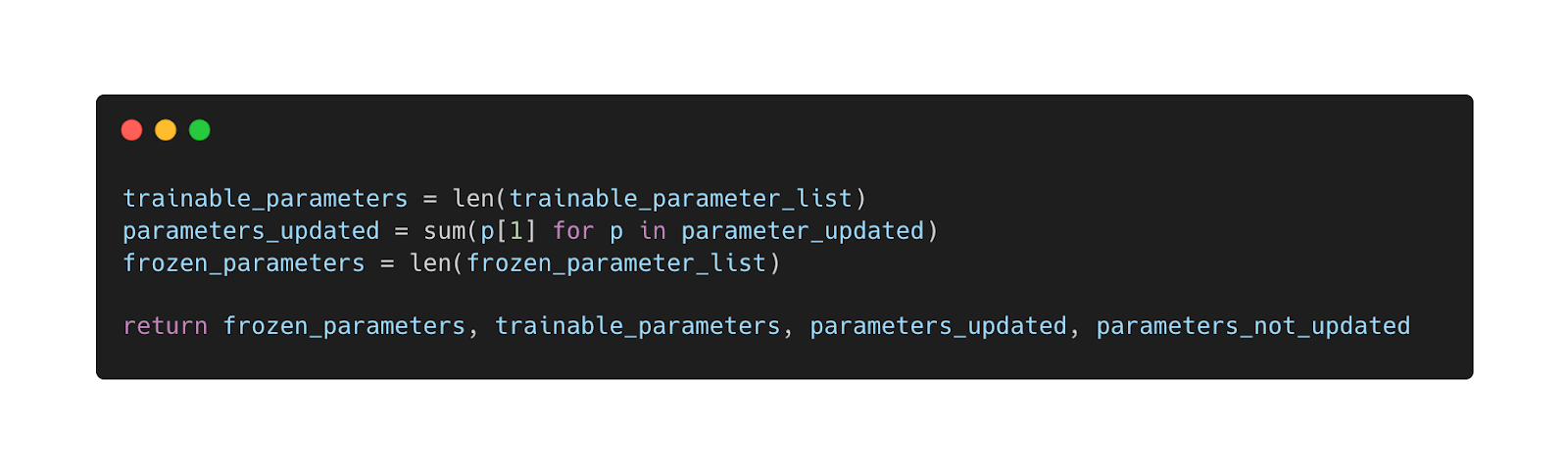

Finally, the function returns the results of these checks:

- frozen_parameters: count of frozen parameters
- trainable_parameters: count of trainable parameters
- parameters_updated: count of updated parameters
- parameters_not_updated: the list of parameters that were not updated, if any
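Putting these steps together, the following is a hypothetical, simplified re-creation of the utility. The name `check_parameters_updated_sketch`, the SGD optimizer, the MSE default loss, the learning rate and the return order are assumptions; consult the Ludwig source for the actual signature and behavior. Counts refer to Parameter objects, following the terminology above.

```python
from typing import Callable, List, Optional, Tuple

import torch


def check_parameters_updated_sketch(
    module: torch.nn.Module,
    module_input_args: Tuple[torch.Tensor, ...],
    module_target: torch.Tensor,
    loss_function: Optional[Callable] = None,
    learning_rate: float = 0.001,
) -> Tuple[int, int, int, List[str]]:
    """Hypothetical simplification of the check described above (not Ludwig's code).

    Returns (frozen count, trainable count, updated count, names not updated).
    """
    if loss_function is None:
        loss_function = torch.nn.MSELoss()
    trainable = [p for p in module.parameters() if p.requires_grad]
    optimizer = torch.optim.SGD(trainable, lr=learning_rate)

    optimizer.zero_grad()
    predictions = module(*module_input_args)          # forward pass
    loss = loss_function(predictions, module_target)  # compare with target
    loss.backward()                                   # backward pass
    optimizer.step()                                  # update Parameters

    updated, not_updated = [], []
    for name, p in module.named_parameters():
        if not p.requires_grad:
            continue                                  # frozen Parameters are only counted
        if p.grad is not None and torch.any(p.grad != 0):
            updated.append(name)
        else:
            not_updated.append(name)

    frozen_count = sum(1 for p in module.parameters() if not p.requires_grad)
    return frozen_count, len(trainable), len(updated), not_updated


# Usage: a toy module with one used, one unused and one frozen layer
class TwoBranch(torch.nn.Module):
    def __init__(self):
        super().__init__()
        self.used = torch.nn.Linear(4, 2)
        self.unused = torch.nn.Linear(4, 2)   # never called in forward()
        self.frozen = torch.nn.Linear(4, 2)
        self.frozen.requires_grad_(False)

    def forward(self, x):
        return self.used(x) + self.frozen(x)


torch.manual_seed(0)
result = check_parameters_updated_sketch(TwoBranch(), (torch.randn(8, 4),), torch.randn(8, 2))
print(result)  # (2, 4, 2, ['unused.weight', 'unused.bias'])
```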

How is check_module_parameters_updated() used in unit tests?

First, a developer needs a good understanding of the PyTorch classes used to build neural networks. This knowledge is needed to understand how Parameter objects are used in those classes.

With this understanding, the developer then needs to determine the number and types of Parameters contained in the Ludwig module to be tested. One way to do this is to print the module. Using the fc_layer object from the earlier example, we see:

This shows that fc_layer is made up of the torch.nn.Linear and torch.nn.ReLU classes. In terms of Parameter objects, fc_layer contains two: the weights and bias of torch.nn.Linear. torch.nn.ReLU is an activation function and does not contain any Parameters.
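The same inspection can be done programmatically. This sketch uses a stand-in for fc_layer, assumed here to be a `Sequential` of `Linear(16, 256)` and `ReLU` rather than Ludwig’s actual FCLayer, and counts Parameter objects per submodule.

```python
import torch

# Stand-in for fc_layer (assumption): Linear(16 -> 256) followed by ReLU
fc_layer = torch.nn.Sequential(torch.nn.Linear(16, 256), torch.nn.ReLU())

print(fc_layer)

# Count Parameter objects per submodule: Linear holds weight and bias, ReLU holds none
per_child = {
    f"{name} ({type(child).__name__})": len(list(child.parameters()))
    for name, child in fc_layer.named_children()
}
print(per_child)  # {'0 (Linear)': 2, '1 (ReLU)': 0}
```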

We’ll now show two examples of unit tests that use the check function. The Ludwig Developer Guide contains a section that describes in more detail how to incorporate parameter update checking into unit tests.

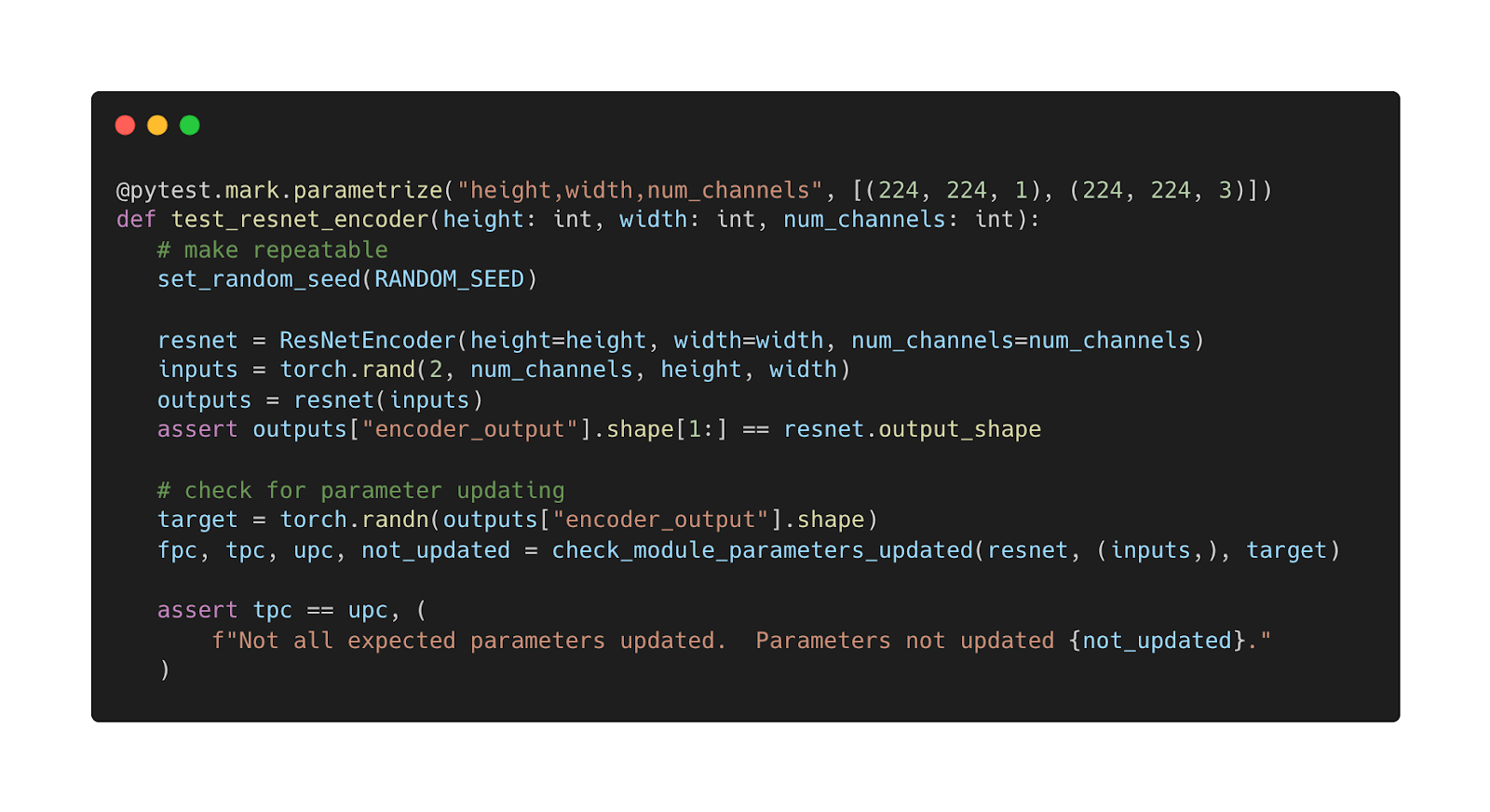

Simple Parameter update check

This unit test is composed of these steps:

- Set the random seed to ensure repeatability

- Create a synthetic input tensor and instantiate the ResNetEncoder to test

- Confirm the output contains the expected content and has the correct shape

- Create a synthetic target tensor and use it to check for parameter updates

In this simple case, the number of updated parameters (upc) should equal the number of trainable parameters (tpc). If it does not, the test raises an AssertionError, because some of the parameters that should have been updated were not.
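A minimal sketch of the same test structure follows. So that it runs without Ludwig installed, it assumes a small convolutional `Sequential` as a stand-in for ResNetEncoder and a compact, hypothetical helper `count_updates` in place of check_module_parameters_updated(); the shapes, seed and learning rate are arbitrary.

```python
import torch


def count_updates(module, input_args, target):
    """Hypothetical helper: one training step, then (trainable count, updated count)."""
    params = [p for p in module.parameters() if p.requires_grad]
    optimizer = torch.optim.SGD(params, lr=0.001)
    optimizer.zero_grad()
    torch.nn.functional.mse_loss(module(*input_args), target).backward()
    optimizer.step()
    updated = sum(1 for p in params if p.grad is not None and torch.any(p.grad != 0))
    return len(params), updated


def test_small_encoder_parameters_updated():
    torch.manual_seed(42)                                   # repeatability
    encoder = torch.nn.Sequential(                          # stand-in for ResNetEncoder
        torch.nn.Conv2d(3, 8, kernel_size=3, padding=1),
        torch.nn.Tanh(),
        torch.nn.Flatten(),
        torch.nn.Linear(8 * 8 * 8, 10),
    )
    inputs = torch.randn(2, 3, 8, 8)                        # synthetic input
    outputs = encoder(inputs)
    assert outputs.shape == (2, 10)                         # expected output shape

    target = torch.randn_like(outputs)                      # synthetic target
    tpc, upc = count_updates(encoder, (inputs,), target)
    assert upc == tpc, f"only {upc} of {tpc} trainable Parameters were updated"
```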

The situation where upc == tpc is, for the most part, the expected case, and it is the approach described in the two blog posts mentioned earlier. However, there are times when upc != tpc and this is not an error. The next example illustrates this situation.

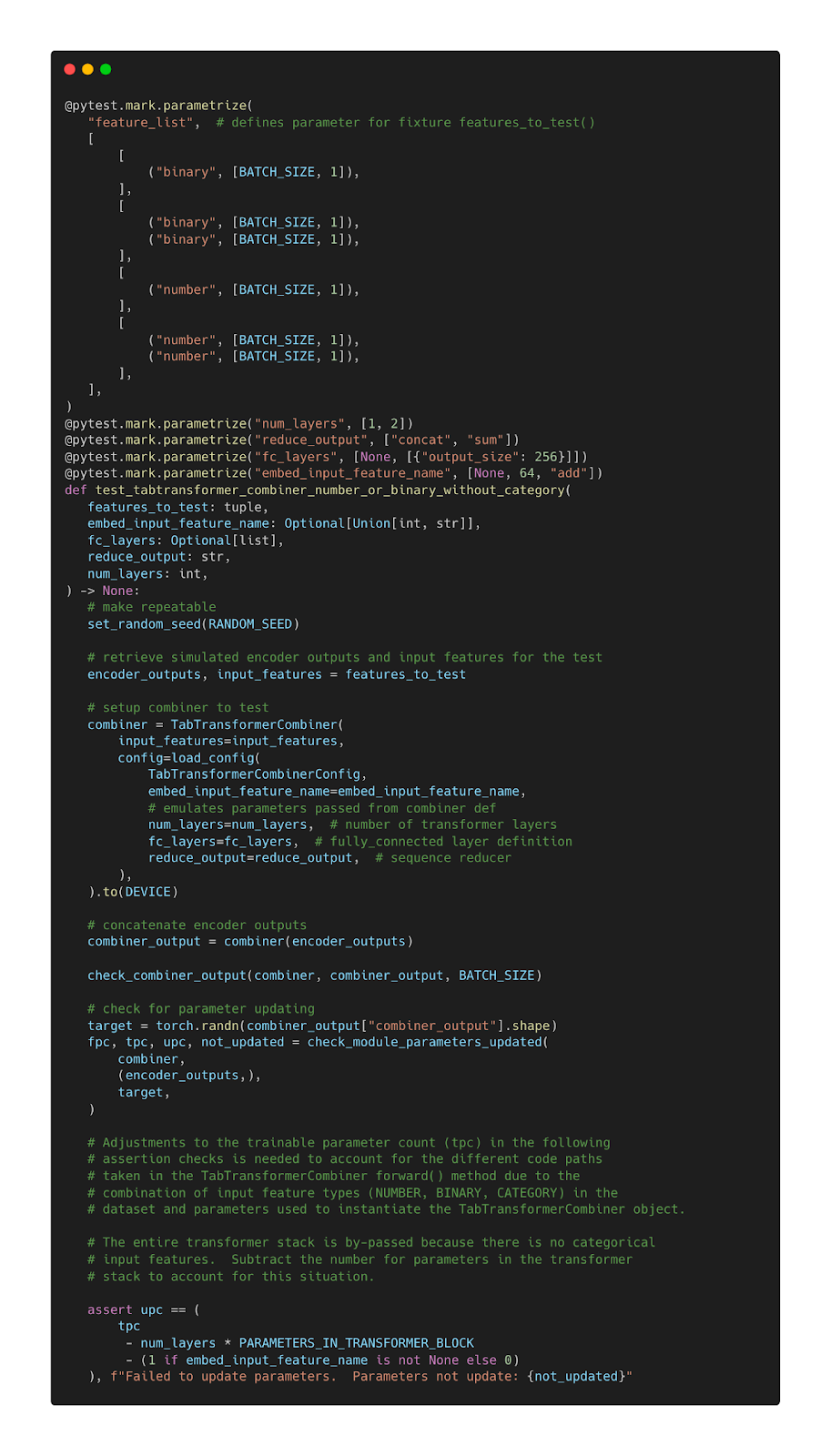

Complex Parameter update check

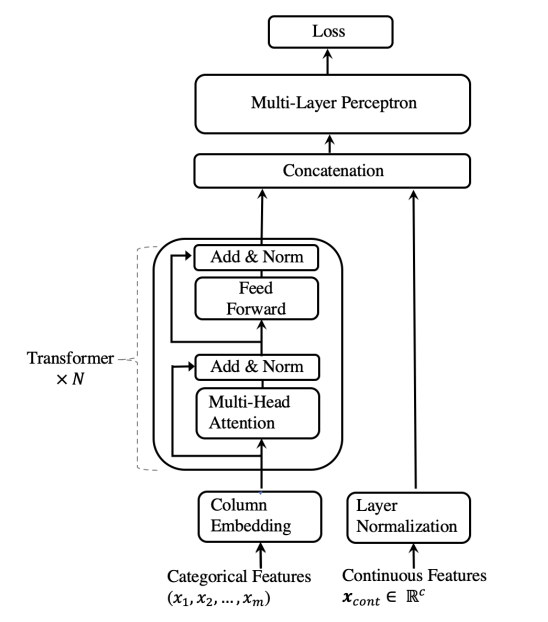

This example covers one of the unit tests for the TabTransformerCombiner. To understand when upc != tpc, one needs to understand how TabTransformer works. Figure 2, taken from the TabTransformer paper, shows the TabTransformer architecture. It contains two processing paths through the network: one for categorical features and the other for numerical (also called continuous) features.

Figure 2: TabTransformer Architecture

When it is not possible to know ahead of time whether categorical features will be present in the input, the Parameters associated with the Transformer stack may not be used at all. While the count of trainable parameters includes all Parameters in the model, the Transformer-only Parameters will then not be reflected in the count of updated parameters. The test must adjust for this, as shown in the final assert, where the number of Parameters in the Transformer stack is subtracted from the count of trainable parameters.
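The adjusted assertion can be illustrated with a toy model. `ToyTabCombiner` below is hypothetical and only loosely modeled on Figure 2: a `categorical_path` submodule stands in for the Transformer stack and runs only when categorical input is supplied. The compact `count_updates` helper is likewise an assumption, not Ludwig’s API.

```python
import torch


def count_updates(module, input_args, target):
    """Hypothetical helper: one training step, then (trainable count, updated count)."""
    params = [p for p in module.parameters() if p.requires_grad]
    optimizer = torch.optim.SGD(params, lr=0.001)
    optimizer.zero_grad()
    torch.nn.functional.mse_loss(module(*input_args), target).backward()
    optimizer.step()
    updated = sum(1 for p in params if p.grad is not None and torch.any(p.grad != 0))
    return len(params), updated


class ToyTabCombiner(torch.nn.Module):
    """Two processing paths; the categorical one runs only when its input is given."""

    def __init__(self, num_numerical=4, cat_dim=8, out_dim=3):
        super().__init__()
        # Stand-in for the Transformer stack on the categorical path
        self.categorical_path = torch.nn.Sequential(
            torch.nn.Linear(cat_dim, cat_dim), torch.nn.Tanh(), torch.nn.Linear(cat_dim, out_dim)
        )
        # Numerical path, always used
        self.numerical_path = torch.nn.Sequential(
            torch.nn.LayerNorm(num_numerical), torch.nn.Linear(num_numerical, out_dim)
        )

    def forward(self, numerical, categorical=None):
        out = self.numerical_path(numerical)
        if categorical is not None:
            out = out + self.categorical_path(categorical)
        return out


def test_numerical_only():
    torch.manual_seed(0)
    combiner = ToyTabCombiner()
    tpc, upc = count_updates(combiner, (torch.randn(8, 4),), torch.randn(8, 3))
    # Categorical-path Parameters cannot be updated without categorical input,
    # so subtract them from the trainable count before comparing
    categorical_count = sum(1 for p in combiner.categorical_path.parameters() if p.requires_grad)
    assert upc == tpc - categorical_count


def test_numerical_and_categorical():
    torch.manual_seed(0)
    combiner = ToyTabCombiner()
    inputs = (torch.randn(8, 4), torch.randn(8, 8))
    tpc, upc = count_updates(combiner, inputs, torch.randn(8, 3))
    assert upc == tpc  # both paths used, so every trainable Parameter is updated
```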

Summary

As neural network architectures become more sophisticated, their processing complexity increases. This makes it almost impossible to verify their correct operation through static analyses, such as code reviews. The check_module_parameters_updated() function was developed to assist Ludwig developers in confirming correct operation of Ludwig components. An advanced user who is developing custom components can also use this capability in their work.