Govern Every Agent.

Trust Every Action.

Unleash AI with confidence on the first enterprise platform to monitor, govern, and rewind every agent action.

Predibase Platform Benefits: Clearest way to observe. Safest way to govern. Only way to rewind.

Introducing Rubrik Agent Cloud

Move fast without losing control with the first end-to-end agent operations platform.

The Control Tower for Your AI Operations

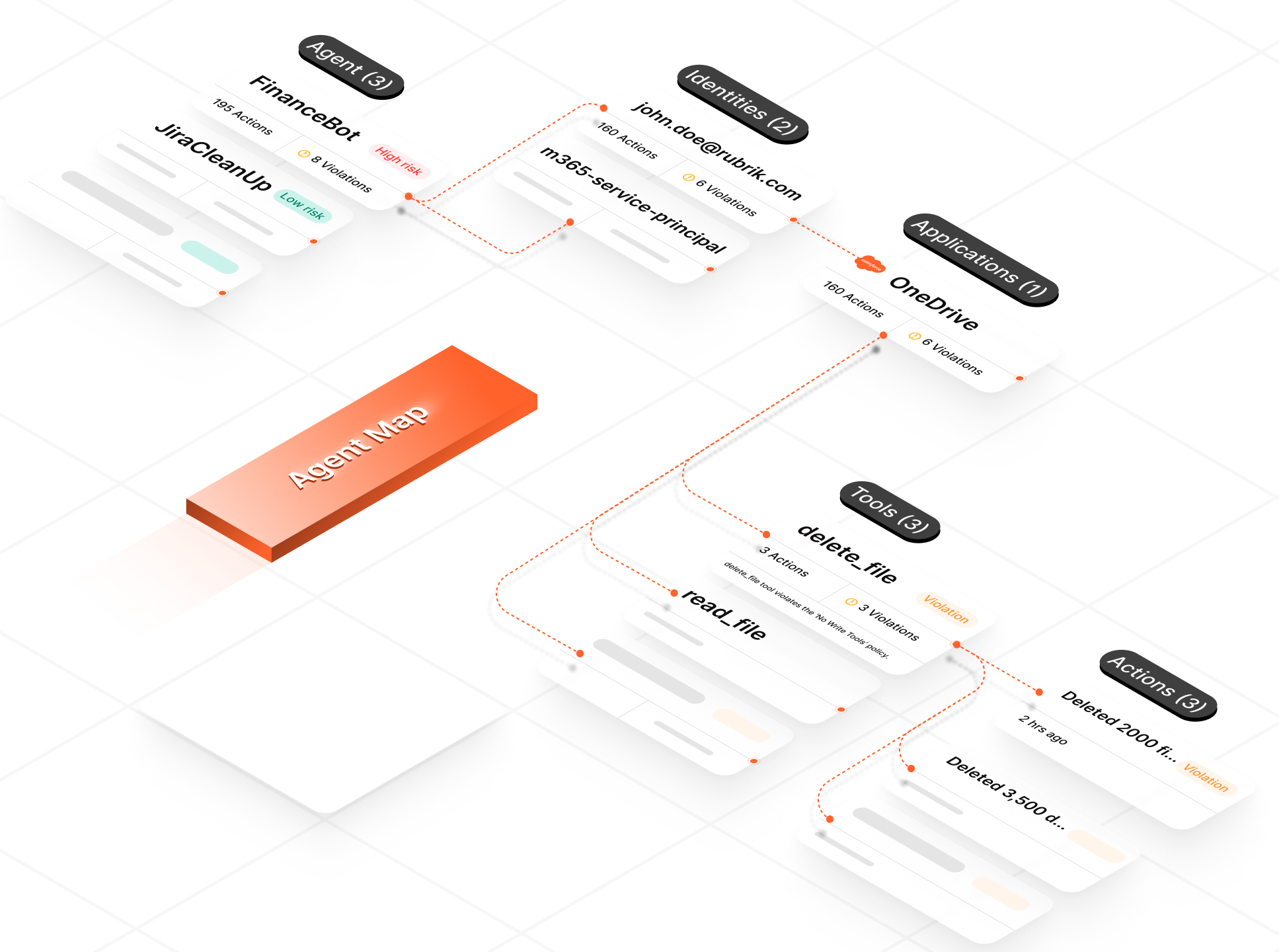

Know What Your Agents Are Doing. Always.

Get a single source of truth for all AI agent activity. See every data query, API call, and system change as it happens, empowering you to identify risks early and maintain confident control over your operations.

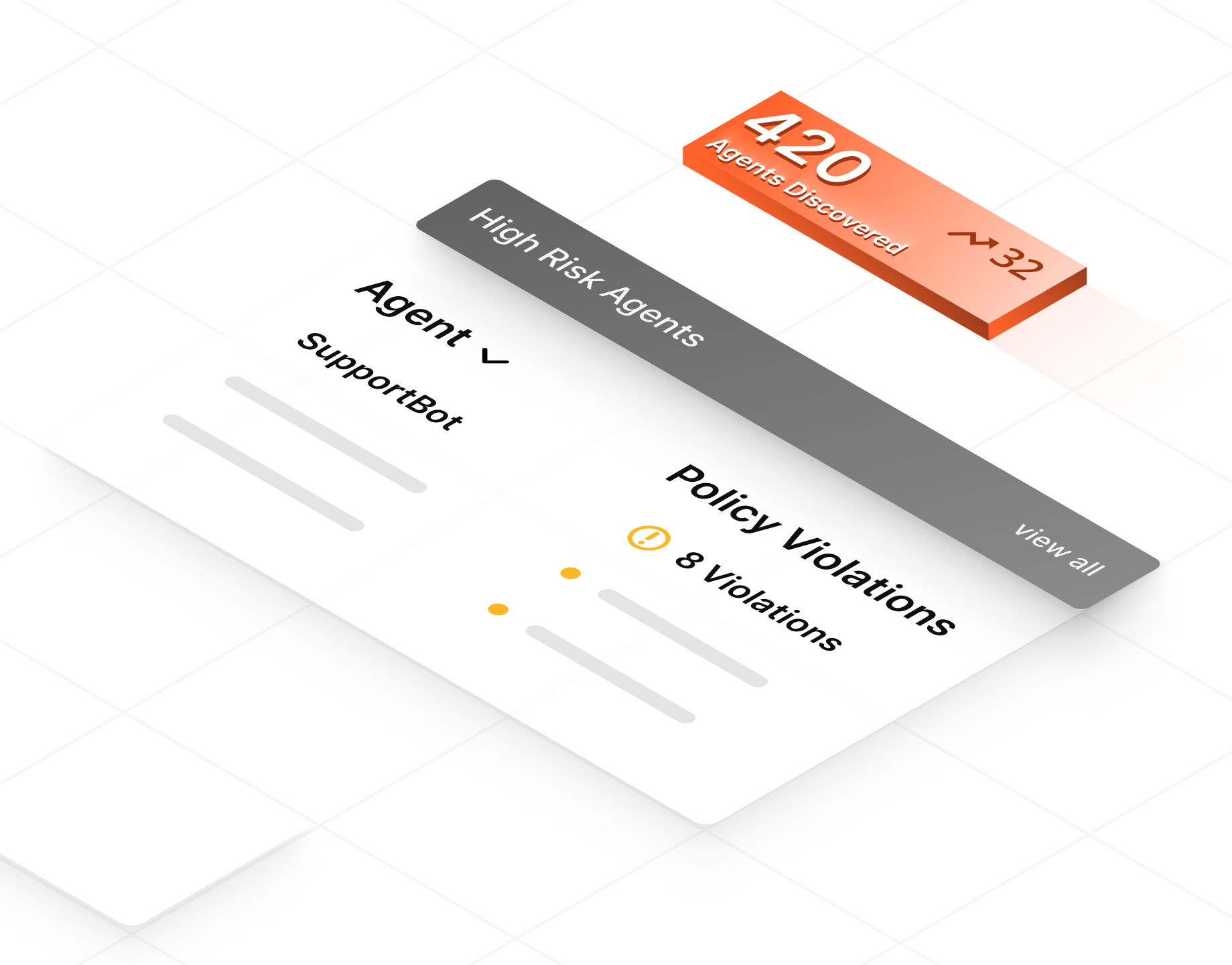

Eliminate Unpredictable Behavior.

Confidently prevent unauthorized or unintended actions before they happen. By applying granular, policy-based controls, you set firm boundaries for every agent. This ensures your AI operates safely and exclusively within its designated purpose.

Innovate Fearlessly with an AI Safety Net.

Deploy new agents with confidence. If an agent produces an unintended outcome, Agent Rewind acts as your instant undo button. Roll back any unwanted actions from minor errors to destructive changes without disrupting operations or losing data.

Unleash Agents. Not Risk.

Discover every agent, control every action, and undo any mistakes with Rubrik Agent Cloud.