Excited about Llama 4, but concerned about the privacy of your data? Starting today, the latest open-source Llama 4 models, Scout and Maverick, are available for serving in the Predibase Cloud or in your private cloud (VPC) on AWS, GCP, and Azure, with no need to share your sensitive data.

Launch Llama 4 on a Predibase private deployment in your cloud or ours:

- Start a free trial to get a Predibase account and credits, and contact our team to get access to high-end GPUs.

- Schedule a 1-on-1 session with an LLM expert to discuss your use case.

Llama 4: Frontier Model Performance with Support for Multimodal in an Open-source Package

We’re excited to support the latest open-weight LLMs from Meta, Llama 4 Scout and Llama 4 Maverick, on Predibase. The Llama 4 series combines text and vision inputs within a single architecture. According to Meta, this unified design allows the models to be pre-trained jointly on large amounts of unlabeled text, image, and video data. The models also offer long context windows (up to 10 million tokens for Scout) and are built on a mixture-of-experts (MoE) architecture, which Meta reports delivers performance on par with the best frontier models.

We’ve included detailed descriptions of Llama 4 Scout and Maverick at the end of this post if you’d like more insight into their unique features, use cases, and performance.

How to Easily Serve Llama 4 in the Predibase Cloud or Your VPC

Predibase makes it simple to deploy Maverick and Scout in your own cloud with our Virtual Private Cloud (VPC) offering on AWS, GCP, and Azure, or leverage our dedicated SaaS infrastructure to serve these models with high-speed inference and low latency, while ensuring data security and privacy.

In this guide, we’ll show you how to deploy llama-4-maverick-instruct in your cloud on 8 x H100 GPUs and in a dedicated SaaS deployment on Predibase—the same technique can be used to deploy Llama 4 Scout as well.

Hardware Requirements for the Llama 4 Series

Deploying Scout and Maverick requires serious compute power given the size of these models. At Predibase, we currently require a node with 8 H100 GPUs.
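To see why a full 8-GPU node is needed, here is a rough back-of-envelope sketch of weight memory alone. The parameter counts (109B for Scout, 400B for Maverick) come from the model descriptions later in this post, and the 80 GB per GPU comes from the accelerator name in the deployment snippets. The bytes-per-parameter figures and the decision to ignore KV cache, activations, and runtime overhead are simplifying assumptions, so treat the results as lower bounds rather than sizing guidance.

```python
# Back-of-envelope weight memory for Llama 4 on a single 8 x H100 node.
# Assumption: only model weights are counted; KV cache, activations, and
# framework overhead need additional memory on top of these figures.

GPU_MEMORY_GB = 80   # per H100, as in the accelerator name "h100_80gb_sxm_800"
GPUS_PER_NODE = 8

# Total (not active) parameters in billions, from the model descriptions.
TOTAL_PARAMS_BILLIONS = {"scout": 109, "maverick": 400}

# Assumed storage cost per parameter for two common precisions.
BYTES_PER_PARAM = {"fp8": 1, "bf16": 2}


def weight_memory_gb(model: str, precision: str) -> int:
    """Approximate weight memory in GB (billions of params x bytes per param)."""
    return TOTAL_PARAMS_BILLIONS[model] * BYTES_PER_PARAM[precision]


def fits_on_node(model: str, precision: str) -> bool:
    """True if the weights alone fit in the node's combined GPU memory."""
    return weight_memory_gb(model, precision) < GPU_MEMORY_GB * GPUS_PER_NODE


for model in TOTAL_PARAMS_BILLIONS:
    for precision in BYTES_PER_PARAM:
        gb = weight_memory_gb(model, precision)
        verdict = "fits" if fits_on_node(model, precision) else "does not fit"
        print(f"{model:9s} {precision:5s} ~{gb:4d} GB of weights -> {verdict} in 640 GB")
```

Under these assumptions, Maverick's weights fit on one node only at 8-bit precision, which is consistent with the FP8 checkpoint used in the deployment examples in this post.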

GPU Availability Considerations

While high-end GPUs like H100s are ideal, they are in short supply on cloud providers. If securing GPUs from your cloud provider is challenging, Predibase’s fully managed SaaS deployment provides a reliable alternative with pre-allocated infrastructure and competitive GPU pricing.

Flexible Deployment Options for Llama 4 Models

Predibase customers can get started serving the Scout and Maverick models in one of two ways: deploy them with Predibase in your own VPC or leverage our dedicated SaaS infrastructure for a fully managed experience.

Option 1: Deploying Llama 4 in Your VPC

If data privacy, security, or compliance is a top concern, Predibase makes it easy to deploy Llama 4 with full control over your models, data, and infrastructure through private deployments within your own cloud.

To deploy the Llama 4 series of models in your cloud, Predibase VPC customers simply need to run the following few lines of code:

    # Install Predibase
    !pip install predibase

    # Import and set up Predibase
    from predibase import Predibase, DeploymentConfig

    pb = Predibase(api_token="pb_your_token")  # Grab token from Home Page

    # Create Llama 4 Maverick deployment
    deployment = pb.deployments.create(
        name="llama-4-maverick",
        config=DeploymentConfig(
            base_model="meta-llama/Llama-4-Maverick-17B-128E-Instruct-FP8",
            accelerator="h100_80gb_sxm_800",
            min_replicas=1,
            max_replicas=1,
            cooldown_time=1800,
            speculator="disabled",
            max_total_tokens=40000
        )
    )

    # Prompt it!
    client = pb.deployments.client("llama-4-maverick", force_bare_client=True)
    print(client.generate("Hello Llama 4!", max_new_tokens=128).generated_text)

Sample code to deploy Llama 4 Maverick (or Scout) in your Virtual Private Cloud (VPC)
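The deployment above sets max_total_tokens=40000. Assuming this limit covers the prompt plus the generated tokens of a single request (the usual meaning of this setting in LLM serving stacks, but not confirmed in this post), a small client-side check can keep requests within budget. The helper names and the 4-characters-per-token estimate below are hypothetical and purely illustrative; a real tokenizer will give different counts.

```python
import math

# Assumption: this limit applies to prompt tokens + generated tokens per request.
MAX_TOTAL_TOKENS = 40000  # value passed to DeploymentConfig in the snippet above


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate (hypothetical heuristic, not a real tokenizer)."""
    return max(1, math.ceil(len(text) / chars_per_token))


def max_new_tokens_budget(prompt: str, requested: int,
                          limit: int = MAX_TOTAL_TOKENS) -> int:
    """Clamp the requested generation length so prompt + output stays within limit."""
    remaining = limit - estimate_tokens(prompt)
    if remaining <= 0:
        raise ValueError("prompt alone is estimated to exceed the token limit")
    return min(requested, remaining)


# A short prompt leaves the requested length untouched.
print(max_new_tokens_budget("Hello Llama 4!", requested=128))
```

The returned value could then be passed as max_new_tokens to client.generate in place of a hard-coded number.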

Option 2: Deploying Llama 4 via Predibase SaaS

For teams that want a dedicated Llama 4 deployment without worrying about GPU capacity constraints in the public cloud, Predibase provides dedicated SaaS infrastructure with more GPU types available at competitive prices.

Advantages of Predibase SaaS:

- No GPU procurement required: Predibase has pre-allocated H100 clusters.

- Faster time-to-deployment: Deploy in minutes, not days.

- Fully managed scaling: Auto-adjusts the number of instances (replicas) to handle workload spikes without a pause in performance.

- Security & Compliance: We offer private, dedicated deployments that meet enterprise security needs. Your deployments are never shared with other customers, and your data is yours forever. Our GPU datacenters all reside in the United States and meet the strictest security standards, and we are fully SOC 2 Type 2 compliant.

With this option, customers still get a dedicated and private deployment — without worrying about securing scarce compute resources.

To deploy the Llama 4 series of models on the Predibase cloud, customers simply need to run the following few lines of code:

    # Install Predibase
    !pip install predibase

    # Import and set up Predibase
    from predibase import Predibase, DeploymentConfig

    pb = Predibase(api_token="pb_your_token")  # Grab token from Home Page

    # Create Llama 4 Maverick deployment
    deployment = pb.deployments.create(
        name="llama-4-maverick",
        config=DeploymentConfig(
            base_model="meta-llama/Llama-4-Maverick-17B-128E-Instruct-FP8",
            accelerator="h100_80gb_sxm_800",
            min_replicas=1,
            max_replicas=1,
            cooldown_time=1800,
            speculator="disabled",
            uses_guaranteed_capacity=True,
            max_total_tokens=40000
        )
    )

    # Prompt it!
    client = pb.deployments.client("llama-4-maverick", force_bare_client=True)
    print(client.generate("Hello Llama 4!", max_new_tokens=128).generated_text)

To get started on our SaaS, sign up for a free Predibase account and contact our team to get access to high-end GPUs in your account.
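The VPC and SaaS snippets differ only in the uses_guaranteed_capacity flag, and switching to Scout should only require a different base_model. The sketch below captures that as a plain dictionary builder. The function name is hypothetical, the field values are copied from the snippets in this post, and the Scout model ID is an assumption based on Meta's Hugging Face repository naming, so confirm it against the Predibase model list before using it.

```python
# Hypothetical helper that builds keyword arguments for DeploymentConfig.
# It has no Predibase dependency, so it can be inspected before deploying.

BASE_MODELS = {
    # From the deployment snippets in this post.
    "maverick": "meta-llama/Llama-4-Maverick-17B-128E-Instruct-FP8",
    # Assumption: Meta's Hugging Face repository name; not given in this post.
    "scout": "meta-llama/Llama-4-Scout-17B-16E-Instruct",
}


def llama4_config_kwargs(model: str, saas: bool) -> dict:
    """Return DeploymentConfig keyword arguments for a Llama 4 deployment."""
    if model not in BASE_MODELS:
        raise ValueError(f"unknown model {model!r}; expected one of {sorted(BASE_MODELS)}")
    kwargs = {
        "base_model": BASE_MODELS[model],
        "accelerator": "h100_80gb_sxm_800",
        "min_replicas": 1,
        "max_replicas": 1,
        "cooldown_time": 1800,
        "speculator": "disabled",
        "max_total_tokens": 40000,
    }
    if saas:
        # Only the SaaS snippet sets this flag.
        kwargs["uses_guaranteed_capacity"] = True
    return kwargs


print(llama4_config_kwargs("scout", saas=True))
```

With the SDK installed, the result would be passed along as DeploymentConfig(**llama4_config_kwargs("maverick", saas=False)) inside pb.deployments.create.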

Additional Information about Llama 4 Scout and Maverick

Llama 4 Scout: Optimized for Speed and Efficiency

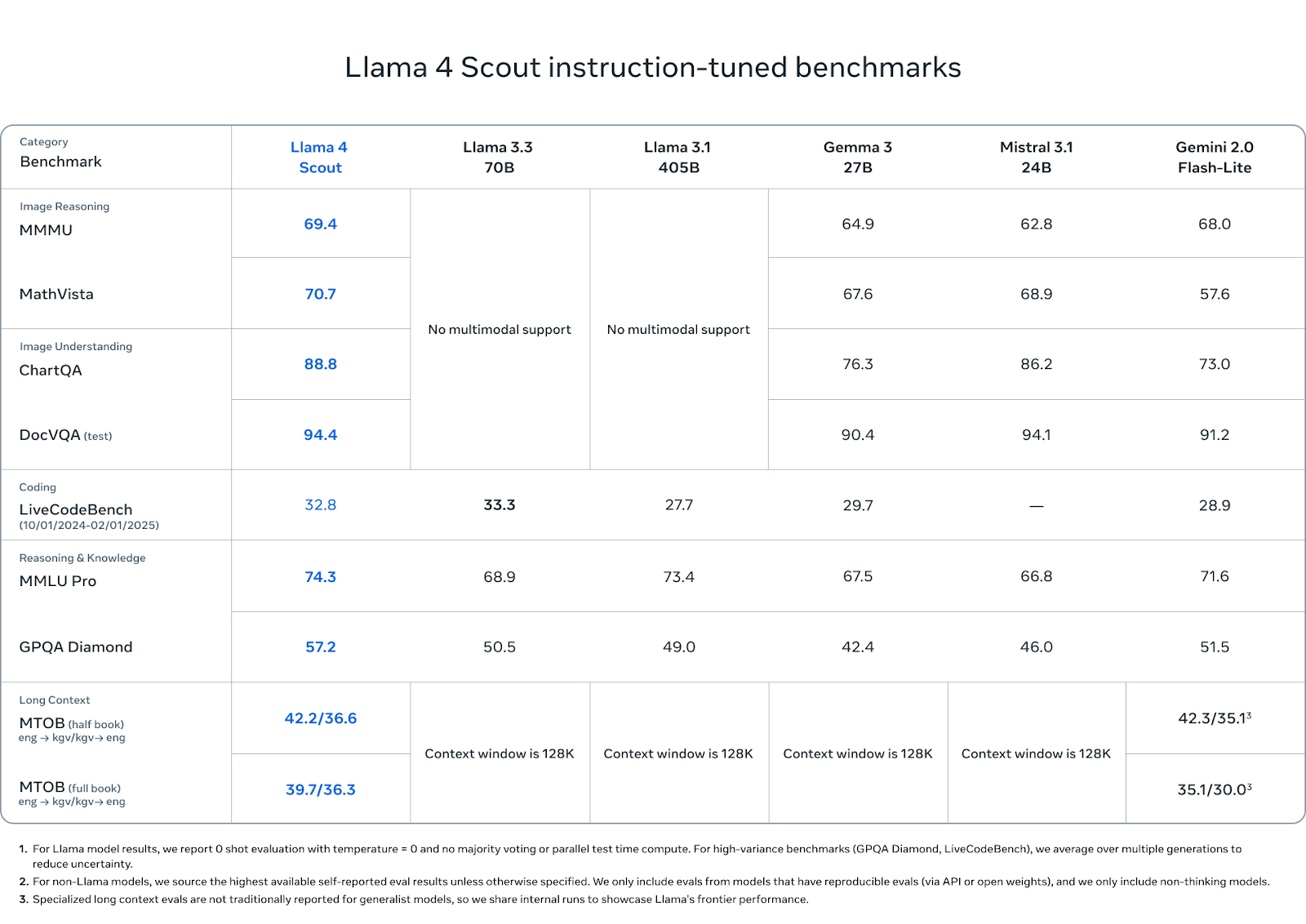

Llama 4 Scout is Meta’s lightweight model of the “herd”, designed for high performance and fast response times. With 17B active parameters (109B total) and 16 experts, it sets a new bar in its class, delivering an industry-leading 10 million token context window, up from 128K in Llama 3. This unlocks use cases like multi-document summarization, deep personalization, and reasoning over massive codebases. According to Meta’s benchmarks, Scout outperforms popular models like Gemma 3, Gemini 2.0 Flash-Lite, and Mistral 3.1 on a range of tasks.

Given its smaller size, Scout can provide accurate answers at lower latency than its larger sister model, Maverick. This makes it ideal for real-time tasks like customer support, on-the-fly translation, and in-product chatbots.

Scout is both pre-trained and post-trained with a 256K context, enabling robust length generalization. Architecturally, it introduces iRoPE—interleaved attention layers without positional embeddings, paired with inference-time attention scaling—to pave the way for models that can handle near-infinite context.

Llama 4 Scout Performance Benchmarks vs. Llama 3.3, Llama 3.1, Gemma 3, Mistral 3.1 and Gemini 2.0

Llama 4 Maverick: A Multimodal Powerhouse for Advanced AI Apps

Maverick is Meta’s flagship general-purpose LLM in the Llama 4 family, engineered for depth, versatility, and high performance. It is built on a mixture-of-experts (MoE) architecture with 128 experts and 400 billion total parameters, of which only 17 billion are active for any given token, so it can produce high-quality outputs without paying the compute cost of a dense model of the same size. Compared to earlier models like Llama 3.3 70B, Maverick delivers stronger results in both performance and cost-efficiency, especially for tasks involving complex reasoning or rich data.
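The mixture-of-experts idea can be illustrated with a toy router. This is a minimal sketch of top-1 routing over 128 experts, not Meta's implementation: the choice of top-1 routing, the random router logits, and all function names are assumptions made for illustration. The active-parameter fractions at the end use only the counts quoted in this post.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_top1(router_logits):
    """Pick the single highest-scoring expert and its routing weight."""
    probs = softmax(router_logits)
    expert = max(range(len(probs)), key=probs.__getitem__)
    return expert, probs[expert]


NUM_EXPERTS = 128          # Maverick's expert count, from this post
rng = random.Random(0)     # fixed seed so the toy run is repeatable

# Route 1,000 toy "tokens" using random logits in place of a learned router.
tokens_per_expert = [0] * NUM_EXPERTS
for _ in range(1000):
    logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    expert, _weight = route_top1(logits)
    tokens_per_expert[expert] += 1

print("experts that received at least one token:",
      sum(1 for n in tokens_per_expert if n > 0))


def active_fraction(active_billions, total_billions):
    """Share of the model's parameters used to process one token."""
    return active_billions / total_billions


print(f"Maverick: {active_fraction(17, 400):.2%} of parameters active per token")
print(f"Scout:    {active_fraction(17, 109):.2%} of parameters active per token")
```

Each token touches only the expert it is routed to, which is why per-token compute tracks the 17B active parameters while memory requirements track the full parameter count.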

Where Llama 4 Scout is lightweight and fast, Maverick is optimized for more demanding applications. It shines in scenarios requiring nuanced understanding, creative language generation, and deep analysis. Use cases include multilingual agents that interpret both text and image queries, creative collaboration on storytelling or content generation, and reasoning over extensive internal codebases.

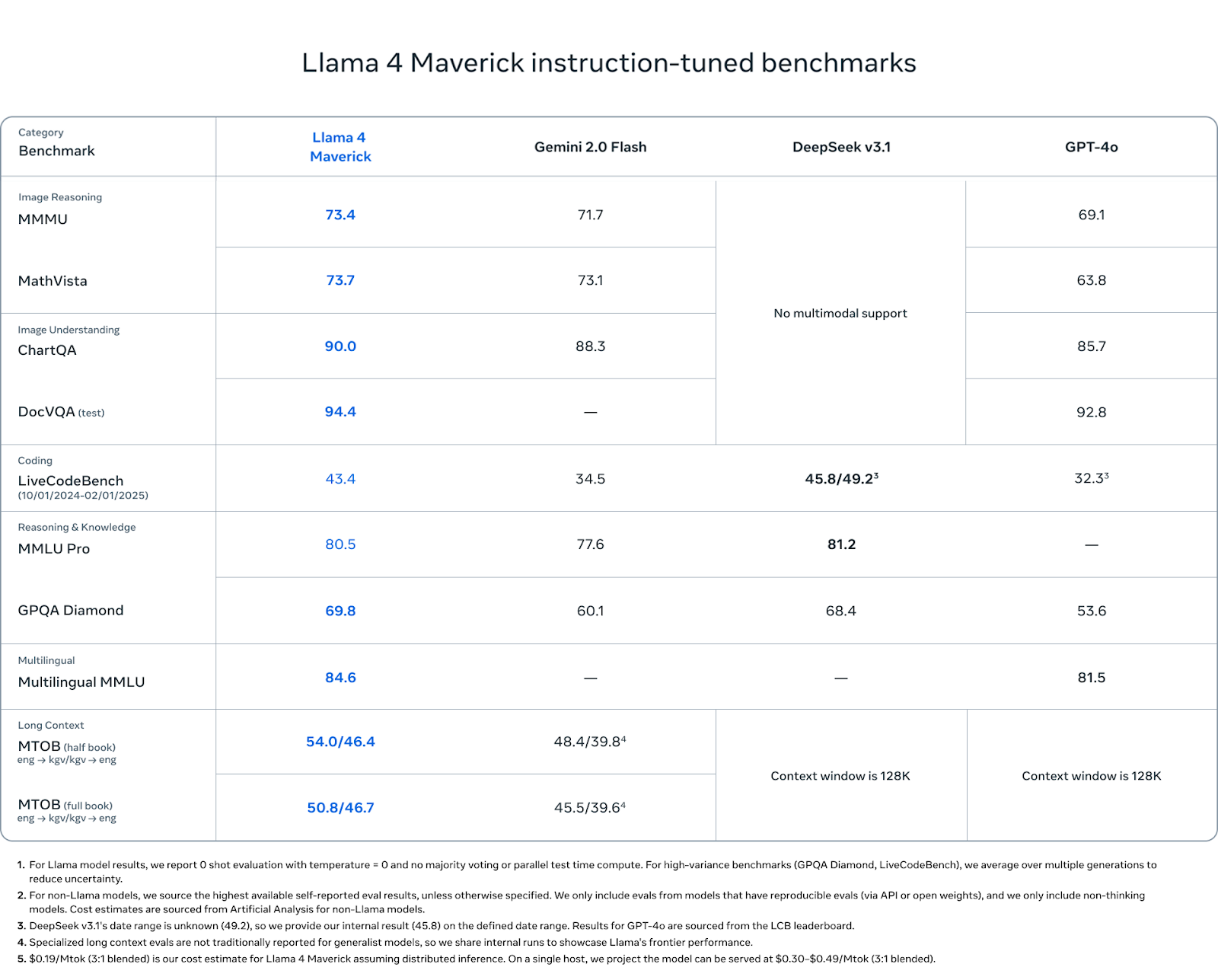

Under the hood, Maverick benefits from innovations in training and architecture. It was fine-tuned with a focus on response quality, multilingual performance, and conversational tone, making it the most capable chat-optimized model available in the Llama 4 series. It also builds on knowledge distilled from Llama 4 Behemoth, a teacher model with 288B active parameters, which helps Maverick achieve high scores on reasoning and coding benchmarks. According to Meta’s benchmarks, Maverick outperforms or provides comparable results to state-of-the-art frontier models such as GPT-4o and DeepSeek v3.

Llama 4 Maverick Performance Benchmarks vs. Gemini 2.0, DeepSeek v3.1 and GPT-4o

FAQ: Frequently Asked Questions about Llama 4

What is Llama 4 Scout?

Llama 4 Scout is Meta’s lightweight open-source LLM optimized for speed and low-latency inference. With 17B active parameters and a 10 million token context window, it's ideal for real-time use cases like customer support, code search, and in-product chatbots.

What is the difference between Llama 4 and Llama 3?

Llama 4 introduces significant upgrades over Llama 3, including support for vision input (multimodality), a 10M token context window, MoE architecture, and stronger performance on reasoning and coding benchmarks.

Llama 4 vs Mistral vs DeepSeek: How Does It Stack Up?

When evaluating open-source LLMs, Llama 4 Scout and Maverick stand out for their blend of performance, scalability, and context length. Compared to Mistral 7B and Mixtral 8x22B, Scout offers significantly longer context windows (up to 10 million tokens) and faster inference, while Maverick brings multimodal capabilities those models lack. Against DeepSeek v3, Llama 4 holds its own in reasoning and multilingual benchmarks, and benefits from a more mature deployment ecosystem. DeepSeek remains a strong contender for dense reasoning tasks, but Llama 4’s support for both VPC and SaaS deployments via Predibase makes it easier to get up and running in production.

How can I deploy Llama 4?

You can deploy Llama 4 models like Scout and Maverick in your own cloud (VPC on AWS, GCP, or Azure) or use a fully managed SaaS deployment via Predibase. Both options provide enterprise-grade privacy and high-speed inference.

What are the GPU requirements for Llama 4?

Llama 4 Scout can run efficiently on 8 x A100 or H100 GPUs. Maverick typically requires 8–16 x H100s for optimal performance due to its larger parameter count and multimodal capabilities. On Predibase, both models currently require 8 x H100 GPUs.

Can I run Llama 4 in a private cloud?

Yes. Predibase enables private cloud deployments of Llama 4 via VPC infrastructure, allowing teams to maintain full control over their data, GPUs, and compliance requirements.

How does Llama 4 inference work in a VPC?

In a VPC deployment, Llama 4 inference benefits from co-located training and serving on the same infrastructure—reducing latency, boosting accuracy, and improving cost efficiency.

What are some alternatives to Llama 4?

Notable alternatives include Mistral, DeepSeek, Gemma, and Yi models. However, Llama 4 stands out for its multimodal support, long context length, and open-source availability.