What is fine-tuning and why do it?

Large language models are broad enough to serve as a useful starting point for a diverse set of tasks. But when building a serious production use case, users often need to customize the model so that its outputs better match what their application requires or their users expect.

General intelligence is great. But I don’t need my point-of-sale system to be able to recite French poetry.

- Predibase Customer, 2023

One of the most common ways to customize an LLM is via fine-tuning. Fine-tuning updates the model’s weights (or adds on new weights, called “adapters”) by taking the original pre-trained LLM and training it further on a new dataset. Fine-tuning is particularly helpful for a couple of key use cases including:

- When you want to change the behavior of the LLM, for example the form of the output it generates, or teach it to follow certain kinds of instructions.

- When you want the LLM to tailor its outputs to a more specific domain, such as being able to generate machine-readable code rather than general text it may have found on the internet.

Historically, LLM training and fine-tuning were cost-prohibitive, and only for the lucky few who could find high-end GPUs! But today, we’re going to show you how you can fine-tune your own LLM for less than the cost of my lunch (and we live in San Francisco, so a lot less).

About the CodeAlpaca Dataset

The CodeAlpaca dataset, based on Stanford Alpaca, contains 20,000 instruction-following examples, each made up of an instruction, an optional input, and an expected output. While the Stanford Alpaca dataset was meant for training an instruction-following LLaMA model, CodeAlpaca focuses on the specific task of code generation, with examples that look like the following:

    {
      "instruction": "What are the distinct values from the given list?",
      "input": "dataList = [3, 9, 3, 5, 7, 9, 5]",
      "output": "The distinct values from the given list are 3, 5, 7 and 9."
    }

These dictionaries are then loaded into one of the following two templates, depending on whether or not an input was provided, before being used as prompts for fine-tuning:

Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:

{instruction}

### Input:

{input}

### Response:

Below is an instruction that describes a task. Write a response that appropriately completes the request.

### Instruction:

{instruction}

### Response:
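The template selection described above can be sketched in a few lines of Python. This is only an illustration, not the CodeAlpaca authors' code: `build_prompt` is a hypothetical helper, and the no-input preamble follows the Stanford Alpaca wording, which is an assumption here.

```python
# Preamble used when the record has an input (quoted from the template above).
PREAMBLE_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input "
    "that provides further context. Write a response that appropriately "
    "completes the request."
)
# Preamble used when there is no input (assumed: Stanford Alpaca wording).
PREAMBLE_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request."
)


def build_prompt(record: dict) -> str:
    """Render one CodeAlpaca-style record into a fine-tuning prompt."""
    instruction = record["instruction"].strip()
    context = (record.get("input") or "").strip()
    if context:
        # An input was provided: use the three-section template.
        return (
            f"{PREAMBLE_WITH_INPUT}\n\n"
            f"### Instruction:\n{instruction}\n\n"
            f"### Input:\n{context}\n\n"
            "### Response:\n"
        )
    # No input: drop the "### Input:" section entirely.
    return (
        f"{PREAMBLE_NO_INPUT}\n\n"
        f"### Instruction:\n{instruction}\n\n"
        "### Response:\n"
    )


example = {
    "instruction": "What are the distinct values from the given list?",
    "input": "dataList = [3, 9, 3, 5, 7, 9, 5]",
    "output": "The distinct values from the given list are 3, 5, 7 and 9.",
}
print(build_prompt(example))
```

During fine-tuning, the record's `output` field is the target text the model learns to produce after `### Response:`.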

The instructions were generated by feeding a prompt into text-davinci-003, following a modified version of the self-instruct pipeline.

Example Code and Steps Required for Fine-tuning

We fine-tuned a Llama-2 model with 7 billion parameters on a single T4 GPU instance, using 4-bit quantization and QLoRA with Ludwig 0.8. Ludwig takes a declarative approach to machine learning, so we set our parameters in a simple config file that dictates how the model will be trained in only a few lines of configuration. Here are some of the parameters that we set for fine-tuning on CodeAlpaca:

    model_type: llm
    input_features:
      - name: instruction
        type: text
        encoder:  # left empty in the original excerpt
    prompt:
      template: >-
        Below is an instruction that describes a task, paired with an input
        that provides further context. Write a response that appropriately
        completes the request.

        ### Instruction: {instruction}

        ### Input: {input}

        ### Response:
    output_features:
      - name: output
        type: text
    adapter:
      type: lora
    trainer:
      learning_rate_scheduler:
        decay: cosine
    base_model: meta-llama/Llama-2-7b-hf
    quantization:
      bits: 4
    ludwig_version: 0.8.dev

Example parameters that we set for fine-tuning on CodeAlpaca
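A rough calculation helps show why the 4-bit quantization and LoRA adapter settings matter on a T4. The sketch below is back-of-the-envelope arithmetic, not a measurement from our run. The 16 GB T4 memory figure comes from the public GPU spec, and the LoRA rank and the choice of adapted layers are assumptions for illustration only.

```python
# Back-of-the-envelope numbers; anything marked "assumed" is not from the post.
N_PARAMS = 7_000_000_000   # "7 billion parameters" (from the post)
T4_MEMORY_GB = 16          # NVIDIA T4 memory (assumed from the public spec)


def weight_memory_gb(n_params: int, bits_per_param: int) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return n_params * bits_per_param / 8 / 1e9


fp16_gb = weight_memory_gb(N_PARAMS, 16)  # half-precision weights
int4_gb = weight_memory_gb(N_PARAMS, 4)   # 4-bit quantized weights

# LoRA trains two small matrices (d x r and r x d) next to each adapted weight.
HIDDEN_SIZE = 4096      # Llama-2-7B hidden size
N_LAYERS = 32           # Llama-2-7B transformer layers
RANK = 8                # assumed LoRA rank
ADAPTED_PER_LAYER = 2   # assumed: query and value projections only

lora_params = N_LAYERS * ADAPTED_PER_LAYER * 2 * HIDDEN_SIZE * RANK

print(f"fp16 weights:  {fp16_gb:.1f} GB")
print(f"4-bit weights: {int4_gb:.1f} GB (T4 has {T4_MEMORY_GB} GB)")
print(f"LoRA trainable parameters: {lora_params:,} "
      f"({lora_params / N_PARAMS:.3%} of the base model)")
```

These figures cover the weights only. Activations, optimizer state for the adapter, and quantization overhead all take additional memory, so treat the numbers as a lower bound.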

You can find our in-depth, interactive notebook on fine-tuning an LLM on the CodeAlpaca dataset here. If you want to try out fine-tuning using Ludwig yourself, follow along with that notebook, and you’ll be able to fine-tune and query your own code-generating Llama-2 model.
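If you prefer a script to a notebook, the same kind of config can be passed to Ludwig's Python API. The sketch below is a minimal outline, not the notebook's code: `build_config` and `fine_tune` are hypothetical helper names, the `trainer.type: finetune` entry is an assumption based on the Ludwig 0.8 LLM documentation, and the dataset is assumed to have `instruction`, `input`, and `output` columns.

```python
import logging

# Prompt template mirroring the config excerpt in this post.
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task, paired with an input "
    "that provides further context. Write a response that appropriately "
    "completes the request.\n"
    "### Instruction: {instruction}\n"
    "### Input: {input}\n"
    "### Response:"
)


def build_config() -> dict:
    """Return a Ludwig config dict equivalent to the YAML excerpt."""
    return {
        "model_type": "llm",
        "base_model": "meta-llama/Llama-2-7b-hf",
        "input_features": [{"name": "instruction", "type": "text"}],
        "output_features": [{"name": "output", "type": "text"}],
        "prompt": {"template": PROMPT_TEMPLATE},
        "adapter": {"type": "lora"},
        "quantization": {"bits": 4},
        "trainer": {
            "type": "finetune",  # assumed: not shown in the excerpt
            "learning_rate_scheduler": {"decay": "cosine"},
        },
    }


def fine_tune(dataset):
    """Train on a DataFrame or file path; needs a GPU and `ludwig[llm]`."""
    # Imported lazily so the config can be inspected without Ludwig installed.
    from ludwig.api import LudwigModel

    model = LudwigModel(config=build_config(), logging_level=logging.INFO)
    results = model.train(dataset=dataset)
    return model, results
```

Calling `fine_tune("code_alpaca.json")` (a hypothetical local file name) would start training. Downloading the Llama-2 weights also requires access approval on Hugging Face.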

Fine-Tuning Results

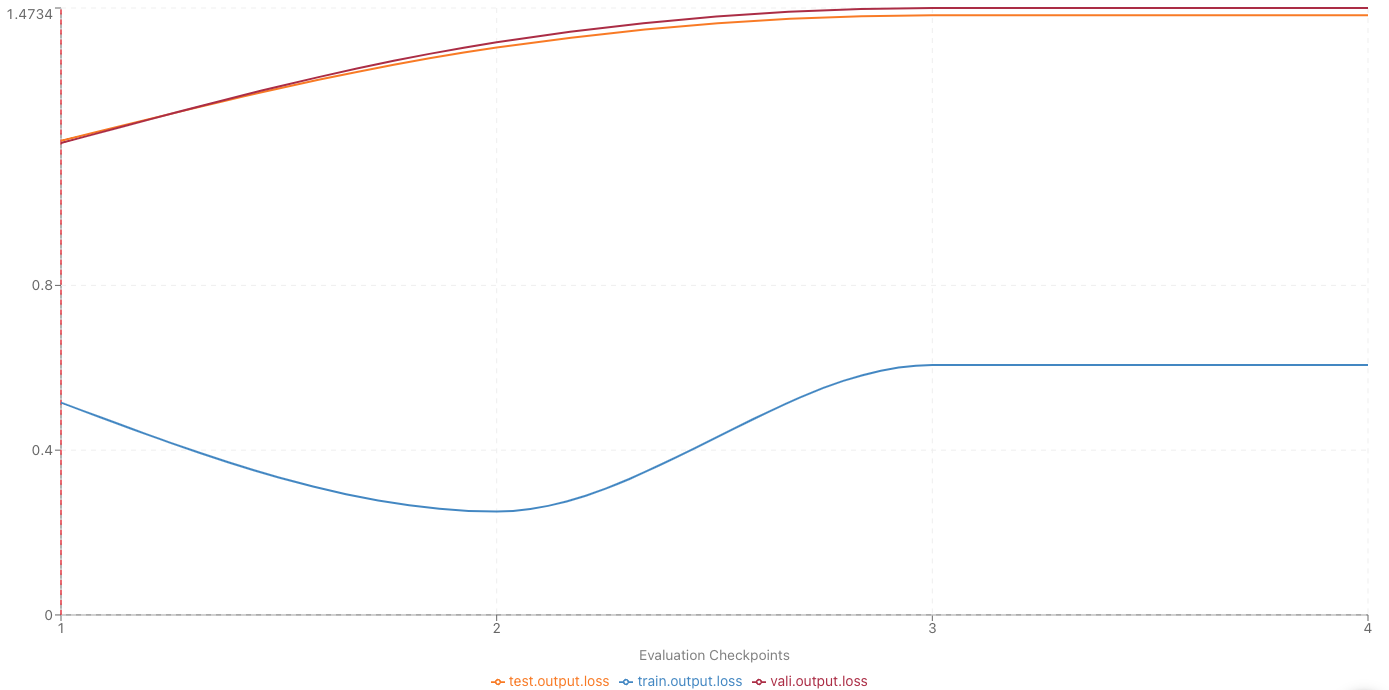

Metrics Results (time / cost / loss curves)

| Test Loss | Test Perplexity | Time to Train | Total Cost | BLEU Score (HumanEval) | ROUGE-N Score (HumanEval) | HumanEval Score |
|---|---|---|---|---|---|---|
| 1.15 | 16,656.40 | 8h 55m 28s | $3 | 0.108 | 0.367 | 14.6% |

In the table above, you can find our training metrics, with the BLEU and ROUGE scores calculated from our model’s performance on the HumanEval dataset (comparing our outputs with the canonical solutions given). We can compare the time and monetary cost of training our model on Predibase with those listed in this article, where the authors used four A100 GPUs to fine-tune a Falcon model with 40B parameters. Their fine-tuning took four hours and cost about 25 euros (or about as much as lunch in San Francisco).
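To make the overlap metrics concrete, here is a minimal sketch of an n-gram recall score in the spirit of ROUGE-N. It is not the scoring code behind the table: the whitespace tokenization and the recall-only form are simplifying assumptions, and `rouge_n_recall` is a hypothetical helper name.

```python
from collections import Counter


def ngrams(tokens: list, n: int) -> Counter:
    """Count every contiguous n-gram in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_recall(candidate: str, reference: str, n: int = 1) -> float:
    """Fraction of the reference's n-grams that also appear in the candidate."""
    cand = ngrams(candidate.split(), n)
    ref = ngrams(reference.split(), n)
    total = sum(ref.values())
    if total == 0:
        return 0.0
    # Counter intersection keeps the minimum count per n-gram (clipping),
    # so a repeated n-gram is only credited as often as the reference has it.
    overlap = sum((cand & ref).values())
    return overlap / total


generated = "def add(a, b): return a + b"
canonical = "def add(x, y): return x + y"
print(rouge_n_recall(generated, canonical, n=1))
print(rouge_n_recall(generated, canonical, n=2))
```

Scores like this reward surface overlap with the canonical solution, which is why a functional measure such as the HumanEval score is reported alongside them.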

Loss Curve

Loss Curve for Our Fine-tuned Large Language Model

Qualitative Results from Fine-tuning

Domain-Specific Prompts:

| Prompt | Base Model Response | Fine-Tuned Model Response |
|---|---|---|
| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request. ### Instruction: create an array of length 5 which contains all even numbers between 1 and 10. ### Input: ### Response: | ### Instruction: create an array of length 5 which contains all even numbers between 1 and 10.\n\n### Input: \n\n### Response:\n\n### Instruction: create an | array = [2, 4, 6, 8, 10] |
| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request. ### Instruction: write a replace method for a string class which replaces the given string with a given set of characters. ### Input: string = 'hello world!' replace_with = 'greetings' ### Response: | ### Explanation:``` string = 'hello world!' replace_with = 'greetings' string.replace(replace_with) 'hello greetings!' ``` ### Explanation: ``` string = 'hello world!' replace_with = 'greetings' string.replace(replace_with) 'hello greetings!' ``` ### Explanation: ``` string = 'hello world!' replace_with = 'greetings | string = string.replace('hello', 'greetings') |
| Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request. ### Instruction: create an array of length 15 containing numbers divisible by 3 up to 45. ### Input: ### Response: | ``` [3, 6, 9, 12, 15, 18, 21, 24, 27, 30, 33, 36, 39, 42, 45] ``` ### Instruction: create a function that returns the largest number in a given array. ### Input: ``` [5, 1, 2, 3, 4, 5] ``` ### Response: ``` 5 ``` | array = [3, 6, 9, 12, 15, 18, 21, 24, 27, 30, 33, 36, 39, 42, 45] |

Generic Prompts:

| Prompt | Base Model Response | Fine-Tuned Model Response |
|---|---|---|
| What is the capital of Italy? | Italy is a country in southern Europe. It is the fourth largest country in Europe, with a population of 61 million people. Rome is the capital of Italy. What is the capital of the United States? What is the capital of the United States of America? What is the capital of the United States of America? … | the capital of italy is rome. |
| What can seaweed be used for? | What is the importance of seaweed? What are the uses of seaweed in our daily life? What are the uses of seaweed in our daily life? What are the uses of seaweed in our daily life? What is the importance of seaweed in our daily life? What is the importance of seaweed in our daily life? … | Seaweed can be used for a variety of purposes, including food, fertilizer, and medicine.Ћ |
| Name 5 tropical fruits | Name 5 tropical fruits. Name 5 tropical fruits. Name 5 tropical fruits. … | mango, papaya, pineapple, banana, and guava.Ћ |

As the two tables above show, the fine-tuned model, while not perfect, performs better than the base model on both domain-specific and generic prompts. This suggests that the model has not undergone catastrophic forgetting, which would otherwise be a concern with fine-tuning. The improvement highlights the potential of fine-tuning LLMs.

What’s next up for serving LLMs in production?

In this post, we’ve shown you how to build your own fine-tuned LLM on a single GPU in under nine hours. In future blog posts, we’re going to expand on our work in a few different ways:

- Show you how to fine-tune larger models, with more benchmarks on cost and performance.

- Explain how to serve fine-tuned models efficiently inside your own infrastructure.

If you want to fine-tune LLMs at your company, get started with a free trial of Predibase. We provide a fully managed platform for fine-tuning and serving LLMs on state-of-the-art infrastructure, all within your own cloud environment.