Large Language Models (LLMs) have consistently shown promise on complex natural language tasks, even those that they have not been explicitly trained to handle. In this blog post, we will show you how to use Predibase to leverage the natural language abilities of LLMs on a task traditionally regarded as the domain of gradient-boosting models — predictions on tabular data.

Step 1: How to connect data to Predibase

For an in-depth overview of connections and datasets, check out our docs here: https://docs.predibase.com/user-guide/data-connectors/overview.

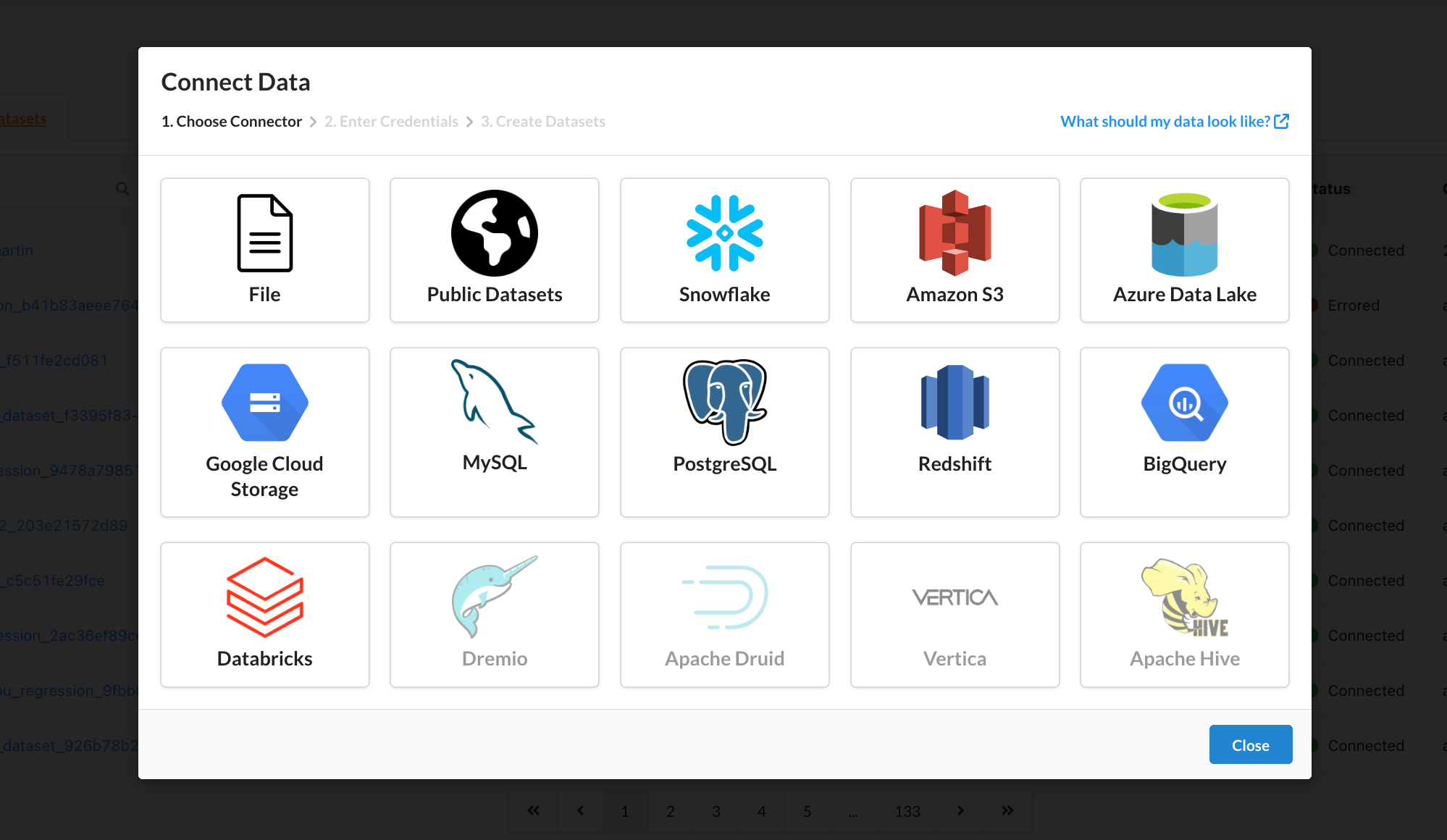

After navigating to the Data tab, you should see a blue button labeled “Connect Data”. Predibase supports a variety of data connectors including S3, Snowflake, etc. If you have your dataset available locally, you can also upload it directly using the File connector.

Choose your data source to connect data in Predibase.

After connecting your tabular data to Predibase, you can begin training your baselines and experimenting with serialization.

Step 2: Build the Classifier with a Neural Network

Now that we've connected our data, let's explore how to train a baseline model with Predibase.

Click on the “Models” tab and click “New Model Repository.” Give it a name, and click “Next: Train a model.”

Here, select “Build a custom model,” set the connection to “file: file_uploads” (or wherever you connected your data from), and set the dataset to the file you connected. Set the target(s) to the appropriate output feature(s), and then scroll down to select either Neural Network or Gradient-Boosted Tree, depending on which baseline you want to build. Then, continue to Feature Selection. Make sure all relevant features are activated, then hit “Next.”

Click on “Config”. Make sure that the configuration looks correct (our documentation on Ludwig config files is a useful reference), and then hit “Train”.

How To Build a Text Model on Serialized Tabular Data with Predibase

Hold on. We still have unserialized tabular data. Why are we building the classifier already?

Patience.

Click on the “Models” tab and click “New Model Repository.” Give it a name, and click “Next: Train a model.”

Here, select “Build a custom model,” set the connection to “file: file_uploads” (or wherever you connected your data from), and set the dataset to the file you connected. Set the target(s) to the appropriate output feature(s), and then scroll down. Make sure that the model type is set to Neural Network, and then continue to Feature Selection. Deactivate all but one of the non-target features, then hit “Next.”

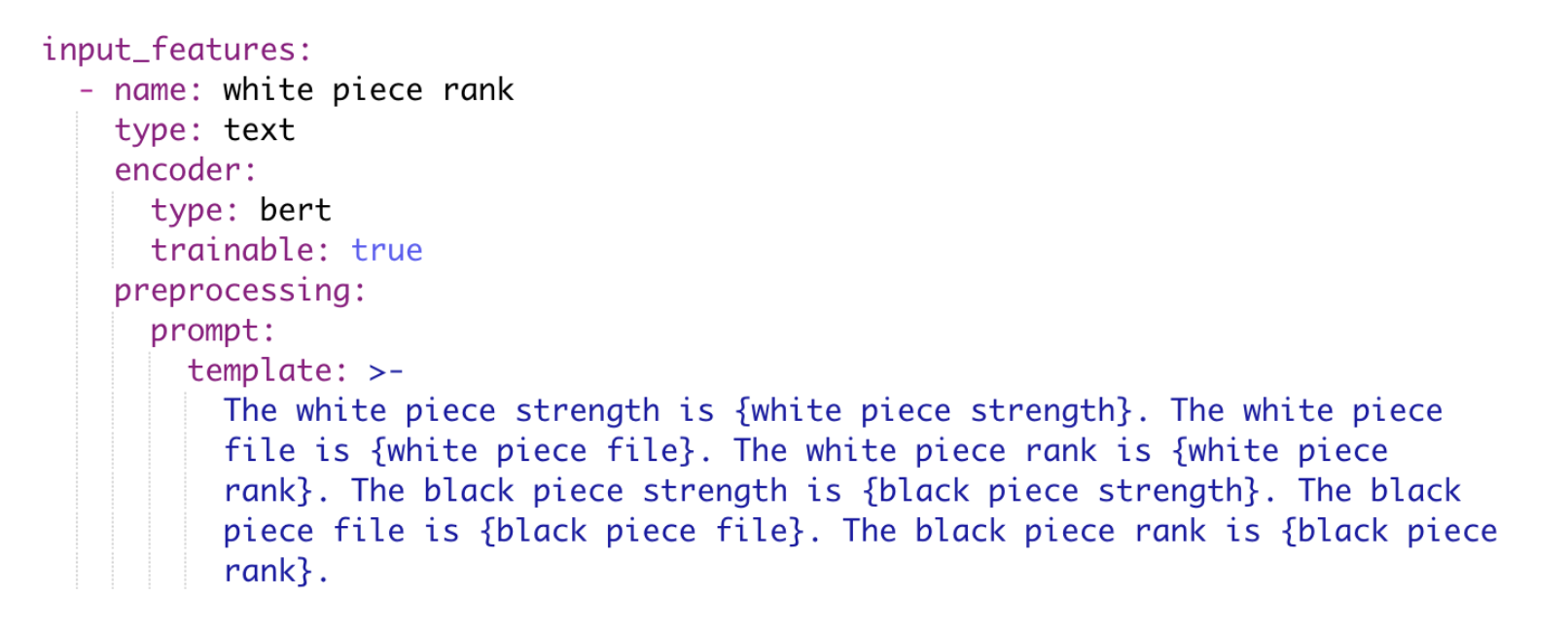

Click on “Config”. You should see under “input_features” the one input feature that you left active. This is where we will use Predibase’s “template” config feature:

Example Predibase model configuration.

Note that there is no “column” label here. Instead, we have added “prompt” and “template” under “preprocessing,” and changed the feature type of “white piece rank” to “text.” By doing so, we can replace the feature values of “white piece rank” with text that contains both the feature names and the feature values (the text in curly brackets will be replaced with the corresponding feature values). In this case, we have chosen to serialize the data into the Text Template format, but we can easily do the same with List Template.
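To make the placeholder substitution concrete, here is a minimal Python sketch of what a template does to one row. It is not Predibase's implementation. The helper names (`fill_template`, `text_template`, `list_template`), the second column “black piece rank”, the row values, and the exact wording of both formats are assumptions made for illustration.

```python
import re


def fill_template(template: str, row: dict) -> str:
    """Replace each {column name} placeholder with that column's value in `row`."""
    return re.sub(r"\{([^{}]+)\}", lambda m: str(row[m.group(1)]), template)


def text_template(columns: list) -> str:
    """Build a sentence-style template: 'The <name> is {<name>}.' per column."""
    return " ".join(f"The {c} is {{{c}}}." for c in columns)


def list_template(columns: list) -> str:
    """Build a list-style template: '- <name>: {<name>}' per column."""
    return "\n".join(f"- {c}: {{{c}}}" for c in columns)


# Hypothetical row; the column names and values are illustrative only.
row = {"white piece rank": 3, "black piece rank": 5}
columns = list(row)

text_example = fill_template(text_template(columns), row)
list_example = fill_template(list_template(columns), row)

print(text_example)
print(list_example)
```

Both formats carry the feature names alongside the values; they differ only in whether each pair reads as a sentence or as a list item.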

Once the template and the rest of the config look good, hit “Train”!

How to Perform LLM Serialization with Predibase

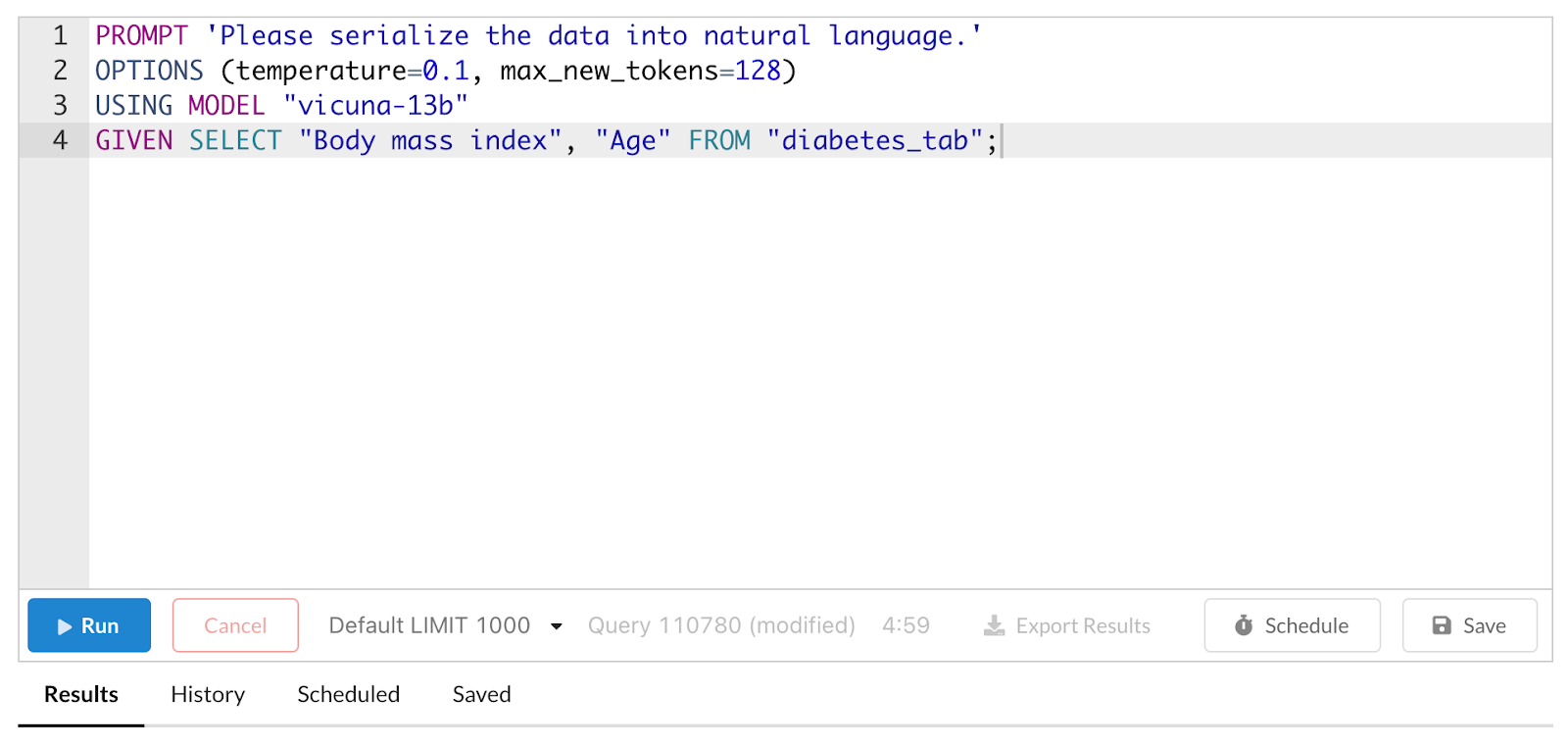

Go to the “Query” tab, and you should see a large box in which you can type a prompt. Make sure to switch from “LLM” to “PQL” on the top left of the screen. Then, you can enter a prompt like the one shown below.

Prompting an LLM in Predibase.

The first line is the actual prompt to the LLM. The second line configures the LLM to your liking. Temperature is the parameter in the LLM’s softmax function that controls how probability is distributed across outputs: a higher value gives more variety in the responses, which may or may not be desired. max_new_tokens limits the length of the response. The third line determines which of Predibase’s LLMs will be used, and the fourth line provides extra information to the model. In this case, we provide the model vicuna-13b with the two columns labeled “Body mass index” and “Age” from the dataset we connected called “diabetes_tab.” Thus, when the model receives the prompt “Please serialize the data into natural language,” it will try to serialize those two features and their names into a natural language representation.
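The effect of temperature can be seen in a small standalone Python sketch of a temperature-scaled softmax. This illustrates the general technique rather than how Predibase or vicuna-13b implement sampling. The function name and the example logits are assumptions.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Turn raw scores into probabilities, dividing by the temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate tokens.
logits = [2.0, 1.0, 0.0]

sharp = softmax_with_temperature(logits, temperature=0.5)
flat = softmax_with_temperature(logits, temperature=2.0)

print("temperature 0.5:", [round(p, 3) for p in sharp])
print("temperature 2.0:", [round(p, 3) for p in flat])
```

At the lower temperature most of the probability sits on the top-scoring token. At the higher temperature it is spread more evenly, which is why sampled responses vary more.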

You can then export your results when the query is finished running. This will come in the form of a CSV file. Make sure to add your target column back to the CSV. You can then connect your CSV to Predibase and train a Neural Network on the “response” column with the appropriate target.
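Adding the target column back can be done in a few lines of Python. The sketch below assumes that the exported CSV has a single “response” column and that its rows are in the same order as the original dataset. Verify both against your own export. The target name “Outcome” and the sample rows are hypothetical.

```python
import csv
import io


def add_target_column(exported_csv: str, original_csv: str, target: str) -> str:
    """Append `target` from the original data to the exported rows, matched by position."""
    exported_reader = csv.DictReader(io.StringIO(exported_csv))
    exported = list(exported_reader)
    original = list(csv.DictReader(io.StringIO(original_csv)))
    if len(exported) != len(original):
        raise ValueError("row counts differ; rows cannot be matched by position")

    out = io.StringIO()
    fieldnames = list(exported_reader.fieldnames or []) + [target]
    writer = csv.DictWriter(out, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    for exported_row, original_row in zip(exported, original):
        writer.writerow({**exported_row, target: original_row[target]})
    return out.getvalue()


# Hypothetical data: "Outcome" stands in for your real target column.
original_csv = "Body mass index,Age,Outcome\n33.6,50,1\n26.6,31,0\n"
exported_csv = (
    "response\n"
    "The body mass index is 33.6 and the age is 50.\n"
    "The body mass index is 26.6 and the age is 31.\n"
)

merged = add_target_column(exported_csv, original_csv, "Outcome")
print(merged)
```

If the export does not preserve row order, join on a row identifier instead of position.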

On the free trial, there is a limit to how many rows you can run, but if you would like to perform LLM serialization on your full dataset, please reach out to us to discuss upgrading!

Step 3: Check your Results

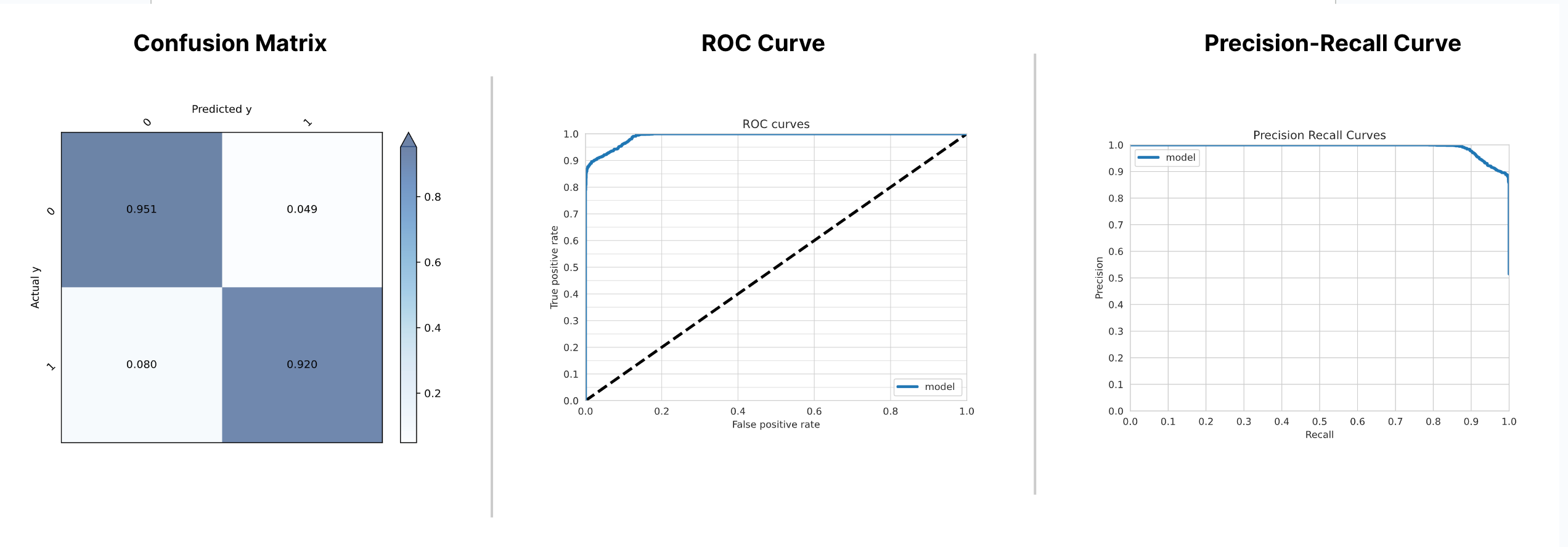

Predibase customizes the model training and the resources used based on the specifications set in your config and the characteristics of your dataset. When the model is finished training, Predibase shows you the hyperparameters used as well as the performance metrics of your new model through a series of visualizations for key metrics like confusion matrices, ROC curves and precision-recall curves:

We can use Predibase's model dashboards for our Jungle Text Dataset to evaluate model performance.
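For readers less familiar with these metrics, the following Python sketch shows how a binary confusion matrix, precision, and recall are computed from labels and predictions. It is independent of Predibase's dashboards. The function names and the sample labels are made up for illustration.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary task."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred == positive:
            fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn}


def precision_recall(counts):
    """Precision = tp / (tp + fp); recall = tp / (tp + fn)."""
    predicted_pos = counts["tp"] + counts["fp"]
    actual_pos = counts["tp"] + counts["fn"]
    precision = counts["tp"] / predicted_pos if predicted_pos else 0.0
    recall = counts["tp"] / actual_pos if actual_pos else 0.0
    return precision, recall


# Made-up labels and predictions for six rows.
y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]

counts = confusion_counts(y_true, y_pred)
precision, recall = precision_recall(counts)
print(counts, round(precision, 3), round(recall, 3))
```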

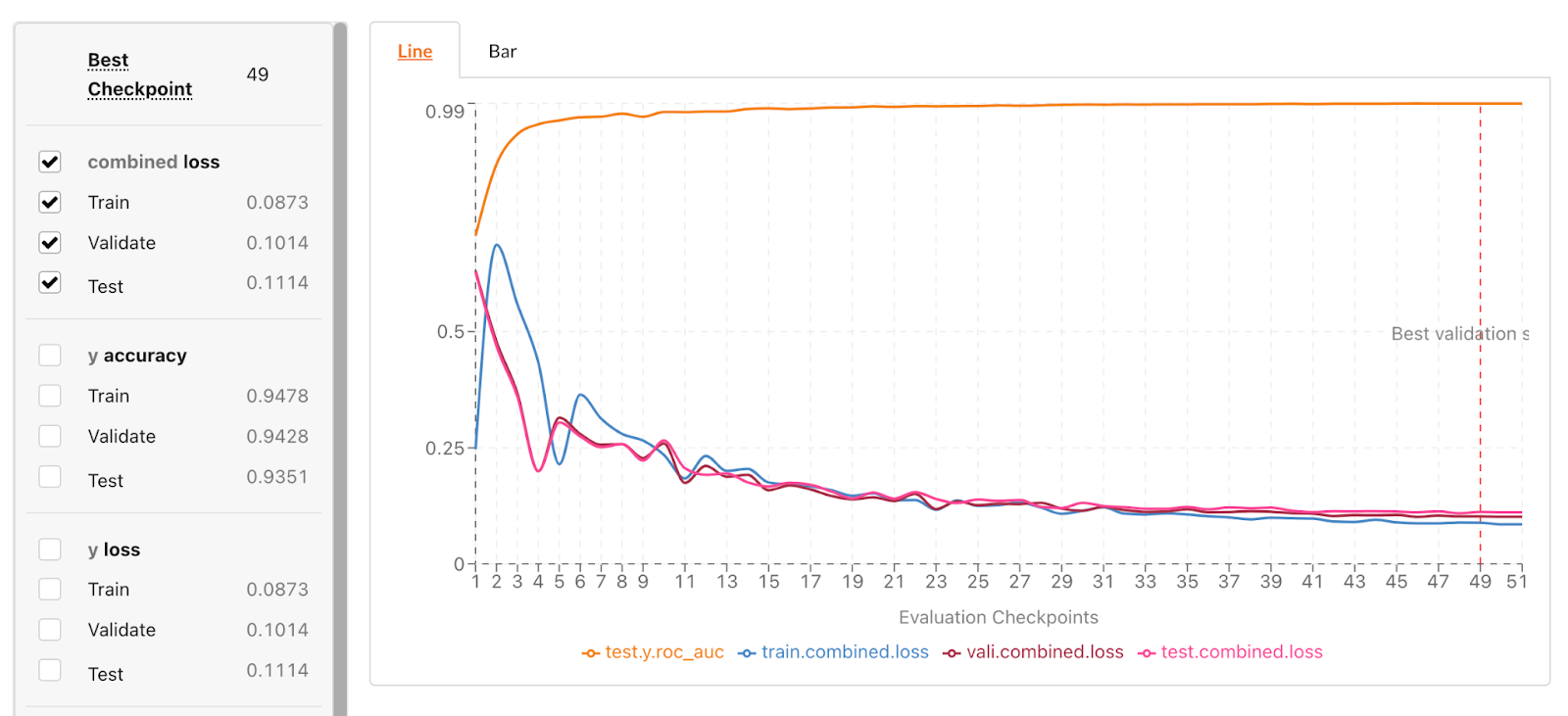

Predibase also provides a detailed learning curve graph for our model:

Learning Curve for Jungle Text Template Dataset.

Get started building models using text-serialized tabular data with declarative ML

Predibase can help you build a model on serialized tabular data in just a few minutes. With a few lines of configuration, you can convert a table into text, train a model on it, and receive clear metrics and visualizations.

As the first platform to spread a Declarative ML approach, Predibase lowers the barrier to entry for machine learning by eliminating the need for hundreds of lines of code for processing, training, and testing. What we have shown in this article is only one of Predibase’s potential applications.

If you’re interested in learning more about Predibase and Declarative ML and how they can help your company:

- Recreate these results in Predibase by signing up for a free trial.

- Check out some of our other blog posts.

- Take a look at our resources center for videos, webinars, and other materials.

If you would like to take a look at our results and analysis for our TabLLM experiments, check out our blog, Getting the Best Zero-Shot Performance on your Tabular Data with LLMs.