Engineering teams are increasingly planning to put multiple LLMs into production, with nearly 70% of surveyed ML practitioners having already deployed at least one to two LLMs in production or for experimentation. Fine-tuning LLMs for task-specific use cases is the most cost- and resource-efficient approach for deploying LLMs, and it’s essential that teams have access to best-in-class tools for managing every step of their production process. To that end, we’re excited to release a major update to the Predibase platform that enables teams to view and manage LLM deployments in the UI.

Predibase provides fully-managed infrastructure for fine-tuning and serving LLMs and offers dedicated deployments and shared serverless deployments (via a serverless fine-tuned endpoint). For experimentation or smaller workloads, serverless deployments are often the best place to start. You only pay per token, never have to worry about paying for an idle GPU, and don’t have to manage the deployment yourself at all. As teams start expecting significant traffic to their model, dedicated deployments start to become a more attractive option.
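To make the serverless-versus-dedicated trade-off concrete, here is a minimal back-of-the-envelope sketch. It assumes serverless usage is billed per 1,000 tokens and a dedicated deployment is billed per hour. The function names and the prices in the example are hypothetical placeholders, not Predibase pricing or part of any Predibase API.

```python
def breakeven_tokens_per_hour(dedicated_cost_per_hour: float,
                              serverless_price_per_1k_tokens: float) -> float:
    """Hourly token volume at which serverless spend equals the dedicated hourly cost."""
    if serverless_price_per_1k_tokens <= 0:
        raise ValueError("serverless price must be positive")
    return dedicated_cost_per_hour * 1000 / serverless_price_per_1k_tokens


def cheaper_option(tokens_per_hour: float,
                   dedicated_cost_per_hour: float,
                   serverless_price_per_1k_tokens: float) -> str:
    """Return which option costs less for a steady hourly token volume."""
    serverless_cost = tokens_per_hour / 1000 * serverless_price_per_1k_tokens
    # Ties go to serverless, since it carries no idle-GPU cost.
    return "serverless" if serverless_cost <= dedicated_cost_per_hour else "dedicated"


if __name__ == "__main__":
    # Hypothetical prices, chosen only to illustrate the arithmetic.
    hourly, per_1k = 2.0, 0.5
    print(breakeven_tokens_per_hour(hourly, per_1k))
    print(cheaper_option(1_000, hourly, per_1k))
    print(cheaper_option(10_000, hourly, per_1k))
```

Plugging in the real per-token and per-hour prices from your own account gives a rough traffic threshold above which a dedicated deployment may be worth considering. The sketch ignores latency, throughput limits, and auto-suspend savings.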

Predibase is designed to be the command center for your LLMs and, with our latest release, now provides the best experience for managing and monitoring dedicated and serverless deployments.

View and Manage Deployments in One Place

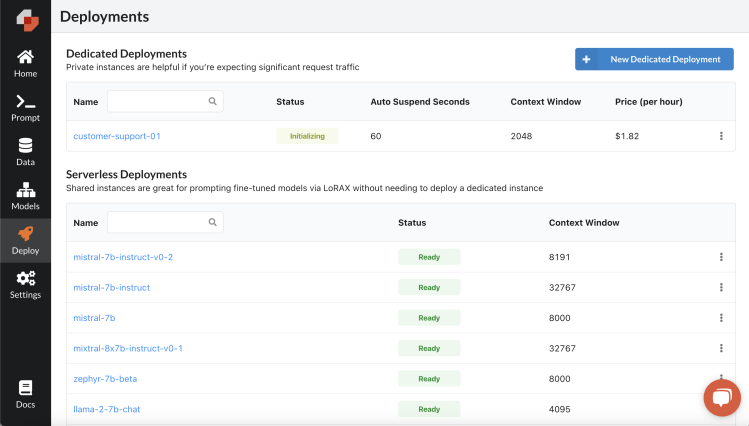

The new Deployments page gives you a bird’s-eye view of all available deployments, including your dedicated deployments and Predibase’s serverless deployments. From here, you can quickly see all the essential deployment details, including status, base model (for dedicated deployments), and context window.

The “status” is particularly useful for monitoring all deployments without needing to use the llm_deployment.get_status() command for each deployment. A full list of possible statuses is available in our documentation.
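For comparison, checking each deployment from code might look roughly like the sketch below. It assumes only that each deployment object exposes the get_status() method mentioned above and that it returns a string. The StubDeployment class, the deployment names, and the status values are hypothetical stand-ins so the example runs on its own; the real statuses are listed in the documentation.

```python
from collections import Counter
from typing import Dict, Mapping, Protocol


class HasStatus(Protocol):
    """Anything with a get_status() method (assumed here to return a string)."""
    def get_status(self) -> str: ...


class StubDeployment:
    """Hypothetical stand-in for a real deployment handle, used only for this demo."""
    def __init__(self, status: str) -> None:
        self._status = status

    def get_status(self) -> str:
        return self._status


def collect_statuses(deployments: Mapping[str, HasStatus]) -> Dict[str, str]:
    """Call get_status() once per deployment and return name -> status."""
    return {name: deployment.get_status() for name, deployment in deployments.items()}


def count_by_status(statuses: Mapping[str, str]) -> Counter:
    """Tally how many deployments are in each status."""
    return Counter(statuses.values())


if __name__ == "__main__":
    # Placeholder names and statuses; not real Predibase values.
    fleet = {
        "support-bot": StubDeployment("ready"),
        "summarizer": StubDeployment("stopped"),
        "classifier": StubDeployment("ready"),
    }
    statuses = collect_statuses(fleet)
    print(statuses)
    print(count_by_status(statuses))
```

The Deployments page gives you the same overview without writing or running a loop like this.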

Create New Deployments in the UI

With the new Deployments page, we’ve introduced a simple process for creating a new dedicated deployment.

To create a new dedicated deployment:

- Click “New Dedicated Deployment”

- Enter a name for the deployment

- Select your base model from the list of models supported in Predibase. As a reminder, models larger than 7B parameters are only available for Enterprise customers. See the full list of supported base models in our documentation.

From here, you can deploy the model as-is, or continue on to configure advanced options:

- Enterprise customers may choose the accelerator (GPU) that best fits the needs of their deployment. See our documentation for a full list of available GPUs.

- Customize the auto-suspend settings by specifying how long a deployment should remain active without receiving any requests before scaling down. The default setting is 0, which means the deployment will always be on and won’t scale down.

Before clicking “Deploy”, you will see a summary of the auto-suspend settings and the cost per hour of running the deployment.
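The auto-suspend rule described above can be sketched as a small decision function. This is an illustration of the stated behavior only, not Predibase’s implementation: the function name is hypothetical, and the unit (seconds) is an assumption, since the setting’s unit is not specified here.

```python
def should_scale_down(idle_seconds: float, auto_suspend_seconds: int) -> bool:
    """Decide whether an idle deployment should scale down.

    auto_suspend_seconds == 0 means "always on": never scale down.
    Otherwise, scale down once the deployment has been idle for at least
    that long. Seconds are an assumed unit for this sketch.
    """
    if auto_suspend_seconds < 0 or idle_seconds < 0:
        raise ValueError("durations must be non-negative")
    if auto_suspend_seconds == 0:
        return False  # default setting: the deployment stays on
    return idle_seconds >= auto_suspend_seconds


if __name__ == "__main__":
    print(should_scale_down(idle_seconds=86_400, auto_suspend_seconds=0))
    print(should_scale_down(idle_seconds=900, auto_suspend_seconds=600))
    print(should_scale_down(idle_seconds=120, auto_suspend_seconds=600))
```

With the default of 0, the deployment keeps running however long it sits idle, so the hourly cost shown in the summary applies continuously until you change the setting or stop the deployment.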

Quickly Prompt Fine-Tuned Adapters and Base Models

Whether you’re prompting a fine-tuned adapter or a base model, through the UI or programmatically, the new Deployments page simplifies your workflow. For prompting via the API, SDK, or CLI, you’ll even be presented with code snippets to help you get started.

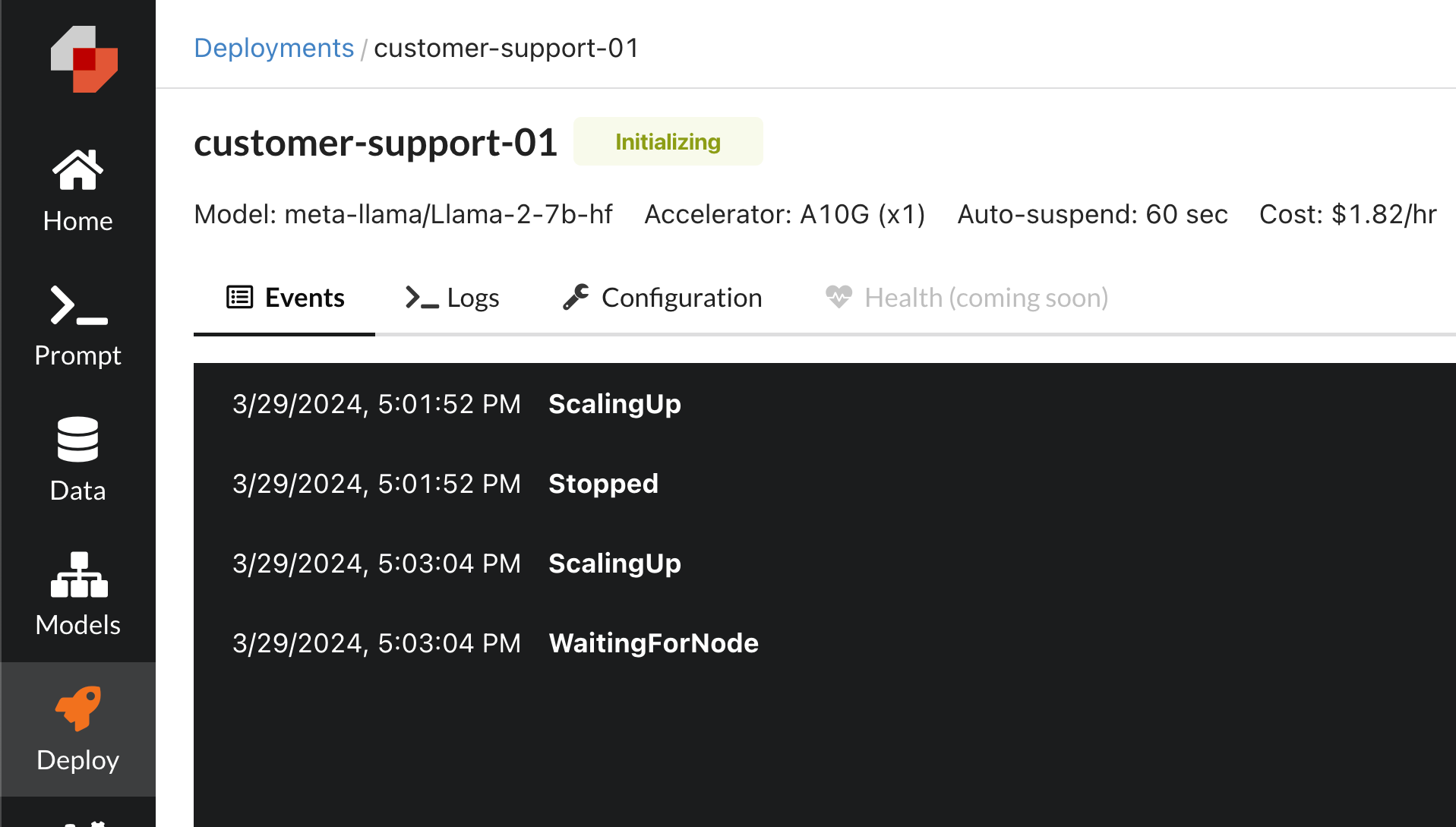

See the Full Picture with Detailed Event History and Logs

In the detail view of a deployment, the Events tab displays the historical status of your deployment so you can track your deployment’s lifecycle. The Logs tab shows more information about the requests being made to the model and gives a look at what’s going on behind the scenes with LoRAX models on Predibase.

Stop Worrying About Managing Infra and Try Predibase For Free Today

The new Deployments page is another step toward simplifying every aspect of managing infra for fine-tuning and serving LLMs. It helps your organization spend less time configuring GPUs and base models, so you can spend more time building your next AI breakthrough and getting it into production. Predibase provides better visibility and control of essential infra so you can move faster and focus on high-impact work. Get started fine-tuning and serving for free today with $25 of credit.